The GGUF Revolution: How One Format Changed Local AI Forever

Discover how GGUF transformed local AI deployment, making powerful language models accessible on consumer hardware through revolutionary quantization...

What is GGUF and Why Does It Matter for Local AI?

GGUF is a single-file model format that lets large language models run on consumer hardware through quantization. At 4-bit precision, a 7B parameter model shrinks from about 14GB (FP16) to roughly 4GB, and a 70B model from about 140GB to roughly 40GB. That puts 7B and 13B models within reach of laptops with 8-16GB of RAM using tools like Ollama and llama.cpp.

- What It Is: GPT-Generated Unified Format for efficient AI model storage and execution with quantization support

- Key Benefit: Reduces model sizes by roughly 70-75% relative to FP16 through 4-bit quantization while maintaining 95%+ quality

- Hardware Needs: Run 7B models on 8GB RAM, 13B on 16GB, and 70B on 48GB or more at 4-bit quantization - no GPU required

- Main Tools: Ollama for easy deployment, llama.cpp for customization, LM Studio for a graphical interface

- Impact: Democratized local AI, enabling millions to run models previously requiring $1000+/month cloud costs

In August 2023, a single file format announcement changed the trajectory of local AI forever. GGUF (GPT-Generated Unified Format) didn't just replace its predecessor GGML. It completely democratized access to powerful AI models, making it possible for anyone with a consumer laptop to run sophisticated language models that previously required expensive cloud infrastructure.

This is the story of how one format sparked a revolution that put AI power directly into the hands of millions of users worldwide.

The Problem: AI Models Were Too Big for Normal People

Before GGUF, running advanced AI models locally was a nightmare. Large language models like LLaMA or GPT-style architectures required hundreds of gigabytes of memory, expensive GPUs, and technical expertise that put them out of reach for most developers and enthusiasts.

The Barriers Were Real:

- A 70B parameter model required ~140GB of VRAM at 16-bit precision

- Consumer GPUs topped out at 24GB

- Cloud inference cost hundreds of dollars monthly

- Privacy-conscious users had no local options

- Developing countries couldn't access expensive cloud services

The GGML Foundation: The journey began with GGML (originally developed by Georgi Gerganov), which introduced quantization techniques that could reduce model sizes significantly. However, GGML had limitations:

- Slow loading times and inference performance

- Limited extensibility and flexibility

- Compatibility issues when adding new features

- Designed primarily for LLaMA architecture

- No support for special tokens

Enter GGUF: The Game Changer

On August 21st, 2023, Georgi Gerganov introduced GGUF as the successor to GGML, and everything changed. GGUF wasn't just an incremental improvement. It was a complete reimagining of how AI models could be stored, loaded, and executed.

What Makes GGUF Innovative

1. Dramatic Size Reduction With Minimal Quality Loss

GGUF's quantization techniques can reduce model size by 50-75% while maintaining 95%+ performance accuracy. The Q4_K_M variant delivers about 96% of original performance at roughly 30% of the original FP16 size.

2. Universal Hardware Compatibility

Unlike previous formats, GGUF runs efficiently on:

- Standard CPUs (Intel, AMD, Apple Silicon)

- Consumer GPUs (NVIDIA, AMD)

- Edge devices and mobile hardware

- Mixed CPU/GPU configurations

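3. Lightning-Fast Loading

Models that previously took minutes to load now start in seconds. The file layout is designed for memory mapping (mmap), so the operating system pages weights in on demand instead of copying the whole file into memory first. Loading is reported to be up to 3x faster than with legacy formats.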

4. Self-Contained Intelligence

A GGUF file includes everything needed to run the model:

- Model weights and architecture

- Complete metadata and configuration

- Tokenizer information

- Quantization parameters

- Special token definitions
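Because everything lives in one file, a few bytes at the start are enough to identify it. The following is a minimal sketch, assuming the header layout used by GGUF version 2 and later: a 4-byte `GGUF` magic, a little-endian 32-bit version, then 64-bit tensor and metadata key/value counts. The function name is illustrative, and the sketch reads only the fixed header, not the metadata itself.

```python
import struct

GGUF_MAGIC = b"GGUF"

def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file."""
    with open(path, "rb") as f:
        raw = f.read(24)  # 4-byte magic + uint32 version + two uint64 counts
    if len(raw) < 24 or raw[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file")
    # "<" means little-endian with no padding: I = uint32, Q = uint64.
    version, tensor_count, kv_count = struct.unpack("<IQQ", raw[4:])
    return version, tensor_count, kv_count
```

Calling `read_gguf_header` on a model file is a quick sanity check before handing it to an inference engine.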

How Does GGUF Actually Work?

Advanced Quantization Hierarchy

GGUF supports sophisticated quantization levels from Q2 to Q8, each optimized for different use cases:

Ultra-Compressed (Q2_K):

- Smallest file size (75%+ reduction)

- Runs on 8GB RAM systems

- Ideal for mobile deployment

- Noticeable quality trade-off

For more strategies on working with limited hardware resources, check out our comprehensive guide on running ComfyUI on budget hardware with low VRAM.

Balanced Performance (Q4_K_M):

- Recommended starting point

- About 70% size reduction versus FP16

- Excellent quality retention

- Perfect for most applications

High Quality (Q6_K, Q8_0):

- Minimal quality loss

- Roughly 45-60% size reduction versus FP16

- Ideal for professional applications

- Requires 16GB+ RAM
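To make the idea behind these levels concrete, here is a simplified sketch of block quantization in the spirit of Q8_0: each block of weights is stored as small integers plus one shared scale. This is an illustration only, not llama.cpp's actual kernels. The 8-element block is an assumption for brevity (Q8_0 uses blocks of 32 weights with a 16-bit scale, which works out to 8.5 bits per weight).

```python
def quantize_block(weights):
    """Quantize one block of floats to int8-range values plus a single scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quants = [round(w / scale) for w in weights]
    return scale, quants

def dequantize_block(scale, quants):
    """Recover approximate floats from the stored integers and scale."""
    return [q * scale for q in quants]

block = [0.12, -0.5, 0.33, 0.0, 0.49, -0.07, 0.25, -0.31]
scale, quants = quantize_block(block)
restored = dequantize_block(scale, quants)
max_error = max(abs(a - b) for a, b in zip(block, restored))
print(f"scale={scale:.6f} max round-trip error={max_error:.6f}")
```

Lower-bit levels follow the same pattern with fewer integer steps per weight, which is why the round-trip error, and with it the quality loss, grows as the bit width shrinks.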

Memory Optimization Magic

GGUF's binary format design transforms memory usage:

- 50-70% reduction in RAM requirements

- Efficient weight storage and loading

- Advanced compression algorithms

- Optimized memory mapping
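Memory mapping is the piece of this list that is easiest to demonstrate. The sketch below uses Python's standard `mmap` module to read a small slice of a large file without loading the rest. The helper name `read_slice` is hypothetical, and real inference engines map the file in their own native code rather than through Python.

```python
import mmap

def read_slice(path, offset, length):
    """Read `length` bytes at `offset` through a read-only memory map."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file; pages are only read when touched.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            return bytes(mapped[offset:offset + length])
```

Because only the touched pages are read from disk, opening a multi-gigabyte file this way is nearly instant, and the operating system can share the mapped pages between processes.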

Cross-Platform Performance

Apple Silicon Optimization:

- Native ARM NEON support

- Metal framework integration

- M1/M2/M3 chip optimization

- Unified memory architecture benefits

NVIDIA GPU Acceleration:

- CUDA kernel optimization

- RTX 4090 achieving ~150 tokens/second on small quantized models (throughput falls as model size grows)

- Efficient VRAM use

- Mixed precision support

To learn more about GPU acceleration and CUDA optimization, read our detailed PyTorch CUDA GPU acceleration guide.

CPU-Only Excellence:

- AVX/AVX2/AVX512 support

- Multi-threading optimization

- Cache-friendly operations

- No external dependencies

The Ecosystem That GGUF Built

llama.cpp: The Reference Implementation

llama.cpp became the gold standard for GGUF model execution:

Performance Achievements:

- Pioneered consumer hardware optimization

- Advanced quantization with minimal quality loss

- Cross-platform compatibility

- Memory bandwidth optimization focus

Technical Innovation:

- Custom CUDA kernels for NVIDIA GPUs

- Apple Silicon optimization

- CPU-only inference capabilities

- Minimal external dependencies

Ollama: Making GGUF Accessible

Ollama transformed GGUF from a technical tool into a consumer-friendly platform:

User Experience Revolution:

- One-click model installation

- Automatic GGUF conversion

- Model version management

- Simple CLI interface

- No Python knowledge required

Installation Simplicity:

- Less than 5 minutes setup

- Works on Windows, Mac, Linux

- Automatic dependency management

- Integrated model library

Hugging Face Integration

The Hugging Face Hub embraced GGUF, creating a massive ecosystem:

Model Availability:

- Thousands of GGUF models

- Pre-quantized versions available

- Community-driven conversions

- Professional model releases

Quality Control:

- Standardized naming conventions

- Performance benchmarks

- Community verification

- Regular updates

Real-World Impact: The Numbers Don't Lie

Hardware Requirements Revolution

| Specification | Before GGUF | After GGUF |
|---|---|---|
| 70B Model Requirements | 140GB VRAM | 40-50GB RAM (Q4_K_M) |
| Minimum Hardware Cost | $10,000+ GPU setup | $1,500 consumer laptop |
| Monthly Cloud Costs | $200-500 | $0 (runs locally) |
| Technical Expertise | High | Minimal |

Performance Benchmarks

Quantization Efficiency:

| Quantization Level | Size Reduction (vs FP16) | Quality Retention (approx.) |
|---|---|---|
| Q2_K | ~80% | 90% |
| Q4_K_M | ~70% | 96% |
| Q6_K | ~60% | 98% |
| Q8_0 | ~47% | 99.5% |
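Size reduction follows directly from how many bits each weight occupies. The sketch below estimates file sizes for a 70B model. The bits-per-weight figures are approximate assumptions for illustration (real files vary by architecture and llama.cpp version), and metadata overhead is ignored.

```python
# Approximate bits per weight; assumed values for illustration only.
BITS_PER_WEIGHT = {"F16": 16.0, "Q8_0": 8.5, "Q6_K": 6.6, "Q4_K_M": 4.8, "Q2_K": 3.4}

def estimate_size_gb(params_billions, bits_per_weight):
    """Estimate weight storage in GB (10^9 bytes), ignoring metadata."""
    return params_billions * bits_per_weight / 8

for name, bpw in BITS_PER_WEIGHT.items():
    size = estimate_size_gb(70, bpw)
    saving = 1 - bpw / 16
    print(f"{name:7s} {size:6.1f} GB  ({saving:.0%} smaller than F16)")
```

The same function works for any parameter count, so it doubles as a quick check of whether a given download will fit on disk and in memory.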

Loading Speed Improvements:

- 3x faster model loading

- Instant model switching

- Memory-mapped file access

- Reduced initialization overhead

Global Adoption Statistics

Developer Adoption:

- 500,000+ downloads of llama.cpp monthly

- 1 million+ GGUF model downloads on Hugging Face

- 200+ supported model architectures

- 50+ programming language bindings

Hardware Reach:

- Runs on devices with as little as 4GB RAM

- Compatible with 10-year-old hardware

- Mobile device deployment possible

- Edge computing applications

The Democratization Effect

Breaking Down Barriers

Geographic Access: GGUF eliminated the need for expensive cloud services, making AI accessible in developing countries and regions with limited internet infrastructure.

Educational Impact: Universities and schools can now run AI models locally, enabling hands-on learning without cloud costs or privacy concerns. If you're new to AI, start with our complete guide to getting started with AI image generation.

Small Business Empowerment: Local deployment means businesses can use AI without sharing sensitive data with cloud providers or paying subscription fees.

Privacy and Security Revolution

Complete Data Privacy:

- Models run entirely offline

- No data leaves your device

- Perfect for sensitive applications

- GDPR and compliance friendly

Air-Gapped Deployment:

- Works without internet connection

- Ideal for secure environments

- Government and military applications

- Industrial and healthcare use cases

How Does GGUF Compare to Other Formats?

GGUF vs GPTQ vs AWQ

| Format | Pros | Cons | Best Use Case |
|---|---|---|---|
| GPTQ | Excellent GPU performance, high compression | GPU-only, complex setup, limited hardware support | High-end GPU systems |
| AWQ | Good quality retention, GPU optimized | Limited hardware support, newer format | Professional GPU deployments |
| GGUF | Universal hardware, easy setup, excellent ecosystem | Slightly lower GPU-only performance than GPTQ | Everything else (95% of use cases) |

The Clear Winner for Local AI

GGUF dominates local AI deployment because:

- Flexibility: Runs on any hardware configuration

- Ecosystem: Massive tool and model support

- Simplicity: No technical expertise required

- Performance: Optimized for real-world hardware

- Future-proof: Extensible design for new features

Advanced GGUF Techniques and Optimization

Quantization Strategy Selection

For Content Creation (Q5_K_M - Q6_K):

- High-quality text generation

- Creative writing applications

- Professional documentation

- Code generation tasks

For Chat Applications (Q4_K_M):

- Balanced performance and quality

- Real-time conversation

- General Q&A systems

- Educational applications

For Resource-Constrained Environments (Q3_K_M - Q2_K):

- Mobile deployment

- Edge computing

- IoT applications

- Batch processing tasks

Performance Tuning

Memory Optimization:

- Use appropriate quantization for available RAM

- Enable memory mapping for faster access

- Configure context length based on use case

- Implement model caching strategies
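Context length matters for memory because the key/value cache grows linearly with it. Here is a rough estimate, assuming a Llama-2-7B-like shape (32 layers, 32 KV heads, head dimension 128) and a 16-bit cache. The function name is illustrative, and models with grouped-query attention have fewer KV heads and therefore a smaller cache.

```python
def kv_cache_bytes(n_layers, n_ctx, n_kv_heads, head_dim, bytes_per_value=2):
    """Size of the KV cache: keys and values for every layer and position."""
    return 2 * n_layers * n_ctx * n_kv_heads * head_dim * bytes_per_value

# Llama-2-7B-like shape (assumed): 32 layers, 32 KV heads, head dimension 128.
for n_ctx in (2048, 4096, 8192):
    gib = kv_cache_bytes(32, n_ctx, 32, 128) / 1024**3
    print(f"context {n_ctx:5d}: {gib:.1f} GiB of KV cache at 16-bit precision")
```

This memory comes on top of the model weights, so halving the context length is often the cheapest way to fit a model into tight RAM.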

CPU Optimization:

- Thread count matching CPU cores

- NUMA awareness for multi-socket systems

- Cache optimization techniques

- Memory bandwidth maximization

GPU Acceleration:

- Mixed CPU/GPU inference

- VRAM usage optimization

- Batch size tuning

- Pipeline parallelization
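Most of these knobs map onto command-line flags. The sketch below builds, but does not run, an argument list for llama.cpp's `llama-cli` using its `-m` (model), `-p` (prompt), `-c` (context size), `-t` (threads), and `-ngl` (GPU layers) options. The helper name and default values are assumptions, and the right settings depend on your hardware.

```python
import os

def build_llama_cli_args(model_path, prompt, n_ctx=4096, gpu_layers=0, threads=None):
    """Build an argument list for llama.cpp's `llama-cli` (not executed here)."""
    if threads is None:
        # Logical core count; the number of physical cores is often a better choice.
        threads = os.cpu_count() or 1
    return [
        "llama-cli",
        "-m", model_path,
        "-p", prompt,
        "-c", str(n_ctx),
        "-t", str(threads),
        "-ngl", str(gpu_layers),
    ]
```

Passing the resulting list to `subprocess.run` would start inference. Setting `gpu_layers` above zero offloads that many layers to the GPU, which is how mixed CPU/GPU inference is configured.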

The Business Impact of GGUF

Cost Reduction Analysis

| Cost Factor | Traditional Cloud AI | GGUF Local Deployment |
|---|---|---|
| API/Usage Cost | $0.03-0.06 per 1k tokens | $0 (after hardware) |
| Monthly Operating Cost | $500-2000 | Electricity only (~$5-20) |
| Initial Investment | $0 | $1500-3000 (one-time) |
| Data Privacy | Shared with provider | Complete control |
| Vendor Lock-in | Significant | Total independence |

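ROI Calculation: For organizations spending $500-2,000 a month on cloud inference (on the order of 10 million or more tokens at the rates above), a $1,500-3,000 GGUF deployment pays for itself in roughly 1-6 months while providing superior privacy and control. At 1 million tokens a month the cloud bill is only $30-60, so the payback period stretches to years and the case for local deployment rests on privacy and control rather than cost.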
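The payback period depends heavily on token volume, so it is worth checking against your own numbers. The following is a minimal sketch using the price ranges from the table above. The function names are illustrative, and the calculation ignores staff time, maintenance, and hardware depreciation.

```python
def monthly_cloud_cost(tokens_per_month, price_per_1k_tokens):
    """Cloud bill for a month at a flat per-1k-token price."""
    return tokens_per_month / 1000 * price_per_1k_tokens

def payback_months(hardware_cost, monthly_cloud, monthly_electricity):
    """Months until saved cloud spend covers the one-time hardware cost."""
    saving = monthly_cloud - monthly_electricity
    if saving <= 0:
        return float("inf")  # local deployment never pays back on cost alone
    return hardware_cost / saving

cloud = monthly_cloud_cost(20_000_000, 0.06)  # 20M tokens at $0.06 per 1k
months = payback_months(3000, cloud, 20)
print(f"cloud bill ${cloud:.0f}/month, payback in {months:.1f} months")
```

Plugging in a lower volume shows how quickly the payback period grows when the monthly cloud bill is small.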

New Business Models Enabled

Local AI Services:

- On-premise AI consultation

- Custom model deployment

- Privacy-focused AI solutions

- Offline AI applications

Educational Opportunities:

- AI training workshops

- Local model fine-tuning services

- Custom GGUF conversion services

- AI integration consulting

Industry Applications and Case Studies

Healthcare: Privacy-First AI

Use Cases:

- Medical record analysis

- Diagnostic assistance

- Patient communication

- Research data processing

GGUF Advantages:

- Easier HIPAA compliance, since patient data stays on-premises

- No data leaves the facility

- Reduced liability concerns

- Lower operational costs

Financial Services: Secure AI Processing

Applications:

- Document analysis

- Risk assessment

- Customer service automation

- Regulatory compliance

Benefits:

- No data exposure to third-party AI providers

- Regulatory compliance

- Real-time processing

- Cost-effective scaling

Government: Sovereign AI

Deployment Scenarios:

- Classified document processing

- Citizen service automation

- Inter-agency communication

- Policy analysis

Strategic Advantages:

- National security compliance

- Data sovereignty

- Reduced foreign dependency

- Budget optimization

The Future of GGUF and Local AI

Emerging Developments

Model Architecture Support:

- Vision-language models (LLaVA)

- Code-specific models (CodeLlama)

- Multimodal capabilities

- Specialized domain models

Hardware Integration:

- NPU (Neural Processing Unit) support

- Mobile chip optimization

- IoT device deployment

- Embedded system integration

Performance Improvements:

- Advanced quantization techniques

- Better compression algorithms

- Faster loading mechanisms

- Enhanced memory efficiency

Market Predictions

Growth Projections:

- Local AI market: $15 billion by 2027

- GGUF adoption: 80% of local deployments

- Consumer hardware penetration: 200 million devices

- Enterprise adoption: 70% of AI deployments

Technology Evolution:

- Real-time model streaming

- Dynamic quantization

- Federated learning integration

- Edge AI orchestration

How Do You Get Started with GGUF Models?

Beginner's Setup Guide

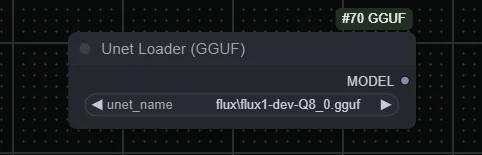

Step 1: Choose Your Platform

- Ollama: Simplest option for beginners

- llama.cpp: Maximum control and customization

- GGUF Loader: Visual interface options

- Language-specific bindings: Python, JavaScript, etc.

Step 2: Hardware Assessment

| RAM Capacity | Supported Model Size | Parameter Count |
|---|---|---|
| 8GB | Small models | 7B parameters |
| 16GB | Medium models | 13B parameters |
| 32GB+ | Large models | 33B+ parameters |

Step 3: Model Selection

Start with proven models:

- Llama 2/3: General purpose, well-documented

- Mistral: Fast inference, good quality

- Code Llama: Programming assistance

- Vicuna: Chat-optimized performance

Advanced Configuration

Performance Optimization:

- Context length tuning

- Thread count optimization

- Memory mapping configuration

- Quantization selection

Integration Strategies:

- API wrapper development

- Application integration

- Custom inference pipelines

- Monitoring and logging

Frequently Asked Questions

What does GGUF stand for?

GGUF stands for GPT-Generated Unified Format, created by Georgi Gerganov in August 2023.

It replaced the older GGML format with improved extensibility, faster loading, better metadata handling, and support for more model architectures. It is designed specifically for efficient quantized model storage and execution on consumer hardware.

Can you run GGUF models without a GPU?

Yes, GGUF models run efficiently on CPU-only systems using llama.cpp or Ollama.

Performance depends on RAM speed and CPU cores. 7B models run acceptably on modern laptops. GPU acceleration improves speed 3-5x but isn't required. This makes local AI accessible to users without expensive hardware.

What quantization level should you use?

Q4_K_M for best balance of quality and size, Q5_K_M for higher quality, Q8_0 for maximum quality.

Q4_K_M maintains 95%+ quality at roughly 70% size reduction relative to FP16. Q5_K_M offers 97%+ quality, and Q8_0 provides 99%+ quality for professional use. Reserve lower levels such as Q2_K for testing or extremely limited hardware.

How much RAM do you need for GGUF models?

8GB RAM for 7B models, 16GB for 13B models, and roughly 48GB or more for 70B models with Q4 quantization.

RAM requirements scale with model size, quantization level, and context length. Q4 quantization reduces requirements by roughly 70% relative to FP16, and Q5 requires 15-20% more RAM than Q4. Offloading layers to GPU VRAM reduces the amount of system RAM needed.

Are GGUF models slower than full precision models?

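Not necessarily. On the same hardware, quantized models often generate tokens as fast as or faster than FP16, because token generation is usually limited by memory bandwidth and quantized weights mean less data to read.

Dequantization adds some compute overhead, so the result depends on the hardware and the quantization level. The more practical comparison is that an FP16 model often does not fit on consumer hardware at all, while a Q4 model does. Ongoing optimization continues to improve GGUF performance.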

Can you use GGUF models for commercial applications?

Yes, if the base model license allows commercial use - check each model's license terms.

LLaMA 2, Mistral, and many models permit commercial use. Always verify license before deployment. GGUF format itself has no commercial restrictions. Model creators control usage permissions.

How do you convert models to GGUF format?

Use llama.cpp's convert script or community tools to quantize from original model formats.

The process takes 30 minutes to 2 hours depending on model size, and it requires the original model weights and sufficient disk space. Several quantization levels can be produced from the same converted file. Many pre-quantized GGUF models are also available on Hugging Face.
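The two-step flow can be sketched as a pair of commands: convert the original weights to a 16-bit GGUF file, then quantize that file. The sketch below only builds the argument lists, assuming llama.cpp's `convert_hf_to_gguf.py` script and `llama-quantize` tool are available. Script names have changed between llama.cpp releases, and the paths here are hypothetical.

```python
def build_conversion_commands(model_dir, out_prefix, quant_type="Q4_K_M"):
    """Return (convert, quantize) argument lists; nothing is executed here."""
    f16_path = f"{out_prefix}-f16.gguf"
    quant_path = f"{out_prefix}-{quant_type}.gguf"
    # Step 1: convert the Hugging Face checkpoint to a 16-bit GGUF file.
    convert = ["python", "convert_hf_to_gguf.py", model_dir,
               "--outfile", f16_path, "--outtype", "f16"]
    # Step 2: quantize the 16-bit file to the requested level.
    quantize = ["llama-quantize", f16_path, quant_path, quant_type]
    return convert, quantize

convert_cmd, quantize_cmd = build_conversion_commands("./my-model", "my-model")
print(" ".join(convert_cmd))
print(" ".join(quantize_cmd))
```

Running the quantize step again with a different `quant_type` reuses the same 16-bit file, so additional levels cost only the quantization time.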

What's the difference between K-quant and regular quantization?

K-quants are llama.cpp's newer block-wise quantization schemes, and they provide better quality than the older methods at the same size.

They group weights into super-blocks with their own quantized scales and, in the mixed variants, keep the most sensitive tensors at higher precision. K_S favors smaller size, K_M balances size and quality, and K_L favors quality. They are recommended for most use cases over the older Q4_0/Q5_0 methods.

Can GGUF models run on Apple Silicon Macs?

Yes, llama.cpp and Ollama support Apple Silicon with Metal acceleration for excellent performance.

M1/M2/M3 chips use unified memory efficiently. Performance comparable to entry-level NVIDIA GPUs. Metal acceleration provides 3-4x speedup over CPU-only. 16GB+ RAM recommended for comfortable use.

How do you integrate GGUF models into applications?

Use llama.cpp's library, Ollama's API, or LangChain integration for application development.

Ollama provides a REST API, including endpoints compatible with the OpenAI format. llama.cpp offers C/C++ library integration, and Python bindings are available for both tools. These integrations support streaming responses and conversation history management.
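As a concrete starting point, here is a minimal sketch of calling Ollama's native `/api/generate` endpoint with only the standard library. It assumes Ollama is running on its default local port 11434 and that the named model has already been pulled. The helper names are illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address

def build_generate_request(model, prompt):
    """Build a non-streaming request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(model, prompt):
    """Send the request; requires a running Ollama server with the model pulled."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

Splitting request construction from sending keeps the network call in one place, which makes the integration easier to test and to swap for a client library later.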

Troubleshooting Common Issues

Memory and Performance Problems

Insufficient RAM:

- Use lower quantization (Q3_K_M or Q2_K)

- Reduce context length

- Enable memory mapping

- Close unnecessary applications

Slow Performance:

- Check thread count settings

- Verify hardware acceleration

- Update to the latest llama.cpp or Ollama release

- Consider hybrid CPU/GPU inference

Model Loading Errors:

- Verify GGUF file integrity

- Check model compatibility

- Update inference engine

- Review error logs
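File integrity is the quickest of these to rule out. The sketch below computes a SHA-256 checksum in chunks, so large model files do not need to fit in memory, and checks the 4-byte `GGUF` magic at the start of the file. The function names are illustrative. Compare the checksum against the value published alongside the download, if one is provided.

```python
import hashlib

def sha256_of_file(path, chunk_size=1024 * 1024):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def looks_like_gguf(path):
    """Check that the file starts with the GGUF magic bytes."""
    with open(path, "rb") as f:
        return f.read(4) == b"GGUF"
```

A mismatched checksum usually means an interrupted download, while a missing magic typically points to a file in a different format.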

Platform-Specific Solutions

Windows Optimization:

- Use Windows Terminal for better performance

- Configure Windows Defender exclusions

- Enable hardware acceleration

- Use WSL2 for Linux-based tools

macOS Configuration:

- Enable Metal acceleration

- Configure memory pressure

- Use Homebrew for dependencies

- Optimize for Apple Silicon

Linux Performance:

- Configure NUMA settings

- Enable appropriate CPU features

- Use package managers for dependencies

- Configure swap and memory

The Apatero.com Advantage for GGUF Models

While GGUF makes local AI accessible, managing multiple models and configurations can become complex for professionals who need consistent, high-quality results. Apatero.com bridges this gap by providing a professional-grade platform that uses GGUF's benefits while eliminating the technical complexity.

Why Professionals Choose Apatero.com for AI Generation:

GGUF-Powered Performance:

- Uses optimized GGUF models under the hood

- Automatic quantization selection for best results

- Professional-grade infrastructure

- Consistent, reliable performance

No Technical Overhead:

- No model management required

- Automatic updates and optimization

- Professional support and reliability

- Enterprise-grade security

Perfect for Teams Using Local AI:

- Businesses wanting GGUF benefits without complexity

- Teams needing consistent AI outputs

- Organizations requiring professional support

- Companies scaling AI operations

Seamless Integration:

- API access to GGUF-powered models

- Custom model deployment options

- Team collaboration features

- Professional workflow tools

Experience the power of GGUF models with enterprise reliability at Apatero.com. All the benefits of local AI with none of the technical overhead.

GGUF Changed Everything

The GGUF revolution represents more than just a file format improvement. It's a fundamental shift in how we think about AI accessibility and deployment. By making powerful language models available on consumer hardware, GGUF democratized AI in ways that seemed impossible just two years ago.

The Impact Is Undeniable:

- Millions of users now run AI models locally

- Privacy and security have been restored to AI applications

- Developing countries have gained access to modern AI

- Small businesses can compete with tech giants

- Innovation has been unleashed at the edge

The Revolution Continues: As GGUF evolves and new optimizations emerge, the gap between cloud and local AI performance continues to shrink. The future belongs to local AI, and GGUF is leading the charge.

Whether you're a developer looking to integrate AI into your applications, a business seeking private AI solutions, or an enthusiast wanting to explore the latest models, GGUF has made it all possible. The revolution is here, it's accessible, and it's running on the device in front of you.

Ready to join the GGUF revolution? Download Ollama, install your first GGUF model, and experience the future of local AI today. The power is literally in your hands.

Advanced GGUF Techniques for Power Users

Beyond basic deployment, advanced techniques help power users extract maximum value from GGUF models for specialized applications.

Custom Quantization for Specific Use Cases

While pre-quantized models cover most needs, creating custom quantizations optimizes for your specific hardware and quality requirements.

Use llama.cpp's quantization tools to create variants tuned to your system. If standard Q4_K_M slightly exceeds your RAM, create a custom Q3_K variant that fits comfortably. If you have headroom, create Q5 or Q6 variants for improved quality.
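Choosing a level for a given machine can be sketched as a simple search: walk the levels from highest to lowest quality and take the first one whose estimated size fits. The bits-per-weight values and the 4GB reserve for the operating system and KV cache are assumptions for illustration.

```python
# (name, approximate bits per weight), ordered from highest to lowest quality.
LEVELS = [("Q8_0", 8.5), ("Q6_K", 6.6), ("Q5_K_M", 5.7),
          ("Q4_K_M", 4.8), ("Q3_K_M", 3.9), ("Q2_K", 3.4)]

def pick_quantization(params_billions, ram_gb, reserve_gb=4.0):
    """Return the highest-quality level whose weights fit, or None."""
    budget = ram_gb - reserve_gb
    for name, bpw in LEVELS:
        if params_billions * bpw / 8 <= budget:
            return name
    return None

for params, ram in [(7, 16), (13, 16), (13, 12), (70, 32)]:
    print(f"{params}B on {ram}GB RAM -> {pick_quantization(params, ram)}")
```

A result of `None` means no level fits under these assumptions, which is the signal to pick a smaller model or offload layers to a GPU.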

Consider importance-matrix quantization for highest quality. This technique preserves quality better by analyzing which weights matter most. It requires more setup but produces superior results for the same file size.

Model Merging and Modification

GGUF supports model merging and modification workflows similar to image generation models. Combine LoRA adapters with base models for specialized capabilities.

Fine-tune base models on your specific use cases, then merge adaptations into GGUF format for efficient deployment. This creates specialized assistants optimized for particular domains while maintaining GGUF's deployment advantages.

For users also working with image generation, understanding LoRA merging concepts provides transferable knowledge across both domains.

Multi-Model Orchestration

Advanced applications use multiple GGUF models together. Route requests to appropriate specialized models based on query type. Use smaller models for simple tasks and larger models for complex ones.

Implement model switching that loads different GGUF models based on context. Keep frequently-used small models loaded while dynamically loading larger models for occasional complex queries. This balances responsiveness with capability.
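A routing rule can start very simply. The sketch below sends long queries, or queries containing certain keywords, to a larger model and everything else to a small one. The model names, keyword list, and word threshold are all hypothetical, and a production router would likely use a classifier rather than keywords.

```python
SMALL_MODEL = "small-7b-q4"    # hypothetical model names
LARGE_MODEL = "large-70b-q4"
COMPLEX_HINTS = ("analyze", "prove", "refactor", "step by step")

def choose_model(query, max_simple_words=40):
    """Pick a model name based on query length and a few keyword hints."""
    text = query.lower()
    if len(text.split()) > max_simple_words:
        return LARGE_MODEL
    if any(hint in text for hint in COMPLEX_HINTS):
        return LARGE_MODEL
    return SMALL_MODEL
```

Keeping the rule in one small function makes it easy to log routing decisions and tune the thresholds against real traffic.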

Orchestration frameworks like LangChain simplify multi-model workflows. They handle routing, switching, and result aggregation while you focus on application logic rather than model management.

Integration with Image Generation Workflows

GGUF language models complement image generation workflows. Use language models to enhance prompts, analyze generated images, and provide user interaction.

Build workflows where users describe needs in natural language, language models translate to image generation prompts, images generate, and language models describe results or suggest refinements. This creates sophisticated creative assistants that combine text and visual capabilities.

For the image generation side of such workflows, understanding ComfyUI sampler selection helps optimize visual output quality.

GGUF for Different User Categories

Different user categories benefit from GGUF in different ways. Understanding which approaches match your needs helps you deploy effectively.

Individual Developers

Individual developers gain cost-free experimentation and development capabilities. Run models without API costs during development, then decide whether to deploy locally or via API for production.

GGUF enables rapid prototyping without budget constraints. Try multiple models, experiment with configurations, and iterate freely. This experimentation informs architecture decisions for production deployments.

Small Businesses

Small businesses achieve AI capabilities previously requiring enterprise budgets. Customer service automation, content generation, and data analysis become feasible without subscription costs.

Deploy GGUF models on existing hardware for immediate value. Scale capabilities by adding or upgrading machines rather than increasing subscription tiers. Maintain data privacy by keeping all processing local.

Enterprises

Enterprises benefit from sovereignty and compliance. Sensitive data never leaves controlled infrastructure. Air-gapped deployment satisfies strict security requirements.

Custom model deployment enables proprietary capabilities unavailable through APIs. Fine-tune and deploy models optimized for company-specific needs without exposing training data or capabilities to third parties.

Researchers

Researchers gain reproducibility and control. Local deployment ensures consistent experimental conditions. Model access doesn't depend on API availability or pricing changes.

Modify and analyze models at levels impossible with API access. Understand model internals, test modifications, and develop improvements without black-box limitations.

Educators

Educators provide hands-on AI learning without per-student API costs. Students experiment freely, make mistakes, and learn iteratively without budget concerns.

Local deployment enables controlled environments where students focus on learning rather than navigating commercial services. Curricula can include model internals and optimization that APIs hide.

Infrastructure Planning for GGUF Deployment

Production GGUF deployment requires infrastructure planning beyond basic setup.

Capacity Planning

Estimate concurrent user load and required response times. Size infrastructure accordingly. GGUF's efficiency helps, but high-volume applications still need appropriate hardware.
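A back-of-the-envelope sizing can be sketched as follows, assuming each instance generates tokens at a fixed rate and requests are spread evenly. The function and parameter names are illustrative, and batching, prompt processing time, and traffic bursts are ignored, so treat the result as a lower bound.

```python
import math

def required_instances(concurrent_users, tokens_per_response,
                       target_seconds, tokens_per_second_per_instance):
    """Instances needed to serve all users within the target response time."""
    needed_tokens_per_second = concurrent_users * tokens_per_response / target_seconds
    return math.ceil(needed_tokens_per_second / tokens_per_second_per_instance)

# 20 concurrent users, 300-token answers, 15-second target, 50 tokens/s per instance.
print(required_instances(20, 300, 15, 50))
```

Measuring the real tokens-per-second figure on your own hardware and model is the most important input, since it varies widely with quantization level and context length.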

Plan for growth. Infrastructure that handles current load may not handle growth. Build scalability into your architecture from the start rather than retrofitting later.

Consider redundancy requirements. Mission-critical applications need failover capabilities. Deploy across multiple machines with load balancing for high availability.

Storage Architecture

GGUF models require substantial storage. Plan storage architecture for your model library and growth.

Fast storage improves model loading times. SSDs significantly outperform HDDs for loading multi-gigabyte model files. Consider NVMe for best performance on frequently-loaded models.

Backup strategies protect your model library and configurations. Treat production models as critical data requiring disaster recovery planning.

Monitoring and Observability

Production deployments need monitoring. Track inference latency, throughput, errors, and resource use.

Alerting notifies you of problems before users complain. Set thresholds for response times, error rates, and resource exhaustion. Automated alerts enable rapid response.
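A threshold check on tail latency is a common first alert. Here is a minimal sketch using the standard library's `statistics.quantiles`. The function names are illustrative, and in practice the samples would come from your metrics system over a sliding window.

```python
import statistics

def p95(latencies_ms):
    """95th percentile of a list of latencies (needs at least two samples)."""
    # n=100 yields 99 cut points; index 94 is the 95th percentile.
    return statistics.quantiles(latencies_ms, n=100)[94]

def should_alert(latencies_ms, threshold_ms):
    """True when the 95th percentile latency exceeds the threshold."""
    return p95(latencies_ms) > threshold_ms
```

Alerting on a percentile rather than the mean keeps a handful of slow requests from hiding behind many fast ones.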

Logging supports debugging and optimization. Record enough detail to diagnose issues without overwhelming storage. Balance observability needs with retention costs.

Maintenance Workflows

Plan for ongoing maintenance. Models update, security patches release, and configurations need adjustment.

Establish update workflows that test changes before production deployment. Staging environments catch problems before they affect users.

Document configurations so maintenance doesn't depend on individual knowledge. Runbooks enable anyone on the team to perform routine maintenance tasks.

For cloud deployment options that handle infrastructure complexity, see our RunPod beginner's guide.

The Broader Democratization Impact

GGUF's impact extends beyond technical capability to fundamental questions about AI accessibility and control.

Shifting Power Dynamics

GGUF shifted power from large API providers toward individual users and organizations. This democratization affects how AI develops and who benefits.

Users with local deployment don't depend on provider decisions about capabilities, pricing, or terms of service. They control their AI tools the way they control other software.

This independence enables innovation that API limitations would prevent. Users implement capabilities providers haven't offered and customize in ways APIs don't allow.

Privacy Implications

Local processing fundamentally changes privacy dynamics. Data never leaves your control, eliminating entire categories of privacy concerns.

This matters especially for sensitive domains: healthcare, legal, financial. GGUF enables AI assistance in contexts where API data sharing would be unacceptable.

Privacy by architecture beats privacy by policy. Local processing doesn't require trusting provider promises about data handling because data never reaches providers.

Environmental Considerations

Local deployment has different environmental characteristics than centralized APIs. Understanding these tradeoffs informs responsible deployment.

Data center efficiency typically exceeds consumer hardware efficiency for the same computation. But local deployment eliminates network transmission and enables exactly-sized deployment.

Right-sized local deployment using appropriate quantization can be more efficient than API over-provisioning. Match model size to actual needs rather than using larger models because APIs only offer certain tiers.

Future Autonomy

GGUF-based infrastructure provides future autonomy. You aren't dependent on provider business decisions, pricing changes, or service discontinuation.

This autonomy matters for long-term projects. Applications that will run for years benefit from not being subject to provider changes.

Skills developed for local deployment transfer across models and providers. You aren't locked into specific ecosystems or learning proprietary systems that become obsolete.

For users building comprehensive AI capabilities across text and image generation, understanding both GGUF for language models and Flux LoRA training for image models provides full-stack local AI capabilities.
