Getting Started with RunPod: Beginner's AI Guide

Start using RunPod for AI workloads with this beginner guide. Account setup, GPU selection, pod configuration, and cost management explained.

You want to train AI models or run Stable Diffusion workflows but lack a powerful local GPU. Cloud GPU rental sounds complicated, with confusing pricing, mysterious templates, and technical setup requirements that intimidate beginners. This guide shows that Runpod makes cloud GPU access straightforward with pay-as-you-go billing, pre-configured templates, and beginner-friendly interfaces. It also explains the key concepts that prevent expensive mistakes and frustrating troubleshooting. If you're completely new to AI image generation, start with our complete beginner guide first.

Quick Answer: Runpod provides on-demand cloud GPU rental starting at about $0.16/hour with no subscriptions, billed per second for actual usage. Deploy pods (virtual machines with GPUs) using 50+ pre-configured templates for PyTorch, ComfyUI, Stable Diffusion, or Jupyter notebooks. Add credits to your account ($10-50 to start), choose a GPU type (RTX 4090, A5000, H100, etc.), select a template, deploy an on-demand or spot instance, and connect via your web browser. Network volumes provide persistent storage across pod sessions. This guide also explains storage costs, how to choose between Secure and Community Cloud, and how to stop pods properly to prevent billing surprises.

- Pay-as-you-go with per-second billing, no subscriptions or minimum commitments

- 50+ templates simplify deployment for common AI tasks (ComfyUI, Automatic1111, training)

- On-demand pods guarantee availability, spot instances save 50-70% but can be interrupted

- Network volumes persist data between pod sessions ($0.10/GB monthly)

- Stop pods when they're not in use to avoid GPU charges, but container disk still costs $0.20/GB monthly while a pod is stopped

What Is Runpod and Why Use It for AI Work?

Runpod provides cloud-based GPU infrastructure optimized for AI and machine learning workloads. Instead of buying expensive GPUs for local use, you rent them temporarily through Runpod and pay only for actual usage time.

The platform targets AI practitioners, researchers, content creators, and developers needing powerful GPUs without capital investment. Whether you're training LoRAs, running ComfyUI workflows, fine-tuning language models, or experimenting with AI tools, Runpod provides the compute power.

The pay-as-you-go model eliminates subscriptions and contracts. Add credits to your account (starting at $10), use what you need, and billing happens per second. When you stop your pod, charges stop (except persistent storage). This flexibility prevents paying for unused capacity.

GPU selection ranges from consumer cards like RTX 3090 and RTX 4090 to professional cards like A5000, A6000, and datacenter GPUs like A100 and H100. Different GPUs suit different workloads and budgets. More powerful GPUs cost more per hour but complete work faster, potentially saving money overall.
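To see why a pricier GPU can still end up cheaper overall, here is a minimal Python sketch of per-second billing arithmetic. The rates are the rough figures quoted in this guide. The 2.5x H100 speedup is a hypothetical assumption for illustration, not a benchmark; real speedups depend heavily on the workload.

```python
def job_cost(hourly_rate: float, runtime_seconds: float) -> float:
    """Cost of one job under per-second billing (hourly_rate in $/hour)."""
    return hourly_rate * runtime_seconds / 3600


# Hypothetical job: 3 hours on an RTX 4090 at $0.60/hour.
baseline_seconds = 3 * 3600
rtx4090_cost = job_cost(0.60, baseline_seconds)

# Assumed (not measured) 2.5x speedup on an H100 at $2.50/hour.
h100_cost = job_cost(2.50, baseline_seconds / 2.5)

# The faster GPU only saves money if its speedup exceeds the price ratio.
break_even_speedup = 2.50 / 0.60

print(f"RTX 4090: ${rtx4090_cost:.2f}")
print(f"H100:     ${h100_cost:.2f}")
print(f"H100 must be at least {break_even_speedup:.1f}x faster to cost less")
```

With these assumed numbers the H100 costs more ($3.00 vs $1.80). Its speedup would have to exceed the price ratio of about 4.2x before it saved money.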

- No upfront investment: Avoid $1500-5000 GPU purchase costs

- Instant access: Deploy pods in seconds, no hardware setup or waiting

- Flexible scaling: Use powerful H100 for big jobs, cheaper RTX 4090 for experiments

- Pre-configured templates: ComfyUI, Automatic1111, training environments ready instantly

- No maintenance: No driver updates, cooling concerns, or hardware failures to manage

Templates are pre-configured environments with software already installed. Instead of manually setting up PyTorch, CUDA, dependencies, and applications, you choose a template and get a working environment immediately. Over 50 official and community templates cover common use cases. Start with the official ones while you're learning.

The two-tier cloud structure offers choices. Secure Cloud provides enterprise-grade datacenter GPUs with 99.9% uptime guarantees and reliable availability. Community Cloud aggregates GPUs from individuals and smaller providers, offering lower prices but potentially less reliability. Beginners typically start with Secure Cloud for consistency.

Browser-based access simplifies connectivity. Connect to Jupyter notebooks, SSH terminals, or application interfaces directly through your browser without complex SSH key setup or local tools. This accessibility makes Runpod less intimidating than traditional cloud platforms.

For users wanting AI capabilities without managing any infrastructure, platforms like Apatero.com provide fully hosted solutions with no setup required, though cloud GPU rental like Runpod offers maximum flexibility.

How Do You Create Your First Runpod Pod?

Creating your first pod involves a few straightforward steps. Understanding these basics prevents confusion and helps you get started quickly.

Account creation begins at runpod.io with email registration or Google sign-in. Verify your email and complete basic profile information. New accounts receive occasional promotional credits, but plan to add payment credits for real usage.

Adding credits happens through the billing section. Minimum credit purchase typically starts at $10, though you can add more for extended use. Payment methods include credit cards and cryptocurrency. Credits don't expire, so unused amounts remain available for future sessions.

Navigate to the Pods section after adding credits. This dashboard shows available GPU types, pricing, and deployment options. The interface displays current availability for different GPUs across Secure and Community Cloud.

- Add at least $10-20 credits to avoid immediate funding interruptions

- Choose on-demand over spot for first deployment to guarantee availability

- Select a template matching your immediate needs (ComfyUI, Jupyter, etc.)

- Understand stopping pods is manual - they continue billing until you stop them

- Check the pricing per hour for your chosen GPU before deploying

GPU selection depends on your task and budget. For Stable Diffusion generation and light training, the RTX 4090 ($0.50-0.70/hour, 24GB VRAM) provides excellent value. For serious LoRA training or fine-tuning, covered in our LoRA training troubleshooting guide, the A6000 ($0.50-0.70/hour) offers 48GB of VRAM, twice the RTX 4090's 24GB. The A5000 (around $0.29/hour) is a cheaper 24GB option. For large model training, the A100 or H100 ($1.50-2.50/hour) delivers professional performance.

Template selection from the dropdown menu determines what software comes pre-installed. Popular beginner choices include RunPod PyTorch for general ML work, RunPod Stable Diffusion for Automatic1111 WebUI, RunPod ComfyUI for ComfyUI workflows, and RunPod Jupyter for interactive Python notebooks.

GPU count typically stays at 1 for beginners. Multi-GPU setups serve advanced distributed training scenarios that beginners don't need. Stick with single GPU to keep costs manageable.

Instance pricing offers two options. On-demand instances guarantee availability but cost full price. Spot instances can save 50-70% but may be interrupted if higher-paying customers need capacity. For learning and experimentation, spot instances work well. For important deadlines, pay for on-demand reliability.

Container disk size defaults to 50GB and can be raised to several hundred GB. Treat this pod-local storage as temporary: it belongs to that one pod and is deleted when you terminate it. For persistent storage across sessions, configure network volumes separately.

Clicking Deploy On-Demand or Deploy Spot starts your pod. Deployment takes 30-120 seconds while Runpod provisions the GPU, loads the template, and initializes the environment. Progress appears in the pods dashboard.

Once running, the Connect button appears with multiple connection options. Choose from SSH Terminal (command-line access), HTTP Services (web interfaces like ComfyUI or Jupyter), or direct port connections depending on your template and needs.

What Do All the Connection Options Mean?

Runpod provides multiple ways to interact with your pod. Knowing which one to use helps you access your work effectively.

HTTP Services appear when templates expose web interfaces. ComfyUI templates show :8188 for the ComfyUI interface. Automatic1111 templates show :3000 for the WebUI. Jupyter templates show :8888 for Jupyter Lab. Click these to open the application in your browser without additional setup.

SSH Terminal provides command-line access to your pod. The browser-based terminal works immediately without SSH keys or local tools. Use this for running commands, installing additional packages, or managing files. More advanced users can use the SSH connection string for local terminal access.

- HTTP :8188: ComfyUI web interface

- HTTP :3000: Automatic1111 WebUI

- HTTP :8888: Jupyter Lab notebook environment

- SSH Terminal: Command-line access via browser

- TCP connections: Direct service access for advanced use
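If you want to bookmark or script these endpoints, a small helper can build the links. This sketch assumes Runpod's HTTP proxy pattern `https://<pod-id>-<port>.proxy.runpod.net`. Confirm that pattern against the link the Connect menu actually opens. The pod ID below is a placeholder.

```python
# Ports named in this guide; templates may differ, so treat this as a starting point.
TEMPLATE_PORTS = {
    "comfyui": 8188,
    "automatic1111": 3000,
    "jupyter": 8888,
}


def proxy_url(pod_id: str, port: int) -> str:
    """Build an HTTP proxy URL for a pod port.

    Assumes the pattern https://<pod_id>-<port>.proxy.runpod.net; verify it
    against the link the Connect menu opens for your pod.
    """
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return f"https://{pod_id}-{port}.proxy.runpod.net"


pod_id = "abc123xyz"  # hypothetical pod ID; copy yours from the dashboard
for app, port in TEMPLATE_PORTS.items():
    print(f"{app:>14}: {proxy_url(pod_id, port)}")
```

The same function works for custom ports you expose yourself. Just pass the port number you added in the pod settings.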

Jupyter Lab (port 8888) provides an interactive Python notebook environment. Use this for code experimentation, data analysis, file management, and installing packages through notebook cells. The file browser on the left lets you upload/download files.

ComfyUI (port 8188) opens the full ComfyUI node-based interface. Load workflows, generate images, manage models, and access all ComfyUI features through your browser. The interface performs identically to local ComfyUI but uses your rented GPU. For learning ComfyUI basics, see our essential nodes guide.

Automatic1111 (port 3000) provides the Stable Diffusion WebUI most users know. Generate images, train models, manage extensions, and use familiar workflows. Your pod's GPU handles generation while you control everything through the browser.

File management happens through Jupyter's file browser or SSH terminal commands. Upload models, datasets, or files through Jupyter's upload button. Download generated images or trained models through the browser. For large transfers, wget commands in SSH terminals often work better than browser uploads.

Custom ports require explicit exposure in pod settings. If you install software listening on different ports, add those port numbers (comma-separated) in the "Expose HTTP Ports" field when deploying. This makes services accessible through the Connect menu.

The connection options appear only while your pod runs. Once stopped, connections become unavailable until you restart the pod. Work in progress should be saved to network volumes or downloaded before stopping.

How Does Pricing Actually Work on Runpod?

Understanding Runpod's pricing is critical for budget management. It prevents billing surprises and helps you optimize costs. Several components contribute to total charges.

GPU rental forms the primary cost, billed per second based on GPU type. Prices range from approximately $0.16/hour for lower-end GPUs on Community Cloud to $2.50/hour for H100s on Secure Cloud. Common options include RTX 3090 at $0.20-0.30/hour, RTX 4090 at $0.50-0.70/hour, A5000 at $0.29/hour, and A6000 at $0.50-0.70/hour.

On-demand versus spot pricing creates the largest cost difference. On-demand guarantees availability at listed prices. Spot instances offer 50-70% discounts but can be interrupted. For a $0.50/hour on-demand GPU, the spot price might be $0.15-0.25/hour. Significant savings accumulate over hours of use.
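Here is the discount arithmetic as a short sketch, using the 50-70% band quoted above. Actual spot prices float with demand, so treat the band as an assumption rather than a fixed rule.

```python
def spot_price_range(on_demand_rate: float, min_discount: float = 0.50,
                     max_discount: float = 0.70) -> tuple[float, float]:
    """Return (cheapest, most expensive) spot rate for an assumed discount band."""
    return (on_demand_rate * (1 - max_discount), on_demand_rate * (1 - min_discount))


low, high = spot_price_range(0.50)
print(f"Spot: ${low:.2f}-{high:.2f}/hour")

# Savings over a hypothetical 40 hours of experimentation.
hours = 40
print(f"Savings: ${(0.50 - high) * hours:.2f} to ${(0.50 - low) * hours:.2f}")
```

Over those hypothetical 40 hours, the band works out to $10-14 saved versus on-demand.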

- Use spot instances: Save 50-70% for non-urgent work

- Stop pods immediately: Billing continues until you manually stop

- Right-size GPUs: Don't rent H100 for simple SD generation

- Use network volumes: Avoid re-downloading models each session

- Batch work: Complete multiple tasks in one session to minimize startup overhead

Container disk storage costs $0.10/GB per month while the pod runs and $0.20/GB per month while it is stopped. At that rate, the default 50GB container disk costs $10/month (50 × $0.20) when stopped. This storage is temporary and is deleted when you terminate (not stop) your pod. Many beginners don't realize that stopped pods still incur container storage charges.
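The running-versus-stopped rates translate into monthly costs as sketched below. The sketch uses this guide's $0.10 and $0.20/GB figures and assumes storage charges are prorated by time.

```python
RUNNING_RATE = 0.10  # $/GB per month while the pod runs (this guide's figure)
STOPPED_RATE = 0.20  # $/GB per month while the pod is stopped (this guide's figure)


def disk_cost(size_gb: float, running_days: float, stopped_days: float,
              days_in_month: float = 30) -> float:
    """Monthly container disk cost, assuming charges are prorated by days."""
    running = size_gb * RUNNING_RATE * running_days / days_in_month
    stopped = size_gb * STOPPED_RATE * stopped_days / days_in_month
    return running + stopped


print(f"50GB, stopped all month:          ${disk_cost(50, 0, 30):.2f}")
print(f"50GB, 3 days running, 27 stopped: ${disk_cost(50, 3, 27):.2f}")
print(f"Terminated pod (no disk left):    ${disk_cost(50, 0, 0):.2f}")
```

The middle case shows the trap: a pod used for only three days but left stopped the rest of the month costs almost as much in storage as one that was never started.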

Network volumes provide persistent storage across sessions. They are billed on their allocated size, about $0.10/GB per month, whether or not a pod is using them. Because network volumes persist independently of pods, you can stop or terminate pods without losing the data stored on them.

Data transfer costs are minimal for most users. Runpod includes substantial free egress, and typical AI workflows don't generate enough traffic to exceed included amounts. Large-scale inference serving or constant massive file downloads could incur transfer fees.

Minimum charges don't exist beyond your actual usage. You can deploy a pod for 5 minutes, complete a task, and pay only for those 5 minutes. This granularity makes experimenting affordable.

Running out of credits stops your pods automatically. If your balance reaches zero, running pods are stopped to prevent a negative balance. Keep adequate credits to avoid mid-training interruptions, and set low-balance notifications in account settings.
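A quick way to judge whether your balance is adequate is to divide it by your combined hourly burn rate. This sketch does exactly that. The 25% safety buffer and the example balance are assumptions, not Runpod settings.

```python
def hours_remaining(balance: float, hourly_rates: list[float]) -> float:
    """Hours until credits run out if all listed pods keep running."""
    burn = sum(hourly_rates)
    return float("inf") if burn == 0 else balance / burn


def enough_for(balance: float, hourly_rates: list[float], planned_hours: float,
               buffer: float = 1.25) -> bool:
    """True if the balance covers the planned run plus an assumed 25% buffer."""
    return hours_remaining(balance, hourly_rates) >= planned_hours * buffer


# Hypothetical: $12 of credit, one A5000 ($0.29/h) and one RTX 4090 ($0.60/h) running.
rates = [0.29, 0.60]
print(f"{hours_remaining(12.0, rates):.1f} hours left")
print("OK to start a 10-hour run:", enough_for(12.0, rates, 10))
```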

Billing transparency in the dashboard shows real-time usage. Check current charges, usage history, and credit balance anytime. This visibility helps you understand costs and adjust usage patterns.

What Are Network Volumes and Do You Need One?

Network volumes are strongly recommended. They provide persistent storage that survives pod terminations. Understanding when and how to use them prevents data loss and storage cost surprises.

Network volumes exist independently from pods as persistent SSD-backed storage. Create a network volume once, then attach it to any pod. Data stored there persists when you stop or terminate pods, preventing loss of downloaded models, training datasets, and generated outputs.

The primary use case is avoiding repeated downloads. A ComfyUI model collection typically totals 10-50GB. Re-downloading it every time you deploy a new pod wastes time and bandwidth. Instead, store models in a network volume, attach that volume to new pods, and access them immediately.

- Store model checkpoints, LoRAs, and other reusable files in network volumes

- Use 50-100GB volumes for typical Stable Diffusion workflows

- Attach the same volume to different pods to share models across sessions

- Let writes finish before terminating pods so files on the volume are complete

- Regularly clean unused files to minimize storage costs

Size selection balances capacity and cost. A 50GB volume costs $5/month, 100GB costs $10/month, 200GB costs $20/month. Choose based on your model collection size. Stable Diffusion users typically need 50-150GB.

Creation happens in the Storage section of the dashboard. Specify size, select datacenter region, name the volume, and create. Empty volumes are billed from creation, so create them when you're ready to use them, not far in advance.

Attachment to pods occurs during deployment or while pods run. In the deployment screen, expand the advanced options and select your network volume from the dropdown. It mounts at /workspace or a specified path depending on template configuration.

File organization within network volumes affects workflow efficiency. Create directories for models, outputs, datasets, and temporary files. Most templates expect models in specific paths like /workspace/models or /workspace/ComfyUI/models. Check template documentation for expected structure.
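You can create a directory layout like the one described above in one step when you first attach a volume. The subdirectory names below are hypothetical. Templates such as ComfyUI expect their own paths (for example /workspace/ComfyUI/models), so adapt the list to your template's documentation.

```python
from pathlib import Path

# Hypothetical layout; match the paths your template actually expects.
SUBDIRS = ["models/checkpoints", "models/loras", "outputs", "datasets", "tmp"]


def create_layout(root: str = "/workspace") -> list[Path]:
    """Create the directory tree under root (safe to rerun) and return the paths."""
    base = Path(root)
    created = []
    for sub in SUBDIRS:
        path = base / sub
        path.mkdir(parents=True, exist_ok=True)  # no error if it already exists
        created.append(path)
    return created
```

Calling `create_layout()` on a fresh pod creates the tree under /workspace. Calling it again is harmless because existing directories are left alone.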

Data transfer to network volumes happens through file uploads in Jupyter, wget commands in SSH terminals, or cloning git repositories. For large files, wget or direct download links work better than browser uploads. Many users create scripts to automatically download common models on first pod deployment.
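A first-deployment script like that can be a few lines of standard-library Python. In this sketch, the URL and paths in `MODELS` are placeholders, and the script assumes direct download links that need no authentication. Files already on the network volume are skipped, so rerunning the script on every new pod only fetches what's missing.

```python
import urllib.request
from pathlib import Path

# Hypothetical manifest: replace with real direct-download URLs and target paths.
MODELS = {
    "https://example.com/models/my-checkpoint.safetensors":
        "/workspace/models/checkpoints/my-checkpoint.safetensors",
}


def fetch_missing(manifest: dict[str, str]) -> list[Path]:
    """Download each URL unless its destination already exists; return new files."""
    downloaded = []
    for url, dest in manifest.items():
        dest_path = Path(dest)
        if dest_path.exists():
            continue  # already on the network volume, skip re-downloading
        dest_path.parent.mkdir(parents=True, exist_ok=True)
        partial = dest_path.with_name(dest_path.name + ".part")
        urllib.request.urlretrieve(url, partial)  # download under a temporary name
        partial.rename(dest_path)  # only a finished download gets the final name
        downloaded.append(dest_path)
    return downloaded
```

Run `fetch_missing(MODELS)` from the SSH or Jupyter terminal after attaching the volume. Downloading under a `.part` name means an interrupted transfer is not later mistaken for a complete model.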

Terminating a pod does not delete its network volume, but anything still being written at that moment can be lost or left incomplete. Stop your running processes (or the pod) first, confirm your files are saved on the volume, and only then terminate the pod.

Multiple pods can share one network volume sequentially but not simultaneously. You can't attach the same volume to multiple running pods. This prevents file corruption from concurrent access. For simultaneous access, each pod needs its own volume or you share files through other means.

How Do You Avoid Common Beginner Mistakes?

New Runpod users commonly make predictable mistakes that waste money or cause frustration. Knowing these pitfalls in advance can save you significant money.

Forgetting to stop pods represents the most expensive mistake. Pods continue running and billing until you explicitly stop them. Closing your browser doesn't stop the pod. Finishing your work doesn't stop the pod. You must click the Stop button in the dashboard. Set phone reminders if needed.

Misunderstanding container disk costs causes surprise charges. Stopped pods still bill for container disk storage at $0.20/GB monthly, so a stopped pod with a 100GB container disk costs $20/month. This adds up across multiple stopped pods. Terminate pods you're completely done with rather than leaving them stopped indefinitely.

- Leaving pods running: Check dashboard before logging off, always stop idle pods

- Storing everything in container disk: Use network volumes for persistent important data

- Choosing wrong GPU: Match GPU to task, don't waste H100 time on simple work

- Re-downloading models every session: Use network volumes to cache models

- Not monitoring credits: Keep adequate balance to prevent mid-training interruptions

Choosing overpowered GPUs wastes money. Stable Diffusion image generation doesn't need an H100 at $2.50/hour when an RTX 4090 at $0.60/hour handles the task well at roughly a quarter of the price. Match GPU selection to workload requirements rather than always choosing the most powerful option.

Ignoring network volumes leads to repetitive work. Downloading 20GB of models every pod session wastes 10-30 minutes and bandwidth. One-time network volume setup saves this repeated overhead across all future sessions.

Template confusion causes setup problems. Choosing a PyTorch template when you want ComfyUI means manually installing ComfyUI. Choose templates matching your actual needs. The template picker shows descriptions helping identify appropriate options.

Insufficient storage size creates mid-task failures. Estimating storage needs conservatively prevents running out of space during generation or training. If you need 80GB, allocate 100GB for safety margin.
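The 80GB-to-100GB rule of thumb amounts to a 25% margin. This sketch assumes that margin and rounds up to the next 10GB; both numbers are adjustable.

```python
import math


def allocate_gb(needed_gb: float, margin: float = 0.25, step_gb: int = 10) -> int:
    """Add a safety margin, then round up to the next multiple of step_gb."""
    return math.ceil(needed_gb * (1 + margin) / step_gb) * step_gb


print(allocate_gb(80))  # prints 100
```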

Not reading template documentation leads to confusion. Each template has specific file paths, startup procedures, and quirks. Spending 5 minutes reading template docs prevents hours of troubleshooting. Official templates have documentation links in descriptions.

Spot instance interruptions surprise beginners. Spot instances can be reclaimed with short notice, potentially losing unsaved work. For long training runs or critical deadlines, use on-demand instances. For experimentation and generation, spot instances work fine.

SSH connection confusion happens when beginners expect complex setup. The browser-based SSH terminal works immediately without keys or configuration. Click "SSH Terminal" in the Connect menu and a terminal opens. No local SSH client required for basic use.

File transfer struggles occur when trying to upload large files through browser interfaces. For files over 100MB, use wget commands in the SSH terminal or Jupyter terminal. Provide direct download links and wget handles transfers efficiently.

Frequently Asked Questions

How much does Runpod actually cost for beginners?

Typical beginner usage ranges from $5-30/month depending on frequency and GPU choice. Occasional Stable Diffusion generation (5-10 hours/month on RTX 4090 at $0.60/hour) costs $3-6/month. Regular LoRA training (20 hours/month on A5000 at $0.29/hour) costs $6/month plus $5-10/month for network storage. Heavy users training frequently might spend $20-50/month. The pay-per-second model means actual costs match actual usage.
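The scenarios above are simple rate-times-hours arithmetic plus storage. This sketch reproduces two of them using this guide's example rates. For the training case, it assumes a hypothetical 50GB network volume at $0.10/GB per month.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    gpu_hours: float
    gpu_rate: float             # $/hour for the chosen GPU
    volume_gb: float = 0.0      # network volume size
    volume_rate: float = 0.10   # $/GB per month (this guide's figure)

    def monthly_cost(self) -> float:
        """GPU time plus network volume storage for one month."""
        return self.gpu_hours * self.gpu_rate + self.volume_gb * self.volume_rate


scenarios = {
    "Occasional SD (10h, RTX 4090)": Usage(10, 0.60),
    "LoRA training (20h, A5000, 50GB volume)": Usage(20, 0.29, volume_gb=50),
}
for name, usage in scenarios.items():
    print(f"{name}: ${usage.monthly_cost():.2f}")
```

This prints $6.00 and $10.80, matching the ranges given above.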

What happens if I run out of credits while a pod is running?

Runpod automatically stops your pod when credits reach zero to prevent negative balances. Any unsaved work in volatile storage (not network volumes) may be lost. Processes terminate immediately without graceful shutdown. To prevent this, monitor your credit balance and enable low-balance notifications. Keep a buffer of credits above your expected daily usage to avoid interruptions during long training runs.

Can I use Runpod for free to try it out?

Runpod occasionally offers promotional credits for new accounts (typically $5-10) but has no permanent free tier. You must add payment credits to deploy pods beyond promotional amounts. The minimal commitment (pennies for short pod sessions) makes trying Runpod affordable. Deploy an RTX 4090 pod for 15 minutes and spend only $0.15 experimenting with the platform.

Which GPU should I choose for Stable Diffusion and ComfyUI?

RTX 4090 provides the best value for Stable Diffusion generation and ComfyUI workflows, offering 24GB VRAM at $0.50-0.70/hour. The 24GB handles SDXL, Flux, and complex ComfyUI workflows comfortably. For VRAM optimization techniques, see our VRAM flags guide. RTX 3090 at $0.20-0.30/hour works for SD 1.5 and lighter workflows. Only move to A100 or H100 for actual model training or large batch processing where the speed improvement justifies higher costs.

How do I download models to my pod without re-downloading every time?

Create a network volume (50-100GB), attach it to your pod during deployment, and download models to paths within the network volume (typically /workspace). The network volume persists after stopping the pod. When you deploy new pods, attach the same network volume and models are immediately available. Use wget commands in SSH terminals for efficient large file downloads.

What's the difference between stopping and terminating a pod?

Stopping pauses your pod, keeping its configuration and container disk but stopping GPU billing. You can restart stopped pods later. Container disk storage still costs $0.20/GB monthly when stopped. Terminating deletes the pod entirely including container disk, stopping all charges. Network volumes persist independently. Stop pods you'll reuse soon, terminate pods you're done with completely.

Can I run multiple pods simultaneously?

Yes, you can run multiple pods at once if you have sufficient credits. Each pod bills independently. Useful for running separate ComfyUI and training pods, or comparing different GPU performance. Most beginners stick to one pod at a time to control costs, but multi-pod use is fully supported.

How do I connect to ComfyUI or Automatic1111 on my pod?

Click the "Connect" button on your running pod in the dashboard. Select "HTTP Service" and choose the appropriate port (8188 for ComfyUI, 3000 for Automatic1111). A new browser tab opens with the interface. If you see errors, wait 30-60 seconds for services to fully initialize after pod deployment, then try again. Templates automatically configure these services.
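Instead of refreshing the browser, you can check from the pod's SSH terminal whether a service is accepting connections yet. This sketch polls a TCP port using the standard library; the 90-second timeout is an assumption. An open port only means the web server is listening, so the interface may still be loading models.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 90.0,
                  interval: float = 2.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout elapses."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True  # something is listening on the port
        except OSError:
            time.sleep(interval)  # refused or timed out; try again shortly
    return False
```

For example, running `wait_for_port("127.0.0.1", 8188)` inside the pod returns `True` once ComfyUI's server is listening.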

What if my pod gets interrupted on a spot instance?

Spot instances can be reclaimed with little or no warning, which terminates your pod. Unsaved work on the container disk is lost, while work saved to network volumes persists. If interruptions cause problems, switch to on-demand instances for guaranteed availability. Spot instances work well for generation and exploration, where interruptions are inconvenient but not catastrophic.
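Protecting long jobs on spot instances mostly comes down to saving progress to the network volume often. This sketch writes a small JSON checkpoint every `save_every` steps and resumes from it after a restart. The path is hypothetical. The write-then-rename pattern is atomic on local POSIX filesystems but may offer weaker guarantees on network filesystems.

```python
import json
import os
from pathlib import Path

# Hypothetical location on the network volume.
CHECKPOINT = Path("/workspace/checkpoints/progress.json")


def save_checkpoint(state: dict, path: Path = CHECKPOINT) -> None:
    """Write to a temp file, then rename over the old checkpoint.

    On local POSIX filesystems readers see either the old or the new file,
    never a half-written one; network filesystems may not guarantee this.
    """
    path.parent.mkdir(parents=True, exist_ok=True)
    tmp = path.with_suffix(path.suffix + ".tmp")
    with open(tmp, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp, path)


def load_checkpoint(path: Path = CHECKPOINT) -> dict:
    """Return saved state, or a fresh start if no checkpoint exists yet."""
    if path.exists():
        with open(path) as f:
            return json.load(f)
    return {"step": 0}


def run(total_steps: int, save_every: int = 100, path: Path = CHECKPOINT) -> dict:
    """Resume from the last checkpoint and save progress periodically."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        ...  # one unit of work (training step, batch of images, etc.)
        state["step"] = step + 1
        if state["step"] % save_every == 0:
            save_checkpoint(state, path)
    save_checkpoint(state, path)
    return state
```

If a spot pod is reclaimed, deploying a new pod with the same volume attached and calling `run()` again continues from the last saved step instead of starting over.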

How do I install additional Python packages or software?

Access your pod via SSH Terminal through the Connect menu. Use standard package managers like pip for Python packages (pip install package-name) or apt for system packages (apt-get install software). Changes to container disk persist while the pod runs but disappear after termination unless using network volumes. For permanent custom environments, consider creating custom templates.

Mastering Runpod for AI Workflows

This guide has covered the essential knowledge for getting started. Runpod democratizes access to powerful GPUs through accessible pay-as-you-go pricing, pre-configured templates, and browser-based interfaces. The platform removes barriers that traditionally kept powerful compute resources exclusive to those who could afford hardware investments.

Understanding the fundamentals of pod deployment, template selection, storage options, and connection methods enables productive use from your first session. The key insights involve properly managing pod lifecycle to control costs, using network volumes to avoid repeated setup, and matching GPU selection to actual workload requirements.

Common beginner mistakes around forgetting to stop pods, misunderstanding storage costs, and choosing inappropriate GPUs become avoidable with awareness. The platform's per-second billing and transparent costs make it practical to experiment, learn, and grow usage as your needs increase.

For users wanting AI capabilities without any infrastructure management, platforms like Apatero.com provide fully hosted solutions with zero setup, though cloud GPU rental through Runpod offers maximum flexibility and control for users willing to handle basic pod management.

As AI tools continue advancing and computational requirements grow, cloud GPU access through platforms like Runpod enables practitioners at all levels to participate in AI development, training, and deployment without capital barriers. The combination of flexible pricing, comprehensive GPU selection, and beginner-friendly templates positions Runpod as an accessible entry point to cloud GPU computing in 2025. We hope this guide has given you the confidence to get started with cloud GPU rental.

Ready to Create Your AI Influencer?

Join 115 students mastering ComfyUI and AI influencer marketing in our complete 51-lesson course.

Related Articles

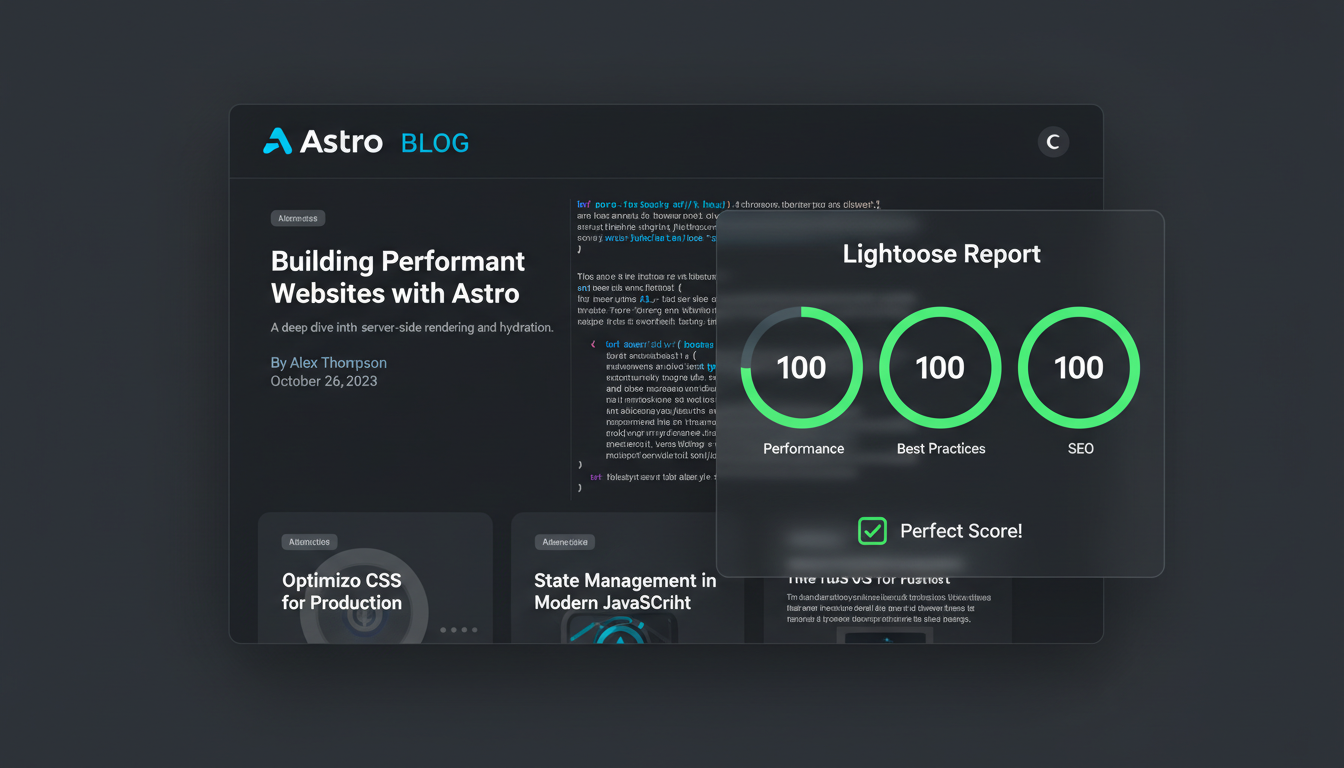

Astro Web Framework: The Complete Developer Guide for 2025

Discover why Astro is changing web development in 2025. Complete guide to building lightning-fast websites with zero JavaScript overhead and modern tooling.

Best AI for Programming in 2025

Comprehensive analysis of the top AI programming models in 2025. Discover why Claude Sonnet 3.5, 4.0, and Opus 4.1 dominate coding benchmarks and...

Best Astro Blog Templates for SEO: Developer-Friendly Options in 2025

Discover the best Astro blog templates optimized for SEO and performance. Compare features, customization options, and deployment strategies for developer blogs.