ComfyUI Basic Essential Nodes Every Beginner Needs to Know

Master the fundamental ComfyUI nodes in 2025. Complete beginner's guide to Load Checkpoint, CLIP Text Encode, KSampler, VAE Decode, and basic workflow creation.

What Are the 5 Essential ComfyUI Nodes?

The 5 essential ComfyUI nodes are: Load Checkpoint (loads AI models), CLIP Text Encode (converts text to AI language), Empty Latent Image (sets canvas size), KSampler (generates images), and VAE Decode (makes images visible). Master these nodes and you can create any AI image.

- Load Checkpoint: Loads your AI model (SD 1.5, SDXL, FLUX) and provides MODEL, CLIP, and VAE outputs

- CLIP Text Encode: Converts your text prompts into AI-understandable format (use two: positive and negative)

- Empty Latent Image: Creates your canvas size (512x512 for testing and SD 1.5 models, 1024x1024 for SDXL and FLUX)

- KSampler: The generation engine - use 20-30 steps, CFG 7-12 (for SD 1.5 and SDXL), and the euler_ancestral or dpmpp_2m sampler

- VAE Decode: Converts AI data into visible images you can save and share

Quick Start: Connect Load Checkpoint → CLIP Text Encode → KSampler → VAE Decode, feed Empty Latent Image into KSampler, and send the decoded image to a Save Image node. Set 512x512 size, 25 steps, CFG 8. Generate your first image in under 5 minutes.

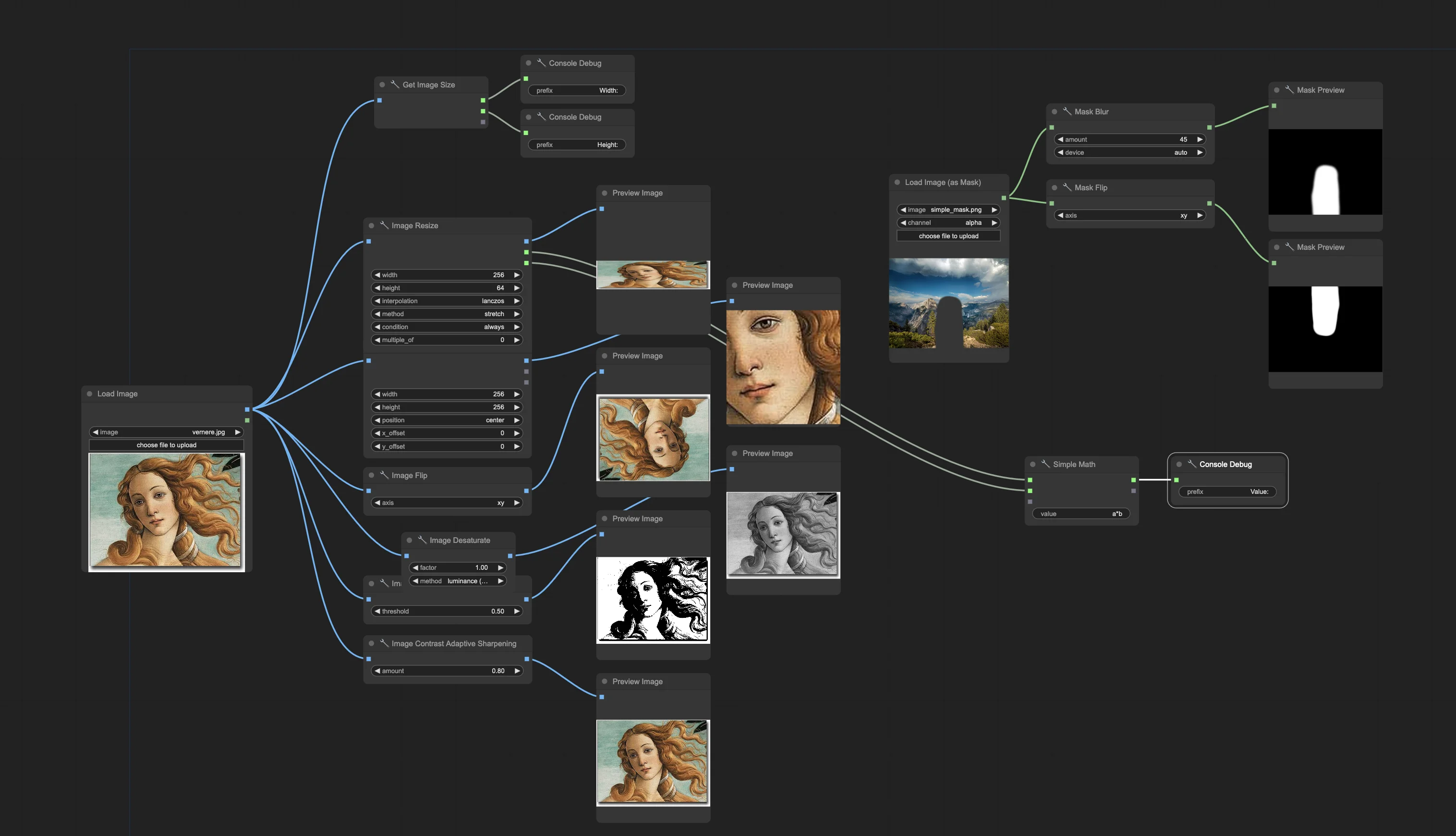

You've heard about ComfyUI's incredible power for AI image generation, but opening it for the first time feels like staring at a circuit board.

Nodes everywhere, connections that make no sense, and workflows that look more like engineering diagrams than creative tools.

Every expert started exactly where you are right now - confused by the interface but excited by the possibilities.

The difference between giving up and mastering ComfyUI comes down to understanding five essential nodes that form the backbone of every workflow.

Once you grasp these fundamentals, ComfyUI transforms from an intimidating maze into an intuitive canvas where your creative ideas become stunning AI-generated images.

What Are ComfyUI Nodes and How Do They Work?

Think of ComfyUI nodes like LEGO blocks for AI image generation.

Each node performs one specific task, and you connect them together to create complete workflows.

Unlike other AI tools that hide the process behind simple buttons, ComfyUI shows you exactly how image generation works.

What Makes Nodes Powerful: Every node has three parts - inputs (left side), outputs (right side), and parameters (center controls). You connect outputs from one node to inputs of another, creating a flow of data that transforms your text prompt into a final image.

Why This Matters: Understanding nodes gives you complete control over the generation process. Instead of being limited by preset options, you can customize every aspect of how your images are created.

While platforms like Apatero.com provide instant AI image generation without any setup complexity, learning ComfyUI's node system gives you remarkable creative control and customization possibilities.

The 5 Essential Nodes You Must Know

1. Load Checkpoint - Your AI Model Foundation

What It Does: The Load Checkpoint node loads your AI model (like Stable Diffusion 1.5, SDXL, or FLUX) and provides three essential components for image generation.

Three Outputs Explained:

- MODEL: The actual image generator that creates pictures from noise

- CLIP: The text processor that understands your prompts

- VAE: The translator between human-viewable images and AI-readable data

How to Use It:

- Add a Load Checkpoint node to your canvas

- Click the model name dropdown to select your preferred model

- Connect the three outputs to other nodes that need them

Common Models for Beginners:

- Stable Diffusion 1.5: Fast, reliable, great for learning

- SDXL: Higher quality, slightly slower

- FLUX: Newer architecture with excellent results, but much higher VRAM requirements

2. CLIP Text Encode - Converting Words to AI Language

What It Does: CLIP Text Encode nodes convert your text prompts into mathematical representations that the AI model can understand and use for image generation.

Two Types You Need:

- Positive Prompt: Describes what you want in the image

- Negative Prompt: Describes what you don't want

Basic Setup:

- Add two CLIP Text Encode nodes to your workflow

- Connect the CLIP output from Load Checkpoint to both nodes

- Type your positive prompt in the first node

- Type your negative prompt in the second node (like "blurry, low quality")

Prompt Writing Tips:

- Keep prompts simple and descriptive

- Use commas to separate different elements

- Start with basic descriptions before adding style elements

3. Empty Latent Image - Setting Your Canvas Size

What It Does: Creates a blank "canvas" in the AI's mathematical space (called latent space) where your image will be generated. Think of it as setting your image dimensions.

Key Settings:

- Width & Height: Your final image size in pixels

- Batch Size: How many images to generate at once

Recommended Sizes for Beginners:

- 512x512: Fast generation, good for testing

- 768x768: Better quality, slightly slower

- 1024x1024: High quality, requires more VRAM

Connection: The latent output connects to the KSampler node as the starting point for image generation.
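To make "latent space" concrete: for SD 1.5 and SDXL, the Empty Latent Image node creates an all-zero tensor that is 8 times smaller than the image in each direction, with 4 channels. The sketch below only computes that shape; the function name is hypothetical and not part of ComfyUI, and the 4-channel default is an assumption that does not hold for newer models such as FLUX.

```python
def empty_latent_shape(width: int, height: int, batch_size: int = 1,
                       channels: int = 4) -> tuple:
    """Shape (batch, channels, height, width) of a blank latent.

    Assumes an SD 1.5/SDXL-style VAE: 4 latent channels and an 8x downscale
    in each direction. Newer models such as FLUX use 16 channels instead.
    """
    if width % 8 or height % 8:
        raise ValueError("width and height must be multiples of 8")
    return (batch_size, channels, height // 8, width // 8)


# A 512x512 image becomes a 4 x 64 x 64 latent.
print(empty_latent_shape(512, 512))
```

That is 16,384 values instead of the 786,432 in a 512x512 RGB image, a 48x reduction, which is a big part of why generating in latent space is practical on consumer GPUs.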

4. KSampler - The Heart of Image Generation

What It Does: KSampler is where the magic happens. It takes your text prompt, the blank canvas, and the AI model, then gradually transforms random noise into your desired image through a denoising process.

Essential Settings:

| Setting | Recommended Value | What It Does |
|---|---|---|
| Steps | 20-30 | How many denoising steps to run |
| CFG Scale | 7-12 | How closely to follow your prompt |
| Sampler Name | euler_ancestral or dpmpp_2m | The sampling algorithm |
| Scheduler | normal | How the noise levels are spaced across steps |
| Seed | Any number; set control_after_generate to "randomize" for a new seed each run | Starting point for the initial noise |

To learn more about seeds and how to get reproducible results, read our comprehensive seed management guide.

Required Connections:

- MODEL input: From Load Checkpoint

- Positive input: From positive CLIP Text Encode

- Negative input: From negative CLIP Text Encode

- Latent image: From Empty Latent Image

5. VAE Decode - Making Images Visible

What It Does: Converts the AI's mathematical representation (latent space) back into a regular image you can see and save. Without this step, you'd only have data the AI understands.

Simple Setup:

- Add a VAE Decode node

- Connect VAE input to the VAE output from Load Checkpoint

- Connect samples input to the LATENT output from KSampler

- Connect the IMAGE output to a Save Image (or Preview Image) node to see and save your final image

Building Your First Basic Workflow

Step-by-Step Workflow Creation

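Step 1: Add Your Nodes. Right-click on the empty canvas and add these nodes:

- Load Checkpoint

- CLIP Text Encode (add two of these)

- Empty Latent Image

- KSampler

- VAE Decode

- Save Image (displays and saves the result; ComfyUI will not run a workflow that has no output node like this)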

Step 2: Configure Your Model. Click the Load Checkpoint node and select a Stable Diffusion model from the dropdown menu.

Step 3: Set Your Canvas Size. In the Empty Latent Image node, set width and height to 512 (a good starting size for beginners using SD 1.5; use 1024 for SDXL).

Step 4: Write Your Prompts

- First CLIP Text Encode: "a beautiful sunset over mountains, photorealistic"

- Second CLIP Text Encode: "blurry, low quality, distorted"

Step 5: Connect Everything. Make these connections by dragging from output dots to input dots:

| From Node | Output | To Node | Input |
|---|---|---|---|
| Load Checkpoint | MODEL | KSampler | model |
| Load Checkpoint | CLIP | CLIP Text Encode #1 | clip |
| Load Checkpoint | CLIP | CLIP Text Encode #2 | clip |
| Load Checkpoint | VAE | VAE Decode | vae |
| CLIP Text Encode #1 | CONDITIONING | KSampler | positive |
| CLIP Text Encode #2 | CONDITIONING | KSampler | negative |
| Empty Latent Image | LATENT | KSampler | latent_image |
| KSampler | LATENT | VAE Decode | samples |
| VAE Decode | IMAGE | Save Image | images |

Step 6: Generate Your Image. Click "Queue Prompt" (labeled "Queue" or "Run" in newer versions of the interface) and watch your first ComfyUI image generate!
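The same graph can also be run from a script. A local ComfyUI instance serves an HTTP API (port 8188 by default), and its /prompt endpoint accepts a workflow in "API format": each node is keyed by an id, and every connection is written as [source_node_id, output_index]. The sketch below rebuilds the connection table in that format, including the Save Image output node. The helper names and the checkpoint file name are placeholders of our own; compare against a workflow exported with your ComfyUI version's API export option before relying on it.

```python
import json
import urllib.request


def build_basic_workflow(ckpt_name, positive, negative, width=512, height=512,
                         steps=25, cfg=8.0, seed=0,
                         sampler_name="euler_ancestral", scheduler="normal"):
    """Return the basic text-to-image graph in ComfyUI's API (prompt) format."""
    return {
        # Load Checkpoint outputs: 0 = MODEL, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": ckpt_name}},
        "2": {"class_type": "CLIPTextEncode",            # positive prompt
              "inputs": {"text": positive, "clip": ["1", 1]}},
        "3": {"class_type": "CLIPTextEncode",            # negative prompt
              "inputs": {"text": negative, "clip": ["1", 1]}},
        "4": {"class_type": "EmptyLatentImage",
              "inputs": {"width": width, "height": height, "batch_size": 1}},
        "5": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["2", 0],
                         "negative": ["3", 0], "latent_image": ["4", 0],
                         "seed": seed, "steps": steps, "cfg": cfg,
                         "sampler_name": sampler_name,
                         "scheduler": scheduler, "denoise": 1.0}},
        "6": {"class_type": "VAEDecode",
              "inputs": {"samples": ["5", 0], "vae": ["1", 2]}},
        "7": {"class_type": "SaveImage",                 # the output node
              "inputs": {"images": ["6", 0], "filename_prefix": "ComfyUI"}},
    }


def queue_prompt(workflow, server="http://127.0.0.1:8188"):
    """POST a workflow to a running ComfyUI server and return its JSON reply."""
    data = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(f"{server}/prompt", data=data,
                                     headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

For example, `queue_prompt(build_basic_workflow("your_model.safetensors", "a beautiful sunset over mountains, photorealistic", "blurry, low quality, distorted"))` queues the same job as the button, assuming ComfyUI is running locally and the file name matches a checkpoint you have installed.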

Essential Node Parameters Explained

KSampler Settings Deep Dive

Steps (20-30 recommended): More steps = higher quality but slower generation. Start with 20 for testing, increase to 30 for final images.

CFG Scale (7-12 recommended): Controls prompt adherence. Lower values (7-8) = more creative freedom. Higher values (10-12) = stricter prompt following.
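CFG is easier to reason about as arithmetic. At each step, KSampler gets two predictions from the model, one conditioned on the positive prompt and one on the negative prompt, and combines them with the classifier-free guidance formula. This simplified sketch works on plain lists of numbers rather than the tensors the real sampler uses; the function name is hypothetical.

```python
def apply_cfg(positive_pred, negative_pred, cfg_scale):
    """Classifier-free guidance on plain lists of numbers.

    The prediction is pushed away from the negative-prompt prediction and
    toward the positive one, scaled by cfg_scale.
    """
    return [neg + cfg_scale * (pos - neg)
            for pos, neg in zip(positive_pred, negative_pred)]


print(apply_cfg([1.0, 2.0], [0.0, 1.0], 1.0))  # [1.0, 2.0]: positive prediction only
print(apply_cfg([1.0, 2.0], [0.0, 1.0], 7.0))  # [7.0, 8.0]: difference amplified 7x
```

At a scale of 1 the result is just the positive prediction. Larger values amplify the difference between the two prompts, which is why very high CFG tends to produce oversaturated or distorted images.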

Sampler Methods:

- euler_ancestral (often called "Euler a"): Fast, good quality, great for beginners

- dpmpp_2m: Slightly better quality, minimal speed difference

- ddim: Older method, still reliable

Scheduler Options:

- normal: Standard spacing, works for most cases

- karras: Spaces the steps more closely at low noise levels, sometimes giving better results. For a deep dive into how schedulers affect your images, check out our Karras scheduler explanation guide.
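The karras option follows the noise schedule from Karras et al. (2022): it interpolates between the highest and lowest noise levels in a warped space, so steps bunch up near the low-noise end. A minimal sketch, assuming rho = 7 and sigma_min ≈ 0.0292 / sigma_max ≈ 14.6146 (approximate values commonly quoted for SD 1.5); ComfyUI's own implementation may differ in details.

```python
def karras_sigmas(steps, sigma_min=0.0292, sigma_max=14.6146, rho=7.0):
    """Noise levels (sigmas) for `steps` steps using Karras-style spacing.

    Interpolates linearly in sigma**(1/rho) space, then raises back to the
    power rho, which packs more steps near sigma_min (low noise).
    """
    if steps < 2:
        raise ValueError("need at least 2 steps")
    lo = sigma_min ** (1 / rho)
    hi = sigma_max ** (1 / rho)
    sigmas = [(hi + i / (steps - 1) * (lo - hi)) ** rho for i in range(steps)]
    return sigmas + [0.0]  # the final step ends on a fully denoised image


for sigma in karras_sigmas(10):
    print(round(sigma, 3))
```

Printing the values shows large jumps between the early, high-noise steps and small increments near the end, which is the different step spacing described above.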

Common Parameter Combinations

| Image Type | Steps | CFG | Sampler | Best For |

|---|---|---|---|---|

| Quick Test | 15 | 7 | euler_a | Rapid iteration |

| Balanced Quality | 25 | 8 | dpmpp_2m | General use |

| High Quality | 30 | 10 | dpmpp_2m | Final outputs |

| Creative Freedom | 20 | 6 | euler_a | Artistic exploration |

Working with Different Image Sizes

Resolution Guidelines

Standard Sizes for Different Models:

| Model Type | Recommended Sizes | VRAM Usage | Generation Time |
|---|---|---|---|
| SD 1.5 | 512x512, 512x768 | Low | Fast |
| SDXL | 1024x1024, 768x1344 | Medium | Moderate |
| FLUX | 1024x1024, 896x1152 | High | Slower |

Aspect Ratio Tips:

- Square (1:1): 512x512, 1024x1024

- Portrait (2:3 and 3:4): 512x768 (2:3), 768x1024 (3:4)

- Landscape (3:2 and 4:3): 768x512 (3:2), 1024x768 (4:3)

- Widescreen (about 16:9): 896x512, 1024x576
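When you need a size the list doesn't cover, you can derive one from a pixel budget: keep width x height near your model's native pixel count (about 512x512 for SD 1.5, 1024x1024 for SDXL) and round both sides. The helper below is a hypothetical sketch; rounding to multiples of 64 is a common convention we assume here, while ComfyUI itself only requires multiples of 8.

```python
import math


def dims_for_aspect(ratio_w, ratio_h, target_pixels, multiple=64):
    """Pick a width and height with roughly the requested aspect ratio and
    roughly target_pixels pixels, each rounded to a multiple of `multiple`."""
    aspect = ratio_w / ratio_h
    width = math.sqrt(target_pixels * aspect)
    height = width / aspect
    return (round(width / multiple) * multiple,
            round(height / multiple) * multiple)


print(dims_for_aspect(1, 1, 1024 * 1024))   # (1024, 1024)
print(dims_for_aspect(16, 9, 1024 * 1024))  # (1344, 768)
```

Because both sides are rounded, the result is close to, not exactly, the requested ratio and pixel count; 1344x768 is a widescreen size often used with SDXL.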

Understanding Node Connections

Connection Types and Colors

Visual Connection Guide:

| Connection Color (default theme) | Data Type | Common Use |
|---|---|---|
| Purple (lavender) | MODEL | AI generation engine |
| Yellow | CLIP | Text processing |
| Red | VAE | Image encoding/decoding |
| Orange | CONDITIONING | Processed prompts |
| Pink | LATENT | AI's working space |
| Blue | IMAGE | Final visible images |

Connection Rules

What Can Connect:

- Same color outputs and inputs can connect

- One output can connect to multiple inputs

- Each input accepts only one connection
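These rules boil down to two checks: the data types must match, and an input can have at most one incoming link, while an output may feed any number of inputs. Here is a hypothetical validator that applies those rules to a list of links; it illustrates the rules rather than reproducing ComfyUI's code, and it ignores special cases such as nodes that accept any type.

```python
def validate_links(links):
    """Check links given as (source_type, target_node, target_input, expected_type).

    Returns a list of problems; an empty list means every link follows the rules.
    """
    problems = []
    connected = set()
    for source_type, node, input_name, expected_type in links:
        if source_type != expected_type:  # rule 1: data types must match
            problems.append(f"{node}.{input_name}: expects {expected_type}, got {source_type}")
        if (node, input_name) in connected:  # rule 2: one link per input
            problems.append(f"{node}.{input_name}: more than one connection")
        connected.add((node, input_name))
    return problems


links = [
    ("CLIP", "CLIP Text Encode #1", "clip", "CLIP"),
    ("CLIP", "CLIP Text Encode #2", "clip", "CLIP"),  # one output, two inputs: allowed
    ("LATENT", "VAE Decode", "vae", "VAE"),           # wrong data type
]
print(validate_links(links))  # ['VAE Decode.vae: expects VAE, got LATENT']
```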

Common Connection Mistakes:

- Trying to connect different colored connections

- Forgetting to connect all required inputs

- Connecting outputs to outputs (impossible)

If you're struggling with errors, check out our 10 common ComfyUI beginner mistakes guide for complete troubleshooting solutions.

Troubleshooting Common Beginner Issues

"Missing Input" Errors

Problem: Red text showing missing connections. Solution: Check that all required inputs (dots on the left) have connections from appropriate outputs.


Required Connections Checklist:

- KSampler needs: model, positive, negative, latent_image

- VAE Decode needs: vae, samples

- CLIP Text Encode needs: clip
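The checklist can be automated for workflows saved in ComfyUI's API format, where each node lists its class name and its inputs. The table and function below are hypothetical; they cover only the connection inputs from the checklist (widget values such as prompt text or seed are left out), and ComfyUI runs its own, more complete validation when you queue a prompt.

```python
# Connection inputs each node needs, from the checklist above.
REQUIRED_LINKS = {
    "KSampler": {"model", "positive", "negative", "latent_image"},
    "VAEDecode": {"vae", "samples"},
    "CLIPTextEncode": {"clip"},
}


def missing_inputs(workflow):
    """Map node id -> sorted list of required inputs that are absent."""
    report = {}
    for node_id, node in workflow.items():
        required = REQUIRED_LINKS.get(node["class_type"], set())
        absent = sorted(required - set(node.get("inputs", {})))
        if absent:
            report[node_id] = absent
    return report


workflow = {
    "5": {"class_type": "KSampler",
          "inputs": {"model": ["1", 0], "positive": ["2", 0],
                     "latent_image": ["4", 0]}},
    "6": {"class_type": "VAEDecode", "inputs": {"samples": ["5", 0]}},
}
print(missing_inputs(workflow))  # {'5': ['negative'], '6': ['vae']}
```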

Black or Corrupted Images

Problem: Generated images appear black or distorted. Solution:

- Verify VAE Decode is connected properly

- Check that you're using compatible model/VAE combinations

- Ensure image size matches model requirements

Out of Memory Errors

Problem: Generation fails with CUDA/memory errors. Solution:

- Reduce image dimensions (try 512x512)

- Lower batch size to 1

- Restart ComfyUI to clear memory

- Close other GPU-intensive applications

Slow Generation Speed

Performance Optimization Tips:

- Use smaller image sizes for testing

- Reduce number of steps for quick iterations

- Choose efficient samplers (euler_ancestral, dpmpp_2m)

- Generate one image at a time initially

Practical Workflow Examples

Basic Portrait Generation

Workflow Setup:

- Load Checkpoint: Realistic model

- Positive Prompt: "professional headshot photo of a person, studio lighting, sharp focus"

- Negative Prompt: "blurry, distorted, low quality, cartoon"

- Size: 512x768 (portrait orientation)

- Steps: 25, CFG: 8

Simple Landscape Creation

Workflow Setup:

- Load Checkpoint: Landscape-focused model

- Positive Prompt: "scenic mountain landscape, golden hour, detailed, photorealistic"

- Negative Prompt: "people, buildings, text, blurry"

- Size: 768x512 (landscape orientation)

- Steps: 30, CFG: 9

Quick Concept Art

Workflow Setup:

- Load Checkpoint: Artistic model

- Positive Prompt: "concept art, fantasy castle, dramatic lighting, detailed"

- Negative Prompt: "photorealistic, blurry, low detail"

- Size: 512x512 (square)

- Steps: 20, CFG: 7

Next Steps After Mastering Basics

Gradual Skill Building

Week 1-2: Master the Essentials

- Practice connecting the 5 basic nodes

- Experiment with different prompts

- Try various image sizes and settings

Week 3-4: Expand Your Toolkit

- Add Preview Image nodes to see intermediate results

- Try different models and understand their strengths

- Experiment with batch generation

Month 2: Explore Intermediate Features

- Learn about different VAE models

- Understand seed control for consistent results

- Explore advanced sampling methods

Building Workflow Libraries

Organization Tips:

- Save successful workflows with descriptive names

- Create templates for different image types

- Document settings that work well for specific models

Workflow Naming Convention:

- "Basic_Portrait_SD15.json"

- "Landscape_SDXL_HighQuality.json"

- "ConceptArt_FLUX_Fast.json"

Choosing Your Learning Path

While mastering ComfyUI's node system provides incredible creative control and deep understanding of AI image generation, it's worth considering your goals and time investment.

ComfyUI Path Benefits:

- Complete control over every generation parameter

- Understanding of how AI image generation actually works

- Ability to create custom workflows for specific needs

- Access to modern models and techniques

Alternative Considerations: For creators focused on results rather than technical mastery, platforms like Apatero.com provide professional-quality AI image generation with the latest models and optimizations, without requiring node-based workflow creation.

The choice depends on whether you want to become a ComfyUI expert or simply create amazing images efficiently.

Advanced Node Techniques for Intermediate Users

Once you've mastered basic node usage, these intermediate techniques expand your capabilities.

Prompt Token Management

CLIP Text Encode has hidden complexity around token limits:

Token Considerations:

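- Standard limit: 77 tokens per chunk, including the start and end tokens, which leaves 75 for your text; ComfyUI encodes longer prompts as several chunks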

- Tokens are subword units, not words

- Common words use fewer tokens

- Unusual terms use more tokens

Long Prompt Strategies:

- Use CLIP Text Encode (SDXL) for extended prompts

- Encode parts of a long prompt in separate CLIP Text Encode nodes and join them with a Conditioning (Concat) node

- Prioritize important terms at prompt start

- Remove redundant or low-impact terms

Understanding tokens helps you craft more effective prompts within model limitations.
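The chunking idea can be sketched on already-tokenized ids: each chunk holds a start token, up to 75 prompt tokens, and an end token, padded to 77. The token ids 49406/49407 are CLIP's start-of-text and end-of-text ids; padding with the end token is an assumption of this sketch, and ComfyUI's real tokenizer also handles prompt weights and other details that are left out here.

```python
BOS, EOS = 49406, 49407  # CLIP start-of-text and end-of-text token ids
CONTEXT = 77             # CLIP text context length, including BOS and EOS


def chunk_tokens(token_ids):
    """Split token ids into 77-token chunks: BOS + up to 75 ids + EOS,
    padded with EOS to full length."""
    usable = CONTEXT - 2
    chunks = []
    for start in range(0, max(len(token_ids), 1), usable):
        chunk = [BOS] + token_ids[start:start + usable] + [EOS]
        chunk += [EOS] * (CONTEXT - len(chunk))  # pad to the fixed length
        chunks.append(chunk)
    return chunks


# Placeholder ids standing in for a 100-token prompt.
chunks = chunk_tokens(list(range(100)))
print(len(chunks), [len(c) for c in chunks])  # 2 [77, 77]
```

A 100-token prompt therefore needs two chunks, and the second one carries only 25 real tokens, which is one reason to keep the most important terms near the start.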


Empty Latent Optimization

Empty Latent Image settings affect more than just resolution:

Batch Size Uses:

- Batch size 1: Single generation for iteration

- Batch size 2-4: Quick variation exploration

- Batch size 8+: Production runs when satisfied

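Memory Impact: Each batch item adds roughly another image's worth of working memory, while the model weights are loaded only once. A batch of 4 at 1024x1024 needs about 4x the generation memory of a single image, though total VRAM use grows by less than 4x.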

Resolution Strategy: Generate at lower resolution for composition testing, then upscale to final resolution. This saves significant time during iteration.

KSampler Advanced Techniques

Beyond basic settings, KSampler offers subtle control:

Scheduler Combinations: Different scheduler/sampler combinations produce different results. Test combinations for your preferred aesthetic:

- Euler a + normal: Smooth, painterly

- DPM++ 2M + karras: Sharp, detailed

- UniPC + simple: Fast, balanced

CFG Scale Interaction: CFG scale interacts with steps and scheduler. Higher CFG needs more steps to avoid artifacts. Lower CFG with fewer steps can produce soft, painterly results.

Seed Management:

- Save seeds of successful generations

- Use same seed with different settings for comparison

- Random seeds for exploration

- Fixed seeds for production consistency
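Seeds work because the sampler's starting noise comes from a pseudo-random generator: the same seed produces the same noise, so with identical settings you generally get the same image. The stand-in below uses Python's standard library; ComfyUI generates its noise with PyTorch, so these numbers will not match ComfyUI's, and the function name is hypothetical.

```python
import random


def initial_noise(seed, size):
    """Deterministic Gaussian noise for a seed (stdlib stand-in for the
    noise tensor a sampler starts from)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same = initial_noise(1234, 4) == initial_noise(1234, 4)
different = initial_noise(1234, 4) != initial_noise(1235, 4)
print(same, different)  # True True
```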

Model Loading Optimization

Load Checkpoint strategy affects workflow performance:

Model Caching: ComfyUI caches loaded models. First generation loads models (slow), subsequent generations reuse cache (fast).

Workflow Design: Design workflows to minimize model switching. Loading a different checkpoint takes time and can evict the previously cached model when memory is tight.

Memory vs Speed: More VRAM allows more caching. Less VRAM means more loading overhead but lower system requirements.

Building on Fundamentals: Common Workflow Patterns

Standard patterns that extend basic nodes into practical workflows.

Img2Img Workflow

Transform existing images using the diffusion process:

Node Additions:

- Load Image node for source image

- VAE Encode to create starting latent

- Use this latent instead of Empty Latent

- Lower denoise in KSampler (0.3-0.7)

This workflow refines existing images rather than generating from scratch.
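In ComfyUI's API format, those node additions amount to a small rewiring. This sketch assumes a text-to-image workflow whose node ids are "1" (Load Checkpoint, VAE on output 2), "4" (Empty Latent Image) and "5" (KSampler); those ids, the new ids "10" and "11", and the helper name are all hypothetical, so adjust them to your own graph.

```python
import copy


def to_img2img(workflow, image_filename, denoise=0.5,
               vae_ref=("1", 2), sampler_id="5", empty_latent_id="4"):
    """Return a copy of an API-format text-to-image workflow rewired for img2img."""
    wf = copy.deepcopy(workflow)
    wf.pop(empty_latent_id, None)  # the source image replaces the blank canvas
    wf["10"] = {"class_type": "LoadImage",  # file in ComfyUI's input folder
                "inputs": {"image": image_filename}}
    wf["11"] = {"class_type": "VAEEncode",  # pixels -> latent
                "inputs": {"pixels": ["10", 0], "vae": list(vae_ref)}}
    sampler = wf[sampler_id]["inputs"]
    sampler["latent_image"] = ["11", 0]
    sampler["denoise"] = denoise  # lower values stay closer to the source image
    return wf
```

Working on a deep copy keeps the original text-to-image workflow intact, so you can keep both versions side by side.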

ControlNet Integration

Add structural control to generations:

Workflow Extension:

- Add Load ControlNet Model and Apply ControlNet nodes (built in; most preprocessors other than Canny require custom nodes)

- Process reference image for control signal

- Inject control signal into generation

- Balance control strength with creativity

ControlNet transforms basic workflows into precise composition tools. Learn more in our ControlNet combinations guide.

LoRA Style Modification

Apply trained styles to your generations:


LoRA Workflow:

- Load LoRA node after Load Checkpoint

- Connect checkpoint outputs through LoRA

- Adjust LoRA strength (0.5-1.0 typical)

- Multiple LoRAs can chain together

LoRAs provide fine-tuned aesthetic control without changing base models.
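Chaining LoRAs means each Load LoRA node takes MODEL and CLIP from the previous one, and whatever sits at the end of the chain feeds KSampler and the CLIP Text Encode nodes. A sketch in ComfyUI's API format, where the node class is LoraLoader; the starting node id 20, the LoRA file names, and the helper name are hypothetical.

```python
def chain_loras(workflow, loras, ckpt_id="1", first_id=20):
    """Add one LoraLoader per (lora_name, strength) pair after the checkpoint
    loader and return the final [node_id, output] refs for MODEL and CLIP."""
    model_ref, clip_ref = [ckpt_id, 0], [ckpt_id, 1]  # Load Checkpoint: 0=MODEL, 1=CLIP
    for offset, (lora_name, strength) in enumerate(loras):
        node_id = str(first_id + offset)  # pick ids your graph doesn't already use
        workflow[node_id] = {
            "class_type": "LoraLoader",
            "inputs": {"model": model_ref, "clip": clip_ref,
                       "lora_name": lora_name,
                       "strength_model": strength,
                       "strength_clip": strength},
        }
        model_ref, clip_ref = [node_id, 0], [node_id, 1]  # LoraLoader: 0=MODEL, 1=CLIP
    return model_ref, clip_ref


workflow = {}
model_ref, clip_ref = chain_loras(workflow, [("style_a.safetensors", 0.8),
                                             ("style_b.safetensors", 0.6)])
print(model_ref, clip_ref)  # ['21', 0] ['21', 1]
```

Point KSampler's model input at the returned MODEL ref and both CLIP Text Encode nodes at the returned CLIP ref; using one strength for model and CLIP is a simplification, since the two can be set separately.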

Inpainting Workflow

Selectively regenerate image regions:

Inpainting Setup:

- Load image with mask

- Use VAE Encode for Inpainting

- Apply appropriate inpainting model

- Control regenerated area with prompts

Inpainting enables precise image editing. See our mask editor guide for professional techniques.
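In ComfyUI's API format, the first two inpainting steps look roughly like this: Load Image provides both the picture (output 0) and a mask derived from its alpha channel (output 1), and VAE Encode for Inpainting turns them into a latent for KSampler. The node ids "30"/"31", the VAE reference, the helper name, and the grow_mask_by value of 6 are example assumptions.

```python
def inpaint_nodes(image_filename, vae_ref=("1", 2), grow_mask_by=6):
    """Return API-format nodes that encode an image plus its mask for
    inpainting, and the [node_id, output] ref of the resulting latent."""
    nodes = {
        "30": {"class_type": "LoadImage",  # outputs: 0 = IMAGE, 1 = MASK
               "inputs": {"image": image_filename}},
        "31": {"class_type": "VAEEncodeForInpaint",
               "inputs": {"pixels": ["30", 0], "mask": ["30", 1],
                          "vae": list(vae_ref),
                          "grow_mask_by": grow_mask_by}},  # expands the mask edge
    }
    return nodes, ["31", 0]
```

Connect the returned latent ref to KSampler's latent_image input in place of Empty Latent Image, and describe what should appear in the masked area in your positive prompt.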

Performance Optimization Fundamentals

Get better performance from basic nodes with these optimizations.

Resolution Efficiency

Resolution dramatically impacts performance:

Performance Guidelines (approximate; absolute times depend on your GPU and model):

- 512x512: ~1 second per step (baseline)

- 768x768: ~2 seconds per step

- 1024x1024: ~4 seconds per step

- 1536x1536: ~9 seconds per step

Optimal Workflow: Generate at efficient resolution, then upscale. Many users generate at 512 or 768 then upscale to 1024 or higher.
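The guideline figures track pixel count: 768x768 has 2.25 times the pixels of 512x512, 1024x1024 four times, and 1536x1536 nine times. A quick estimator, assuming cost scales linearly with pixels times batch size; attention layers grow faster than that at high resolutions, so treat large-size results as a lower bound. The function name is hypothetical.

```python
def relative_cost(width, height, batch_size=1, base=(512, 512)):
    """Rough per-step cost relative to one 512x512 image, assuming cost grows
    with total pixels processed (width x height x batch size)."""
    return batch_size * width * height / (base[0] * base[1])


for w, h in [(512, 512), (768, 768), (1024, 1024), (1536, 1536)]:
    print(f"{w}x{h}: {relative_cost(w, h):.2f}x")
```

The same arithmetic explains the upscaling strategy: composition tests at 512x512 cost about a quarter of what they would at 1024x1024.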

Step Count Optimization

More steps isn't always better:

Step Guidelines by Sampler:

- Euler a: 20-30 steps optimal

- DPM++ 2M: 25-35 steps optimal

- DDIM: 40-50 steps for quality

Beyond optimal points, additional steps add time without quality improvement.

VAE Performance

VAE encoding/decoding adds overhead:

VAE Optimization:

- Standard VAE: Good quality, moderate speed

- VAE tiling: Enables higher resolutions

- VAE slicing: Reduces VRAM for batch processing

For comprehensive performance optimization, many techniques build on understanding these fundamental node behaviors.

Troubleshooting Basic Node Issues

Common problems with essential nodes and their solutions.

Connection Errors

When nodes won't connect:

Diagnose Connection Type:

- Colors must match (purple to purple, yellow to yellow)

- Check data type compatibility

- Verify node isn't expecting different data

Common Fixes:

- Use appropriate converter nodes

- Check node documentation for expected inputs

- Verify correct node version

Generation Failures

When generation produces errors:

KSampler Errors:

- Check model/CLIP/VAE compatibility

- Verify resolution divisible by 8

- Reduce batch size for VRAM issues

- Update ComfyUI for latest fixes

Model Loading Errors:

- Verify model file integrity

- Check sufficient storage space

- Confirm model format compatibility

Quality Issues

When generation works but results are poor:

Prompt Issues:

- Add quality terms (masterpiece, high quality)

- Use negative prompts for common issues

- Balance prompt specificity

Settings Issues:

- Increase steps for details

- Adjust CFG for prompt adherence

- Try different sampler/scheduler

Extending Your Knowledge

Resources for continued learning beyond basics.

Next Steps

After mastering these nodes:

- ControlNet - Precise composition control

- Upscaling - High-resolution output

- Face Enhancement - Improved facial details

- Video Generation - Using Wan 2.2

Each extension builds on these fundamental nodes.

Community Resources

Connect with ComfyUI community:

Learning Resources:

- Official ComfyUI documentation

- Community workflow libraries

- Tutorial video channels

- Discord communities

Workflow Libraries

Study existing workflows:

Benefits:

- Learn from working examples

- Understand professional patterns

- Discover new techniques

- Build on tested foundations

Conclusion and Your ComfyUI Journey

Mastering these five essential nodes - Load Checkpoint, CLIP Text Encode, Empty Latent Image, KSampler, and VAE Decode - gives you the foundation to create any AI image you can imagine. Every advanced ComfyUI workflow builds upon these same basic building blocks.

Your Learning Roadmap:

- This Week: Master connecting the 5 essential nodes

- Next Week: Experiment with different models and prompts

- Month 1: Build confidence with various image types and sizes

- Month 2: Explore intermediate nodes and workflow optimization

- Month 3+: Create custom workflows for your specific creative needs

Key Takeaways:

- Start simple with the 5 essential nodes

- Focus on understanding connections before adding complexity

- Practice with different prompts and settings

- Save workflows that work well for future use

- Be patient - ComfyUI mastery comes through hands-on experience

Immediate Action Steps:

- Download ComfyUI and place a Stable Diffusion checkpoint in the ComfyUI/models/checkpoints folder

- Create your first workflow using the 5 essential nodes

- Generate 10 different images by changing only the prompts

- Experiment with different image sizes and KSampler settings

- Save your first successful workflow as a template

Remember, every ComfyUI expert started with these same basic nodes. The complexity that seems overwhelming today will become second nature as you practice. Whether you continue building advanced ComfyUI expertise or choose streamlined alternatives like Apatero.com, understanding these fundamentals gives you the knowledge to make informed decisions about your AI image generation workflow.

Your creative journey with AI image generation starts with these five nodes - master them, and you've mastered the foundation of limitless visual creativity.

Frequently Asked Questions About ComfyUI Essential Nodes

What are the 5 essential ComfyUI nodes for beginners?

The 5 essential nodes are Load Checkpoint, CLIP Text Encode, Empty Latent Image, KSampler, and VAE Decode. These nodes form the complete pipeline for generating AI images.

How do I connect ComfyUI nodes for basic image generation?

Connect Load Checkpoint's MODEL output to KSampler's model input, its CLIP output to both CLIP Text Encode nodes, and its VAE output to VAE Decode. Connect the two CLIP Text Encode outputs to KSampler's positive and negative inputs, Empty Latent Image to KSampler's latent_image, KSampler's output to VAE Decode's samples input, and VAE Decode's IMAGE output to a Save Image node.

What KSampler settings should I use as a beginner?

Use 20-30 steps, CFG scale 7-12, the euler_ancestral or dpmpp_2m sampler, the normal scheduler, and control_after_generate set to "randomize" for a new random seed each run. These settings provide balanced quality and speed for learning.

Why is my ComfyUI workflow showing missing input errors?

Missing input errors occur when required connections aren't made. Check that KSampler has connections for model, positive, negative, and latent_image. VAE Decode needs vae and samples connections. CLIP Text Encode needs clip connection from Load Checkpoint.

What image size should I use for first ComfyUI generations?

Start with 512x512 pixels for fast generation and testing. Once comfortable, try 768x768 for better quality. SD 1.5 models work well at 512x512-768x768, SDXL at 1024x1024, and FLUX at 1024x1024 or higher.

How long does it take to generate an image with ComfyUI?

Generation time depends on hardware and settings. With RTX 3060, expect 15-30 seconds for 512x512 images at 25 steps. RTX 4090 generates the same in 4-8 seconds. Larger images and more steps increase generation time proportionally.

Can I save my ComfyUI workflow for later use?

Yes. Save workflows from ComfyUI's menu (the Workflow menu in newer versions of the interface). Workflows save as .json files containing all node configurations and connections. Load a saved workflow from the same menu, or drag the .json file onto the canvas, to reuse exact settings and configurations.

What's the difference between positive and negative prompts?

Positive prompts describe what you want in the image (subject, style, lighting, details). Negative prompts describe what you don't want (blurry, low quality, distorted features). Both help guide the AI toward your desired result by providing directional guidance.

Do I need different nodes for different AI models?

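Mostly no. The same 5 essential nodes work with SD 1.5, SDXL, and single-file FLUX checkpoints, and the workflow structure stays the same; only the loaded model and optimal image sizes change. Many FLUX releases, however, ship the model, text encoders, and VAE as separate files, which are loaded with dedicated loader nodes instead of Load Checkpoint.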

How do I fix black or corrupted output images?

Black or corrupted images usually indicate VAE Decode connection issues or incompatible model/VAE combinations. Verify VAE Decode has connections from Load Checkpoint's VAE output and KSampler's LATENT output. Try using a different VAE model if problems persist.
