Low VRAM? No Problem: Running ComfyUI on Budget Hardware

Practical guide to running ComfyUI with limited GPU memory. Learn optimization tricks, CPU fallback options, cloud alternatives, and hardware upgrade...

Staring at "CUDA out of memory" errors while everyone else seems to generate stunning AI art effortlessly? You're not alone, and the solution might be simpler than upgrading to an expensive RTX 4090. The right low-VRAM optimizations can turn budget hardware into a capable AI art machine.

Direct Answer: ComfyUI runs on 4GB cards with the --lowvram flag and SD 1.5 models (3.5GB) at 512x512 resolution. On 6GB cards, use the --normalvram --force-fp16 flags with pruned SDXL models (4.7GB) at 768x768. On 8GB cards, run full SDXL with --preview-method auto --force-fp16. Together, these optimizations reduce VRAM usage by 40-60% versus default settings.

- 4GB Cards: Use --lowvram flag, SD 1.5 models (3.5GB), 512x512 resolution (1.2GB VRAM)

- 6GB Cards: Use --normalvram --force-fp16, pruned SDXL (4.7GB), 768x768 resolution

- 8GB Cards: Use --preview-method auto --force-fp16, full SDXL possible with optimization

- CPU Mode: 10-50x slower but works (8-15 minutes for 512x512 on modern CPUs)

- Best Budget GPU: GTX 1080 Ti (11GB) used, RTX 2070 Super (8GB), RTX 3060 (12GB) new

- VRAM Savings: Optimization flags reduce usage by 40-60% versus default settings

- LoRA Strategy: Base model (3.5GB) + LoRA (0.5GB) = 4GB total vs large model (7GB)

For a complete troubleshooting guide to this error, check our 10 common ComfyUI beginner mistakes. Picture this scenario. You've downloaded ComfyUI, excited to create your first masterpiece, only to watch it crash repeatedly. Meanwhile, platforms like Apatero.com deliver professional results without any hardware requirements, but you're determined to make your current setup work.

Can You Really Run ComfyUI on 4GB-8GB VRAM?

The Reality Check About VRAM Requirements

ComfyUI's memory usage varies dramatically based on model size and image resolution. Understanding these requirements helps you set realistic expectations and choose the right optimization strategy.

- Stable Diffusion 1.5: 3.5GB base + 2GB for workflow overhead = 5.5GB minimum

- SDXL Models: 6.9GB base + 3GB overhead = 10GB minimum

- ControlNet Addition: +2-4GB depending on preprocessor

- Multiple LoRAs: +500MB-1GB per LoRA model

The good news? These numbers represent worst-case scenarios. Smart optimization can reduce requirements by 40-60%, making ComfyUI viable on cards with just 4GB of VRAM.
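
The estimates above can be turned into a quick budget check. This is a minimal sketch that only restates the article's figures; the function names (`estimate_vram_gb`, `optimized_range_gb`) and the default of 0.5GB per LoRA are illustrative assumptions, not part of ComfyUI.

```python
# Rough VRAM budget built from the article's estimates (all values in GB).
BASE_GB = {"sd15": 3.5, "sdxl": 6.9}        # model weights
OVERHEAD_GB = {"sd15": 2.0, "sdxl": 3.0}    # workflow overhead


def estimate_vram_gb(model, controlnet_gb=0.0, lora_count=0, gb_per_lora=0.5):
    """Return an unoptimized, worst-case VRAM estimate in GB."""
    return (BASE_GB[model] + OVERHEAD_GB[model]
            + controlnet_gb + lora_count * gb_per_lora)


def optimized_range_gb(estimate_gb, min_saving=0.40, max_saving=0.60):
    """Apply the 40-60% savings range; returns (best_case, worst_case)."""
    return estimate_gb * (1 - max_saving), estimate_gb * (1 - min_saving)


if __name__ == "__main__":
    for model in ("sd15", "sdxl"):
        raw = estimate_vram_gb(model)
        low, high = optimized_range_gb(raw)
        print(f"{model}: {raw:.1f} GB unoptimized, {low:.1f}-{high:.1f} GB optimized")
```

Treat the result as a planning number only; actual usage depends on the workflow, the resolution, and the launch flags.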

VRAM vs System RAM vs CPU Performance

Most tutorials focus solely on GPU memory, but ComfyUI can intelligently offload processing when configured correctly. Here's how different components impact performance.

| Component | Role | Impact on Speed | Notes |
|---|---|---|---|
| VRAM | Active model storage | 10x faster than system RAM | Fastest access |
| System RAM | Model swapping buffer | 3x faster than storage | Medium access |
| CPU | Fallback processing | 50-100x slower than GPU | Uses system RAM |
| Storage | Model storage | Only during loading | Permanent storage |

Essential Launch Parameters for ComfyUI Low VRAM Systems

Launch parameters tell ComfyUI how to manage memory before it starts loading models. On a budget GPU, the right flags can mean the difference between working and crashing.

For 4GB VRAM Cards (GTX 1650, RTX 3050 Laptop)

python main.py --lowvram --preview-method auto

- --lowvram: Keeps only active parts in VRAM, swaps rest to system RAM

- --preview-method auto: Uses efficient preview generation during sampling

For 6GB VRAM Cards (GTX 1060, RTX 2060)

python main.py --normalvram --preview-method auto --force-fp16

The --force-fp16 flag stores weights in 16-bit precision, roughly halving model memory compared with FP32 at minimal quality loss, which suits this VRAM range.

For 8GB VRAM Cards (RTX 3070, RTX 4060)

python main.py --preview-method auto --force-fp16

8GB cards can handle most workflows but benefit from FP16 precision for complex multi-model setups.
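
The three tiers above can be sketched as a small lookup. The helper names (`suggest_launch_flags`, `launch_command`) are hypothetical, and the cut-offs (up to 4GB, up to 6GB, anything larger) are an assumption about how to round in-between cards; the flags themselves are the ones listed in this section.

```python
def suggest_launch_flags(vram_gb):
    """Map a VRAM size in GB to the launch flags recommended in this section.

    The tier boundaries are an assumption: cards between tiers are
    rounded up to the next tier listed in the article.
    """
    if vram_gb <= 4:
        return ["--lowvram", "--preview-method", "auto"]
    if vram_gb <= 6:
        return ["--normalvram", "--preview-method", "auto", "--force-fp16"]
    return ["--preview-method", "auto", "--force-fp16"]


def launch_command(vram_gb):
    """Build the full command line as a single string."""
    return " ".join(["python", "main.py", *suggest_launch_flags(vram_gb)])


if __name__ == "__main__":
    for size in (4, 6, 8):
        print(f"{size}GB: {launch_command(size)}")
```

If a workflow still runs out of memory, stepping down one tier (for example, using the 4GB flags on a 6GB card) is a reasonable next thing to try.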

Advanced Launch Parameters

Aggressive memory management:

python main.py --lowvram --cpu-vae --preview-method none

CPU fallback for parts of the pipeline:

python main.py --normalvram --cpu-vae

Maximum compatibility mode:

python main.py --cpu --preview-method none

Note that each additional parameter trades speed for compatibility.

Model Selection Strategy for ComfyUI Low VRAM

Not all AI models are created equal when it comes to memory efficiency. Strategic model selection for ComfyUI low VRAM systems can dramatically improve your experience.

SD 1.5 Models (Memory Champions)

Stable Diffusion 1.5 models require only 3.5GB VRAM and generate excellent results. Top picks for low VRAM systems include the following options.

- DreamShaper 8: Versatile, photorealistic results with 3.97GB model size

- Realistic Vision 5.1: Portrait specialist at 3.97GB

- AbsoluteReality 1.8.1: Balanced style at 3.97GB

- ChilloutMix: Anime-focused at 3.76GB

Pruned SDXL Models (Best Compromise)

Full SDXL models weigh 6.9GB, but pruned versions reduce this to 4.7GB while maintaining most quality benefits.

- SDXL Base (Pruned): 4.7GB with full 1024x1024 capability

- Juggernaut XL (Pruned): 4.7GB specialized for fantasy art

- RealStock SDXL (Pruned): 4.7GB optimized for photography

Memory-Efficient LoRA Strategy

LoRA models add style and concept control with minimal memory overhead. Each LoRA adds only 500MB-1GB, making them perfect for low VRAM customization.

Base Model (3.5GB) + LoRA (0.5GB) = 4GB total vs Large Custom Model (7GB) = 7GB total

Image Resolution Optimization

Resolution has the biggest impact on VRAM usage during generation. Strategic resolution choices let you create large images without exceeding memory limits.

The 512x512 Sweet Spot

For SD 1.5 models, 512x512 represents the optimal balance of quality and memory usage.

| Resolution | VRAM Usage | Generation Time | Quality |
|---|---|---|---|
| 512x512 | 1.2GB | 15 seconds | Excellent |
| 768x768 | 2.8GB | 35 seconds | Better details |
| 1024x1024 | 5.1GB | 65 seconds | SD 1.5 struggles |
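
One way to read the table is to compare how VRAM grows against how the pixel count grows. The sketch below only uses the three table rows; the names `pixel_ratio` and `vram_ratio` are illustrative.

```python
# VRAM figures (GB) copied from the table above, keyed by image side length.
TABLE_VRAM_GB = {512: 1.2, 768: 2.8, 1024: 5.1}


def pixel_ratio(side, base_side=512):
    """How many times more pixels a square image has than the base size."""
    return (side * side) / (base_side * base_side)


def vram_ratio(side, base_side=512):
    """How many times more VRAM the table reports relative to the base size."""
    return TABLE_VRAM_GB[side] / TABLE_VRAM_GB[base_side]


if __name__ == "__main__":
    for side in (768, 1024):
        print(f"{side}x{side}: {pixel_ratio(side):.2f}x pixels, "
              f"{vram_ratio(side):.2f}x VRAM")
```

In these figures VRAM grows slightly faster than the pixel count, which is why dropping the resolution is such an effective first fix.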

Generation Plus Upscaling Strategy

Instead of generating large images directly, create at 512x512 then upscale using AI tools. This approach uses less VRAM and often produces superior results. For more on upscaling methods, read our AI image upscaling comparison.

Two-Stage Generation Workflow

- Stage 1: Generate at 512x512 with your limited VRAM

- Stage 2: Use Real-ESRGAN or ESRGAN for 2x-4x upscaling

- Stage 3: Optional img2img pass for detail refinement

Memory usage stays under 4GB throughout the entire process.

CPU Mode Setup and Optimization for ComfyUI Low VRAM

When VRAM limitations become insurmountable, CPU mode offers a viable alternative. It is much slower, but modern CPUs can still generate images in usable timeframes when GPU-side optimizations aren't enough.

CPU Mode Performance Reality Check

CPU generation takes significantly longer but works reliably on any system with 16GB+ RAM.

| Hardware | 512x512 Time | 768x768 Time | Best Use Case |
|---|---|---|---|
| Intel i7-12700K | 8-12 minutes | 18-25 minutes | Patient creation |
| AMD Ryzen 7 5800X | 10-15 minutes | 22-30 minutes | Overnight batches |
| M1 Pro Mac | 5-8 minutes | 12-18 minutes | Surprisingly good |
| Older CPUs (i5-8400) | 20-35 minutes | 45-60 minutes | Last resort |

Optimizing CPU Performance

Maximum CPU optimization:

python main.py --cpu --preview-method none --disable-xformers

- Close all other applications: Free up system resources

- Use smaller models: SD 1.5 models work better than SDXL

- Reduce steps: 15-20 steps instead of 30-50 for acceptable quality

- Generate batches overnight: Queue multiple images for morning
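
To plan an overnight queue, it helps to estimate how many images fit in the available time. This is a back-of-the-envelope sketch; `images_per_session` is a hypothetical helper, and the per-image times should come from your own measurements or the CPU table above.

```python
def images_per_session(hours, minutes_per_image):
    """Return how many images finish within the given number of hours."""
    if minutes_per_image <= 0:
        raise ValueError("minutes_per_image must be positive")
    return int(hours * 60 // minutes_per_image)


if __name__ == "__main__":
    # An 8-hour night at 10 and 15 minutes per image.
    for minutes in (10, 15):
        print(f"{minutes} min/image: {images_per_session(8, minutes)} images")
```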

Apple Silicon Advantage

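M1 and M2 Macs perform surprisingly well without a discrete GPU, thanks to their unified memory architecture and optimized PyTorch builds. Launched without --cpu, ComfyUI can run on the integrated GPU through PyTorch's Metal (MPS) backend.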

Optimized for Apple Silicon:

python main.py --force-fp16 --preview-method auto

The unified memory allows M1/M2 systems to use 16GB+ for AI generation without traditional VRAM limitations.

Cloud and Remote Solutions

Sometimes the best solution for low VRAM limitations is avoiding them entirely through cloud computing. Several options provide powerful GPU access without hardware investment.

Free Cloud Options

Free GPU Access:

- Google Colab (Free Tier): 15GB VRAM Tesla T4, 12 hours session limit

- Kaggle Kernels: 16GB VRAM P100, 30 hours/week limit

- GitHub Codespaces: 2-core CPU only, better than nothing

Paid Cloud Services

For consistent access, paid services offer better performance and reliability than free tiers.

| Service | GPU Options | Hourly Cost | Best For |
|---|---|---|---|
| RunPod | RTX 4090, A100 | $0.50-2.00/hr | Power users |
| Vast.ai | Various GPUs | $0.20-1.50/hr | Budget conscious |
| Lambda Labs | A100, H100 | $1.10-4.90/hr | Professional work |
| Paperspace | RTX 5000, A6000 | $0.76-2.30/hr | Consistent access |

For detailed RunPod setup instructions, see our ComfyUI Docker setup guide.

Setup Guide for Google Colab

Free ComfyUI on Colab (Step by Step)

- Open Google Colab

- Create new notebook with GPU runtime (Runtime → Change runtime type → GPU)

- Install ComfyUI with this code block

!git clone https://github.com/comfyanonymous/ComfyUI

%cd ComfyUI

!pip install -r requirements.txt

!python main.py --listen

- Expose port 8188 through a tunnel such as cloudflared (ComfyUI does not create a public URL on its own), then open the tunnel URL

- Upload models through the Colab interface or download directly

Hardware Upgrade Strategies

When optimization reaches its limits, strategic hardware upgrades provide the best long-term solution. Smart shopping can dramatically improve performance without massive investment.

GPU Upgrade Roadmap by Budget

Under $200 Used Market:

- GTX 1080 Ti (11GB) - Excellent value for SD workflows

- RTX 2070 Super (8GB) - Good balance of features and VRAM

- RTX 3060 (12GB) - Modern architecture with generous VRAM

$300-500 Range:

- RTX 3060 Ti (8GB) - Fast but VRAM limited for SDXL

- RTX 4060 Ti 16GB - Perfect for AI workloads

- RTX 3070 (8GB) - High performance for SD 1.5

$500+ Investment:

- RTX 4070 Super (12GB) - Current sweet spot for AI

- RTX 4070 Ti (12GB) - Higher performance tier

- Used RTX 3080 Ti (12GB) - Great value if available

VRAM vs Performance Balance

More VRAM doesn't always mean better performance. Consider your typical workflow needs when choosing upgrades.

| Use Case | Minimum VRAM | Recommended GPU | Why |
|---|---|---|---|
| SD 1.5 Only | 6GB | RTX 3060 12GB | Excellent value |
| SDXL Workflows | 10GB | RTX 4070 Super | Future-proof |
| Multiple LoRAs | 12GB+ | RTX 4070 Ti | Headroom for complexity |
| Professional Use | 16GB+ | RTX 4080 | No compromises |

System RAM Considerations

GPU upgrades work best with adequate system RAM for model swapping and workflow complexity.

- 16GB System RAM: Minimum for comfortable AI workflows

- 32GB System RAM: Recommended for complex multi-model setups

- 64GB System RAM: Professional tier for massive models

ComfyUI Low VRAM Workflow Optimization Techniques

Beyond hardware limitations, workflow design dramatically impacts low-VRAM success. Smart workflow construction can reduce VRAM requirements by 30-50%.

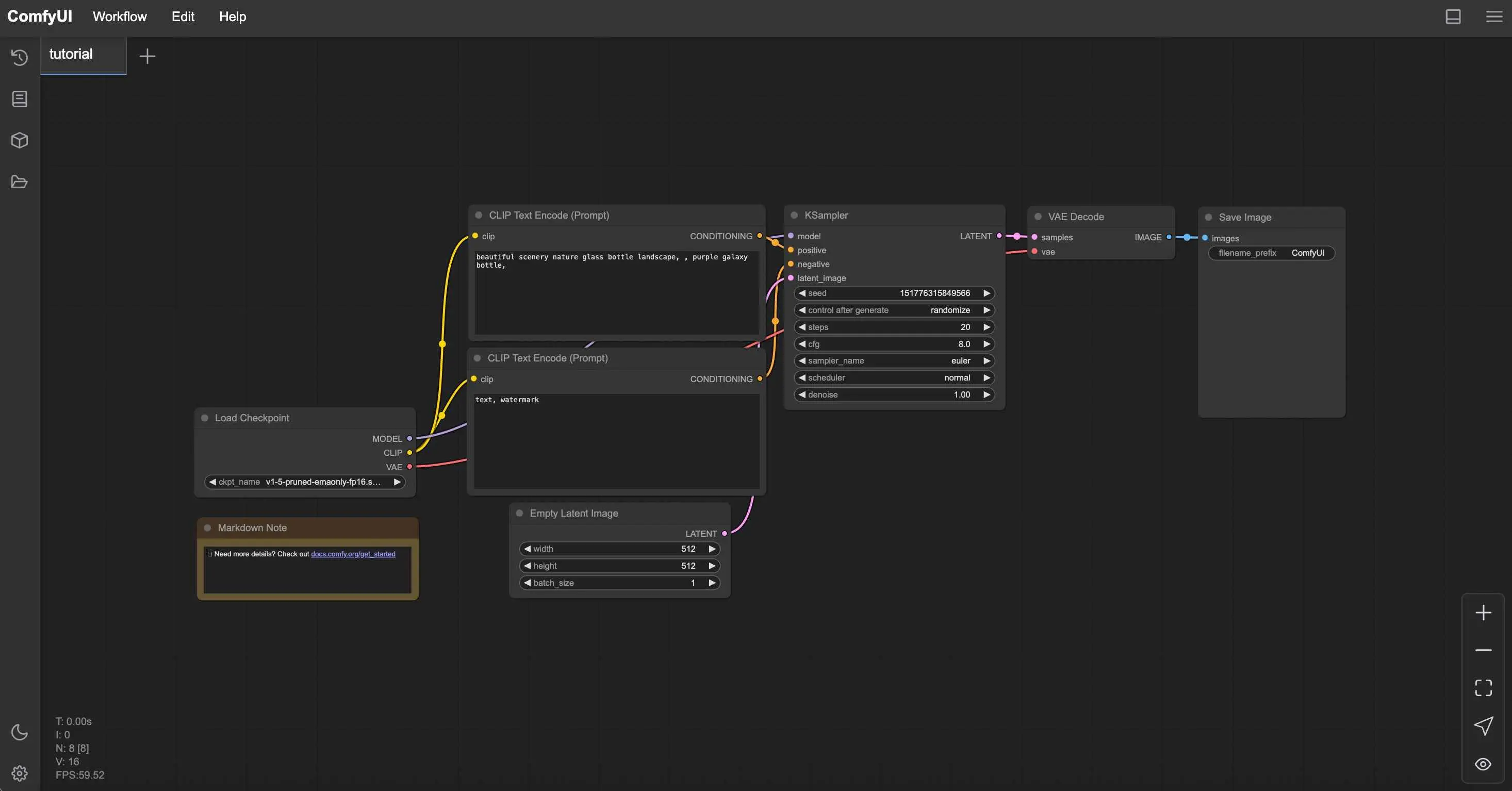

Memory-Efficient Node Placement

Node order affects memory usage throughout generation. Strategic placement reduces peak usage.

- Load models once: Avoid multiple checkpoint loading nodes

- Reuse CLIP encoders: Connect one encoder to multiple nodes

- Minimize VAE operations: Decode only when necessary

- Use efficient samplers: Euler and DPM++ use less memory than others

Batch Processing Strategies

Generating multiple images efficiently requires different approaches on low VRAM systems.

Instead of generating 10 images simultaneously (which crashes), try generating 2-3 images per batch and repeat batches for consistent generation without memory errors.
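
The batching idea can be sketched as a simple split of the total image count into VRAM-safe chunks. `split_batches` is a hypothetical helper for planning a queue, not a ComfyUI function.

```python
def split_batches(total_images, max_batch_size):
    """Split a total image count into batch sizes no larger than the limit."""
    if total_images < 0 or max_batch_size < 1:
        raise ValueError("total_images must be >= 0 and max_batch_size >= 1")
    full_batches, remainder = divmod(total_images, max_batch_size)
    return [max_batch_size] * full_batches + ([remainder] if remainder else [])


if __name__ == "__main__":
    # Ten images with at most three per batch.
    print(split_batches(10, 3))
```

Each entry is the batch size to set before queueing a run; the runs execute one after another, so peak VRAM never exceeds that of a single small batch.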

ComfyUI Manager Optimization

ComfyUI Manager provides one-click installation for memory optimization nodes.

Essential Manager Installs for Low VRAM

Memory Management Nodes:

- FreeU - Reduces memory usage during generation

- Model Management - Automatic model unloading

- Efficient Attention - Memory-optimized attention mechanisms

Installation Steps:

- Install ComfyUI Manager via Git clone

- Restart ComfyUI to activate Manager button

- Search and install memory optimization nodes

- Restart again to activate new nodes

Monitoring and Troubleshooting

Understanding your system's behavior helps optimize performance and prevent crashes before they happen.

Memory Monitoring Tools

Track VRAM usage in real-time to understand your system's limits and optimize accordingly.

Windows Users:

- Task Manager → Performance → GPU for basic monitoring

- GPU-Z for detailed VRAM tracking

- MSI Afterburner for continuous monitoring

Linux Users: Real-time VRAM monitoring:

nvidia-smi -l 1

# or

watch -n 1 nvidia-smi

Mac Users: Activity Monitor → Window → GPU History, or terminal monitoring:

sudo powermetrics -n 1 -i 1000 | grep -i gpu
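
On NVIDIA systems, the same readings can be logged from a script. This is a minimal sketch that assumes `nvidia-smi` is on the PATH; it uses the tool's CSV query mode, and the helper names (`parse_vram_csv`, `query_vram`, `usage_percent`) are illustrative.

```python
import subprocess

# nvidia-smi's CSV query mode prints one "used, total" line per GPU, in MiB.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_vram_csv(text):
    """Parse 'used, total' lines into a list of (used_mib, total_mib) tuples."""
    readings = []
    for line in text.strip().splitlines():
        used, total = (int(part.strip()) for part in line.split(","))
        readings.append((used, total))
    return readings


def query_vram():
    """Run nvidia-smi once and return one reading per GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_vram_csv(result.stdout)


def usage_percent(used_mib, total_mib):
    """Convert a reading into a percentage of total VRAM."""
    return 100.0 * used_mib / total_mib
```

Calling `query_vram()` once per second while a workflow runs gives the same information as `nvidia-smi -l 1`, in a form that is easy to log and to compare between optimization attempts.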

Common Error Solutions

"CUDA out of memory"

- Restart ComfyUI to clear VRAM

- Reduce image resolution by 25%

- Add --lowvram to launch parameters

"Model loading failed"

- Check available system RAM (models load to RAM first)

- Try smaller model variants

- Close other applications

"Generation extremely slow"

- Verify GPU is being used (check nvidia-smi)

- Update GPU drivers

- Check for thermal throttling

Performance Benchmarking

Establish baseline performance metrics to measure optimization effectiveness.

| Test | Your Hardware | Target Time | Optimization Success |
|---|---|---|---|
| 512x512 SD 1.5, 20 steps | _______ | < 30 seconds | Yes/No |
| 768x768 SD 1.5, 20 steps | _______ | < 60 seconds | Yes/No |
| 1024x1024 SDXL, 30 steps | _______ | < 120 seconds | Yes/No |
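
The table can be filled in with a stopwatch, or with a small timing helper like the sketch below. The target times are copied from the table; `time_call` and `meets_target` are hypothetical names, and the function you time is whatever triggers a generation on your setup.

```python
import time

# Target times in seconds, copied from the benchmark table above.
TARGET_SECONDS = {
    "512x512 SD 1.5, 20 steps": 30,
    "768x768 SD 1.5, 20 steps": 60,
    "1024x1024 SDXL, 30 steps": 120,
}


def time_call(func):
    """Run func once and return the elapsed wall-clock time in seconds."""
    start = time.perf_counter()
    func()
    return time.perf_counter() - start


def meets_target(test_name, measured_seconds):
    """Return True when the measured time beats the table's target."""
    return measured_seconds < TARGET_SECONDS[test_name]


if __name__ == "__main__":
    # Example with a hand-measured time of 22 seconds.
    print(meets_target("512x512 SD 1.5, 20 steps", 22))
```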

When to Choose Alternatives

Sometimes the best optimization is recognizing when ComfyUI isn't the right tool for your current hardware situation.

Apatero.com Advantages for Low VRAM Users

- Zero Hardware Requirements: Works on any device including phones

- No Memory Limitations: Generate any resolution without VRAM concerns

- Premium Model Access: Latest SDXL and custom models included

- Instant Results: No waiting 20+ minutes for CPU generation

- Professional Features: Advanced controls without technical complexity

- Cost Effective: Often cheaper than GPU electricity costs

Hybrid Approach Strategy

The smartest approach often combines multiple solutions based on your specific needs.

Use ComfyUI For:

- Learning AI generation principles

- Custom workflow development

- Offline generation needs

- When you have adequate hardware

Use Apatero.com For:

- Professional client work

- High-resolution images

- Time-sensitive projects

- Experimenting with latest models

Frequently Asked Questions

1. Can I really run ComfyUI on 4GB VRAM and what models will work?

Yes, with optimization. Use the --lowvram flag with SD 1.5 models (about 3.5GB, such as DreamShaper 8 or Realistic Vision), generate at 512x512 (about 1.2GB of VRAM), and enable --force-fp16. This makes ComfyUI usable on the GTX 1650 and similar 4GB cards. SDXL requires 6GB+ VRAM even with optimization. Expect a 40-60% VRAM reduction from default settings.

2. What are the best launch parameters for my specific VRAM amount?

4GB cards: python main.py --lowvram --preview-method auto (keeps active parts in VRAM, swaps rest to RAM). 6GB cards: python main.py --normalvram --force-fp16 (FP16 precision cuts memory in half). 8GB cards: python main.py --preview-method auto --force-fp16 (handles most workflows with optimization). Adjust based on specific workflow complexity.

3. Which budget GPU offers best value for ComfyUI under $200-300?

Used market: GTX 1080 Ti (11GB) best value for SD workflows, RTX 2070 Super (8GB) good balance of features and VRAM, RTX 3060 (12GB) excellent VRAM amount with modern architecture. New: RTX 3060 12GB best option around $300. All handle SD 1.5 comfortably and SDXL with optimization. Prioritize VRAM amount over GPU speed for AI generation.

4. Can I run ComfyUI without a GPU using only CPU and how slow is it?

Yes, use --cpu flag but expect 10-50x slower generation. Intel i7-12700K generates 512x512 in 8-12 minutes, AMD Ryzen 7 5800X in 10-15 minutes, M1 Pro Mac in 5-8 minutes (surprisingly fast due to unified memory). Best for overnight batch generation or when GPU unavailable. Requires 16GB+ system RAM for models.

5. How much VRAM do I actually need for SDXL in ComfyUI with optimization?

SDXL base: 10GB minimum for full models (6.9GB) without optimization. With optimization: 8GB cards work using --lowvram flag, pruned SDXL models (4.7GB instead of 6.9GB), and generating at 768x768 instead of 1024x1024. 6-8GB cards need aggressive settings but can run SDXL acceptably. 12GB+ recommended for comfortable SDXL workflows.

6. What's the LoRA strategy for saving VRAM and why does it work?

Base model (3.5GB SD 1.5) + LoRA (500MB) = 4GB total memory vs large custom model (7GB) = 7GB total. LoRAs add styles and concepts to base models with minimal memory overhead. You can load base model once and swap LoRAs quickly (50-150MB each) rather than loading different 7GB models. Perfect for low VRAM customization and experimentation.

7. Will upgrading my system RAM help with low VRAM generation?

Yes, significantly. ComfyUI with --lowvram offloads models to system RAM when not actively processing. 16GB RAM: minimum for --lowvram mode, 32GB RAM: comfortable for complex workflows with model swapping, 64GB RAM: enables multiple large models in RAM for fast swapping. RAM acts as buffer between storage and VRAM, speeding up model loading dramatically.

8. Can I use image resolution tricks to reduce VRAM usage?

Yes, resolution has a huge impact on VRAM. 512x512 uses 1.2GB, 768x768 uses 2.8GB (2.3x as much), and 1024x1024 uses 5.1GB (4.25x as much). Strategy: generate at 512x512, then upscale to 2048x2048 with ESRGAN or Real-ESRGAN as a separate process. This two-stage approach stays under 4GB throughout but still produces a high-resolution final output.

9. Why does ComfyUI still crash with "CUDA out of memory" despite optimization flags?

Common causes: other applications using VRAM (close browsers, Discord, other GPU apps), workflow too complex for available VRAM (reduce nodes, simplify), cached models not cleared (restart ComfyUI between generations), LoRAs stacking beyond capacity (use fewer LoRAs simultaneously), or generation resolution still too high. Monitor VRAM usage with nvidia-smi to identify actual consumption.

10. Is it worth buying a budget GPU or should I use cloud services instead?

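The math: an RTX 3060 12GB costs about $300, plus $5-10/month in electricity. A cloud GPU (RunPod, Vast.ai) costs $0.50-2/hour. Break-even on the purchase price alone is about 150 hours at $2/hour, rising to 600 hours at $0.50/hour. At the $1-2/hour end that is 150-300 hours, or 3-6 months at roughly 12 hours of use per week. Buy a GPU if you generate regularly (5+ hours/week), want offline access, or have privacy concerns about the cloud. Use the cloud if you generate only occasionally (a few hours/month), want to test before investing in hardware, or need a powerful GPU temporarily for a project.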
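
The break-even point depends heavily on which hourly rate you would actually pay. A minimal sketch of the arithmetic, using the prices quoted in this answer; `break_even_hours` and its optional `local_cost_per_hour` parameter (for electricity) are illustrative assumptions.

```python
def break_even_hours(gpu_price, cloud_rate_per_hour, local_cost_per_hour=0.0):
    """Hours of use after which buying a GPU is cheaper than renting one."""
    saving_per_hour = cloud_rate_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        raise ValueError("cloud rate must exceed the local running cost")
    return gpu_price / saving_per_hour


if __name__ == "__main__":
    # A $300 card against the cheapest and the most expensive quoted rates.
    for rate in (0.50, 2.00):
        hours = break_even_hours(300, rate)
        print(f"${rate:.2f}/hour: break-even after {hours:.0f} hours")
```

Dividing the result by your weekly hours of use gives the payback time in weeks.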

The Bottom Line on ComfyUI Low VRAM Budget AI Generation

Low VRAM doesn't have to end your AI art journey. With proper optimization, strategic hardware choices, and smart workflow design, even 4GB cards can produce impressive results.

Your Optimization Action Plan

- Apply appropriate launch parameters for your VRAM level

- Choose SD 1.5 models for maximum compatibility

- Use 512x512 generation with AI upscaling

- Consider cloud solutions for occasional high-end generation

- Plan hardware upgrades based on your actual usage patterns

- Try [Apatero.com](https://apatero.com) when you need results without technical hassle

Remember that hardware limitations often spark creativity. Some of the most innovative AI art comes from artists who learned to work within constraints rather than fight them.

Whether you choose to optimize your current setup, upgrade your hardware, or use cloud solutions like Apatero.com, the key is finding the approach that lets you focus on creating rather than troubleshooting.

Ready to start generating amazing AI art regardless of your hardware? Apatero.com eliminates all technical barriers and gets you creating immediately. Because the best AI art tool is the one that gets out of your way and lets you create.
