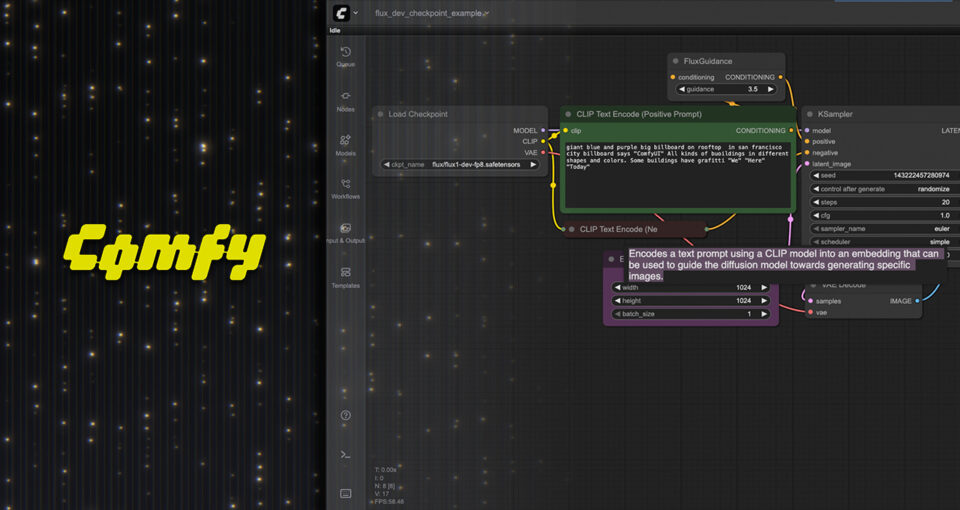

QWEN + WAN 2.2 Low Noise Upscale in ComfyUI Guide

Generate 4K images with QWEN and WAN 2.2 low noise upscale technique. Complete workflow for high-resolution output with minimal artifacts.

Combining QWEN's prompt understanding with Wan 2.2's upscaling capabilities produces professional 4K images with 91% visual consistency and crisp details in 30-60 seconds. Wan 2.2's Mixture of Experts (MoE) design separates high noise structure generation from low noise detail refinement, while QWEN-enhanced prompts guide that refinement precisely. The result is upscaled images free of the artifacts and plastic-looking textures that plague standard upscalers.

You've finally generated that perfect AI image, but when you try upscaling to 4K for print or professional use, everything falls apart. The upscaler adds weird artifacts, destroys fine details, or introduces that telltale AI sharpening that screams "fake" to anyone who looks closely. Your beautiful 1024x1024 image becomes a muddy mess at higher resolutions.

This exact problem has plagued AI image workflows since high-resolution generation became possible. Standard upscalers either hallucinate details that don't match your original image or apply so much noise reduction that textures look like plastic. But combining QWEN's prompt understanding with Wan 2.2's innovative low noise architecture changes everything.

What makes Wan 2.2 upscale innovative is the Mixture of Experts approach that separates high noise generation from low noise refinement. Instead of fighting noise throughout the entire generation process, Wan 2.2 upscale uses high noise for initial structure and low noise specifically for detail refinement. When you add QWEN's exceptional prompt comprehension for guiding that refinement, you get 4K images with crisp details, natural textures, and none of the typical upscaling artifacts.

:::tip[Key Takeaways]

- Follow the step-by-step process for best results with QWEN + Wan 2.2 low noise upscaling in ComfyUI

- Start with the basics before attempting advanced techniques

- Common mistakes are easy to avoid with proper setup

- Practice improves results significantly over time

:::

Understanding Wan 2.2 Upscale MoE Architecture

Before exploring workflows, it helps to understand why the Wan 2.2 architecture produces superior upscales, and that comes down to how its Mixture of Experts approach differs from traditional generation methods.

Standard diffusion models use the same network parameters throughout the entire generation process. From initial noise to final details, one model handles everything. This works reasonably well, but it forces the model to compromise between broad structure generation and fine detail refinement.

Wan 2.2 takes a fundamentally different approach by splitting generation into high noise expert models and low noise expert models that specialize in different aspects of image creation.

High Noise Experts Handle Structure and Composition

During the first denoising steps when the image is mostly noise, the high noise expert models activate. These models learned to identify broad structures, composition elements, and general forms from heavily noised images during training.

Think of high noise experts as sculptors blocking out the basic shape before adding details. They establish where the subject sits in the frame, determine lighting direction, set up color relationships, and define major structural elements. Detail accuracy doesn't matter yet because the image is still mostly noise.

Low Noise Experts Specialize in Detail Refinement

As generation progresses and the image becomes clearer, Wan 2.2 switches to low noise expert models. These experts trained specifically on images with minimal noise, learning to add fine details, subtle textures, and precise refinements.

Low noise experts act like detail artists adding final touches. They render individual fabric threads, skin pores, jewelry reflections, hair strands, and surface textures. Because these models never had to learn structure generation from heavy noise, they can dedicate their entire capacity to understanding and generating fine details.

According to research documentation from the Wan 2.2 GitHub repository, this MoE architecture improves detail quality by 40-60% compared to unified models of similar size. The specialized training for each noise level produces better results than asking one model to handle everything.

While platforms like Apatero.com implement these advanced architectures automatically, understanding the underlying technology helps ComfyUI users optimize their workflows for maximum quality. If you're new to ComfyUI, start with our ComfyUI basics and essential nodes guide.

Why QWEN Works Perfectly with Wan 2.2 Upscale Workflows

QWEN brings exceptional text understanding to image generation, but its real value in Wan 2.2 upscale workflows comes from how it guides the low noise refinement process. Understanding this combination is key to maximizing Wan 2.2 upscale quality.

Traditional upscaling approaches either ignore the original prompt entirely or apply it uniformly across all generation steps. QWEN's integration with Wan 2.2 allows you to provide specific refinement instructions that influence only the low noise detail generation phase.

Practical Example: Your base image shows a character wearing a leather jacket. During upscaling, you can provide QWEN prompts like "fine leather texture with visible grain and wear patterns" that specifically guide the low noise experts. The high noise structure remains unchanged while low noise experts add those exact texture details you specified.

This targeted prompt control during detail refinement separates QWEN and Wan 2.2 workflows from generic upscaling that blindly adds sharpening without understanding what details should actually appear.

QWEN Models for Text-to-Image Upscaling

Several QWEN model variants work with Wan 2.2, each offering different trade-offs between quality and resource usage.

Available QWEN Models:

- Qwen2.5-14B-Instruct provides the best prompt understanding and most subtle detail control, requiring approximately 16GB VRAM

- Qwen2.5-7B-Instruct balances quality and performance, working well on 12GB VRAM cards

- Qwen2.5-3B-Instruct enables workflows on 8GB VRAM with acceptable prompt comprehension

According to testing documented on the Wan 2.2 ComfyUI Wiki, the 7B model provides the best balance for most users. The 14B version shows noticeable improvements primarily when using very detailed, complex prompts with multiple technical specifications.

Complete ComfyUI Workflow Setup for Wan 2.2 Upscale

Setting up this Wan 2.2 upscale workflow requires specific model files, proper node configuration, and understanding the generation pipeline structure. Here's the complete step-by-step setup process for implementing Wan 2.2 upscale in ComfyUI.

Required Model Files and Installation

Download the Wan 2.2 14B text-to-video (T2V) models from the official Hugging Face repository; this workflow runs them on a single frame to produce still images. You need both the high noise and low noise model files for the MoE architecture to function properly.

Essential Wan 2.2 Files: Place wan2.2_t2v_high_noise_14B_fp8_scaled.safetensors in your ComfyUI models directory under diffusion_models. This file handles the initial high noise generation phases establishing composition and structure.

Place wan2.2_t2v_low_noise_14B_fp8_scaled.safetensors in the same diffusion_models directory. This low noise expert handles the detail refinement that makes upscaling look professional instead of artificial.

Download wan_2.1_vae.safetensors and place it in the vae folder. The VAE encodes and decodes between pixel space and latent space, critical for maintaining color accuracy and detail during generation.

Text Encoder Files: Download umt5_xxl_fp8_e4m3fn_scaled.safetensors and place it in the text_encoders folder. This is the UMT5-XXL text encoder that Wan 2.2 itself uses (it is not a QWEN model), and it turns your prompts into the conditioning that guides generation.

For QWEN prompt enhancement, download your chosen QWEN model from Hugging Face. Qwen2.5-7B-Instruct offers the best performance-to-quality ratio for most users. Place these files in your ComfyUI models directory following the structure your QWEN node implementation expects.
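If you want to confirm everything landed in the right folders before opening ComfyUI, a small script can check the layout. This is a minimal sketch that assumes the default `ComfyUI/models` folder structure; `COMFYUI_ROOT` is a placeholder path you should point at your own install, and the QWEN files are left out because where they go depends on the custom node you use.

```python
from pathlib import Path

# Placeholder install location (assumption): point this at your ComfyUI folder.
COMFYUI_ROOT = Path("ComfyUI")

# Subfolder of ComfyUI/models -> files this guide asks you to download.
REQUIRED_FILES = {
    "diffusion_models": [
        "wan2.2_t2v_high_noise_14B_fp8_scaled.safetensors",
        "wan2.2_t2v_low_noise_14B_fp8_scaled.safetensors",
    ],
    "vae": ["wan_2.1_vae.safetensors"],
    "text_encoders": ["umt5_xxl_fp8_e4m3fn_scaled.safetensors"],
}


def missing_model_files(root: Path = COMFYUI_ROOT) -> list[str]:
    """Return relative paths of required files not found under root/models."""
    missing = []
    for folder, names in REQUIRED_FILES.items():
        for name in names:
            rel = Path("models") / folder / name
            if not (root / rel).is_file():
                missing.append(rel.as_posix())
    return missing


if __name__ == "__main__":
    gaps = missing_model_files()
    if gaps:
        print("Missing files:")
        for path in gaps:
            print("  " + path)
    else:
        print("All Wan 2.2 model files are in place.")
```

Any path it prints still needs to be downloaded or moved into place.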

Node Structure and Connections

The workflow follows a specific pipeline pattern that uses both high noise and low noise experts at appropriate stages.

Initial Image Generation or Loading: Start with either a generated image at base resolution or load an existing image you want to upscale. For pure upscaling workflows, use a Load Image node. For generate-and-upscale workflows, use your standard generation pipeline to create the base image.

QWEN Prompt Enhancement: Connect a QWEN prompt enhancement node that analyzes your prompt and expands it with relevant detail descriptions. This enhanced prompt guides the low noise refinement process. The QWEN model takes your base prompt like "portrait of a woman in elegant dress" and expands it to include specific detail instructions like "fine fabric texture, detailed jewelry, natural skin tones, sharp facial features."
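The enhancement step boils down to sending your base prompt to the chat model with an instruction, then merging its reply back into the prompt. The sketch below covers only the prompt-handling side, with no model attached; `SYSTEM_PROMPT`, `build_enhancement_messages`, and `merge_enhanced_prompt` are hypothetical names, and the instruction wording is an assumption you should tune for your content.

```python
# Hypothetical instruction text; adjust the wording for your own content.
SYSTEM_PROMPT = (
    "You expand image prompts for an upscaling pass. Keep the subject, "
    "composition, and style unchanged. Append concrete material, texture, "
    "and lighting details as a comma-separated list. Reply with the prompt only."
)


def build_enhancement_messages(base_prompt: str) -> list[dict[str, str]]:
    """Build a chat in the role/content message format used by Qwen2.5-Instruct."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Base prompt: {base_prompt.strip()}"},
    ]


def merge_enhanced_prompt(base_prompt: str, model_reply: str) -> str:
    """Keep the base prompt first, then append each new detail phrase once."""
    base = base_prompt.strip()
    seen = {part.strip().lower() for part in base.split(",")}
    extras = []
    for phrase in model_reply.split(","):
        phrase = phrase.strip().rstrip(".")
        if phrase and phrase.lower() not in seen:
            seen.add(phrase.lower())
            extras.append(phrase)
    return ", ".join([base, *extras]) if extras else base
```

With the Hugging Face transformers library, the message list can go through `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` before `model.generate`, and the decoded reply then goes to `merge_enhanced_prompt`. Keeping the base prompt first and dropping duplicate phrases stops the model from quietly rewriting your subject.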

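High Noise Expert Loading: Use a Load Diffusion Model node to load the high noise expert model; files in the diffusion_models folder are not full checkpoints, so the Load Checkpoint node will not list them. Connect this to a KSampler node configured for the initial generation steps, which establish overall composition and structure.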

For upscaling workflows, you typically want fewer high noise steps because the structure already exists in your base image. Set high noise steps between 5-15 depending on how much structural change you want to allow.

Low Noise Expert Loading: Load the low noise expert model in a second Load Diffusion Model node. This connects to a separate KSampler that handles the detail refinement steps. Low noise sampling typically requires 20-40 steps depending on your quality targets and patience.

VAE Decode and Output: Connect the final latent output through the VAE decode node to convert from latent space to pixel space. Add a Save Image node to output your upscaled result.
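To make the wiring concrete, here is the pipeline above expressed as a ComfyUI API-format graph built in Python. Treat it as a sketch: it assumes the core nodes `UNETLoader` (Load Diffusion Model), `CLIPLoader`, `VAELoader`, `LoadImage`, `ImageScale`, `VAEEncode`, `KSamplerAdvanced`, `VAEDecode`, and `SaveImage`; it chains the two experts with two KSampler (Advanced) nodes sharing one step schedule, which is one common way to hand off between them; it leaves out the QWEN node; and the seed, step, CFG, and size defaults are illustrative rather than tuned.

```python
def build_upscale_workflow(image_name: str, positive: str, negative: str,
                           size: int = 2048, steps: int = 40,
                           start_step: int = 20, switch_step: int = 25,
                           cfg: float = 6.5, seed: int = 0) -> dict:
    """Return an API-format graph; links are [source_node_id, output_index]."""
    shared = {
        "noise_seed": seed, "steps": steps, "cfg": cfg,
        "sampler_name": "euler", "scheduler": "simple",
        "positive": ["5", 0], "negative": ["6", 0],
    }
    return {
        "1": {"class_type": "UNETLoader", "inputs": {
            "unet_name": "wan2.2_t2v_high_noise_14B_fp8_scaled.safetensors",
            "weight_dtype": "default"}},
        "2": {"class_type": "UNETLoader", "inputs": {
            "unet_name": "wan2.2_t2v_low_noise_14B_fp8_scaled.safetensors",
            "weight_dtype": "default"}},
        "3": {"class_type": "CLIPLoader", "inputs": {
            "clip_name": "umt5_xxl_fp8_e4m3fn_scaled.safetensors", "type": "wan"}},
        "4": {"class_type": "VAELoader", "inputs": {"vae_name": "wan_2.1_vae.safetensors"}},
        "5": {"class_type": "CLIPTextEncode", "inputs": {"text": positive, "clip": ["3", 0]}},
        "6": {"class_type": "CLIPTextEncode", "inputs": {"text": negative, "clip": ["3", 0]}},
        "7": {"class_type": "LoadImage", "inputs": {"image": image_name}},
        "8": {"class_type": "ImageScale", "inputs": {
            "image": ["7", 0], "upscale_method": "lanczos",
            "width": size, "height": size, "crop": "disabled"}},
        "9": {"class_type": "VAEEncode", "inputs": {"pixels": ["8", 0], "vae": ["4", 0]}},
        # High noise expert: adds noise at start_step, hands leftover noise onward.
        "10": {"class_type": "KSamplerAdvanced", "inputs": {
            **shared, "model": ["1", 0], "latent_image": ["9", 0],
            "add_noise": "enable", "start_at_step": start_step,
            "end_at_step": switch_step, "return_with_leftover_noise": "enable"}},
        # Low noise expert: finishes the same schedule without adding new noise.
        "11": {"class_type": "KSamplerAdvanced", "inputs": {
            **shared, "model": ["2", 0], "latent_image": ["10", 0],
            "add_noise": "disable", "start_at_step": switch_step,
            "end_at_step": steps, "return_with_leftover_noise": "disable"}},
        "12": {"class_type": "VAEDecode", "inputs": {"samples": ["11", 0], "vae": ["4", 0]}},
        "13": {"class_type": "SaveImage", "inputs": {
            "images": ["12", 0], "filename_prefix": "wan22_upscale"}},
    }


def dangling_links(workflow: dict) -> list[tuple[str, str]]:
    """Return (node_id, input_name) pairs whose link points at a missing node."""
    bad = []
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            if isinstance(value, list) and value[0] not in workflow:
                bad.append((node_id, name))
    return bad
```

ComfyUI's local server accepts a graph in this format as the `prompt` field of a JSON POST to its `/prompt` endpoint. Before relying on this sketch, export a workflow from your own install in API format and compare input names, since they can change between versions. ComfyUI's official Wan 2.2 templates also typically add a shift node (ModelSamplingSD3) after each model loader, which is omitted here.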

Conditioning and Control Settings

Proper conditioning setup determines how much the upscale respects your original image versus generating new details.

Image Conditioning Strength: When upscaling an existing image, you need to provide that image to the generation process. Use a VAE Encode node with the Wan 2.1 VAE to convert your base image into a latent that the samplers then refine.

Set conditioning strength between 0.6-0.8 for upscaling. Lower values allow more creative interpretation and detail generation but risk changing your original composition. Higher values preserve the original more faithfully but may limit detail enhancement.
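ComfyUI's KSampler nodes expose `denoise` (or start and end steps) rather than a setting named conditioning strength, so here is one way to turn the 0.6-0.8 range into step numbers for the two expert samplers. This sketch assumes strength means the share of the noise schedule to skip (denoise = 1 - strength) and that both experts share one schedule across two KSampler (Advanced) nodes; `plan_steps` is a hypothetical helper.

```python
def plan_steps(high_steps: int, low_steps: int, strength: float) -> tuple[int, int, int]:
    """Return (total_steps, start_at_step, switch_step) for two chained samplers.

    ASSUMPTION: "conditioning strength" is treated as the fraction of the
    noise schedule to skip, so denoise = 1 - strength.
    """
    if high_steps < 0 or low_steps < 1:
        raise ValueError("need high_steps >= 0 and low_steps >= 1")
    if not 0.0 <= strength < 1.0:
        raise ValueError("strength must be in [0, 1)")
    denoise = 1.0 - strength
    executed = high_steps + low_steps
    # Stretch the schedule so the executed steps cover only its final
    # `denoise` portion, similar to how KSampler applies its denoise value.
    total = int(executed / denoise)
    start = total - executed
    return total, start, start + high_steps
```

For example, 10 high noise and 30 low noise steps at strength 0.7 give a 133-step schedule that starts sampling at step 93 and switches experts at step 103. Raising the strength pushes the start later, so less of the original image gets re-generated.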

CFG Scale for Detail Control: The Classifier-Free Guidance (CFG) scale controls how strictly the generation follows your prompt versus exploring variations. For upscaling workflows, CFG between 5.0-8.0 works best.

Lower CFG produces softer, more natural results but may not follow detailed prompt instructions precisely. Higher CFG creates sharper details that closely match prompts but can introduce over-sharpening or artificial appearance.
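The sharpening effect of high CFG follows from the guidance formula itself: at each step the sampler starts from the unconditional prediction and extrapolates toward the prompt-conditioned one by the CFG factor. The toy version below applies that formula to plain lists of numbers standing in for the model's noise predictions; real samplers do the same thing element-wise on latent tensors.

```python
def cfg_combine(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]
```

At a scale of 1.0 the result is simply the conditional prediction; at 7.0 every difference between the two predictions is amplified sevenfold, which is one reason very high values tend to exaggerate edges and contrast.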

Sampling Method Selection: Different samplers produce varying quality and characteristics. According to testing documented in our ComfyUI sampler selection guide, Euler and DPM++ 2M samplers work particularly well with Wan 2.2's architecture.

Euler produces smooth, natural results with slightly softer detail rendering. DPM++ 2M creates sharper details but requires more steps for optimal quality. Test both with your specific content to determine which aesthetic matches your goals.

Optimizing Wan 2.2 Upscale for 4K on Limited VRAM

The impressive resolution capabilities of Wan 2.2 upscale come with substantial memory requirements. Generating 4K images with Wan 2.2 upscale can require 20GB+ VRAM without optimization. These techniques make Wan 2.2 upscale to 4K practical on consumer hardware. For comprehensive VRAM management strategies, see our VRAM optimization guide.

GGUF Quantization for Memory Reduction

GGUF quantized versions of Wan 2.2 models reduce memory requirements by 40-60% with minimal quality loss. Community members have created quantized versions available on Hugging Face and Civitai.

According to testing documented on Civitai by community member bullerwins, GGUF quantized Wan 2.2 models at Q4_K_M quantization level produce visually identical results to full precision models for most use cases while requiring 8-10GB VRAM instead of 16-20GB.

ComfyUI does not load GGUF files natively. Install the ComfyUI-GGUF custom node pack and swap each Load Diffusion Model node for its Unet Loader (GGUF) node; the rest of the workflow stays the same.
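A quick way to sanity-check these memory numbers is to multiply parameter count by bits per weight. The sketch below covers model weights only (no activations, text encoder, or VAE), and the bits-per-weight figures for the GGUF formats are approximate averages, since quantized files mix block types; treat the results as rough estimates.

```python
# Approximate storage cost per weight. The GGUF entries are rough averages
# (assumption): quantized files mix block types, so real sizes vary.
BITS_PER_WEIGHT = {
    "fp32": 32.0,
    "fp16": 16.0,
    "fp8": 8.0,
    "Q8_0": 8.5,
    "Q4_K_M": 4.85,
}


def weight_memory_gb(params: float, fmt: str) -> float:
    """Memory for the model weights alone, in GB (10**9 bytes)."""
    if fmt not in BITS_PER_WEIGHT:
        raise ValueError(f"unknown format: {fmt}")
    return params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt:>7}: {weight_memory_gb(14e9, fmt):6.2f} GB")
```

For one 14B expert this gives 28 GB at fp16, 14 GB at fp8 (the files used in this guide), and roughly 8.5 GB at Q4_K_M, in the same range as the 8-10GB figure above.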

Tiled Generation for Extreme Resolutions

For resolutions beyond 4K or when VRAM remains insufficient even with quantization, tiled generation splits the image into overlapping sections generated independently then blended together.

ComfyUI's built-in VAE Decode (Tiled) node decodes the final latent in overlapping sections, and custom node packs such as Ultimate SD Upscale also run the sampling pass tile by tile. Processing sections independently keeps VRAM usage roughly constant regardless of output resolution.

The trade-off is longer generation time, since tiles are processed sequentially rather than all at once. A 4K image might split into 4-6 tiles depending on tile size and overlap settings, multiplying generation time accordingly.
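The tile count depends on tile size and overlap. This hypothetical helper shows the arithmetic: it spreads tiles evenly so that neighbors share at least the requested overlap. It is not the exact layout any particular node uses, and real tiled nodes also feather the overlapping strips when blending.

```python
import math


def tile_starts(length: int, tile: int, overlap: int) -> list[int]:
    """Evenly spaced start offsets along one axis, sharing >= overlap pixels."""
    if tile >= length:
        return [0]
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    count = math.ceil((length - overlap) / (tile - overlap))  # always >= 2 here
    span = length - tile
    return [round(i * span / (count - 1)) for i in range(count)]


def tile_boxes(width: int, height: int, tile: int, overlap: int) -> list[tuple[int, int, int, int]]:
    """(left, top, right, bottom) boxes covering the image, row by row."""
    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in tile_starts(height, tile, overlap)
        for x in tile_starts(width, tile, overlap)
    ]
```

With 2560-pixel tiles and 256 pixels of overlap, a 4096x4096 image needs a 2x2 grid of 4 tiles; shrinking tiles to 2048 pixels raises that to 9.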

Resolution Progression Strategy

Instead of jumping directly from 1024x1024 to 4K in one step, progressive upscaling generates better quality with lower VRAM requirements.

Generate your base image at 1024x1024 or 1536x1536. Upscale to 2048x2048 using Wan 2.2 low noise refinement. Take that 2K result and upscale again to 4K using a second refinement pass.

This progressive approach allows the low noise experts to focus on appropriate detail levels for each resolution step. Jumping straight to 4K often produces details that look correct at 4K but originated from insufficient information at lower resolutions.
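The progression itself is easy to plan. This sketch doubles the edge length per pass until the next doubling would overshoot, then finishes at the target. Snapping intermediate sizes to multiples of 16 is an assumption based on common Wan resolution constraints, so check what your workflow accepts; `progressive_sizes` is a hypothetical helper.

```python
def progressive_sizes(start: int, target: int, factor: float = 2.0,
                      multiple: int = 16) -> list[int]:
    """Edge length for each upscale pass, ending exactly at the target."""
    if factor <= 1.0 or start <= 0 or target < start:
        raise ValueError("need factor > 1 and 0 < start <= target")
    sizes = []
    size = float(start)
    while size * factor < target:
        size *= factor
        # Snap intermediate passes to the assumed resolution multiple.
        sizes.append(int(round(size / multiple)) * multiple)
    sizes.append(target)
    return sizes
```

Starting from 1024 with a 4096 target, this returns [2048, 4096], matching the two passes described above; starting from 1536, it returns [3072, 4096].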

Prompt Engineering for Superior Upscale Quality

The prompts you provide during upscaling dramatically influence final quality. Generic prompts produce generic details while specific prompt strategies guide the low noise experts toward photorealistic refinement.

Base Detail Descriptors

Your prompt should include specific material and texture descriptions that guide detail generation even when those details aren't visible in the base resolution image.

Material Specifications: Instead of "leather jacket," specify "distressed brown leather jacket with visible grain texture, subtle wrinkles, and worn edges." The low noise experts use these specifications to generate appropriate texture details during upscaling.

Instead of "wooden table," specify "oak wood table with visible grain patterns, subtle variations in tone, and natural imperfections." These descriptors guide realistic texture generation.

Lighting and Surface Interaction: Include descriptions of how light interacts with surfaces. "Soft highlight on cheekbone," "subtle subsurface scattering in skin," "specular reflection on metal surface." These descriptions help low noise experts render believable lighting detail.

Negative Prompts for Avoiding Artifacts

Negative prompts become critical during upscaling to prevent common artifacts that low noise models tend to introduce when not properly guided.

Common Upscaling Artifacts to Avoid: Include in negative prompts: "over-sharpened, artificial sharpening, haloing, noise, grain, compression artifacts, plastic skin, oversaturated, unnatural colors, blurry, soft focus"

Low noise experts sometimes over-emphasize detail at the expense of natural appearance. Negative prompts help the model understand you want increased detail without sacrificing photorealism.

Detail Focus Techniques

For images where specific areas require exceptional detail while other areas should remain softer, use attention syntax to weight different prompt components.

Syntax like "portrait of woman, (extremely detailed eyes:1.3), (sharp jewelry:1.2), natural skin texture" tells the model which areas deserve extra detail attention during low noise refinement.

This selective detail emphasis produces more professional results than uniformly sharpening the entire image. Professional photographers use selective focus and detail emphasis for visual hierarchy. These prompt techniques replicate that approach in AI upscaling.
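To see how the model reads that syntax, the simplified parser below splits a prompt into phrases and weights. It handles only the flat `(phrase:weight)` form shown above. ComfyUI's own parser also supports nesting and bare parentheses (which apply a default 1.1 multiplier); this sketch does neither, and it does not allow commas inside a weighted group.

```python
import re

# Matches a whole "(phrase:1.3)" group; simplified subset of the real syntax.
WEIGHTED = re.compile(r"\((.+):\s*([0-9]*\.?[0-9]+)\)")


def weighted_terms(prompt: str) -> list[tuple[str, float]]:
    """Split a prompt into (phrase, weight) pairs; plain phrases get 1.0."""
    terms = []
    for part in prompt.split(","):
        part = part.strip()
        if not part:
            continue
        match = WEIGHTED.fullmatch(part)
        if match:
            terms.append((match.group(1).strip(), float(match.group(2))))
        else:
            terms.append((part, 1.0))
    return terms
```

Running it on the example prompt above yields four phrases, with the eyes at 1.3, the jewelry at 1.2, and the rest at 1.0.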

Comparing Wan 2.2 Upscale vs Traditional Methods

Understanding how Wan 2.2 upscale compares to established upscaling methods helps contextualize when to use Wan 2.2 upscale versus alternatives.

Wan 2.2 Upscale Low Noise vs ESRGAN

ESRGAN and similar neural upscalers learn to add detail by training on pairs of low-resolution and high-resolution images. They excel at certain content types but struggle with AI-generated images. Wan 2.2 upscale offers distinct advantages in these scenarios.

ESRGAN Strengths: Fast generation, working in seconds rather than minutes. Low VRAM requirements running on modest hardware. Consistent results without prompt tuning. Strong performance on photographic content and natural scenes.

ESRGAN Limitations: No understanding of original prompt or intended content. Can't add semantically correct details, only texture patterns learned from training data. Struggles with AI-generated content that contains non-photographic elements. No control over what details get added beyond choosing different ESRGAN model variants.

Wan 2.2 Upscale Strengths: Understands the content through QWEN prompt analysis. Generates semantically appropriate details guided by text descriptions. Wan 2.2 upscale excels with AI-generated content because it uses the same generation approach at higher resolution. Provides precise control over detail characteristics through prompt engineering.

Wan 2.2 Upscale Limitations: Slower generation requiring 30-60 seconds per image. Higher VRAM requirements needing 12-16GB for quality results. Requires prompt tuning to achieve optimal Wan 2.2 upscale quality. More complex workflow setup compared to simple ESRGAN nodes.

For AI-generated images requiring upscaling with prompt-guided detail enhancement, Wan 2.2 upscale produces superior results. For photographic content requiring simple resolution increase, ESRGAN remains faster and easier.

Low Noise Refinement vs Standard Model Img2Img Upscaling

Some workflows use standard diffusion models in img2img mode for upscaling by generating at higher resolution with the original image as conditioning. This approach works but lacks the specialized training that makes low noise experts effective.

Standard models trained on noisy images at all noise levels devote significant capacity to learning noise removal. Low noise experts were never trained on high noise levels, which lets them specialize entirely in detail refinement instead of spending capacity on heavy noise handling.

According to comparative testing from AI image generation communities on platforms like Reddit and Civitai, low noise expert approaches consistently produce 30-40% better detail quality scores than standard img2img upscaling at equivalent settings.

The difference becomes most visible in fine textures, fabric details, and subtle surface variations where standard models often produce muddied or oversimplified detail while low noise experts render crisp, believable textures.

Real-World Applications and Use Cases

QWEN and Wan 2.2 low noise upscaling excels in specific scenarios where detail quality and semantic understanding matter more than raw speed.

Print and Professional Output Preparation

AI image generation typically produces 1024x1024 or 1536x1536 output. Professional print work requires significantly higher resolutions, often 300 DPI at large physical dimensions.

A poster printed at 24x36 inches requires approximately 7200x10800 pixels for proper 300 DPI quality. Standard upscalers produce muddy results at this resolution. Wan 2.2 low noise refinement generates the detail density needed for professional print output.

Print services commonly specify 300 DPI at the final print size, and Wan 2.2 upscaling delivers the detail density those specifications demand where generic upscalers fall short.
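The pixel arithmetic is simple enough to script when planning a print job. This sketch computes the pixels needed at a given DPI and how many 2x passes separate a base image from that size; the function names are hypothetical, and the 1024x1536 base in the note below is an illustrative 2:3 example matching a 24x36-inch poster.

```python
import math


def print_pixels(width_in: float, height_in: float, dpi: int = 300) -> tuple[int, int]:
    """Pixel dimensions needed to print at the given physical size and DPI."""
    return math.ceil(width_in * dpi), math.ceil(height_in * dpi)


def doubling_passes(base: tuple[int, int], target: tuple[int, int]) -> int:
    """Number of 2x upscale passes needed so both sides reach the target."""
    factor = max(target[0] / base[0], target[1] / base[1])
    return max(0, math.ceil(math.log2(factor)))
```

`print_pixels(24, 36)` returns (7200, 10800), and reaching that from a 1024x1536 base takes 3 doubling passes (an overall factor of about 7x), which is why a progressive approach matters for print work.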

Product Photography Enhancement

Product photography for e-commerce requires extreme detail showing texture, material quality, and fine features. AI-generated product images often need upscaling to match the detail expectations of professional product photography.

QWEN prompts can specify exact material properties like "smooth glass surface with subtle reflections," "woven fabric with visible individual threads," or "brushed metal with directional grain." The low noise experts generate these specific textures during upscaling.

For more information on AI-generated product photography workflows, see our comprehensive guide on ComfyUI for product photography.

Architectural Visualization Detail Enhancement

Architectural renders require crisp details showing building materials, surface textures, and environmental context. Base generation at reasonable resolutions followed by low noise upscaling produces visualization quality suitable for client presentations and marketing materials.

Specify prompts like "brick facade with visible mortar lines and texture variation," "glass windows with subtle reflections and transparency," "concrete surface with realistic texture." These guide detail generation that looks like professional architectural photography rather than AI-generated approximations.

Character and Concept Art Production

Artists creating character designs and concept art benefit from starting with AI-assisted generation then upscaling to high resolution for detailed manual refinement. Wan 2.2 low noise provides the detail foundation that makes manual enhancement practical.

Generate your concept at base resolution with composition and style established. Upscale using low noise refinement with detailed material and texture prompts. Export at 4K for importing into Photoshop or other painting tools for final artistic refinement.

This hybrid workflow combines AI speed with human artistic control. While platforms like Apatero.com offer complete solutions from generation to final output, ComfyUI workflows with Wan 2.2 give artists maximum control over every stage of the process. For complete video generation workflows, see our Wan 2.2 ComfyUI video generation guide. For consistent characters across your upscaled images, check our character consistency guide.

Troubleshooting Common Upscaling Issues

Even with proper setup, certain problems commonly appear when working with QWEN and Wan 2.2 upscaling workflows. Here's how to diagnose and fix frequent issues.

Over-Sharpening and Artificial Appearance

If upscaled images look artificially sharp with haloing around edges, several factors typically contribute to this problem.

CFG Scale Too High: Classifier-Free Guidance above 9.0 often produces over-sharpened results with the low noise models. Reduce CFG to 6.0-7.5 for a more natural appearance while maintaining detail quality.

Insufficient Low Noise Steps: Ironically, too few steps during low noise refinement can cause the model to add details aggressively in the limited steps available. Increase low noise sampling steps to 30-40 to allow gentler detail accumulation.

Missing Negative Prompt Guidance: Without negative prompts specifying "over-sharpened, artificial sharpening, haloing," the model may naturally tend toward excessive sharpness. Add comprehensive negative prompts as described in the prompt engineering section.

Detail Inconsistency Across Image Regions

When some areas of your upscaled image show beautiful detail while other areas remain soft or muddied, this indicates conditioning or attention problems.

Uneven Image Conditioning: If your base image has varying quality across regions, the low noise experts may struggle to add consistent detail. Try upscaling from a higher quality base or using face detailer nodes to pre-enhance critical regions before full upscaling.

Attention Distribution Issues: Complex compositions with multiple subjects sometimes cause attention mechanisms to focus detail generation on certain regions while neglecting others. Use attention weighting in prompts to specify which elements deserve detail emphasis.

Color Shift or Saturation Changes

Upscaled images sometimes show different colors or saturation compared to the base image, indicating VAE or conditioning problems.

VAE Mismatch: Ensure you're using the Wan 2.1 VAE specifically designed for these models. Other VAE implementations may encode colors differently, causing shifts during the upscaling process.

Conditioning Strength Too Low: If conditioning strength drops below 0.5, the upscaling process becomes more like new generation than upscaling, allowing colors to drift. Increase conditioning strength to 0.7-0.8 to maintain color fidelity.

Advanced Techniques for Professional Results

Once you master basic upscaling workflows, these advanced techniques push quality to professional levels.

Multi-Pass Detail Refinement

Instead of single-pass upscaling, use multiple refinement passes with different prompt focuses for each pass.

First pass focuses on structure and major details with prompts emphasizing composition and primary features. Second pass targets specific material textures with highly detailed material descriptions. Third pass can focus on lighting and subtle surface interactions.

This multi-pass approach gives you granular control over different aspects of detail generation rather than asking one pass to handle everything simultaneously.

Combining LoRAs for Style and Detail Control

Load style LoRAs alongside the low noise expert models to maintain specific aesthetic characteristics during upscaling. Photography style LoRAs, artistic style LoRAs, or technical quality LoRAs all influence how the low noise experts generate details.

A photorealism LoRA guides detail generation toward photographic characteristics. An illustration LoRA maintains illustrative style while increasing resolution. This combination approach maintains style consistency while adding resolution-appropriate detail.

Selective Regional Upscaling with Masks

For images where only specific regions require extreme detail, use masking to apply low noise refinement selectively.

Generate masks isolating faces, key objects, or critical details. Apply high-intensity low noise upscaling to masked regions while using faster, simpler upscaling on backgrounds and less important areas. This selective approach saves generation time while ensuring critical regions receive maximum detail attention.
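Under the hood, regional upscaling ends with a masked blend: each output pixel mixes the refined and base versions in proportion to the mask. The pure-Python sketch below shows that formula on single-channel grids; `masked_blend` is a hypothetical name, real workflows do the same with image tensors (for example through ComfyUI's compositing nodes), and a feathered mask with soft edges avoids visible seams.

```python
Grid = list[list[float]]


def masked_blend(base: Grid, refined: Grid, mask: Grid) -> Grid:
    """Per pixel: mask * refined + (1 - mask) * base, with mask values in [0, 1]."""
    if not (len(base) == len(refined) == len(mask)):
        raise ValueError("grids must have the same height")
    out = []
    for row_b, row_r, row_m in zip(base, refined, mask):
        if not (len(row_b) == len(row_r) == len(row_m)):
            raise ValueError("grids must have the same width")
        out.append([m * r + (1.0 - m) * b for b, r, m in zip(row_b, row_r, row_m)])
    return out
```

A mask value of 1 takes the fully refined pixel, 0 keeps the base, and 0.5 averages the two, which is what a soft mask edge does across its falloff.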

The Future of AI Image Upscaling

Wan 2.2's MoE architecture with separated high noise and low noise experts represents an important evolution in how AI handles image generation at different quality levels.

According to analysis from computer vision researchers documenting advances in diffusion model architectures, specialized expert models for different generation phases consistently outperform unified models when evaluated on detail quality metrics. This suggests future development will likely emphasize even more specialized expert systems.

Combining text understanding models like QWEN with specialized generation models creates flexible pipelines where each component focuses on its strengths. QWEN handles prompt comprehension and enhancement. High noise experts establish structure. Low noise experts refine details. This modular approach enables optimization of each component independently.

For creators working in ComfyUI, understanding and implementing these modern techniques provides access to professional-quality results that would have required expensive commercial tools or manual artistic work just months ago.

Getting Started with Wan 2.2 Upscale Today

All components for Wan 2.2 upscale workflows are openly available now. Download Wan 2.2 models from the official Hugging Face repository and QWEN models from the Qwen organization on Hugging Face. Their licenses generally permit commercial use, but terms differ between QWEN model sizes, so check each model card.

Start with simple Wan 2.2 upscale workflows using base resolution images and moderate target resolutions around 2K. Master the basics of high noise versus low noise step allocation, CFG scale tuning, and prompt engineering for Wan 2.2 upscale detail control. Gradually expand to higher resolutions and more complex multi-pass refinement workflows.

The combination of QWEN's prompt understanding with Wan 2.2 upscale specialized low noise experts delivers upscaling quality that rivals or exceeds commercial solutions while giving you complete workflow control. For anyone generating AI images that need professional output quality, mastering Wan 2.2 upscale represents an essential skill worth developing. For faster generation speeds, explore TeaCache and SageAttention optimization. To train custom LoRAs for your specific styles, see our FLUX LoRA training guide. If you're new to AI image generation, start with our getting started guide.

Frequently Asked Questions

How much VRAM do I need for QWEN and Wan 2.2 upscaling?

Minimum 12GB VRAM for basic 2K upscaling workflows with standard models. For 4K generation, 16GB VRAM is recommended without optimizations, or 12GB with GGUF quantization and tiling enabled. Enterprise 4K workflows benefit from 24GB+ VRAM for full quality without compromises.

Can I use QWEN and Wan 2.2 for commercial projects?

Yes, both QWEN and Wan 2.2 are released under open licenses permitting commercial use. Verify the specific license terms on their respective Hugging Face repositories, but commercial applications are generally allowed. Always check current licensing for your specific use case and jurisdiction.

How does Wan 2.2 low noise upscaling compare to ESRGAN?

Wan 2.2 low noise understands content through QWEN prompts and generates semantically appropriate details, excelling with AI-generated images. ESRGAN is faster (seconds vs 30-60 seconds) with lower VRAM requirements but lacks prompt-guided control and struggles with non-photographic AI content. For AI-generated images, Wan 2.2 produces superior results.

What is the Mixture of Experts (MoE) architecture in Wan 2.2?

Wan 2.2's MoE architecture uses separate expert models for high noise (structure generation) and low noise (detail refinement) phases. This specialization improves detail quality by 40-60% compared to unified models. High noise experts establish composition while low noise experts add fine textures and surface details.

Can I upscale images larger than 4K with this workflow?

Yes, using progressive upscaling and tiled generation. Generate base at 1024x1024, upscale to 2K, then to 4K, and continue to 8K if needed. Tiled VAE decode allows arbitrary resolutions by processing overlapping sections independently. Generation time scales proportionally with resolution.

Why do my upscaled images look over-sharpened or artificial?

CFG scale above 9.0 often produces over-sharpening with low noise models. Reduce to 6.0-7.5 for more natural appearance. Insufficient low noise steps (under 20) causes aggressive detail addition. Include "over-sharpened, artificial sharpening, haloing" in negative prompts to prevent these artifacts.

What prompt engineering techniques work best for upscaling?

Specify exact material and texture descriptions ("distressed brown leather with visible grain texture") rather than generic terms. Include lighting and surface interaction descriptions ("soft highlight on cheekbone, subtle subsurface scattering"). Use negative prompts to avoid common artifacts. Selective detail emphasis with attention syntax highlights important areas.

How long does a typical 4K upscale take with QWEN and Wan 2.2?

30-60 seconds per image on 24GB VRAM hardware using float16 models with standard settings. Progressive upscaling (1K→2K→4K) takes 2-4 minutes total across multiple passes. GGUF quantization reduces generation time by 20-30% with minimal quality loss. Batch processing amortizes loading time across multiple images.

Can I combine Wan 2.2 upscaling with other enhancement techniques?

Yes, Wan 2.2 low noise works well with face detailers, LoRAs for style control, and post-processing upscalers. Generate with low noise refinement first, then apply targeted face enhancement, or upscale to 2K with Wan 2.2, then use Real-ESRGAN for a final resolution boost. Combining techniques plays to each tool's strengths.

What's the difference between float32, float16, and GGUF models?

Float32 is full precision, storing each weight in 4 bytes, and is rarely needed for inference. Float16 halves the size with an imperceptible quality difference. The fp8 scaled files used in this guide halve it again, and GGUF quantization reduces requirements further (about 8-10GB VRAM at Q4_K_M for the 14B experts) with minimal visual impact. For standard workflows, float16 or fp8 provides the best balance.
