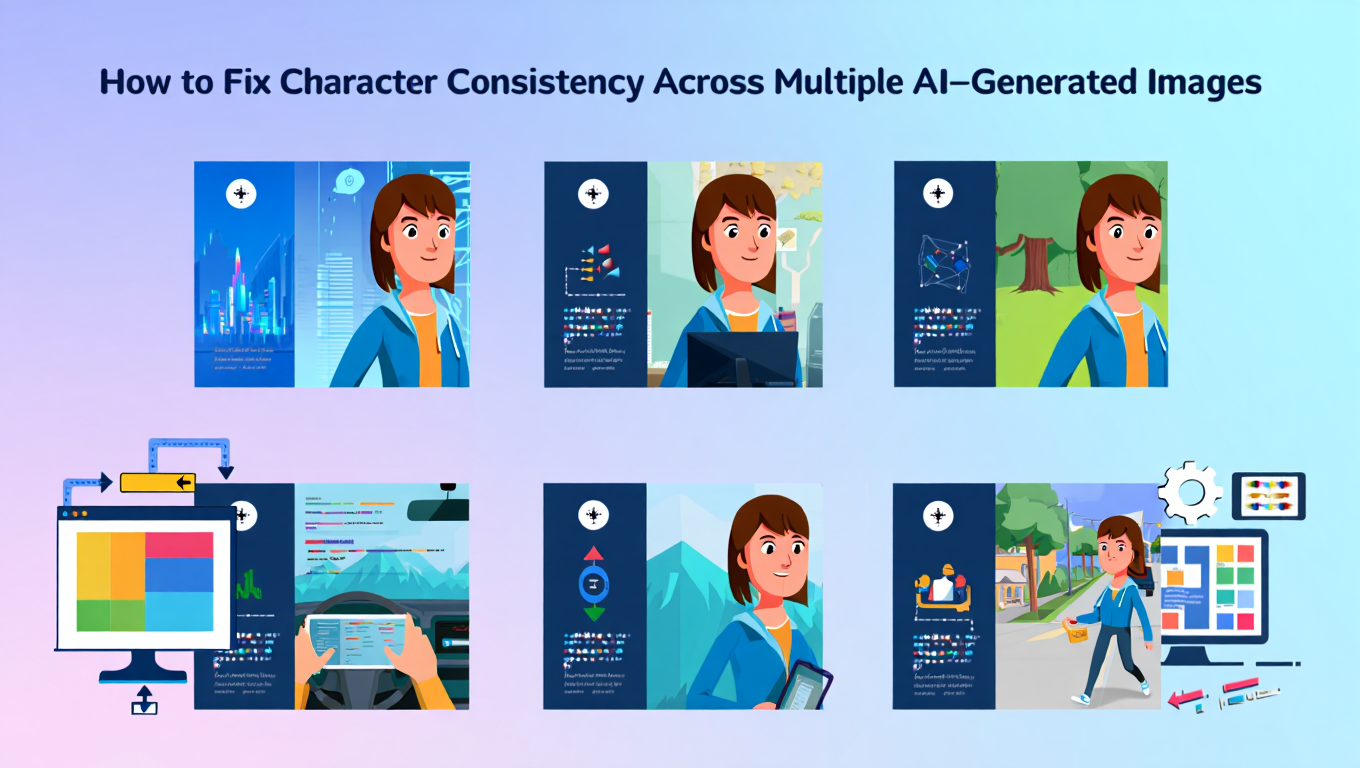

How to Fix Character Consistency Across Multiple AI-Generated Images

Master character consistency in Stable Diffusion and Flux using IP-Adapter, LoRA training, reference images, and proven workflow techniques

You've generated the perfect character. The face, style, and details are exactly what you envisioned. Now you need twenty more images of this same character in different poses, outfits, and settings. You regenerate with similar prompts and get twenty completely different people. Hair color shifts between images. Face shape morphs from angular to rounded. Eye color can't decide between blue and green. Maintaining character consistency across multiple AI-generated images frustrates countless creators working on comics, visual novels, social media content, and any project that needs a recurring character. Solving it is essential for professional AI-generated content.

The root cause lies in how diffusion models work. Each generation starts from random noise with no memory of previous generations. When you prompt for "woman with red hair," the model samples from its training distribution of all the ways that prompt could be interpreted. Sometimes you get auburn, sometimes cherry, sometimes strawberry blonde. The model isn't being inconsistent; it's being generative, and that is exactly what causes the consistency problem. Solving it requires techniques that constrain the model's interpretation to a specific identity rather than letting it sample from the general distribution. For AI image generation fundamentals, see our complete beginner's guide.

Why Character Consistency Fails

Understanding the underlying mechanisms helps you choose the right solution for your situation. These challenges affect all diffusion models.

The Generation Process

Diffusion models generate images by iteratively denoising random noise toward a distribution matching your text prompt. Each generation begins from completely different random noise and follows a different denoising path to reach the final image.

When you specify "a woman with shoulder-length auburn hair and green eyes," the model interprets these words against its learned associations from training data. But training data contains thousands of variations of "auburn hair" and "green eyes." Each generation samples from this distribution differently.

The model has no concept of a specific person. It understands attributes like hair color, eye color, and face shape as distributions rather than specific values. Every generation draws from these distributions, producing a plausible interpretation that varies from other equally plausible interpretations.

The Seed Misconception

Many users believe using the same random seed produces the same character. This is partially true but fundamentally limited.

Identical seed with identical prompt produces identical output. But this only works for exact repetition. Change the prompt at all, like shifting from "standing" to "sitting," and the same seed produces a completely different face.

Seeds control the random noise pattern that begins generation, not the identity of subjects within that generation. Different prompts create different denoising paths from that noise, leading to different results even with matching seeds.

Seeds provide reproducibility for specific generations but not character consistency across different prompts and scenarios.
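A deliberately tiny toy model makes this concrete. It is not a diffusion model. `initial_noise`, `prompt_target`, and `toy_generate` are hypothetical stand-ins, and the sketch assumes only two things: the seed fixes the starting noise, and the prompt influences every denoising step.

```python
import hashlib
import random


def initial_noise(seed: int, size: int = 4) -> list[float]:
    """The seed only fixes the starting noise pattern."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def prompt_target(prompt: str, size: int = 4) -> list[float]:
    """Toy stand-in for text conditioning: a fixed vector derived from the prompt."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [byte / 127.5 - 1.0 for byte in digest[:size]]


def toy_generate(seed: int, prompt: str, steps: int = 10) -> list[float]:
    """Nudge the noise a little toward the prompt target at every step.

    The prompt acts on every step, so changing one word changes the whole
    path even though the starting noise is identical.
    """
    x = initial_noise(seed)
    target = prompt_target(prompt)
    for _ in range(steps):
        x = [xi + 0.1 * (ti - xi) for xi, ti in zip(x, target)]
    return x


standing_a = toy_generate(42, "woman with auburn hair, standing")
standing_b = toy_generate(42, "woman with auburn hair, standing")
sitting = toy_generate(42, "woman with auburn hair, sitting")
print(standing_a == standing_b)  # same seed, same prompt
print(standing_a == sitting)     # same seed, different prompt
```

The seed reproduces the starting point exactly. Swapping "standing" for "sitting" still sends the result somewhere else.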

Prompt Sensitivity

Natural language prompts introduce variation by design. Two prompts that seem equivalent activate different model weights and produce different results.

"A woman with long blonde hair" and "blonde woman with long hair" are semantically similar but linguistically different. Word order, phrasing, and emphasis all affect generation. The same character description phrased slightly differently yields different faces.

This linguistic sensitivity makes pure prompt-based consistency nearly impossible. You would need to maintain exactly identical phrasing while changing only scenario elements, and even then variations occur because the scenario context affects the entire generation.

IP-Adapter for Immediate Character Consistency

IP-Adapter provides the fastest path to character consistency, with no training required. It extracts visual features from reference images and injects them into the generation process. For ComfyUI setup, see our essential nodes guide.

How IP-Adapter Works

IP-Adapter uses a CLIP image encoder to create embeddings from your reference image. These embeddings capture visual features like facial structure, coloring, and style. The adapter then transforms these embeddings to condition the diffusion process alongside text embeddings.

The result is that generated images inherit characteristics from your reference image without copying it directly. You get images that share facial features and coloring with your reference while following the prompt for pose, setting, and other elements.

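Different IP-Adapter models target different features. The FaceID variants replace the CLIP image embedding with a face-recognition embedding computed with InsightFace, and the FaceID Plus variants add CLIP image features on top. These variants extract facial identity specifically and work best for character consistency. Generic models capture broader visual style and work better for style transfer.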
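The IP-Adapter paper describes this injection as decoupled cross-attention. The image embedding gets its own key and value projections, and that attention output is added to the ordinary text cross-attention output after being multiplied by a scale. The pure-Python sketch below shows that sum for a single query vector, using made-up toy vectors. Reading a node's weight as this scale is a simplification, since real nodes may also vary the weight across layers or sampling steps.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    return [sum(w * value[i] for w, value in zip(weights, values)) for i in range(len(values[0]))]


def decoupled_cross_attention(query, text_keys, text_values, image_keys, image_values, ip_scale):
    """Text attention plus a separately projected image attention, scaled by ip_scale."""
    text_out = attend(query, text_keys, text_values)
    image_out = attend(query, image_keys, image_values)
    return [t + ip_scale * i for t, i in zip(text_out, image_out)]


# Toy 2-dimensional features standing in for projected prompt and reference-image tokens.
query = [1.0, 0.5]
text_keys, text_values = [[1.0, 0.0], [0.0, 1.0]], [[0.2, 0.1], [0.4, 0.3]]
image_keys, image_values = [[0.5, 0.5]], [[1.0, -1.0]]

no_reference = decoupled_cross_attention(query, text_keys, text_values, image_keys, image_values, 0.0)
with_reference = decoupled_cross_attention(query, text_keys, text_values, image_keys, image_values, 0.8)
print(no_reference, with_reference)
```

With a scale of 0 the output is exactly the text-only attention. Raising the scale shifts the output toward the reference features, which is why high weights can overpower the prompt.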

Setting Up IP-Adapter in ComfyUI

Install IP-Adapter through ComfyUI Manager or manually from the GitHub repository. You need both the node pack and appropriate model weights.

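For character consistency, download IP-Adapter-FaceID models. These are specifically trained to extract and preserve facial identity. They are released for SD1.5 and SDXL, and they need the InsightFace library installed to detect and embed faces: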

# Download these models to your models/ipadapter folder

IP-Adapter-FaceID-PlusV2.bin

IP-Adapter-FaceID-Plus.bin

IP-Adapter-FaceID.bin

Build a workflow with these components:

- Load your base model (SD1.5 or SDXL, matching the FaceID weights you downloaded)

- Load IP-Adapter FaceID model

- Encode your text prompt with CLIP

- Load your reference image

- Apply IP-Adapter to inject reference features

- Send to KSampler for generation

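The IP-Adapter node sits between the model loader and the KSampler. Rather than modifying the text conditioning, it patches the model so its cross-attention layers also attend to the reference image features. The prompt conditioning still goes to the KSampler as usual.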

Optimal IP-Adapter Settings

The weight parameter controls how strongly the reference influences generation. For character consistency:

Close-up portraits: weight 0.8-0.9. Strong influence keeps facial features clear when the face is the focus.

Medium shots: weight 0.7-0.85. Balances reference features against prompt elements.

Full body or action shots: weight 0.5-0.7. Lower weight allows more prompt control when the face is small in the frame.

Start with moderate weights and adjust based on results. Too high and the reference overpowers your prompt; too low and consistency weakens.
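One way to apply "start moderate, then adjust" is to begin at the midpoint of each range and queue a small sweep across it. The sketch below encodes the ranges above. The shot-type keys and helper names are hypothetical.

```python
# Ranges from the guidance above; the shot-type keys are hypothetical labels.
WEIGHT_RANGES = {
    "close_up": (0.8, 0.9),
    "medium": (0.7, 0.85),
    "full_body": (0.5, 0.7),
}


def starting_weight(shot: str) -> float:
    """Midpoint of the suggested range: a moderate first value to try."""
    low, high = WEIGHT_RANGES[shot]
    return round((low + high) / 2, 3)


def weight_sweep(shot: str, count: int = 3) -> list[float]:
    """Evenly spaced weights across the range, for a small comparison batch."""
    low, high = WEIGHT_RANGES[shot]
    if count < 2:
        return [starting_weight(shot)]
    step = (high - low) / (count - 1)
    return [round(low + i * step, 3) for i in range(count)]


print(starting_weight("medium"))
print(weight_sweep("full_body"))
```

Generating the same prompt and seed at each swept weight isolates the weight's effect.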

Best Practices for IP-Adapter Character Work

Use multiple reference images when possible. IP-Adapter can combine features from several images, which usually gives more robust consistency than a single reference:

# Load multiple references

reference_images = [face_front, face_side, face_angle]

# The node combines their embeddings, for example by averaging them
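To see what averaging does, here is a minimal sketch with hypothetical embedding vectors. `face_front` and the others are made-up numbers, not real encoder output. Whether a given IP-Adapter node averages references or combines them another way depends on the node and its settings; this shows only the averaging case.

```python
import math


def average_embeddings(embeddings: list[list[float]]) -> list[float]:
    """Element-wise mean of several reference embeddings."""
    count = len(embeddings)
    return [sum(column) / count for column in zip(*embeddings)]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical 3-dimensional embeddings; real encoders output hundreds of dimensions.
face_front = [0.9, 0.1, 0.3]
face_side = [0.7, 0.3, 0.2]
face_angle = [0.8, 0.2, 0.4]

combined = average_embeddings([face_front, face_side, face_angle])
for name, ref in [("front", face_front), ("side", face_side), ("angle", face_angle)]:
    print(name, round(cosine_similarity(combined, ref), 3))
```

The averaged vector stays close to every view. That is the intuition behind using several references: quirks of any single photo, such as its lighting, get diluted.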

Choose clear reference images. Well-lit, front-facing photos with visible features work better than stylized or obscured images.

Match style between reference and target. Using a photographic reference when generating anime creates conflicts. Use style-matched references.

Maintain consistent negative prompts. Negative prompts affect how features are interpreted. Inconsistent negatives cause feature drift.

IP-Adapter Limitations

IP-Adapter preserves visual features but doesn't understand character semantics. FaceID models encode only the face, so they can't keep an outfit consistent. No image embedding can carry non-visual traits such as a character's backstory.

Strong weights can overwhelm prompt instructions. If your prompt requests different lighting but the reference has harsh shadows, results may maintain those shadows.

Multiple characters in one scene is challenging. IP-Adapter works best for single subject consistency. Each additional character requires separate IP-Adapter application with regional control.

Training Character LoRAs for Maximum Consistency

Character LoRAs provide the strongest consistency but require upfront investment in training. The LoRA learns your specific character's features across multiple images and contexts.

Why LoRAs Achieve Better Consistency

A trained LoRA modifies model weights to bias generation toward your character's specific features. When activated, the model "knows" your character rather than interpreting a description.

The LoRA learns from multiple training images showing your character in different lighting, angles, and expressions. It understands that these variations all represent the same character. During generation, it applies this understanding to new prompts automatically.

This learned understanding produces more natural results than reference-based methods. The character integrates properly into new scenes because the model understands the character, not just the reference image.
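The weight modification itself is compact. In the standard LoRA formulation, a layer's frozen weight W receives a low-rank update (up × down) scaled by alpha / rank, and the strength you set in a loader multiplies that update. The pure-Python sketch below uses made-up 2x2 numbers. The `lora_down`/`lora_up` names follow the key naming in Kohya-style LoRA files; the values are hypothetical.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_lora(weight, lora_down, lora_up, alpha: float, strength: float):
    """Return W + strength * (alpha / rank) * (lora_up @ lora_down).

    weight:    d_out x d_in base matrix, frozen during LoRA training
    lora_down: rank x d_in  ("A" in the LoRA paper)
    lora_up:   d_out x rank ("B" in the LoRA paper)
    """
    rank = len(lora_down)
    delta = matmul(lora_up, lora_down)
    scale = strength * alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


base = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0, 0.0]]      # rank 1
up = [[0.5], [0.0]]
patched = apply_lora(base, down, up, alpha=1.0, strength=0.8)
unchanged = apply_lora(base, down, up, alpha=1.0, strength=0.0)
print(patched)
```

At strength 0 the base weights come back unchanged, so lowering the loader strength smoothly weakens the character's influence.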

Training Requirements

Images: 10-20 images showing variety in pose, expression, lighting, and angle. Quality and variety matter more than quantity. Diverse images teach the LoRA to generalize.

Consistency in training data: While you want variety in pose and lighting, the actual character features should be consistent. If training images have inconsistent details (different eye colors, for example), the LoRA learns that inconsistency.

Captions: Each image needs a caption including your trigger word plus description of what's in that specific image:

ohwxperson, a woman with auburn hair, sitting at a cafe table, natural window lighting

ohwxperson, a woman with auburn hair, portrait with studio lighting, neutral background

ohwxperson, a woman with auburn hair, standing in a park, sunlight through trees
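Keeping the trigger word and identity text identical across captions is easy to automate. Kohya-style trainers commonly read each caption from a text file that shares the image's name; check your trainer's caption-extension setting. The sketch below assumes `.txt`, and the image file names are hypothetical.

```python
import tempfile
from pathlib import Path

TRIGGER = "ohwxperson"
IDENTITY = "a woman with auburn hair"


def build_caption(scene: str) -> str:
    """Trigger word, fixed identity description, then what varies in this image."""
    return f"{TRIGGER}, {IDENTITY}, {scene}"


def write_captions(image_dir: Path, scenes: dict[str, str]) -> list[Path]:
    """Write a .txt caption next to each image, sharing the image's file stem."""
    written = []
    for image_name, scene in scenes.items():
        caption_path = image_dir / (Path(image_name).stem + ".txt")
        caption_path.write_text(build_caption(scene) + "\n", encoding="utf-8")
        written.append(caption_path)
    return written


# Hypothetical file names; only the caption files are created here.
scenes = {
    "img_001.png": "sitting at a cafe table, natural window lighting",
    "img_002.png": "portrait with studio lighting, neutral background",
}
with tempfile.TemporaryDirectory() as tmp:
    paths = write_captions(Path(tmp), scenes)
    first_caption = paths[0].read_text(encoding="utf-8").strip()

print(first_caption)
```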

Training Configuration

Use Kohya SS or similar tools. Configure for character LoRA training:

# Network configuration

network_dim: 16 # Lower rank for characters

network_alpha: 16

# Training parameters

learning_rate: 1e-4

max_train_steps: 1000 # Characters need fewer steps

# Resolution: 512 suits SD1.5; SDXL and Flux LoRAs are normally trained at 1024

resolution: "512,512"

# Memory optimization for consumer GPUs

gradient_checkpointing: true

mixed_precision: "bf16" # needs an RTX 30-series (Ampere) or newer GPU; use "fp16" on older cards

Character LoRAs typically need a lower rank than style LoRAs, because a single identity takes less capacity to capture than a broad style. Higher ranks can overfit to the training images rather than learning generalizable features.
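Two quick calculations help sanity-check the config above. First, LoRA adds rank × (d_in + d_out) parameters per adapted layer, so rank directly sets capacity. Second, with Kohya-style folder repeats (a folder named like `10_ohwxperson` repeats each image 10 times per epoch), `max_train_steps` translates into a number of passes over your data. The layer size and dataset numbers below are hypothetical, and bucketing or regularization images will change the step counts.

```python
import math


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one linear layer: rank * (d_in + d_out)."""
    return rank * (d_in + d_out)


def steps_per_epoch(num_images: int, repeats: int, batch_size: int) -> int:
    """Optimizer steps in one pass over the repeated dataset (ignores bucketing)."""
    return math.ceil(num_images * repeats / batch_size)


def epochs_for(max_train_steps: int, num_images: int, repeats: int, batch_size: int) -> float:
    """How many passes over the data max_train_steps corresponds to."""
    return max_train_steps / steps_per_epoch(num_images, repeats, batch_size)


# A hypothetical 1280x1280 projection layer at the config's rank of 16.
print(lora_params(1280, 1280, 16), 1280 * 1280)
# A hypothetical 15-image dataset, 10 repeats, batch size 1, max_train_steps 1000.
print(steps_per_epoch(15, 10, 1), round(epochs_for(1000, 15, 10, 1), 2))
```

At rank 16, the adapter for that layer is 2.5% of the layer's full parameter count. That is the budget the text recommends keeping small for a single character.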

Using Your Character LoRA

Load the trained LoRA in your workflow with weight 0.7-0.9:

# ComfyUI LoraLoader node inputs

lora_name = "ohwxperson.safetensors"

strength_model = 0.8

strength_clip = 0.8

Include your trigger word in prompts to activate the character:

ohwxperson standing in a forest, natural lighting, full body shot

Vary other prompt elements freely. The LoRA handles character consistency while you control everything else through prompting.

Combining LoRA with IP-Adapter

For maximum consistency, use both together. The LoRA provides learned character understanding while IP-Adapter reinforces visual features from a reference.

Set moderate weights for both:

- LoRA strength: 0.6-0.7

- IP-Adapter weight: 0.5-0.6

These moderate values let both techniques contribute without fighting each other. The combination handles edge cases that either technique alone might miss.

Prompt Engineering for Consistency

Even without IP-Adapter or LoRAs, prompt engineering noticeably narrows variation, though it cannot guarantee consistency on its own.

Detailed Feature Specifications

Vague descriptions allow variation. Constrain interpretation with specific details:

Vague (inconsistent):

a woman with brown hair

Specific (more consistent):


a woman with shoulder-length chestnut brown wavy hair parted on the left side, heart-shaped face with a small nose and full lips, green eyes with slight upturn at corners, light skin with subtle freckles across the nose and cheeks

The detailed description constrains the model's interpretation to a narrower range. Each specified feature removes one source of variation.

Prompt Templates

Create a template for your character that you use verbatim in every prompt, changing only scenario elements:

[SCENARIO], a 25-year-old woman with shoulder-length auburn wavy hair, green eyes, heart-shaped face, light freckles, wearing [OUTFIT], [LIGHTING]

Fill in scenario, outfit, and lighting while keeping character description identical. Exact repetition of feature descriptions produces more consistent results than varied phrasings.
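A small helper guarantees that verbatim repetition, because the character text lives in one place and only the slots change. The sketch below uses Python's `string.Template`. The `build_prompt` name and the example slot values are hypothetical.

```python
from string import Template

# Fixed identity text, repeated verbatim in every prompt.
CHARACTER = (
    "a 25-year-old woman with shoulder-length auburn wavy hair, "
    "green eyes, heart-shaped face, light freckles"
)
PROMPT_TEMPLATE = Template("$scenario, " + CHARACTER + ", wearing $outfit, $lighting")


def build_prompt(scenario: str, outfit: str, lighting: str) -> str:
    """Fill only the scenario slots; substitute() raises KeyError if one is missing."""
    return PROMPT_TEMPLATE.substitute(scenario=scenario, outfit=outfit, lighting=lighting)


prompt = build_prompt("standing in a forest", "a green raincoat", "overcast daylight")
print(prompt)
```

Because `substitute()` fails loudly on a missing slot, a typo in a batch script stops the run instead of silently producing a prompt without the outfit or lighting.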

Consistent Negative Prompts

Negative prompts affect character appearance significantly. Create a standard negative prompt and keep it constant:

deformed, bad anatomy, wrong eye color, multiple people, poorly drawn face, blurry

Adding or removing negative terms changes how positive prompts are interpreted. Lock down your negative prompt early.

Emphasis Syntax

Use emphasis to prioritize character features over scenario elements:

(auburn wavy hair:1.3), (green eyes:1.2), woman standing in a forest

Parentheses with weights increase emphasis on those features. Higher weights mean stronger influence on generation.
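The `(text:weight)` syntax is understood by both AUTOMATIC1111 and ComfyUI prompt parsing. If you store feature weights alongside your template, a hypothetical helper like the one below renders them consistently every time.

```python
def emphasize(features: dict[str, float], rest: str) -> str:
    """Render features as (text:weight), leaving weight-1.0 features unwrapped."""
    parts = []
    for text, weight in features.items():
        parts.append(text if weight == 1.0 else f"({text}:{weight:g})")
    parts.append(rest)
    return ", ".join(parts)


print(emphasize({"auburn wavy hair": 1.3, "green eyes": 1.2}, "woman standing in a forest"))
```

This reproduces the example prompt above exactly.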

Reference-Based Techniques

Image-to-image workflows provide another consistency mechanism.

Img2Img with Low Denoising

Use a previous generation as input with low denoising strength (0.3-0.4):

input_image = previous_character_image

denoise_strength = 0.35

prompt = "the woman sitting in a chair, soft lighting"

Low denoising preserves much of the input including facial features while the prompt guides changes to pose and setting. Higher denoising allows more change but risks losing character consistency.

This works well for pose variations from a base image. Generate one strong character reference, then use it as input for variations.
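Denoising strength maps onto the sampling schedule. In the diffusers img2img pipelines, the input image is noised to an intermediate level and only the last `int(steps * strength)` steps run, so low strength leaves most of the original structure in place. The sketch below follows that convention. ComfyUI's KSampler applies its denoise value differently: it still runs the full step count, but over a shortened schedule. Treat this as an illustration of the idea rather than of every tool.

```python
def img2img_schedule(num_inference_steps: int, strength: float) -> tuple[int, int]:
    """Return (steps_skipped, steps_run) for a given denoising strength.

    Follows the diffusers img2img convention: the input image is noised to the
    level of the first step that will run, and only the last
    int(num_inference_steps * strength) steps are executed.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    return num_inference_steps - steps_run, steps_run


print(img2img_schedule(30, 0.35))  # the article's suggested strength
print(img2img_schedule(30, 0.75))  # a high strength for comparison
```

At 0.35 only about a third of the schedule is re-run, which is why facial features survive while pose and setting can still shift.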

ControlNet with Reference Mode

ControlNet's reference-only mode uses a reference image to guide generation without requiring preprocessed control images. This provides another way to inject character features:

controlnet_module = "reference_only" # a preprocessor in the A1111 ControlNet extension; no ControlNet model file is needed

reference_image = character_base_image

reference_strength = 0.5

Combine reference mode with other ControlNet modes like OpenPose to control pose while reference handles appearance.

Inpainting for Corrections

When one image in a set has incorrect features, use inpainting rather than full regeneration:

- Mask the incorrect feature (wrong hair color, for example)

- Write a targeted inpainting prompt

- Generate with context-aware settings

The surrounding context anchors the inpainted area to match the rest of the image. This is faster than regenerating and more likely to maintain overall consistency.

Workflow Selection Guide

Different situations call for different techniques.

Comics and Sequential Art

For long-term character use across many images, invest in LoRA training. The upfront time pays off through unlimited consistent generations.

Supplement with IP-Adapter when you need specific expressions or angles from a reference panel. The combination provides both learned consistency and visual anchoring.

Use strict prompt templates to maintain outfit and prop consistency alongside character features.

Quick One-Off Projects

IP-Adapter requires no training and works immediately. Generate one good reference image, then use it for remaining project images.

Accept slightly lower consistency in exchange for faster workflow. For short projects, the time saved skipping training outweighs slightly more variation.

Professional Production

Combine all techniques for maximum reliability. Train a character LoRA for base consistency. Use IP-Adapter for shot-specific refinement. Employ strict prompt templates for uniformity.

This maximal approach costs more time but ensures the consistency level professional work requires.


Frequently Asked Questions

Can I achieve perfect consistency without training a LoRA?

Near-perfect consistency is achievable with IP-Adapter FaceID for close-up portraits. Full body shots and varied angles show more variation. For the most reliable consistency across all scenarios, train a LoRA.

How many images do I need for a character LoRA?

10-20 images with variety in pose, lighting, and expression. Quality and variety matter more than quantity. 50 similar images don't train better than 15 diverse ones.

Why does IP-Adapter make my character look too similar to the reference?

Weight is too high. Reduce to 0.5-0.6 for more variation while maintaining identity. Very high weights copy the reference rather than generating new images.

Can I use multiple characters in one scene consistently?

Yes, but it's challenging. Use separate IP-Adapter applications with regional prompting for each character. Or train a multi-character LoRA on images containing both characters together.

My character's outfit keeps changing. How do I fix this?

Character LoRAs trained on images with varied outfits learn to treat clothing as changeable, so they won't lock in a specific outfit. Use detailed outfit descriptions in every prompt, or create a separate outfit LoRA.

How do I maintain consistency between different art styles?

Very difficult. A realistic photo and anime version of the same character require either style transfer techniques or separate LoRAs for each style.

Can I extract a character from existing media?

Yes, with training data from the media. Gather 15-20 frames showing the character clearly, train a LoRA, then generate new images. Check copyright and licensing for your intended use.

Why does my character look right close-up but wrong in full body shots?

IP-Adapter face embedding focuses on facial features. In full body shots the face is small, which reduces the embedding's influence. Raise the weight toward the top of the full-body range or add detailed body descriptions.

How long does it take to train a character LoRA?

On an RTX 4090, training takes about 30-60 minutes. Preparing the dataset and captions adds 1-2 hours. Total project time is 2-4 hours including evaluation.

Conclusion

Character consistency in AI generation requires intentional technique rather than relying on prompts alone. Combining trained LoRAs for learned character understanding, IP-Adapter for visual feature reinforcement, and structured prompts for specification produces reliable results.

For ongoing characters you'll use repeatedly, invest in LoRA training. The upfront time pays off through unlimited consistent generations. For VRAM optimization during training, see our VRAM optimization guide.

For quick projects, IP-Adapter provides immediate consistency without training. Generate one good reference and use it throughout.

Always use detailed, consistent character descriptions. Even with a LoRA and IP-Adapter, specific language helps anchor features.

Test your setup early with varied poses and scenarios. Identify weaknesses before committing to a full project.

For users who want professional character consistency without mastering these technical systems, Apatero.com offers tools designed for maintaining characters across generations. You focus on creative direction while the platform handles the technical side.

Character consistency is solvable. With the right techniques for your situation, you can generate the same character reliably across any number of images.

Advanced Consistency Techniques

Beyond basic approaches, advanced techniques solve challenging consistency scenarios.

Multi-View LoRA Training

Train LoRAs specifically for multi-view consistency:

Training Data Strategy: Include views from multiple angles in training:

- Front face

- 3/4 profile (left and right)

- Side profile

- Back view (with distinctive hair/features)

Benefits: The LoRA learns to maintain identity across viewing angles, addressing one of the hardest consistency challenges.

Face Embedding Databases

Create reference databases for complex projects:

Setup:


- Generate multiple good character images

- Extract face embeddings from each

- Store as reference library

Usage: For each new generation, compare output embedding to library. Reject generations that deviate too far from stored references.

This automated quality control ensures consistency at scale.
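A reference library can be as small as a list of embeddings plus a similarity threshold. In the minimal sketch below, the `FaceLibrary` class is hypothetical and the three-number vectors are placeholders for real face-recognition embeddings (for example, from InsightFace). The 0.7 default comes from the metrics section later in this article and should be recalibrated for your embedding model. Comparing against the best-matching reference, rather than the average, is a design choice: it tolerates a new angle that resembles only one stored view.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class FaceLibrary:
    """Stores reference face embeddings for one character."""

    def __init__(self, threshold: float = 0.7):
        self.threshold = threshold
        self.references: list[list[float]] = []

    def add(self, embedding: list[float]) -> None:
        self.references.append(embedding)

    def best_match(self, embedding: list[float]) -> float:
        """Highest similarity between a new embedding and any stored reference."""
        if not self.references:
            raise ValueError("library is empty")
        return max(cosine_similarity(embedding, ref) for ref in self.references)

    def accepts(self, embedding: list[float]) -> bool:
        return self.best_match(embedding) >= self.threshold


library = FaceLibrary(threshold=0.7)
library.add([1.0, 0.0, 0.0])
library.add([0.9, 0.1, 0.0])
on_model = [0.95, 0.05, 0.0]
off_model = [0.0, 1.0, 0.0]
print(round(library.best_match(on_model), 3), library.accepts(on_model))
print(round(library.best_match(off_model), 3), library.accepts(off_model))
```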

Style-Preserved Character Transfer

Transfer your character to different art styles while maintaining identity:

Technique:

- Generate character in consistent base style

- Use style LoRA with reduced strength

- IP-Adapter maintains identity while style applies

Calibration:

- Character LoRA: 0.7-0.8

- Style LoRA: 0.3-0.4

- IP-Adapter: 0.5-0.6

This balance lets style transform the aesthetic while identity persists.

Integration with Production Workflows

Embed character consistency into professional production pipelines.

ComfyUI Workflow Templates

Create standardized workflows for character generation:

Basic Character Workflow:

Model Loader → LoRA Loader (character)

Reference Image → IP-Adapter

Prompt Template → CLIP Encode

All → KSampler → VAE Decode → Output

Save this as template for instant character generation setup.

For workflow fundamentals, see our essential nodes guide.

Batch Character Generation

Generate many consistent character images efficiently:

Strategy:

- Set up character consistency (LoRA + IP-Adapter)

- Create prompt list with scene variations

- Queue batch with locked character settings

- Generate overnight

For extensive batch processing, see our batch processing guide.
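A batch is easiest to keep consistent when the locked settings live in one place and only the scene varies. The sketch below builds a job list. The dictionary keys and file name are hypothetical, the strengths are midpoints of the combined LoRA and IP-Adapter ranges given earlier, and the negative prompt is the standard one from the prompt section. How you submit the jobs (ComfyUI's queue or your own script) is outside this sketch.

```python
import itertools
import json

# Settings that must not change between images (keys are hypothetical).
LOCKED = {
    "lora": "ohwxperson.safetensors",
    "lora_strength": 0.65,
    "ipadapter_weight": 0.55,
    "negative": "deformed, bad anatomy, wrong eye color, multiple people, poorly drawn face, blurry",
}
SCENES = ["standing in a forest", "sitting at a cafe table"]
LIGHTING = ["natural window lighting", "golden hour sunlight"]


def build_jobs(base_seed: int = 1000) -> list[dict]:
    """One job per scene/lighting combination, with sequential seeds for reproducibility."""
    jobs = []
    for index, (scene, lighting) in enumerate(itertools.product(SCENES, LIGHTING)):
        jobs.append({**LOCKED, "prompt": f"ohwxperson {scene}, {lighting}", "seed": base_seed + index})
    return jobs


jobs = build_jobs()
print(json.dumps(jobs[0], indent=2))
```

Recording the seed in each job means any image from an overnight run can be regenerated exactly.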

Quality Control Pipeline

Systematic quality control for character consistency:

Automated Checks:

- Generate image

- Face detection and embedding extraction

- Compare to reference embedding

- If similarity < threshold, regenerate

- Save accepted images with metadata

This ensures every output meets consistency standards.
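The checks above map onto a small retry loop. In the sketch below, `generate` and `embed` are hypothetical callables standing in for your generation pipeline and your face detector/recognizer. A `None` embedding means no face was found. The fake data at the bottom exists only to exercise the loop.

```python
import math
from typing import Callable, Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def generate_until_consistent(
    generate: Callable[[int], object],
    embed: Callable[[object], Optional[list[float]]],
    reference: list[float],
    threshold: float,
    seeds: list[int],
) -> dict:
    """Try seeds in order and stop at the first image whose face matches the reference."""
    attempts = []
    for seed in seeds:
        image = generate(seed)
        embedding = embed(image)
        if embedding is None:  # face detection found nothing
            attempts.append({"seed": seed, "score": None})
            continue
        score = cosine_similarity(embedding, reference)
        attempts.append({"seed": seed, "score": round(score, 3)})
        if score >= threshold:
            return {"accepted": True, "seed": seed, "image": image, "attempts": attempts}
    return {"accepted": False, "seed": None, "image": None, "attempts": attempts}


# Fake generator and embedder standing in for a real pipeline and face model.
FAKE_FACES = {1: None, 2: [0.2, 1.0], 3: [1.0, 0.1]}
result = generate_until_consistent(
    generate=lambda seed: {"seed": seed, "face": FAKE_FACES[seed]},
    embed=lambda image: image["face"],
    reference=[1.0, 0.0],
    threshold=0.7,
    seeds=[1, 2, 3],
)
print(result["seed"], result["attempts"])
```

The `attempts` list doubles as the metadata worth saving next to each accepted image.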

Platform and Model-Specific Considerations

Different platforms and models require different approaches.

Flux Character Consistency

Flux models respond well to:

- IP-Adapter-style reference conditioning (the FaceID weights target SD1.5 and SDXL, so use an adapter trained for Flux)

- Detailed prompts (Flux follows prompts closely)

- LoRA training (often reported to work with fewer images than SDXL)

Flux-Specific Tip: Because Flux follows prompts closely, detailed character descriptions in prompts contribute significantly to consistency.

SDXL Character Consistency

SDXL works best with:

- Trained character LoRAs

- IP-Adapter with appropriate SDXL weights

- Consistent negative prompts

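SDXL-Specific Tip: SDXL has two text encoders, and ComfyUI's CLIPTextEncodeSDXL node exposes a separate prompt field for each (text_g and text_l). Some users put character descriptions in one field and scene details in the other. Because both encoders' outputs condition the whole image, compare this against using the same full prompt in both fields.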

Video Generation Consistency

For video generation with consistent characters:

Frame-to-Frame Consistency: Video models like WAN 2.2 largely keep a character consistent within a single clip. The challenge is consistency across separate video clips.

Cross-Clip Consistency: Use IP-Adapter per clip with same reference. Train LoRA and use across all clips. Maintain identical prompts for character description.

For video generation workflows, see our WAN 2.2 guide.

Common Character Consistency Scenarios

Solutions for specific challenging situations.

Scenario: Character Aging

Generating same character at different ages:

Approach:

- Train base LoRA on current age

- Generate with age-modifying prompts

- IP-Adapter maintains core identity

- Accept that features naturally change with age

Example Prompts:

character_trigger, same person as a child, 8 years old, childhood photo

character_trigger, same person elderly, 70 years old, dignified aging

Scenario: Different Art Styles

Same character in realistic, anime, and cartoon styles:

Approach: Train separate LoRAs per style, OR use single LoRA with style modifiers:

character_trigger, photorealistic style, detailed skin texture

character_trigger, anime style, cel shaded, large eyes

character_trigger, pixar style, 3d rendered, stylized features

IP-Adapter helps maintain identity across style changes.

Scenario: Action Poses

Maintaining consistency during dynamic action:

Challenge: Fast motion, unusual angles, motion blur all reduce consistency.

Solutions:

- Keep IP-Adapter weight at the top of the full-body range (around 0.7) for action shots, where the face is small

- Include action poses in LoRA training data

- Describe character features explicitly in action prompts

Scenario: Group Scenes

Multiple consistent characters in single image:

Approach:

- Regional prompting for character placement

- Separate IP-Adapter per character per region

- Or train multi-character LoRA on images of both together

Complexity: This is one of the hardest consistency challenges. Consider compositing individual character images if regional approaches fail.

Measuring and Tracking Consistency

Quantify consistency to track improvement.

Consistency Metrics

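Face Similarity Score: Use face recognition embeddings to calculate the similarity (typically cosine similarity) between images. Scores above 0.7 indicate good consistency, though the right threshold depends on the embedding model, so calibrate it on pairs of your own reference images.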

Feature Checklist: Create checklist of distinctive features. Score each image on how many features are correct:

- Hair color correct

- Eye color correct

- Face shape correct

- Distinctive features present (freckles, scars, etc.)
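Scoring the checklist as a fraction makes images and batches directly comparable. The hypothetical helper below counts unmarked items as wrong, so a forgotten check lowers the score instead of inflating it.

```python
CHECKLIST = ["hair color", "eye color", "face shape", "distinctive features"]


def checklist_score(marks: dict[str, bool]) -> float:
    """Fraction of checklist items marked correct; unmarked items count as wrong."""
    return sum(bool(marks.get(item, False)) for item in CHECKLIST) / len(CHECKLIST)


image_07 = {"hair color": True, "eye color": True, "face shape": False, "distinctive features": True}
print(checklist_score(image_07))  # 0.75
```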

A/B Testing Approaches

Compare different techniques:

- Generate 20 images with technique A

- Generate 20 images with technique B

- Score each set on consistency metrics

- Use technique with better scores

This data-driven approach identifies what works best for your specific character.
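Comparing the two sets takes only a mean and a spread. The sketch below uses Python's `statistics` module. The scores are hypothetical checklist values, kept short here, whereas the text suggests 20 images per set. If the difference in means is smaller than the spread within each set, generate more images before deciding.

```python
from statistics import mean, stdev


def summarize(scores: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of one set's consistency scores."""
    return mean(scores), stdev(scores)


def pick_technique(scores_a: list[float], scores_b: list[float]) -> str:
    """Return the label of the set with the higher mean consistency score."""
    return "A" if mean(scores_a) >= mean(scores_b) else "B"


# Hypothetical checklist scores for two techniques.
ip_adapter_only = [0.75, 1.0, 0.75, 0.5, 1.0]
lora_plus_ip_adapter = [1.0, 1.0, 0.75, 1.0, 0.75]
print(summarize(ip_adapter_only), summarize(lora_plus_ip_adapter))
print(pick_technique(ip_adapter_only, lora_plus_ip_adapter))
```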

Progress Tracking

Track consistency improvement over project:

- Document technique settings used

- Score consistency for each batch

- Note what improved results

- Build knowledge base for future characters

Resources and Community

Further learning and community support for character consistency.

Tools and Extensions

ComfyUI Nodes:

- IP-Adapter nodes

- Face detection nodes

- Embedding comparison nodes

- Regional prompting nodes

Install via ComfyUI Manager.

Learning Resources

LoRA Training: See our LoRA training guide for detailed training instructions.

General AI Generation: For foundational knowledge, see our beginner's guide.

Community Support

Active communities for character consistency:

- Reddit r/StableDiffusion

- ComfyUI Discord

- CivitAI forums

Share your approaches and learn from others' solutions.

