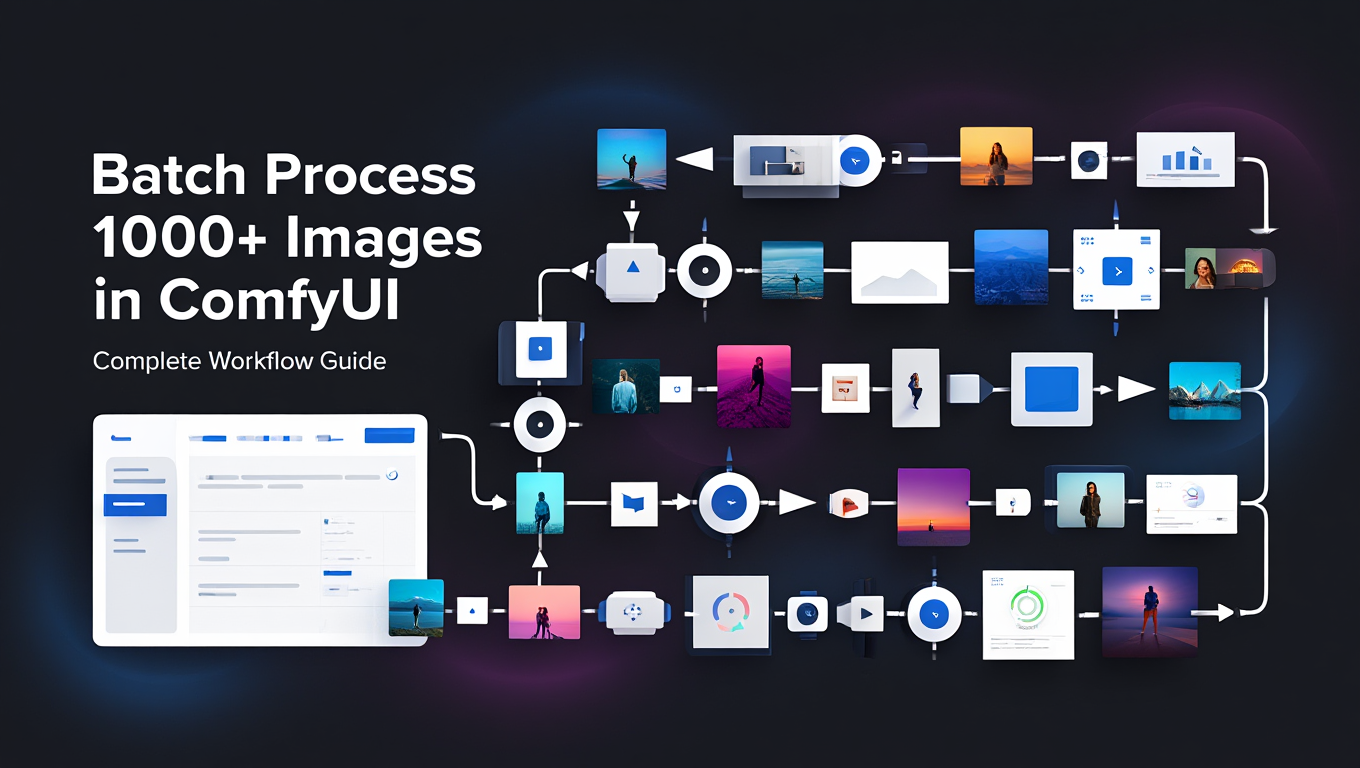

Batch Process 1000+ Images in ComfyUI - Complete Workflow Guide

Process thousands of images through ComfyUI workflows with batch loading, queue management, and automation techniques for production workloads

ComfyUI's node-based workflow system isn't just for interactive experimentation. With the right setup, it's a batch processing engine that can handle thousands of images, turning single-image experiments into production pipelines for large datasets. Whether you need to upscale a product catalog, apply consistent style transfer across a dataset, run object detection on thousands of frames, or generate variations from a folder of input images, batch processing makes ComfyUI a production tool as well as a creative one.

This guide covers everything from basic ComfyUI batch processing workflow design to advanced automation techniques that let you process massive image sets while you sleep. You'll learn to use batch loading nodes effectively, manage long-running queues, handle errors gracefully, and scale processing to match your hardware capabilities. By the end, you'll have the knowledge to build reliable ComfyUI batch processing systems that handle real production workloads.

Understanding ComfyUI Batch Processing Architecture

Before building batch workflows, it helps to understand how ComfyUI handles batch operations differently from single-image processing, because that architecture determines how efficient your workflows can be.

In single-image mode, you load an image, process it through your workflow, and save the result. Each generation is manually initiated. For batch processing, you need automatic iteration: load image 1, process, save result 1, then automatically proceed to image 2, and continue until all images are processed.

ComfyUI achieves this through specialized batch loading nodes that iterate through image folders. ComfyUI itself does not count your files: you queue the workflow once per image (or group of images), typically by raising the batch count when you queue, and the batch loader node tracks which image to load for each execution, incrementing through your dataset.

The key concept in ComfyUI batch processing is the queue-per-image model. If you have 1000 images to process, you queue 1000 workflow executions. Each execution loads the next image from the batch, processes it, and saves the result. This differs from batching approaches that load multiple images simultaneously into a single execution: here each execution handles one image, and the loader automates the iteration through your dataset.

This architecture has important implications. Each image processes through the complete workflow independently, which provides isolation - one failed image doesn't block subsequent images from processing. However, it also means any overhead per execution (model loading, etc.) occurs for each image unless ComfyUI caches appropriately.

Essential Nodes for ComfyUI Batch Processing

Several node types enable batch processing workflows. Knowing what's available helps you design effective pipelines.

Load Image Batch nodes from various custom node packs load images from a folder sequentially. The core parameters include:

- Directory path: The folder containing your source images

- Index: Which image in the folder to load (0-based)

- Pattern: Optional file pattern filtering (e.g., *.jpg, *.png)

Many of these loaders offer an incremental mode that advances the index on each queued execution. Set your starting index, queue as many executions as there are images to process, and the node handles the iteration.
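Loader packs implement this differently, so here is a hypothetical Python sketch of the common idea rather than any node's actual code: list the files matching the pattern in sorted order, then map each queued execution to `start_index + execution_number`. The wrap-around at the end of the folder is an assumption; real nodes may wrap, stop, or raise. The length of the file list is also the number of executions to queue.

```python
from fnmatch import fnmatch
from pathlib import Path


def list_batch_files(directory, pattern="*"):
    """Return files matching pattern in a stable, sorted order (hypothetical loader behavior)."""
    return sorted(
        p for p in Path(directory).iterdir()
        if p.is_file() and fnmatch(p.name, pattern)
    )


def file_for_execution(files, start_index, execution_number):
    """Map the Nth queued execution (0-based) to a file, starting at start_index.

    Wrapping with modulo is an assumption; check how your node handles the end.
    """
    if not files:
        raise ValueError("no files match the pattern")
    return files[(start_index + execution_number) % len(files)]


# Usage (paths are placeholders):
# files = list_batch_files("/path/to/input_images", "*.jpg")
# print(f"Queue {len(files)} executions")
# first = file_for_execution(files, 0, 0)
```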

Image Input node from ComfyUI-Impact-Pack provides batch functionality with additional features like automatic output filename preservation. This is particularly useful when you need output files to correspond to input filenames.

VHS Load Images from Video Helper Suite can batch load images with good filename handling and supports loading image sequences in order.

Save Image nodes need configuration for batch output. The key is filename templating that ensures each output has a unique name. Options include:

- Sequential numbering: output_0001.png, output_0002.png, etc.

- Preserving input filename: if input is photo_001.jpg, output is photo_001_processed.png

- Adding prefixes/suffixes to organize outputs

Most custom save nodes support these patterns. Consult your specific node's documentation for template syntax.
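Because template syntax differs between save nodes, here is a node-agnostic Python sketch of the three naming schemes above. The function names and the `{original_name}` placeholder are illustrative assumptions, not any particular node's syntax.

```python
from pathlib import Path


def sequential_name(prefix, index, width=4, ext=".png"):
    """0-based index -> output_0001.png style (1-based, zero-padded) name."""
    return f"{prefix}{index + 1:0{width}d}{ext}"


def preserved_name(input_path, suffix="_processed", ext=".png"):
    """photo_001.jpg -> photo_001_processed.png"""
    return f"{Path(input_path).stem}{suffix}{ext}"


def render_template(template, input_path, **extra):
    """Fill a hypothetical template such as '{original_name}_upscaled'."""
    return template.format(original_name=Path(input_path).stem, **extra)
```

The same `render_template` call also covers prefixes and suffixes, for example `"{original_name}_var{n}"` with `n=2`.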

Building a Basic ComfyUI Batch Processing Workflow

Let's walk through creating a complete ComfyUI batch processing workflow for a common task: upscaling a folder of images.

Start with your input node. Place a Load Image Batch node and configure it:

Directory: /path/to/input_images

Index: 0

Pattern: *.jpg

The index starts at 0 for the first image. You'll queue multiple executions to process all images.

Connect the loaded image to your processing pipeline. For upscaling, this might be:

Load Image Batch -> Upscale Image (by Model) -> Save Image

Or for a more sophisticated upscale:

Load Image Batch -> VAE Encode -> KSampler (tile upscale) -> VAE Decode -> Save Image

Configure your Save Image node to handle batch output. Set the output directory and filename template:

Output Directory: /path/to/output_images

Filename Prefix: upscaled_

With some save nodes, you can preserve the original filename:

Filename Template: {original_name}_upscaled

Now determine how many images to process. Check how many images are in your input folder (e.g., 500 images) and set the queue count to match: in the classic interface, tick "Extra options" next to Queue Prompt and set Batch count to 500 (newer frontends place a batch count field beside the Queue button). ComfyUI then queues 500 workflow executions, and the batch loader increments the index for each one, processing image 0 through image 499.

Click Queue and watch the progress. ComfyUI shows remaining queue count, and you can see outputs appearing in your output folder.

Handling Large-Scale ComfyUI Batch Processing (1000+ Images)

Processing hundreds or thousands of images introduces challenges that smaller batches don't face. Here's how to handle large-scale ComfyUI batch processing effectively.

Chunking large batches is essential for manageability. Rather than queuing 5000 executions at once, break into chunks of 500-1000. This provides several benefits:

- Easier progress monitoring (you know when each chunk completes)

- Ability to pause between chunks to check results

- Easier recovery if something goes wrong mid-batch

- Better memory management (some caches can clear between chunks)

To process in chunks, adjust your starting index and queue count:

- Chunk 1: Index 0, queue 500 images

- Chunk 2: Index 500, queue 500 images

- Chunk 3: Index 1000, queue 500 images

You can script this with the ComfyUI API for hands-off processing.
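The chunk arithmetic is easy to get wrong at the end of the dataset, so here is a minimal sketch, assuming your loader accepts a start index and you queue `count` executions per chunk. The helper name is hypothetical; the last chunk simply picks up the remainder.

```python
def chunk_plan(total_images, chunk_size=500):
    """Return (start_index, count) pairs covering indices 0..total_images-1."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        (start, min(chunk_size, total_images - start))
        for start in range(0, total_images, chunk_size)
    ]

# chunk_plan(1200) -> [(0, 500), (500, 500), (1000, 200)]
```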

Memory management matters for long batches. ComfyUI caches loaded models and intermediate results for performance, but over thousands of iterations, memory can accumulate. If you see memory growing over time:

- Clear the ComfyUI cache periodically through the UI

- For very long batches, schedule chunks with ComfyUI restarts between them

- Use --cpu-vae or other memory-saving flags if needed
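If you drive chunks from a script, recent ComfyUI builds expose a `/free` HTTP endpoint that roughly matches the UI's unload/free-memory actions. This sketch assumes that endpoint and its `unload_models` / `free_memory` JSON keys exist in your version; verify against your server before relying on it. It uses only the standard library and builds the request separately so it can be inspected without a running server.

```python
import json
import urllib.request

COMFYUI_URL = "http://127.0.0.1:8188"  # adjust to your server


def build_free_request(base_url=COMFYUI_URL, unload_models=True, free_memory=True):
    """Build a POST to /free (endpoint and keys assumed from recent ComfyUI versions)."""
    body = json.dumps({"unload_models": unload_models, "free_memory": free_memory})
    return urllib.request.Request(
        f"{base_url}/free",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def free_comfyui_memory(base_url=COMFYUI_URL, timeout=30):
    """Ask the server to unload models and free cached memory, e.g. between chunks."""
    with urllib.request.urlopen(build_free_request(base_url), timeout=timeout) as resp:
        return resp.status
```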

Progress monitoring becomes important when processing takes hours or days. Options include:

- Watch the queue counter in the UI

- Count output files appearing in your output folder

- Use API-based monitoring to track progress programmatically

- Log completion of each chunk if you're scripting
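For the output-counting approach, a small helper can turn the file count into a percentage and a rough ETA. This sketch assumes one output file per input in a single folder; adjust `pattern` if your workflow writes several files per image. Calling it every minute or so from a loop gives a usable progress line.

```python
import time
from pathlib import Path


def progress_report(output_dir, total, started_at, pattern="*.png", now=None):
    """Return (done, percent, eta_seconds) based on files in output_dir.

    eta_seconds is None until at least one output exists.
    """
    done = sum(1 for p in Path(output_dir).glob(pattern) if p.is_file())
    now = time.time() if now is None else now
    percent = 100.0 * done / total if total else 100.0
    if done == 0:
        return done, percent, None
    per_image = (now - started_at) / done  # average seconds per finished image
    return done, percent, per_image * max(total - done, 0)
```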

Error handling is critical because some images will fail. Maybe one file is corrupted, or an image has unusual dimensions that break your workflow. Depending on your nodes and setup, a failure can halt the run or leave a silent gap in your outputs, and either way you may only find out the next morning that image 347 of 5000 (or everything after it) never produced a result.

Better approaches:

- Some batch nodes have skip-on-error options that continue processing

- Implement error handling in your workflow using custom nodes

- Log failed images for later investigation while allowing the batch to continue

- Process in small chunks so errors affect fewer images
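A minimal skip-and-log loop, assuming you supply a `process_one(path)` function that raises on failure (for instance, a wrapper around the API calls covered later in this guide). The function names and the JSON-lines log format are illustrative choices.

```python
import json
import time


def run_with_skip(image_paths, process_one, failure_log="failed_images.jsonl"):
    """Process every image; log failures instead of stopping the batch.

    Returns (succeeded, failed) counts.
    """
    succeeded = failed = 0
    with open(failure_log, "a", encoding="utf-8") as log:
        for path in image_paths:
            try:
                process_one(path)
                succeeded += 1
            except Exception as exc:  # broad on purpose: one bad file must not end the run
                failed += 1
                log.write(json.dumps({
                    "image": str(path),
                    "error": f"{type(exc).__name__}: {exc}",
                    "time": time.time(),
                }) + "\n")
    return succeeded, failed
```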

Advanced ComfyUI Batch Processing Patterns

Beyond basic iteration, several patterns enable more sophisticated ComfyUI batch processing workflows.

Paired input processing handles cases where each input image has a corresponding control image, mask, or prompt file. For example, inpainting with per-image masks:

Load Image Batch (images) -> index 0, 1, 2...

Load Image Batch (masks) -> index 0, 1, 2... (same indexing)

Inpaint Node -> receives image and corresponding mask

Both batch loaders use the same index, so image 0 pairs with mask 0.
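Index-based pairing only works if both folders sort into the same order. A quick pre-queue check catches mismatches that would otherwise silently pair the wrong mask with an image. This sketch assumes images and masks share a filename stem, optionally with a suffix such as `_mask` on the mask side, and that loaders sort by filename.

```python
from pathlib import Path


def check_pairs(image_dir, mask_dir, image_glob="*.png", mask_glob="*.png", mask_suffix=""):
    """Return (image, mask) pairs in index order, or raise if the folders disagree.

    mask_suffix is stripped from mask stems, e.g. "_mask" for img_007_mask.png.
    """
    images = sorted(Path(image_dir).glob(image_glob))
    masks = sorted(Path(mask_dir).glob(mask_glob))
    if len(images) != len(masks):
        raise ValueError(f"{len(images)} images but {len(masks)} masks")
    pairs = []
    for index, (img, mask) in enumerate(zip(images, masks)):
        mask_stem = mask.stem
        if mask_suffix and mask_stem.endswith(mask_suffix):
            mask_stem = mask_stem[: -len(mask_suffix)]
        if img.stem != mask_stem:
            # Same index, different base name: the loaders would mis-pair these
            raise ValueError(f"index {index}: {img.name} paired with {mask.name}")
        pairs.append((img, mask))
    return pairs
```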

Text file prompts enable per-image prompts. Structure your data as:

/images/image_000.jpg

/prompts/image_000.txt (contains prompt for image_000)

Load the prompt text file corresponding to each image and pipe it to your sampler node's prompt input.
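A sketch of that lookup, assuming the folder layout above and UTF-8 text files. Falling back to a default prompt when a `.txt` file is missing is a design choice; failing loudly, the behavior when no default is given, is often safer for production runs.

```python
from pathlib import Path


def prompt_for_image(image_path, prompt_dir, default=None):
    """Read <prompt_dir>/<stem>.txt for an image named <stem>.jpg (or any extension)."""
    prompt_file = Path(prompt_dir) / f"{Path(image_path).stem}.txt"
    if prompt_file.is_file():
        return prompt_file.read_text(encoding="utf-8").strip()
    if default is None:
        raise FileNotFoundError(f"no prompt file for {Path(image_path).name}: {prompt_file}")
    return default
```

When scripting through the API, the returned string would go into your text encoder's input, for example `workflow["6"]["inputs"]["text"] = prompt` for a CLIP Text Encode node whose ID (here the hypothetical `"6"`) comes from your own exported workflow.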

Conditional processing applies different processing based on image properties. Use nodes that detect image characteristics (dimensions, content, etc.) and route to different processing branches:

Load Image -> Detect Orientation -> If Portrait: Processing A, If Landscape: Processing B

This enables batch processing workflows that adapt to varied input.
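The routing decision itself is simple. In plain Python, for example to pick a different workflow template per image when scripting through the API, it might look like the hypothetical helpers below. The 5% tolerance for "square" and sending squares down the landscape branch are assumptions.

```python
def orientation(width, height, tolerance=0.05):
    """Classify dimensions as 'portrait', 'landscape', or 'square' (within tolerance)."""
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    ratio = width / height
    if abs(ratio - 1.0) <= tolerance:
        return "square"
    return "landscape" if ratio > 1.0 else "portrait"


def route_by_orientation(width, height, templates):
    """Pick a workflow template; squares fall back to the landscape branch here."""
    kind = orientation(width, height)
    return templates["landscape" if kind == "square" else kind]
```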

Multi-output workflows generate multiple outputs per input. For example, generating three variations of each image:

Load Image -> Process with Seed 1 -> Save as {name}_var1

-> Process with Seed 2 -> Save as {name}_var2

-> Process with Seed 3 -> Save as {name}_var3

Each queued execution produces three outputs for one input.
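Through the API, the same idea means submitting several copies of one workflow with different seeds and filename prefixes. This sketch assumes a KSampler at node ID `"3"` and a Save Image node at `"9"`; both IDs are placeholders you must match to your own exported workflow.

```python
import copy
from pathlib import Path

SAMPLER_NODE = "3"  # hypothetical KSampler node ID
SAVE_NODE = "9"     # hypothetical Save Image node ID


def variation_workflows(template, image_path, seeds=(1, 2, 3)):
    """Return one workflow dict per seed, each saving as <stem>_varN."""
    stem = Path(image_path).stem
    variants = []
    for n, seed in enumerate(seeds, start=1):
        wf = copy.deepcopy(template)  # never mutate the shared template
        wf[SAMPLER_NODE]["inputs"]["seed"] = seed
        wf[SAVE_NODE]["inputs"]["filename_prefix"] = f"{stem}_var{n}"
        variants.append(wf)
    return variants
```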

Programmatic ComfyUI Batch Processing Control via API

For maximum control, drive batch processing through ComfyUI's API rather than the UI. This gives you the most flexibility in production environments.

ComfyUI exposes an HTTP API that accepts workflow JSON (exported in API format) and queues executions, plus a WebSocket endpoint that streams progress events. You can write scripts that:

- Load your workflow template

- Modify parameters for each batch item (input path, output path, prompts)

- Submit to the API

- Track completion

- Handle results

Here's a Python example for batch processing with API control:

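import copy
import json
import time
from pathlib import Path

import requests

COMFYUI_URL = "http://127.0.0.1:8188"

def load_workflow_template(template_path):
    # The template must be exported in API format, not the regular UI save
    with open(template_path, "r", encoding="utf-8") as f:
        return json.load(f)

def queue_prompt(workflow):
    # POST the workflow to the queue; the response contains a prompt_id
    response = requests.post(f"{COMFYUI_URL}/prompt", json={"prompt": workflow}, timeout=30)
    response.raise_for_status()  # invalid workflows are rejected with an HTTP error
    return response.json()

def get_history(prompt_id):
    response = requests.get(f"{COMFYUI_URL}/history/{prompt_id}", timeout=30)
    response.raise_for_status()
    return response.json()

def wait_for_completion(prompt_id, timeout=300):
    # A prompt appears in /history once it has finished (successfully or not)
    start = time.time()
    while time.time() - start < timeout:
        history = get_history(prompt_id)
        if prompt_id in history:
            return history[prompt_id]
        time.sleep(1)
    raise TimeoutError(f"Processing did not complete within {timeout}s")

def process_batch(input_folder, output_subfolder, workflow_template_path):
    template = load_workflow_template(workflow_template_path)
    input_path = Path(input_folder)

    # One sorted list, matching common extensions case-insensitively
    images = sorted(
        p for p in input_path.iterdir()
        if p.is_file() and p.suffix.lower() in {".jpg", ".jpeg", ".png"}
    )
    print(f"Processing {len(images)} images")
    failed = []

    for i, image_path in enumerate(images):
        print(f"Processing {i + 1}/{len(images)}: {image_path.name}")

        # Copy the template so edits for one image never leak into the next
        workflow = copy.deepcopy(template)

        # These node IDs need to match your specific workflow.
        # The stock Load Image node expects a file name inside ComfyUI's input
        # folder; to pass an absolute path, use a path-based loader node.
        workflow["1"]["inputs"]["image"] = str(image_path)

        # Save Image writes under ComfyUI's output folder; a "subfolder/name"
        # prefix places the files in a subfolder there
        workflow["10"]["inputs"]["filename_prefix"] = f"{output_subfolder}/{image_path.stem}_processed"

        # Queue and wait
        try:
            prompt_id = queue_prompt(workflow)["prompt_id"]
            completion = wait_for_completion(prompt_id)
            status = completion.get("status", {}).get("status_str", "unknown")
            print(f"  Finished with status: {status}")
            if status == "error":
                failed.append(image_path.name)
        except TimeoutError:
            print(f"  ERROR: Timeout processing {image_path.name}")
            failed.append(image_path.name)
        except Exception as e:
            print(f"  ERROR: {e}")
            failed.append(image_path.name)

    print(f"Batch processing complete, {len(failed)} failed")
    return failed

# Usage
if __name__ == "__main__":
    process_batch(
        "/path/to/input_images",
        "batch_output",
        "/path/to/workflow_api.json",
    )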

This script gives you complete control: you can add logging, implement retry logic, parallelize across multiple ComfyUI instances, send notifications on completion, and integrate with other systems.

Key benefits of API-based batch processing:

- Process images from anywhere, not just a single folder

- Dynamically generate prompts or parameters per image

- Implement sophisticated error handling and retry logic

- Track detailed metrics and timing

- Integrate with larger pipelines and automation systems

- Schedule processing for off-hours

Performance Optimization for ComfyUI Batch Processing

Batch performance determines whether your job completes in hours or days. Optimize these factors to maximize throughput:

Model loading overhead: ComfyUI caches loaded models between executions, so the first image is slower than subsequent ones (model load) but remaining images process faster. Ensure your workflow doesn't force model reloading - check that model paths are consistent and no node forces fresh loading.


VRAM management: For long batches, VRAM fragmentation can accumulate. If you notice slowdowns over time, the cache might need clearing. Balance between keeping models loaded (fast) and clearing cache (freeing VRAM for larger individual operations).

Disk I/O: Reading thousands of input images and writing thousands of outputs stresses storage. Fast SSD storage helps significantly. Avoid reading from and writing to network drives if possible - local NVMe storage provides best performance.

Parallel processing: If you have multiple GPUs, run multiple ComfyUI instances, each processing different chunks of your batch. Even on one GPU, you might run two instances if your workflow doesn't fully use VRAM, though this requires testing.
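To split one batch across several instances, for example two ComfyUI servers started with different `--port` and `--cuda-device` values, a contiguous partition keeps each instance's outputs in one index range. The helper is hypothetical, and the instance URLs in the usage comment are placeholders for whatever you actually run.

```python
def shard_contiguous(items, workers):
    """Split items into len(workers) contiguous slices whose sizes differ by at most 1."""
    n = len(workers)
    if n == 0:
        raise ValueError("need at least one worker")
    base, extra = divmod(len(items), n)
    shards, start = {}, 0
    for i, worker in enumerate(workers):
        size = base + (1 if i < extra else 0)  # first `extra` workers take one more
        shards[worker] = items[start:start + size]
        start += size
    return shards

# Example with two instances on one machine (hypothetical ports):
# shard_contiguous(images, ["http://127.0.0.1:8188", "http://127.0.0.1:8189"])
```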

Workflow optimization: Simplify your workflow for batch processing. Remove any preview nodes (they add overhead). Ensure you're not doing unnecessary operations. Profile your workflow to identify bottlenecks.

Resolution and quality tradeoffs: Processing 1024x1024 images takes roughly 4x longer than 512x512 for generation tasks. For batch processing where speed matters, consider whether lower resolution is acceptable or if you can downscale inputs, process, then upscale outputs.

Common Batch Processing Applications

Different applications require different workflow patterns.

Upscaling is the simplest batch application. Load images, run through upscaler model, save at higher resolution. This is embarrassingly parallel and well-suited to batch processing:

Load Image Batch -> RealESRGAN Upscale -> Save Image

With a good GPU, you can upscale thousands of images overnight.

Style transfer applies consistent artistic style across a dataset. Use IP-Adapter or similar to apply a style reference to each image:

Load Image Batch (content) ->

Load Image (style, single reference) ->

IP-Adapter -> KSampler -> Save Image

Every image gets processed with the same style reference.

Image-to-image generation transforms inputs while preserving structure:

Load Image Batch -> VAE Encode -> KSampler (low-medium denoise) -> VAE Decode -> Save Image

Useful for applying generation enhancements to existing images while maintaining composition.

Object detection/segmentation runs detection models across a dataset:

Load Image Batch -> SAM Model -> Export Masks -> Save

Extracts masks or detections from each image for further use.

Data augmentation generates multiple variations of each image for training datasets:

Load Image Batch -> Random transforms -> Save multiple variations

Multiplies your dataset size for training purposes.

Consistent character generation uses batch prompts to generate a character across many scenes:

Load Prompt Batch (scene descriptions) ->

Character LoRA -> KSampler -> Save Image

Generates the same character in many different contexts.

Frequently Asked Questions

How long does it take to process 1000 images?

Depends entirely on your workflow complexity and hardware. Simple upscaling might take 3-5 seconds per image (about an hour total). Complex generation workflows might take 30-60 seconds per image (8-16 hours total). Multiply your per-image time by image count for estimates.

Can I pause and resume batch processing?

You can stop the queue at any time. To resume, note which index you stopped at (by counting outputs or queue position), set your batch loader to that index, and queue the remaining images. Some batch nodes have explicit resume functionality.
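Counting outputs works best when output names map back to inputs. This sketch assumes outputs start with `<input stem><suffix>`, matching the `_processed` naming used in the API example in this guide, and uses prefix matching because save nodes often append counters (ComfyUI's Save Image, for instance, typically adds something like `_00001_`). It returns only the inputs that still lack an output, so you can requeue exactly those.

```python
from bisect import bisect_left
from pathlib import Path


def remaining_inputs(input_paths, output_dir, suffix="_processed"):
    """Return inputs with no output file whose name starts with <stem><suffix>."""
    produced = sorted(p.name for p in Path(output_dir).iterdir() if p.is_file())

    def has_output(prefix):
        # Names sharing a prefix are contiguous in sorted order, starting at bisect_left
        i = bisect_left(produced, prefix)
        return i < len(produced) and produced[i].startswith(prefix)

    return [p for p in input_paths if not has_output(Path(p).stem + suffix)]
```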

What happens if one image fails during processing?

Depending on your nodes and setup, a failure can halt the run. To keep going despite errors, use batch nodes with skip-on-error options, or process in small chunks so failures affect fewer images. Always note which images failed for later investigation.


How do I know which image caused an error?

The current queue position tells you which index was processing. Match that to your input folder listing. Some batch nodes output the current filename to help identify failures.

Can I process images with different prompts for each?

Yes, use text file loading nodes that read prompt files corresponding to each image. Structure your data so image_001.jpg has image_001.txt containing its prompt.

Does batch processing use more VRAM than single images?

Per-image VRAM usage is the same. However, long batches without cache management can accumulate memory. Monitor usage during long batches and clear caches if memory grows.

How do I maintain filename correspondence between input and output?

Use save nodes that support filename templates like {original_name}_processed. This preserves the input filename in the output. Check your specific node's documentation for template variables.

Can I batch process videos?

Yes, extract frames, batch process frames, then reassemble. VHS (Video Helper Suite) nodes handle video loading and saving. Process videos as image sequences.

What's the maximum batch size I can process?

Limited by disk space and patience, not by ComfyUI. Tens of thousands of images are feasible if you have storage for inputs and outputs. Process in manageable chunks rather than queuing everything at once.

How do I handle images of different sizes in a batch?

Either resize all inputs to consistent dimensions before processing, or use workflow nodes that handle varying sizes gracefully. Some operations require consistent dimensions while others adapt automatically.

Can I distribute batch processing across multiple machines?

Yes, split your image set across machines, each running ComfyUI. This requires coordination to avoid processing the same images twice and to combine outputs. Network storage or cloud orchestration helps.

Advanced Batch Processing Patterns

Beyond basic iteration, sophisticated patterns handle complex production requirements.

Conditional Processing Workflows

Apply different processing based on image characteristics.

Dimension-based routing processes portrait and landscape images differently. Detect orientation and route to appropriate processing branches with settings optimized for each format.

Content-based routing applies different processing based on detected content. Use classification or detection nodes to identify image types and route accordingly.

Quality-based filtering removes or flags low-quality inputs before processing. Check resolution, blur metrics, or other quality indicators to handle outliers appropriately.

Multi-Input Correlation

Process sets of related inputs together.

Image-caption pairs load both image and corresponding text file. The batch index keeps them synchronized, ensuring image 47 processes with caption 47.

Multi-modal inputs combine images with masks, depth maps, or control images. Multiple batch loaders with synchronized indices provide all inputs for each item.

Sequential dependency chains items together: one output becomes the next input. Process image A, then use its result as the input for image B. This enables chained transformations.

Distributed Processing

Scale batch processing across multiple machines or GPUs.

Dataset partitioning divides images across workers. Machine 1 processes images 0-999, machine 2 processes 1000-1999. Requires coordination to avoid overlap and combine outputs.

Queue distribution sends different jobs to different workers based on availability. A coordinator assigns work and collects results.
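A minimal coordinator sketch using only the standard library: one thread per worker pulls the next image from a shared queue, so faster workers naturally take more jobs. `submit(worker, image)` is a hypothetical callable you supply, for instance one that posts to that worker's `/prompt` endpoint and waits for completion.

```python
import queue
import threading


def distribute(images, workers, submit):
    """Run submit(worker, image) across workers; return {image: (worker, error_or_None)}."""
    jobs = queue.Queue()
    for image in images:
        jobs.put(image)
    results = {}
    lock = threading.Lock()

    def worker_loop(worker):
        while True:
            try:
                image = jobs.get_nowait()
            except queue.Empty:
                return  # nothing left to assign
            try:
                submit(worker, image)
                outcome = (worker, None)
            except Exception as exc:  # record the failure and keep pulling work
                outcome = (worker, exc)
            with lock:
                results[image] = outcome

    threads = [threading.Thread(target=worker_loop, args=(w,)) for w in workers]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```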

Cloud burst scales to cloud GPUs for large batches while using local hardware for development. Services like RunPod or Vast.ai provide temporary GPU capacity.


Integration with Production Pipelines

Batch processing often integrates with larger systems beyond ComfyUI.

Input Pipeline Integration

Connect batch processing to upstream data sources.

Database queries populate processing queues dynamically. Script queries database for images needing processing, generates batch job, and runs ComfyUI.

Watch folders automatically process new images as they appear. Monitor script detects new files and triggers batch processing for recent additions.

API triggers start batch processing from external systems. Web service receives processing request, constructs batch job, executes, and returns results.
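A polling watch folder needs nothing beyond the standard library. This sketch assumes a fixed polling interval and treats a file as ready once its size is unchanged between two polls, a common heuristic for skipping files that are still being copied in. `handle(path)` is a hypothetical callback that would queue the image for processing.

```python
import time
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}


def poll_once(folder, seen, last_sizes):
    """Return newly stable image files; updates seen and last_sizes in place."""
    ready = []
    for path in sorted(Path(folder).iterdir()):
        if path in seen or path.suffix.lower() not in IMAGE_SUFFIXES or not path.is_file():
            continue
        size = path.stat().st_size
        if last_sizes.get(path) == size:  # unchanged since last poll: assume copy finished
            seen.add(path)
            last_sizes.pop(path, None)
            ready.append(path)
        else:
            last_sizes[path] = size
    return ready


def watch(folder, handle, interval=5.0):
    """Poll forever, calling handle(path) once for each new, stable image."""
    seen, last_sizes = set(), {}
    while True:
        for path in poll_once(folder, seen, last_sizes):
            handle(path)
        time.sleep(interval)
```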

Output Pipeline Integration

Connect processed results to downstream systems.

Automatic upload sends results to storage or CDN after processing. Scripted pipelines copy outputs to appropriate destinations automatically.

Database updates record processing completion and results. Update status, store output paths, record metrics for each processed item.

Notification systems alert when batches complete or fail. Email, Slack, or other notifications keep teams informed of processing status.
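For the database side, here is a minimal sketch using Python's built-in `sqlite3` module. The table layout and status values (`pending`, `done`, `failed`) are assumptions chosen for illustration; a production system would more likely write to its existing database.

```python
import sqlite3
import time


def open_tracker(db_path="batch_status.db"):
    """Open (or create) a status table with one row per input image."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS items (
               image TEXT PRIMARY KEY,
               status TEXT NOT NULL,
               output TEXT,
               seconds REAL,
               updated REAL
           )"""
    )
    return conn


def record(conn, image, status, output=None, seconds=None):
    """Insert or overwrite one item's status, output path, and timing."""
    with conn:  # commits the transaction on success
        conn.execute(
            "INSERT OR REPLACE INTO items (image, status, output, seconds, updated) "
            "VALUES (?, ?, ?, ?, ?)",
            (image, status, output, seconds, time.time()),
        )


def summary(conn):
    """Count items per status, e.g. {'done': 950, 'failed': 3}."""
    return dict(conn.execute("SELECT status, COUNT(*) FROM items GROUP BY status"))
```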

Monitoring and Observability

Track batch processing health and performance.

Progress dashboards show batch status in real time. Web interface displays queue position, completed count, estimated remaining time.

Metric collection tracks processing speed, error rates, resource usage. Time series data enables performance analysis and capacity planning.

Log aggregation collects logs from all processing components. Centralized logging enables debugging across distributed batch jobs.

For foundational workflow skills that support ComfyUI batch processing, start with our ComfyUI essential nodes guide. Understanding these basic nodes is crucial for building effective ComfyUI batch processing workflows.

Error Handling and Recovery

Robust batch processing requires comprehensive error handling.

Error Detection

Identify problems quickly and accurately.

Processing errors occur during workflow execution. ComfyUI reports errors for failed nodes. Log these with the specific image that caused them.

Input errors occur when loading problematic files. Corrupted images, wrong formats, or missing files cause load failures. Handle gracefully rather than stopping the entire batch.

Output errors occur when saving results. Full disks, permission issues, or invalid paths prevent saving. Detect and report before losing processing work.
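Many input and output errors can be caught before the batch starts. This is a cheap, stdlib-only preflight rather than a full decoder: it checks PNG and JPEG signatures (including the JPEG end-of-image marker that truncated files usually lack) and confirms free disk space. It may flag JPEGs with trailing data as bad, and the 2 MB-per-output default is a placeholder assumption; measure your own outputs.

```python
import shutil

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
JPEG_START, JPEG_END = b"\xff\xd8", b"\xff\xd9"


def looks_valid(path):
    """Header/trailer check only; a real decode (e.g. with Pillow) is stricter."""
    with open(path, "rb") as f:
        head = f.read(8)
        f.seek(0, 2)  # jump to the end to learn the file size
        size = f.tell()
        f.seek(max(size - 32, 0))
        tail = f.read()
    if head.startswith(PNG_SIGNATURE):
        return b"IEND" in tail  # PNG files end with an IEND chunk
    if head.startswith(JPEG_START):
        return tail.rstrip(b"\x00").endswith(JPEG_END)  # tolerate zero padding
    return False  # unknown or corrupted format


def preflight(image_paths, output_dir, bytes_per_output=2_000_000):
    """Return (bad_inputs, enough_space) before any work is queued."""
    bad = [p for p in image_paths if not looks_valid(p)]
    free = shutil.disk_usage(output_dir).free
    needed = bytes_per_output * (len(image_paths) - len(bad))
    return bad, free >= needed
```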

Error Recovery

Resume batch processing after addressing problems.

Skip and continue processes remaining items despite errors. Log failed items for later investigation while completing the batch.

Retry logic attempts failed items again after brief delay. Transient errors (network, disk) may succeed on retry.

Checkpoint recovery resumes from where processing stopped. Save progress regularly so interrupted batches restart at correct position.
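Retry, skip, and checkpointing combine naturally in one loop. This is a sketch under a few assumptions: `process_one(path)` is your own hypothetical function that raises on failure, the checkpoint is a small JSON file holding the next index to process, and the backoff delays (1 s, 2 s, 4 s, ...) are arbitrary starting values.

```python
import json
import time
from pathlib import Path


def load_checkpoint(path):
    p = Path(path)
    return json.loads(p.read_text())["next_index"] if p.exists() else 0


def save_checkpoint(path, next_index):
    tmp = Path(path).with_suffix(".tmp")
    tmp.write_text(json.dumps({"next_index": next_index}))
    tmp.replace(path)  # rename over the old file so it is never half-written


def with_retries(func, arg, attempts=3, base_delay=1.0):
    for attempt in range(attempts):
        try:
            return func(arg)
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller log and skip
            time.sleep(base_delay * 2 ** attempt)  # exponential backoff


def run_resumable(images, process_one, checkpoint="batch_checkpoint.json",
                  attempts=3, base_delay=1.0):
    """Resume at the saved index, retry each image, and skip it after the final failure."""
    failed = []
    for index in range(load_checkpoint(checkpoint), len(images)):
        try:
            with_retries(process_one, images[index], attempts, base_delay)
        except Exception as exc:
            failed.append((images[index], str(exc)))
        save_checkpoint(checkpoint, index + 1)
    return failed
```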

Error Analysis

Learn from errors to prevent recurrence.

Pattern identification finds common causes among errors. If many images fail the same way, there's likely a systematic issue to address.

Root cause analysis traces errors to underlying problems. A "decode error" might indicate corrupted source files, format incompatibility, or memory issues.

Prevention measures based on error patterns improve future batches. Add input validation, adjust workflow for robustness, or improve error handling.
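For pattern identification, grouping failure messages is often enough to spot a systematic issue. This sketch assumes you have (image, error message) pairs, for example from a failure log, and replaces file names and numbers with placeholders so messages that differ only in those details land in the same bucket. The normalization rules are a heuristic.

```python
import re
from collections import Counter


def normalize(message):
    """Collapse per-image details (image file names, numbers) into placeholders."""
    message = re.sub(r"\S+\.(?:png|jpe?g|webp)\b", "<file>", message, flags=re.IGNORECASE)
    return re.sub(r"\d+", "<n>", message)


def top_error_patterns(failures, limit=5):
    """failures: iterable of (image, message); returns [(pattern, count), ...]."""
    return Counter(normalize(msg) for _, msg in failures).most_common(limit)
```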

Resource Planning and Estimation

Plan batch processing jobs with realistic resource expectations.

Time Estimation

Predict how long batch jobs will take.

Per-image timing from test runs provides baseline. Time a representative sample to establish average processing time.

Total time calculation multiplies per-image time by count. A 30-second average across 1000 images means approximately 8.3 hours total.

Overhead accounting adds time for loading, saving, and transitions. Batch overhead can add 10-20% to pure processing time.

Parallelization benefits reduce calendar time if available. Two GPUs processing in parallel halve the calendar time for fixed total work.
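The estimate above fits in a few lines. This sketch takes a measured per-image time, adds a fractional overhead (the 10-20% range above is a rule of thumb, not a measured constant), and assumes ideal scaling across GPUs, which real runs only approach.

```python
def estimate_hours(per_image_seconds, image_count, overhead=0.15, gpus=1):
    """Calendar-time estimate in hours, assuming perfect parallel scaling."""
    if gpus < 1:
        raise ValueError("gpus must be >= 1")
    total_seconds = per_image_seconds * image_count * (1 + overhead)
    return total_seconds / gpus / 3600

# 30 s x 1000 images with no overhead: 30000 s / 3600, about 8.3 hours;
# two GPUs halve that to about 4.2 hours.
```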

Storage Planning

Ensure sufficient storage for batch operations.

Input storage holds source images accessible to processing. Calculate total input size and ensure fast access (local NVMe preferred over network).

Output storage receives all processed results. Estimate output size (may differ from input) and plan for complete batch plus headroom.

Temporary storage for intermediate files during processing. ComfyUI may create temporary files during complex workflows.

Memory Planning

Ensure sufficient system resources throughout the batch.

VRAM requirements per workflow execution. Batch processing doesn't increase per-image VRAM needs but long runs may accumulate fragmentation.

System RAM for data loading and buffering. Processing thousands of images requires RAM for file operations beyond GPU needs.

Swap planning for when physical memory is insufficient. Swap usage dramatically slows processing, so plan for sufficient physical RAM.

For memory optimization strategies that improve batch processing efficiency, see our VRAM optimization guide.

Conclusion

Batch processing transforms ComfyUI from an interactive creative tool into a production-capable image processing pipeline. The key principles are using appropriate batch loading nodes, managing long queues through chunking, handling errors gracefully, and optimizing performance for your specific hardware and workflow.

Start with simple batch workflows processing small sets of images to verify your setup works correctly. Once confident in your workflow, scale up to larger batches. Use API-based scripting for maximum control over complex batch operations.

The investment in learning batch processing pays off whenever you need to process more images than you'd want to click through manually. Whether that's dozens or thousands, batch processing makes it feasible.

For users who need reliable batch processing without infrastructure management, Apatero.com provides scalable processing for large image sets with professional monitoring and error handling.

For those just beginning with AI image generation, our complete beginner guide provides foundational knowledge that helps contextualize batch processing within your overall AI image workflow.

