Qwen-Image-Edit 2509 Plus: Better Image Editing with GGUF Support

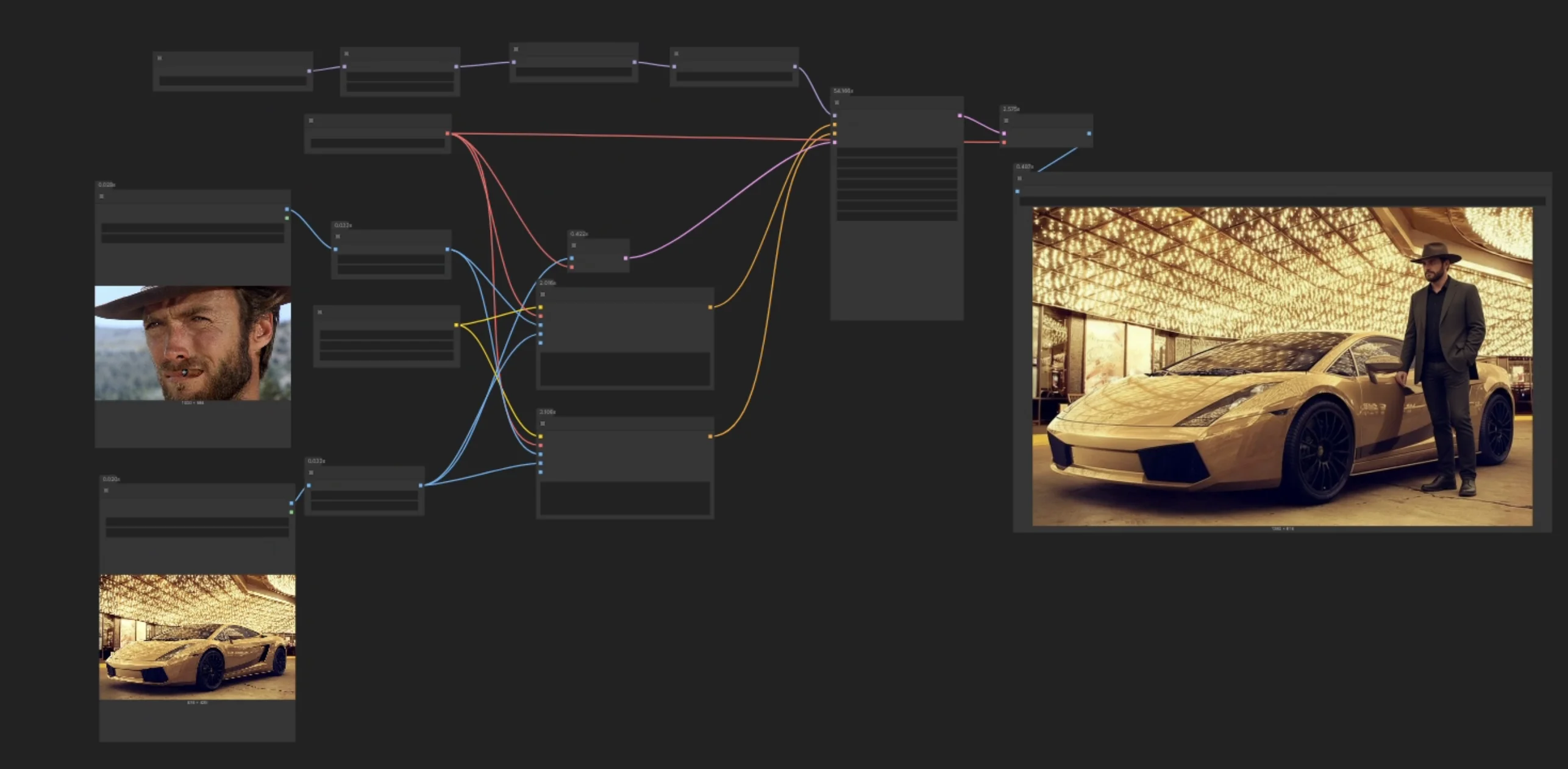

Qwen-Image-Edit 2509 Plus enables AI-powered image editing through natural language instructions in ComfyUI, with GGUF quantization support allowing professional results on GPUs with just 8GB VRAM. Describe your edits in plain English and the model intelligently applies changes while preserving unedited regions, delivering quality comparable to manual Photoshop work in minutes instead of hours.

Why Use Qwen-Image-Edit 2509 Plus?

You spend hours in Photoshop trying to make that perfect edit. Change the lighting, adjust colors, remove objects, add details. Each tweak requires multiple layers, masks, and manual adjustments.

Alibaba's Qwen-Image-Edit 2509 Plus lets you make these edits by simply describing them in natural language. The model interprets editing instructions and applies them with precision that rivals manual editing. Better yet, GGUF quantization support means you can run it on GPUs with as little as 8GB VRAM while maintaining impressive quality.

In this guide, you'll learn:

- What makes Qwen-Image-Edit 2509 Plus superior to previous editing models

- Step-by-step installation in ComfyUI with GGUF support

- Natural language editing workflows for common tasks

- Advanced techniques for style transfer and object manipulation

- GGUF quantization strategies for different hardware configurations

- Real-world editing workflows and best practices

- Troubleshooting common issues with proven solutions

What is Qwen Image Edit 2509 Plus?

Qwen Image Edit 2509 Plus represents the latest evolution in instruction-based image editing. Released by Alibaba Cloud's Qwen team in September 2025, it builds on the foundation of their vision-language models with specialized training for precise image manipulation tasks.

Unlike traditional image editing models that require masks, layers, or complex parameter tuning, Qwen Image Edit interprets natural language instructions and applies edits intelligently. You describe what you want changed, and Qwen Image Edit figures out how to do it while preserving the parts of the image you want to keep. For VRAM optimization when running Qwen Image Edit, check our VRAM optimization guide. And if you need to isolate specific elements before editing, Qwen-Image-Layered can automatically decompose images into separate editable layers.

The Technology Behind Qwen-Image-Edit 2509 Plus

The model uses a hybrid architecture combining vision understanding, language comprehension, and diffusion-based image generation. According to research from Alibaba Cloud's technical documentation, the 2509 Plus version introduces several key improvements over the original Qwen-Image-Edit.

Key Technical Advances:

- Enhanced semantic understanding of complex editing instructions

- Better preservation of unedited regions

- Improved handling of multiple simultaneous edits

- GGUF quantization compatibility for memory efficiency

- Faster inference through optimized attention mechanisms

Think of it as briefing a professional photo editor: you provide the creative direction, and the model handles the technical execution.

Qwen Image Edit Model Variants

Alibaba released several Qwen Image Edit model sizes optimized for different use cases and hardware configurations.

| Model Version | Parameters | Max Resolution | Precision | VRAM Required | Best For |
|---|---|---|---|---|---|
| Qwen-IE-2509-7B | 7B | 2048x2048 | FP16 | 16GB | Testing and experimentation |
| Qwen-IE-2509-14B | 14B | 2048x2048 | FP16 | 28GB | Professional editing |
| Qwen-IE-2509-Plus-14B | 14B | 4096x4096 | FP16 | 32GB+ | High-resolution production |
| Qwen-IE-2509-Plus-14B-GGUF | 14B | 4096x4096 | Q4_K_M to Q8_0 | 8-18GB | Budget hardware |

The Plus 14B variant with GGUF quantization hits the perfect balance for most users. It delivers professional results while running on consumer GPUs through intelligent quantization.

How Qwen Image Edit Compares to Traditional Editing Tools

Before installation, you need to understand where Qwen Image Edit fits in your workflow compared to existing solutions.

Qwen Image Edit vs Adobe Photoshop

Photoshop remains the industry standard for manual image editing, but the workflows couldn't be more different.

Photoshop Strengths:

- Pixel-perfect control over every element

- Unlimited layers and non-destructive editing

- Massive ecosystem of plugins and extensions

- Industry-standard file format support

- Professional color management

Photoshop Limitations:

- Steep learning curve requiring years to master

- Time-intensive for complex edits

- Requires manual selection and masking

- Subscription costs add up over time

- No AI understanding of semantic content

Qwen Image Edit Advantages:

- Natural language instructions, no technical skills needed

- Minutes instead of hours for complex edits

- Automatic understanding of image semantics

- One-time setup cost, no subscriptions

- Runs entirely offline on your hardware

Of course, platforms like Apatero.com provide professional AI image editing through a simple web interface without managing local installations. You get instant results without technical complexity.

Qwen Image Edit vs Other AI Editing Models

Several AI models attempt instruction-based editing, but with varying capabilities.

InstructPix2Pix: Early instruction-based editing model from Berkeley AI Research. Good for simple edits but struggles with complex multi-step instructions. Limited resolution support (512x512 native). Requires careful prompt engineering for consistent results.

ControlNet Inpainting: Powerful but requires manual mask creation. Excellent control but loses the simplicity of natural language instructions. Better for targeted edits when you know exactly what needs changing.

Stable Diffusion Inpainting: General-purpose inpainting works well for filling removed areas but lacks semantic understanding. Can't interpret complex editing instructions like "make the lighting more dramatic" or "age the subject by 10 years."

Qwen-Image-Edit 2509 Plus Differentiators:

- Superior understanding of complex multi-step instructions

- Better preservation of non-edited regions

- Native high-resolution support up to 4096x4096

- GGUF quantization for accessible hardware requirements

- Faster inference than comparable models

- More consistent results across diverse image types

The Cost-Performance Reality

Let's analyze the economics over one year of moderate use (200 edited images per month).

Adobe Photoshop + AI Tools:

- Creative Cloud Photography Plan: $20/month = $240/year

- Additional AI editing plugins: $15-30/month = $180-360/year

- Total: $420-600/year (requires expertise)

Commercial AI Editing Services:

- Remove.bg, Photoroom, etc.: $10-30/month = $120-360/year

- Limited to specific editing types

- Quality varies by service

Qwen-Image-Edit Local Setup:

- RTX 4070 Ti or similar (one-time): $800-900

- Electricity for one year: ~$30

- Total first year: ~$830-930, then ~$30/year

Apatero.com:

- Pay-per-edit pricing with no infrastructure investment

- Professional results without hardware or technical knowledge

- Instant access with guaranteed performance

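For high-volume editing, local Qwen-Image-Edit pays for itself in roughly 1.4 to 2.3 years compared with Photoshop plus AI plugins at the prices above. Compared with the cheaper commercial services, it takes considerably longer (about 2.4 to 10 years). However, Apatero.com eliminates setup complexity entirely for users who prefer managed services.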
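You can check the break-even point yourself with a few lines of Python. This sketch uses only the estimates listed above:

- hardware at $800-900
- about $30/year in electricity
- $420-600/year for Photoshop plus plugins
- $120-360/year for commercial services

It ignores resale value, your time, and future price changes.

```python
def break_even_years(hardware_cost: float, local_yearly: float, alternative_yearly: float) -> float:
    """Years until a one-time hardware purchase beats a recurring alternative.

    Returns infinity when the alternative costs no more per year than
    running locally, because the purchase then never pays for itself.
    """
    yearly_saving = alternative_yearly - local_yearly
    if yearly_saving <= 0:
        return float("inf")
    return hardware_cost / yearly_saving


# Figures from the cost comparison above (USD).
ELECTRICITY_PER_YEAR = 30

scenarios = {
    "Photoshop + plugins, best case": (800, 600),
    "Photoshop + plugins, worst case": (900, 420),
    "Commercial services, best case": (800, 360),
    "Commercial services, worst case": (900, 120),
}

for name, (hardware, alternative) in scenarios.items():
    years = break_even_years(hardware, ELECTRICITY_PER_YEAR, alternative)
    print(f"{name}: {years:.1f} years")
```

Swap in your own hardware price and subscription costs to rerun the comparison.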

How Do You Install Qwen-Image-Edit 2509 Plus in ComfyUI?

System Requirements

Minimum Specifications (GGUF Q4_K_M):

- ComfyUI version 0.3.48+

- 8GB VRAM (using GGUF quantization)

- 16GB system RAM

- 40GB free storage for models

- NVIDIA GPU with CUDA 11.8+ support

Recommended Specifications (FP16):

- 28GB+ VRAM for full FP16 precision

- 32GB system RAM

- NVMe SSD for faster model loading

- A 48GB card such as the RTX A6000 for optimal performance (a 24GB RTX 4090 falls short of the FP16 requirement)

Step 1: Install ComfyUI-Qwen Extension

Qwen models require a custom node pack for ComfyUI integration. If you're new to ComfyUI custom nodes, our essential nodes guide provides helpful context.

- Open terminal and navigate to ComfyUI/custom_nodes/

- Clone the Qwen extension with git clone https://github.com/QwenLM/ComfyUI-Qwen

- Navigate into ComfyUI-Qwen directory

- Install dependencies with pip install -r requirements.txt

- Restart ComfyUI completely

Verify installation by opening ComfyUI and checking for "Qwen" nodes in the node browser (right-click menu, search "qwen").

Step 2: Download Qwen-Image-Edit Models

Qwen provides models in multiple formats through Hugging Face.

Text Encoder (Required):

- Download Qwen2.5-VL-7B-Instruct from Hugging Face

- Place in ComfyUI/models/text_encoders/qwen/

Main Model Files:

For FP16 Full Precision (requires 28GB+ VRAM):

- Download Qwen-IE-2509-Plus-14B-FP16 from Hugging Face

- Place in ComfyUI/models/checkpoints/

For GGUF Quantized (recommended for most users):

- Download Qwen-IE-2509-Plus-14B-Q5_K_M.gguf from Hugging Face

- Place in ComfyUI/models/unet/ (the ComfyUI-GGUF loader reads .gguf files from this folder, not from checkpoints/)

- Q5_K_M offers best quality-performance balance

Find all official models at Qwen's Hugging Face repository.

Step 3: Install GGUF Support (For Quantized Models)

GGUF models require additional runtime support in ComfyUI.

- Navigate to ComfyUI/custom_nodes/

- Clone GGUF support with git clone https://github.com/city96/ComfyUI-GGUF

- Navigate into the ComfyUI-GGUF directory

- Install dependencies with pip install -r requirements.txt

- Restart ComfyUI

You should now see GGUF-specific loader nodes in the node browser.
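If you'd rather check from a script than from the node browser, ComfyUI's built-in server lists every registered node class at its /object_info endpoint. This is a minimal sketch. It assumes ComfyUI is running at the default local address http://127.0.0.1:8188. Matching on a keyword is a rough heuristic, since each node pack chooses its own class names.

```python
import json
import urllib.request


def fetch_object_info(base_url: str = "http://127.0.0.1:8188") -> dict:
    """Download ComfyUI's registry of installed node classes (GET /object_info)."""
    with urllib.request.urlopen(f"{base_url}/object_info", timeout=30) as response:
        return json.load(response)


def find_nodes(object_info: dict, keyword: str) -> list:
    """Return sorted class names whose class or display name contains `keyword`."""
    keyword = keyword.lower()
    matches = []
    for class_name, info in object_info.items():
        display_name = str(info.get("display_name", ""))
        if keyword in class_name.lower() or keyword in display_name.lower():
            matches.append(class_name)
    return sorted(matches)


# Usage (with ComfyUI running locally):
# info = fetch_object_info()
# for keyword in ("qwen", "gguf"):
#     print(keyword, find_nodes(info, keyword) or "MISSING")
```

An empty result for either keyword usually means the corresponding node pack failed to load. Check the ComfyUI console output for import errors.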

Step 4: Verify Directory Structure

Your ComfyUI installation should now have these directories and files:

Main Structure:

- ComfyUI/models/text_encoders/qwen/Qwen2.5-VL-7B-Instruct/

- ComfyUI/models/unet/Qwen-IE-2509-Plus-14B-Q5_K_M.gguf

- ComfyUI/custom_nodes/ComfyUI-Qwen/

- ComfyUI/custom_nodes/ComfyUI-GGUF/

The text encoder should be in a qwen subfolder inside text_encoders, and both custom node folders should be present in custom_nodes.

Step 5: Load Official Workflow Templates

The Qwen team provides starter workflows optimized for common editing tasks.

- Download workflow JSON files from Qwen's GitHub examples

- Launch ComfyUI web interface

- Drag workflow JSON into the browser window

- ComfyUI loads all nodes and connections automatically

- Verify that no nodes are highlighted in red (missing components)

Red nodes indicate missing dependencies or incorrect file paths. Double-check installation steps and file locations.

Your First Edit with Qwen-Image-Edit

Let's perform your first instruction-based edit to understand how Qwen-Image-Edit works.

Basic Image Editing Workflow

- Load the "Qwen-IE Basic Edit" workflow template

- Click "Load Image" node and upload your source image

- In the "Editing Instruction" text node, type your edit command

- Configure the "Qwen Sampler" node with these settings:

  - Steps: 30 (higher = better quality, longer generation)

  - CFG Scale: 7.5 (controls instruction adherence)

  - Preservation Strength: 0.8 (how much to keep unchanged areas intact)

  - Seed: -1 for random results

- Set output parameters in "Save Image" node

- Click "Queue Prompt" to start editing

Your first edit will take 2-8 minutes depending on hardware, image resolution, and model quantization level.

Understanding Editing Parameters

Steps (Denoising Iterations): The number of refinement passes. More steps produce cleaner, more coherent edits. Start with 30 for testing, increase to 50-70 for final outputs. Diminishing returns above 70 steps.

CFG (Classifier-Free Guidance) Scale: Controls how closely the model follows your instruction. Lower values (5-6) allow creative interpretation. Higher values (8-10) force strict adherence. Sweet spot is 7-7.5 for balanced results.

Preservation Strength: Qwen-specific parameter controlling how aggressively to preserve unedited regions. Range 0.0-1.0. Default 0.8 works well. Increase to 0.9 if too much changes. Decrease to 0.6-0.7 if edits aren't strong enough.
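If you script your edits, it helps to keep these ranges in one place. This is a sketch only. EditSettings is a hypothetical helper whose field names mirror the parameters described in this guide, not the input names of any particular node. Its checks are advisory.

```python
from dataclasses import dataclass


@dataclass
class EditSettings:
    """Sampler settings from this guide, with its suggested defaults."""

    steps: int = 30                     # 30 for testing, 50-70 for final outputs
    cfg_scale: float = 7.5              # 7-7.5 is the suggested sweet spot
    preservation_strength: float = 0.8  # 0.0-1.0; raise to keep more unchanged
    seed: int = -1                      # -1 requests a random seed

    def warnings(self) -> list[str]:
        """Return advisory messages for values outside the suggested ranges."""
        notes = []
        if not 0.0 <= self.preservation_strength <= 1.0:
            notes.append("preservation_strength must be between 0.0 and 1.0")
        if self.steps > 70:
            notes.append("steps above 70 give diminishing returns")
        if self.cfg_scale < 5 or self.cfg_scale > 10:
            notes.append("cfg_scale is outside the 5-10 range discussed above")
        if self.seed < -1:
            notes.append("seed must be -1 (random) or a non-negative integer")
        return notes
```

For example, `EditSettings(steps=80).warnings()` flags the step count before you spend time on a long render.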

Resolution Handling: Qwen-Image-Edit automatically handles different resolutions up to 4096x4096. Images above 2048px on the longest side may benefit from tiling for better detail preservation.
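Tiling usually means cutting the image into overlapping tiles, processing each one, and blending the overlaps. The sketch below covers only the first part, computing the tile grid. The 1024px tile and 128px overlap are assumed defaults rather than Qwen-specific values. Because each tile only sees part of the image, tiling suits detail work better than global instructions such as changing the sky.

```python
def tile_boxes(width: int, height: int, tile: int = 1024, overlap: int = 128):
    """Split an image into overlapping (left, top, right, bottom) boxes.

    Tiles are `tile` pixels square (smaller only when the image is), and
    neighbouring tiles share at least `overlap` pixels for seam blending.
    """
    if overlap >= tile:
        raise ValueError("overlap must be smaller than the tile size")

    def starts(length: int) -> list:
        if length <= tile:
            return [0]
        stride = tile - overlap
        positions = list(range(0, length - tile, stride))
        positions.append(length - tile)  # last tile sits flush with the edge
        return positions

    boxes = []
    for top in starts(height):
        for left in starts(width):
            boxes.append((left, top, min(left + tile, width), min(top + tile, height)))
    return boxes
```

A 4096x4096 image produces a 5x5 grid of 1024px tiles with these defaults.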

Writing Effective Editing Instructions

The quality of your edits depends heavily on clear, specific instructions.

Good Instruction Examples:

- "Change the sky to sunset with warm orange and pink tones"

- "Remove the person in the background on the left side"

- "Make the subject smile and look directly at the camera"

- "Add dramatic side lighting from the right"

- "Change the season to autumn with falling leaves"

Poor Instruction Examples:

- "Make it better" (too vague)

- "Fix everything" (no specific target)

- "Change colors" (which colors? to what?)

- "Remove stuff" (what stuff?)

Instruction Writing Best Practices:

- Be specific about what to change

- Mention location if relevant ("background", "left side", "subject's face")

- Describe desired outcome, not just removal

- Use clear adjectives for style changes

- Keep instructions to 1-2 sentences for best results
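These guidelines can be turned into a quick pre-flight check before you queue an edit. This is a rough heuristic sketch. The vague-word list and thresholds are illustrative assumptions, not rules Qwen-Image-Edit enforces. A clean result doesn't guarantee a good edit.

```python
import re

# Words that, on their own, rarely tell the model what to change (illustrative list).
VAGUE_TERMS = {"better", "everything", "stuff", "things", "nicer"}


def lint_instruction(instruction: str) -> list[str]:
    """Return warnings for an editing instruction based on the practices above."""
    warnings = []
    words = re.findall(r"[a-z']+", instruction.lower())
    sentences = [s for s in re.split(r"[.!?]+", instruction) if s.strip()]

    if len(words) < 4:
        warnings.append("very short: say what to change and how")
    vague = sorted(VAGUE_TERMS.intersection(words))
    if vague:
        warnings.append(f"vague wording: {', '.join(vague)}")
    if len(sentences) > 2:
        warnings.append("more than 2 sentences: consider sequential edits")
    return warnings
```

All of the good examples above pass without warnings, while "Make it better" is flagged as both too short and vague.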

Your First Results

When editing completes, compare the output to your source image carefully.

Quality Checks:

- Did the model execute your instruction accurately?

- Are non-edited areas well preserved?

- Is there visible quality degradation or artifacts?

- Does the edit look natural and cohesive?

If results aren't satisfactory, adjust your instruction clarity or modify parameters before re-running.

For users who want professional results without workflow complexity, remember that Apatero.com provides AI image editing through an intuitive interface. No node configurations or parameter tuning required.

Advanced Qwen-Image-Edit Techniques

Once you've mastered basic edits, these advanced techniques will significantly expand your creative capabilities.

Multi-Step Sequential Editing

Instead of describing all changes in one instruction, break complex edits into sequential steps.

Sequential Editing Workflow:

- Load your source image

- Apply first edit (e.g., "remove background clutter")

- Feed output image to second edit node

- Apply second edit (e.g., "add dramatic lighting")

- Feed to third edit for final refinement

- Chain as many steps as needed

This approach produces better results than cramming multiple instructions into one prompt, because each step lets the model focus on a single change.

Example Sequential Chain:

- Step 1: "Remove the telephone pole from the right side"

- Step 2: "Change the sky to a dramatic stormy sunset"

- Step 3: "Enhance the subject's face with better lighting"

- Step 4: "Add subtle depth of field blur to the background"
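In code, sequential editing is a simple loop that feeds each output into the next edit. In this sketch, `edit` is a hypothetical stand-in for whatever runs a single Qwen edit in your setup, so only the chaining logic is shown. Keeping every intermediate result lets you rerun a bad step without repeating the ones before it.

```python
from typing import Callable, List, TypeVar

ImageT = TypeVar("ImageT")  # any image representation: a file path, an image object, a tensor


def run_edit_chain(image: ImageT, instructions: List[str],
                   edit: Callable[[ImageT, str], ImageT]) -> List[ImageT]:
    """Apply instructions one at a time, feeding each output into the next edit.

    Returns every intermediate result in order.
    """
    results = []
    current = image
    for instruction in instructions:
        current = edit(current, instruction)
        results.append(current)
    return results


# Usage sketch (my_qwen_edit is hypothetical):
# chain = [
#     "Remove the telephone pole from the right side",
#     "Change the sky to a dramatic stormy sunset",
#     "Enhance the subject's face with better lighting",
#     "Add subtle depth of field blur to the background",
# ]
# results = run_edit_chain("photo.png", chain, edit=my_qwen_edit)
```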

Style Transfer and Aesthetic Changes

Qwen-Image-Edit excels at applying stylistic changes while preserving image structure.

Style Instruction Examples:

- "Convert to black and white with high contrast film look"

- "Apply bold fantasy art style with saturated colors"

- "Transform to look like an oil painting"

- "Add cinematic color grading with teal and orange tones"

- "Make it look like a vintage 1970s photograph"

Lighting Modifications:

- "Add golden hour lighting from the left side"

- "Change to dramatic low-key lighting with deep shadows"

- "Apply soft diffused overcast lighting"

- "Add rim lighting to separate subject from background"

Object Manipulation and Composition Changes

Beyond simple removal, Qwen can modify, add, and transform objects intelligently.

Object Addition:

- "Add a red vintage car parked on the street"

- "Place a large ornate mirror on the wall behind the subject"

- "Add flowering plants in the foreground"

Object Transformation:

- "Change the subject's shirt from blue to red"

- "Transform the wooden chair into a modern office chair"

- "Age the building to look weathered and abandoned"

Composition Adjustments:

- "Shift the subject to the right side following rule of thirds"

- "Add foreground elements for depth"

- "Crop to vertical portrait orientation"

Preservation Masking for Selective Edits

For maximum control, combine Qwen-Image-Edit with manual masks to specify exactly what should change.

Masked Editing Workflow:

- Create a mask in ComfyUI or external tool highlighting edit region

- Connect mask to Qwen's "Edit Mask" input

- Provide editing instruction

- Model only modifies masked areas

- Perfect preservation of everything outside the mask

This hybrid approach combines AI intelligence with manual control for pixel-perfect results.

Batch Processing for Consistent Edits

Apply the same edit instruction to multiple images automatically.

Batch Workflow Setup:

- Load multiple images into a batch loader node

- Connect to Qwen-Image-Edit node

- Provide single instruction applied to all images

- Process sequentially or in parallel (if VRAM allows)

- Output batch of edited images

Perfect for applying consistent style changes, watermark removal, background replacement, or color correction across photo sets.

Want to skip the complexity? Apatero gives you professional AI results instantly with no technical setup required.

What GGUF Quantization Strategy Should You Use?

GGUF quantization makes Qwen-Image-Edit accessible on budget hardware. Understanding the trade-offs helps you choose the right quantization level. For a deep dive into how GGUF changed AI model deployment, check out our article on the GGUF revolution.

GGUF Quantization Levels Explained

GGUF uses different quantization formats balancing quality and memory usage.

| GGUF Format | VRAM Usage | Quality vs FP16 | Generation Speed | Best For |
|---|---|---|---|---|
| Q4_K_M | 8-10GB | 85-88% | 1.4x faster | Rapid iteration on low VRAM |
| Q5_K_M | 10-13GB | 92-95% | 1.2x faster | Best quality-performance balance |
| Q6_K | 13-16GB | 96-98% | 1.1x faster | Near-original with memory savings |
| Q8_0 | 16-18GB | 98-99% | 1.05x faster | Maximum GGUF quality |
| FP16 (Original) | 28GB+ | 100% baseline | 1.0x baseline | Production with unlimited VRAM |

Quality Degradation Characteristics:

Lower quantization levels affect different aspects of image quality:

- Fine details: Most impacted, slight softening at Q4_K_M

- Color accuracy: Well preserved even at Q4_K_M

- Edge sharpness: Moderate impact, noticeable at Q4_K_M

- Instruction adherence: Minimal impact across all quantization levels
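Most of the VRAM column follows from simple arithmetic: weight size is roughly parameter count × bits per weight ÷ 8. This rough sketch uses the 14B parameter count from the variants table; substitute the count for the file you actually download.

- It uses the base block formats (Q4_K, Q5_K), because the _K_M variants keep some tensors at higher precision and have no single fixed rate.
- It covers weights only, so activations and the text encoder come on top.

```python
# Approximate storage cost per weight for llama.cpp-style GGUF block formats.
# "_K_M" files mix in some higher-precision tensors, so they run a bit larger.
BITS_PER_WEIGHT = {
    "Q4_K": 4.5,
    "Q5_K": 5.5,
    "Q6_K": 6.5625,
    "Q8_0": 8.5,   # 32 int8 values plus one FP16 scale per block
    "F16": 16.0,
}


def weight_size_gb(num_params: float, quant: str) -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BITS_PER_WEIGHT[quant] / 8 / 1e9


for quant in BITS_PER_WEIGHT:
    print(f"{quant:>5}: {weight_size_gb(14e9, quant):5.1f} GB")
```

The F16 result matches the table's 28GB figure, which is a useful sanity check on the method.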

Choosing Your Quantization Level

Match quantization to your hardware and use case.

For 8GB VRAM GPUs (RTX 4060 Ti, RTX 3070):

- Use Q4_K_M for images up to 1024x1024

- Expect slight quality degradation but usable results

- Perfect for testing and iteration

- Consider tiling for larger images

Check out our low VRAM optimization guide for more tips on running models on budget hardware.

For 12GB VRAM GPUs (RTX 4070, RTX 3080):

- Use Q5_K_M for best balance

- Handles up to 2048x2048 comfortably

- Quality difference from FP16 barely noticeable

- Recommended for most users

For 16GB VRAM GPUs (RTX 4080):

- Use Q6_K for near-perfect quality; Q8_0 (16-18GB) may need CPU offloading on a 16GB card

- Supports full 4096x4096 resolution

- Almost indistinguishable from FP16

- Ideal for professional work

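For 24GB+ VRAM (RTX 4090, Professional Cards):

- On 24GB cards such as the RTX 4090, use Q8_0 (FP16 needs 28GB+)

- On professional cards with 28GB+, use FP16 for absolute maximum quality with no compromises

- Fastest fine-tuning if needed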
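The tiers above boil down to a simple lookup. This sketch follows this guide's thresholds, with the FP16 cutoff placed at the 28GB+ requirement from the quantization table. Image resolution, other running applications, and offloading settings can all shift where your card actually lands.

```python
def recommend_quant(vram_gb: float) -> str:
    """Map available VRAM (in GB) to the quantization tiers suggested in this guide."""
    if vram_gb < 8:
        raise ValueError("below the 8GB minimum discussed in this guide")
    if vram_gb < 12:
        return "Q4_K_M"
    if vram_gb < 16:
        return "Q5_K_M"
    if vram_gb < 28:
        return "Q6_K or Q8_0"
    return "FP16"
```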

Installing and Using GGUF Models

GGUF models require the GGUF loader node installed in Step 3 of the installation section.

- Download your chosen GGUF quantization level from Hugging Face

- Place in ComfyUI/models/unet/ (the folder the ComfyUI-GGUF loader reads from)

- In your workflow, use the "Unet Loader (GGUF)" node from ComfyUI-GGUF instead of the standard loader

- Select your GGUF file from the dropdown

- Everything else remains identical to FP16 workflow

The GGUF loader automatically handles quantization format and memory optimization.

Memory Management for Maximum Efficiency

Additional techniques to maximize VRAM efficiency with GGUF models.

Enable Attention Slicing: Reduces peak VRAM usage during the attention computation. In ComfyUI this is a launch flag rather than a settings toggle: start ComfyUI with --use-split-cross-attention. Allows processing of larger images with minimal speed impact.

Use CPU Offloading: Move model components to system RAM when not actively processing. ComfyUI handles this automatically. Launching it with --lowvram offloads more aggressively, saving additional VRAM at the cost of a 15-20 percent speed reduction.

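Process at Lower Resolution: Edit at 1024x1024 or 1536x1536 during iteration, then run the final edit at full resolution. How the model interprets an instruction usually carries over. Expect the exact result to change at the new resolution even with the same seed, though, since the noise pattern depends on image size.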
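For the preview pass you need a smaller size that keeps the aspect ratio. The 1024px target in this small sketch comes from the tip above. Rounding each side to a multiple of 16 is an assumption about the model's latent grid, so change `multiple` if your workflow expects something else.

```python
def preview_size(width: int, height: int, longest_side: int = 1024, multiple: int = 16):
    """Return a (width, height) preview whose longest side is about `longest_side`.

    The aspect ratio is kept, images that are already smaller are only
    snapped to the grid, and each side becomes a multiple of `multiple`.
    """
    scale = min(1.0, longest_side / max(width, height))

    def snap(value: float) -> int:
        # Round to the nearest multiple, but never below one multiple.
        return max(multiple, round(value / multiple) * multiple)

    return snap(width * scale), snap(height * scale)
```

For example, a 3000x2000 photo previews at 1024x688.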

Clear Cache Between Operations: Use ComfyUI's cache clearing function between different editing tasks. Prevents memory fragmentation over extended sessions.

If GGUF optimization still seems complex, consider that Apatero.com handles all infrastructure automatically. You get maximum quality without managing quantization formats or memory constraints.

Real-World Qwen-Image-Edit Use Cases

Qwen's instruction-based editing unlocks practical workflows across multiple industries.

E-Commerce and Product Photography

Background Replacement: "Replace the background with a clean white studio background"

- Instant professional product shots from any source photo

- Consistent backgrounds across entire product catalog

- No manual masking or green screen required

Seasonal Variations: Generate multiple versions of product photos for different campaigns.

- "Add Christmas decorations and snow"

- "Place product in a summer beach setting"

- "Show product in modern minimalist home interior"

Color Variant Generation: "Change the product color from blue to red" produces additional product photos without re-shooting inventory.

Real Estate and Architecture

Virtual Staging: "Add modern furniture and decor to the empty living room" transforms vacant properties into appealing spaces for listings.

Time of Day Variations: Show properties in best lighting conditions regardless of when photos were taken.

- "Change to golden hour sunset lighting"

- "Show the exterior at blue hour twilight"

Seasonal Appeal: "Add autumn foliage and warm tones" or "Show landscaping in full spring bloom" makes properties more attractive to buyers.

Content Creation and Social Media

Thumbnail Optimization: Enhance video thumbnails for maximum click-through rates.

- "Make the expression more excited and energetic"

- "Add dramatic lighting and increase contrast"

- "Remove distracting background elements"

Brand Consistency: Apply consistent color grading and style across all content.

- "Apply bold warm color grading matching brand guidelines"

- Process entire content libraries for cohesive visual identity

Quick Corrections: Fix issues in already-published content without reshooting.

- "Remove the stain on the shirt"

- "Fix the harsh shadows on the face"

- "Straighten the crooked picture frame"

Creative Arts and Concept Development

Mood Board Generation: Take a single concept image and generate variations exploring different directions.

- "Make darker and more mysterious"

- "Transform to bright and cheerful aesthetic"

- "Add futuristic sci-fi elements"

Style Exploration: Test different artistic styles on the same composition before committing to final execution.

Concept Iteration: Rapidly iterate on creative concepts through natural language adjustments without starting from scratch.

How Do You Troubleshoot Common Issues?

Even with proper setup, you may encounter specific challenges. Here are proven solutions.

Excessive Changes to Preserved Areas

Symptoms: Parts of the image you wanted to keep unchanged are being modified.

Solutions:

- Increase "Preservation Strength" parameter to 0.85-0.95

- Make your instruction more specific about what should change

- Use preservation masking to protect specific regions

- Reduce CFG scale slightly (to 6.5-7.0)

- Try different seed values (some seeds preserve better)

Edits Not Strong Enough

Symptoms: The instruction is being followed but changes are too subtle.

Solutions:

- Increase CFG scale to 8.5-9.5

- Reduce "Preservation Strength" to 0.6-0.7

- Use more emphatic language ("dramatically change", "completely transform")

- Increase sampling steps to 50-70

- Break edit into sequential steps, each building on the previous

Quality Degradation or Artifacts

Symptoms: Output image has visible artifacts, blurriness, or quality loss compared to input.

Solutions:

- Use higher GGUF quantization (Q6_K or Q8_0 instead of Q4_K_M)

- Increase sampling steps to 60+

- Verify source image resolution isn't already degraded

- Check that model files downloaded completely and correctly

- Enable tiling for high-resolution images
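For the download check, a hash comparison is the reliable test. This sketch uses only the Python standard library. Compare the result against the SHA-256 that Hugging Face lists on each large (LFS) file's page. The extra header check only confirms that the file starts with the 4-byte GGUF magic. That catches an HTML error page saved under a .gguf name, but not a truncated download.

```python
import hashlib
from pathlib import Path
from typing import List, Optional


def sha256_of(path: Path, chunk_size: int = 8 * 1024 * 1024) -> str:
    """Hash a (possibly multi-gigabyte) file in chunks to keep memory use low."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        while chunk := handle.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest()


def has_gguf_magic(path: Path) -> bool:
    """GGUF files begin with the bytes b'GGUF'."""
    with path.open("rb") as handle:
        return handle.read(4) == b"GGUF"


def verify_download(path: Path, expected_sha256: Optional[str] = None) -> List[str]:
    """Return a list of problems found with a downloaded model file."""
    if not path.is_file():
        return [f"{path} does not exist"]
    problems = []
    if path.suffix == ".gguf" and not has_gguf_magic(path):
        problems.append("missing GGUF header: not a GGUF file")
    if expected_sha256 and sha256_of(path) != expected_sha256.lower():
        problems.append("SHA-256 mismatch: re-download the file")
    return problems
```

An empty list means the file passed every check you asked for.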

Model Not Following Instructions

Symptoms: Output ignores your instruction or produces unrelated changes.

Solutions:

- Simplify instruction to single clear change

- Check instruction language (English works best)

- Verify you're using the Plus 14B model, not smaller 7B

- Try different phrasing of the same concept

- Provide reference style in instruction (e.g., "like a professional portrait")

CUDA Out of Memory Errors

Symptoms: Generation fails partway through with CUDA memory error.

Solutions:

- Switch to lower GGUF quantization (Q5_K_M to Q4_K_M)

- Reduce input image resolution before editing

- Launch ComfyUI with --lowvram for more aggressive CPU offloading

- Close other VRAM-intensive applications

- Enable split attention with the --use-split-cross-attention launch flag

- Process in tiles for very large images

Slow Generation Times

Symptoms: Edits take excessively long compared to expected times.

Solutions:

- Verify you're using GGUF model, not unquantized FP16

- Update CUDA drivers to latest version

- Check that ComfyUI is actually using the GPU (monitor GPU utilization, for example with nvidia-smi)

- Disable unnecessary preview nodes in workflow

- Close browser tabs or other applications stealing compute resources

For persistent issues not covered here, check the Qwen GitHub Issues for community solutions and bug reports.

Qwen-Image-Edit Best Practices for Production

Instruction Library Management

Build a reusable library of proven instructions for common editing tasks.

Organization System:

- /instructions/backgrounds/ - Background replacement prompts

- /instructions/lighting/ - Lighting modification prompts

- /instructions/style/ - Artistic style transfer prompts

- /instructions/objects/ - Object manipulation prompts

- /instructions/corrections/ - Common fix instructions

Document each successful instruction with:

- Exact wording that produced best results

- Example before/after images

- Recommended parameter settings

- Notes on what works and what doesn't
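A folder of small JSON files is enough to implement this. This minimal sketch assumes one file per instruction inside the category folders listed above. InstructionEntry and its fields are hypothetical names chosen to match the documentation checklist, not a format any Qwen tool reads.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class InstructionEntry:
    category: str                                 # e.g. "backgrounds", "lighting"
    name: str                                     # short slug used as the file name
    instruction: str                              # exact wording that worked best
    settings: dict = field(default_factory=dict)  # e.g. {"steps": 50, "cfg_scale": 7.5}
    examples: list = field(default_factory=list)  # before/after image paths
    notes: str = ""                               # what works and what doesn't


def save_entry(root: Path, entry: InstructionEntry) -> Path:
    """Write the entry to <root>/<category>/<name>.json and return that path."""
    target = root / entry.category / f"{entry.name}.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(asdict(entry), indent=2), encoding="utf-8")
    return target


def load_category(root: Path, category: str) -> list:
    """Load every entry saved under one category folder, sorted by file name."""
    folder = root / category
    if not folder.is_dir():
        return []
    return [
        InstructionEntry(**json.loads(path.read_text(encoding="utf-8")))
        for path in sorted(folder.glob("*.json"))
    ]
```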

Quality Control Workflow

Implement staged editing to catch issues early.

Three-Stage Editing Process:

Stage 1 - Quick Preview (2 minutes):

- Use Q4_K_M quantization

- 20 steps

- 1024px resolution

- Verify instruction interpretation

Stage 2 - Quality Check (5 minutes):

- Use Q5_K_M quantization

- 40 steps

- Full resolution

- Verify quality and preservation

Stage 3 - Final Render (8-10 minutes):

- Use Q6_K or higher

- 60-70 steps

- Full resolution

- Only for approved edits

This approach prevents wasting time on high-quality renders of misinterpreted instructions.
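If you automate this process, keeping the stages as data ensures every image passes through the same gates. This sketch uses hypothetical names. The values come from the list above: the final render uses Q6_K and the low end of the 60-70 step range. The approval decision at each gate is still yours.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Stage:
    name: str
    quant: str               # GGUF quantization level
    steps: int
    max_side: Optional[int]  # longest side in pixels; None means full resolution
    goal: str


# The three stages from the list above, cheapest first.
STAGES = (
    Stage("quick preview", "Q4_K_M", 20, 1024, "verify instruction interpretation"),
    Stage("quality check", "Q5_K_M", 40, None, "verify quality and preservation"),
    Stage("final render", "Q6_K", 60, None, "render approved edits only"),  # 60-70 steps, Q6_K or higher
)


def next_stage(current: str, approved: bool) -> Optional[Stage]:
    """Return the stage to run after `current`, or None if rejected or finished."""
    names = [stage.name for stage in STAGES]
    index = names.index(current)  # raises ValueError for an unknown stage name
    if not approved or index == len(STAGES) - 1:
        return None
    return STAGES[index + 1]
```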

Combining with Traditional Tools

Qwen-Image-Edit works best as part of a hybrid workflow.

Recommended Pipeline:

- Initial edit in Qwen: Apply major changes through natural language

- Fine-tuning in Photoshop: Manual pixel-level corrections if needed

- Color grading: Final color adjustments in dedicated tools

- Export: Save in appropriate format for end use

This hybrid approach uses AI for heavy lifting while maintaining human control over final quality.

Integration with Video Generation: Edited images from Qwen make excellent starting points for AI video generation. After perfecting your image edits, feed them into WAN 2.2 for image-to-video animation or WAN 2.2 Animate for character animation.

Batch Processing Strategy

For large editing projects, set up efficient batch workflows.

- Organize source images in categorized folders

- Create editing instruction templates for each category

- Use ComfyUI's batch processing nodes

- Process overnight or during off-hours

- Implement quality control review system for outputs

This systematized approach enables processing hundreds of images consistently.
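ComfyUI's built-in server can run these batches for you. It accepts workflows saved in API format through an HTTP POST to /prompt. This sketch rests on several assumptions:

- The category-to-instruction templates are yours to define.
- image_node and text_node are placeholder node IDs that you must look up in your own exported workflow.
- The instruction input name ("text" here) depends on the editing node you use.
- LoadImage expects a file name from ComfyUI's input folder, so copy the images there first.

```python
import copy
import json
import urllib.request
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def build_jobs(source_root: Path, templates: dict) -> list:
    """Pair each image in a category folder with that category's instruction.

    Folders without a template are skipped so nothing is edited by accident.
    """
    jobs = []
    for folder in sorted(p for p in source_root.iterdir() if p.is_dir()):
        instruction = templates.get(folder.name)
        if instruction is None:
            continue
        for image in sorted(folder.iterdir()):
            if image.suffix.lower() in IMAGE_SUFFIXES:
                jobs.append((image, instruction))
    return jobs


def fill_workflow(template: dict, image_name: str, instruction: str,
                  image_node: str, text_node: str, text_input: str = "text") -> dict:
    """Copy an API-format workflow and set its input image and instruction."""
    workflow = copy.deepcopy(template)
    workflow[image_node]["inputs"]["image"] = image_name  # LoadImage's file input
    workflow[text_node]["inputs"][text_input] = instruction
    return workflow


def queue_prompt(workflow: dict, server: str = "http://127.0.0.1:8188") -> dict:
    """Submit one workflow to ComfyUI's /prompt endpoint and return its JSON reply."""
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(
        f"{server}/prompt", data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


# Usage sketch (node IDs "10" and "12" are placeholders from a hypothetical export):
# template = json.loads(Path("qwen_edit_api.json").read_text())
# templates = {"products": "Replace the background with a clean white studio background"}
# for image, instruction in build_jobs(Path("source_images"), templates):
#     queue_prompt(fill_workflow(template, image.name, instruction, "10", "12"))
```

ComfyUI queues submitted prompts and works through them in order, so a loop like this can run unattended overnight.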

Frequently Asked Questions

Q: What VRAM do I need to run Qwen-Image-Edit 2509 Plus? A: Minimum 8GB VRAM with Q4_K_M GGUF quantization. For the best balance of quality and performance, 12GB with Q5_K_M quantization is recommended. Full FP16 precision requires 28GB+ VRAM.

Q: How does Qwen-Image-Edit compare to Photoshop for image editing? A: Qwen excels at rapid natural language edits (seconds to minutes) without technical skills, while Photoshop offers pixel-perfect manual control. Best results come from using both - Qwen for major changes, Photoshop for fine-tuning.

Q: Can I run Qwen-Image-Edit on AMD or Mac GPUs? A: Currently requires NVIDIA GPUs with CUDA support. AMD ROCm support is experimental. Mac Metal support is not yet available for the 2509 Plus version.

Q: What image resolutions does Qwen-Image-Edit support? A: Up to 4096x4096 pixels with the Plus 14B variant. Lower GGUF quantizations work best up to 2048x2048. Larger images can be processed using tiling techniques.

Q: How long does a typical edit take? A: 2-8 minutes depending on resolution, hardware, quantization level, and sampling steps. Quick previews with Q4_K_M at 20 steps take under 2 minutes. Final quality renders at 60+ steps take 8-10 minutes.

Q: Can Qwen-Image-Edit handle multiple edits in one instruction? A: Yes, but sequential editing (one change per step) produces better results than combining multiple instructions. Break complex edits into 2-4 sequential steps for optimal quality.

Q: What's the difference between Qwen-Image-Edit and InstructPix2Pix? A: Qwen offers superior understanding of complex instructions, better preservation of unedited regions, native high-resolution support, and GGUF quantization for lower VRAM requirements.

Q: Do I need to create masks for edits? A: No, Qwen understands natural language instructions without manual masking. However, you can optionally provide masks for pixel-perfect control over edit regions.

Q: Can I batch process multiple images with the same edit? A: Yes, ComfyUI supports batch processing. Load multiple images, apply the same instruction to all, and process sequentially or in parallel depending on VRAM availability.

Q: Which GGUF quantization level should I use? A: Q5_K_M offers the best quality-performance balance for most users with 12GB VRAM. Q4_K_M works for 8GB systems with slight quality reduction. Q6_K or Q8_0 for near-FP16 quality with memory savings.

What's Next After Mastering Qwen Image Edit

You now have comprehensive knowledge of Qwen Image Edit 2509 Plus installation, workflows, GGUF optimization, and production techniques. You understand how Qwen Image Edit instruction-based editing can dramatically accelerate your image editing workflow. For complete beginners, our beginner's guide to AI image generation provides essential context.

Recommended Next Steps:

- Create your personal instruction library for common editing tasks

- Experiment with sequential multi-step editing workflows

- Test different GGUF quantization levels to find your sweet spot

- Integrate Qwen into your existing creative pipeline

- Join the Qwen community to share results and learn advanced techniques

Additional Resources:

- Qwen Official Documentation for technical details

- Hugging Face Model Hub for all model variants

- ComfyUI Community Wiki for workflow examples

- Qwen Discord community for troubleshooting and tips

Choosing Your Approach:

- Choose Local Qwen-Image-Edit if: You edit images regularly, need complete control over the process, have suitable hardware (8GB+ VRAM with GGUF), value privacy, and want minimal recurring costs

- Choose Apatero.com if: You need instant results without technical setup, prefer guaranteed performance, want pay-as-you-go pricing without hardware investment, or lack dedicated GPU hardware

- Choose Photoshop if: You need pixel-perfect manual control, work with industry-standard workflows, require advanced features beyond AI editing, or already have expertise in traditional tools

Qwen-Image-Edit 2509 Plus represents a new approach to image editing. Natural language instructions replace complex manual workflows, GGUF quantization makes it accessible on budget hardware, and in many scenarios the results rival professional manual editing. Whether you're editing product photos, creating social media content, developing concepts, or processing large image libraries, Qwen puts professional AI editing capabilities directly in your hands.

The future of image editing isn't about choosing between AI and traditional tools. It's about using AI for rapid iteration and heavy lifting, while maintaining human creative direction and final quality control. Qwen-Image-Edit 2509 Plus makes that future available today in ComfyUI, ready for you to explore and master.
