PersonaLive: How to Get Started with Real-Time AI Avatar Streaming

Complete getting started guide for PersonaLive. Generate infinite-length portrait animations in real-time on a 12GB GPU for live streaming.

Real-time AI avatar streaming has been "almost here" for years. PersonaLive actually delivers: a single 12GB GPU generates infinite-length portrait animations with a reported 7-22x speedup over prior diffusion-based methods. This is the first time real-time diffusion-based avatars are genuinely viable.

Quick Answer: PersonaLive is a diffusion framework for real-time portrait animation. It combines hybrid implicit signals, appearance distillation, and autoregressive micro-chunk streaming to reach real-time speed, and it's designed for live streaming applications where latency matters as much as quality.

- Real-time and streamable diffusion on a single 12GB GPU

- 7-22x speedup over prior diffusion-based portrait animation

- Infinite-length video generation via autoregressive streaming

- ComfyUI support available as of December 17, 2025

- Uses hybrid implicit signals for expressive motion control

- Designed specifically for live streaming scenarios

Why PersonaLive Matters

Here's the problem it solves. Diffusion-based portrait animation models focus on visual quality and expression realism, but they ignore generation latency. When you're live streaming, waiting 5-10 seconds per frame isn't viable.

PersonaLive was built from the ground up for streaming. The architecture prioritizes speed alongside quality. The result: real-time portrait animation that actually works for live applications.

I've been testing it since the ComfyUI integration dropped on December 17, 2025. The speed claims are real. Generation keeps up with live input in ways previous methods simply couldn't.

The Technical Approach

PersonaLive achieves its speed through three innovations:

1. Hybrid Implicit Signals

Instead of explicit facial landmarks, PersonaLive uses implicit facial representations and 3D implicit keypoints. This provides expressive image-level motion control without the computational overhead of explicit tracking.

Why this matters: explicit landmark tracking adds a separate processing stage before generation can start. Implicit representations give the model a compact motion signal without that extra stage.

2. Appearance Distillation

PersonaLive proposes a "fewer-step appearance distillation strategy" that eliminates redundancy in the denoising process. Essentially, it learned to skip steps that don't meaningfully improve the output.

Why this matters: fewer denoising steps = faster generation. The distillation identifies which steps are essential and which are just wasted computation.
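To make the "fewer steps = faster" point concrete, here is a rough back-of-the-envelope sketch. The per-step and overhead timings are made-up illustrative numbers, not PersonaLive measurements, and the function names are my own. It also treats each frame independently, which is simpler than how chunked generation actually amortizes cost.

```python
def max_denoising_steps(target_fps: float, step_ms: float, overhead_ms: float = 0.0) -> int:
    """Largest number of denoising steps that fits in one frame's time budget."""
    frame_budget_ms = 1000.0 / target_fps      # e.g. 25 fps leaves 40 ms per frame
    remaining_ms = frame_budget_ms - overhead_ms
    if remaining_ms <= 0:
        return 0
    return int(remaining_ms // step_ms)


def achievable_fps(steps: int, step_ms: float, overhead_ms: float = 0.0) -> float:
    """Frame rate you get if every frame pays for `steps` denoising steps."""
    return 1000.0 / (steps * step_ms + overhead_ms)


if __name__ == "__main__":
    # Assumed numbers: 8 ms per step, 8 ms fixed overhead (encode/decode, I/O).
    print(max_denoising_steps(target_fps=25, step_ms=8.0, overhead_ms=8.0))
    print(achievable_fps(steps=20, step_ms=8.0, overhead_ms=8.0))
    print(achievable_fps(steps=4, step_ms=8.0, overhead_ms=8.0))
```

With those assumed timings, a 20-step sampler lands around 6 fps while a 4-step one hits 25 fps. That gap is why cutting steps matters more for streaming than almost any other setting.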

3. Autoregressive Micro-Chunk Streaming

This is the key innovation for infinite-length generation. Rather than generating entire videos, PersonaLive generates small chunks that stream continuously. A sliding training strategy and historical keyframe mechanism ensure chunks connect smoothly.

Why this matters: you're not waiting for the whole video. Generation happens in real-time as you stream, with no practical length limit.
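The control flow of chunked streaming can be sketched in a few lines. This is only an illustration of the idea, not PersonaLive's code: `stream_micro_chunks`, `generate_chunk`, and the chunk and history sizes are all hypothetical, and the stand-in "model" just formats strings. The real keyframe mechanism and sliding training strategy are not reproduced here.

```python
from collections import deque
from itertools import islice
from typing import Callable, Deque, Iterable, Iterator, List

Frame = str  # placeholder; a real pipeline would pass image tensors


def stream_micro_chunks(
    driving_frames: Iterable[Frame],
    generate_chunk: Callable[[List[Frame], List[Frame]], List[Frame]],
    chunk_size: int = 4,
    history_size: int = 2,
) -> Iterator[Frame]:
    """Yield output frames chunk by chunk, conditioning each chunk on recent keyframes."""
    history: Deque[Frame] = deque(maxlen=history_size)  # bounded, so memory stays flat
    frames = iter(driving_frames)
    while True:
        chunk = list(islice(frames, chunk_size))  # pull only the next few input frames
        if not chunk:
            return  # input ended; a live source would simply never end
        outputs = generate_chunk(chunk, list(history))
        if outputs:
            history.append(outputs[-1])  # keep the last frame as a keyframe for continuity
        yield from outputs


def fake_generate(chunk: List[Frame], history: List[Frame]) -> List[Frame]:
    """Stand-in for the model: tags each frame with how much history it saw."""
    return [f"out({frame}|hist={len(history)})" for frame in chunk]


if __name__ == "__main__":
    for frame in stream_micro_chunks(["f0", "f1", "f2", "f3", "f4"], fake_generate, chunk_size=2):
        print(frame)
```

Because the generator pulls input lazily and the history is bounded, the loop can run on an endless webcam feed without memory growing. That is the property that makes "infinite-length" practical.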

Hardware Requirements

The good news: PersonaLive is designed for consumer hardware.

Minimum viable:

- 12GB VRAM GPU (RTX 3060 12GB, RTX 4070, etc.)

- Modern CPU (for webcam/input processing)

- Stable internet for streaming

Recommended:

- 16-24GB VRAM (RTX 4080, 4090)

- Fast CPU for input processing

- Low-latency capture setup

What I tested on:

- RTX 4090

- Real-time performance with room to spare

- Higher resolution output than minimum specs

The 12GB claim is real. PersonaLive runs on mid-range consumer hardware. This accessibility is part of what makes it significant.

Getting Started in ComfyUI

ComfyUI-PersonaLive is the official integration. Here's the setup:

Installation

- Open ComfyUI Manager

- Search for "PersonaLive"

- Install ComfyUI-PersonaLive node pack

- Restart ComfyUI

Or clone manually:

    cd ComfyUI/custom_nodes
    git clone https://github.com/GVCLab/ComfyUI-PersonaLive
    pip install -r ComfyUI-PersonaLive/requirements.txt

Required Models

Download from the PersonaLive Hugging Face or ModelScope:

- PersonaLive checkpoint

- Required encoders/decoders

Place in appropriate ComfyUI model folders as specified in the repo README.

Basic Workflow

The minimal workflow needs:

- Input source (webcam, video file, or image sequence)

- PersonaLive nodes for processing

- Output destination (preview, stream, or save)

Example workflows are included in the node pack. Start with these rather than building from scratch.

Setting Up for Live Streaming

The real power of PersonaLive is streaming applications. Here's how to connect it:

Webcam Input

Use ComfyUI's webcam input nodes to capture your face:

- Real-time facial tracking

- Motion drives the avatar

- Expressions transfer to the animated portrait

Virtual Camera Output

Route PersonaLive output to a virtual camera:

- OBS Virtual Camera

- ManyCam

- Other virtual camera solutions

Your streaming software sees the animated avatar as a camera source.

Streaming Platform Integration

With virtual camera output:

- Twitch: Add virtual camera as scene source

- YouTube: Same approach

- Discord: Virtual camera as video input

- Any platform: Works like a normal webcam

You appear as an animated avatar while your real face controls the expressions and motion.

Practical Use Cases

Virtual Streaming

The obvious application. Stream as an animated character without showing your face. Your expressions drive the avatar in real-time.

Benefits:

- Privacy while streaming

- Consistent "look" regardless of your actual appearance

- Character-based content without expensive motion capture

Virtual Meetings

Tired of being on camera? PersonaLive can substitute an avatar for video calls.

I've tested this for casual video calls. Works surprisingly well. Professional settings might raise eyebrows, but for creative or gaming contexts, it's viable.

Content Creation

Generate infinite-length animated content for videos, shorts, and social media. The real-time nature means you can record as you perform rather than animating after the fact.

Interactive Entertainment

Characters that respond in real-time. Gaming streams with AI co-hosts. Interactive experiences where the avatar reacts to audience input.

Performance Optimization

To get the best results from PersonaLive:

Resolution trade-offs:

- Lower resolution = faster performance

- 512px is reliable on 12GB

- 768px works well on 16GB+

- 1024px needs 24GB+ for real-time
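If you script your setup, the resolution tiers above are easy to encode. This is a small sketch using only the thresholds from this article; `suggest_resolution` is a hypothetical helper of mine, not a PersonaLive or ComfyUI function.

```python
from typing import Optional


def suggest_resolution(vram_gb: float) -> Optional[int]:
    """Map available VRAM to the real-time resolution tiers listed in this article."""
    if vram_gb >= 24:
        return 1024
    if vram_gb >= 16:
        return 768
    if vram_gb >= 12:
        return 512
    return None  # below the 12GB minimum this guide assumes


if __name__ == "__main__":
    for vram in (8, 12, 16, 24):
        print(vram, "GB ->", suggest_resolution(vram))
```

Treat the result as a starting point. If frame rate drops during a stream, step down one tier before touching anything else.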

Quality settings:

- Fewer denoising steps for speed

- More steps for quality (pre-recorded content)

- Balance based on your use case

Input quality matters:

- Good lighting on your face

- Clear webcam image

- Minimal background distractions

- Consistent positioning

Reduce background load:

- Close unnecessary applications

- Disable overlays that might interfere

- Dedicated streaming machine ideal

Current Limitations

Being honest about where PersonaLive currently falls short:

Still developing:

- ComfyUI integration is very new (December 2025)

- Workflow documentation is limited

- Community resources still building

Technical constraints:

- Best with front-facing portraits

- Extreme head turns can struggle

- Hand gestures not captured (face only)

- Audio sync requires additional tooling

Quality vs. pre-rendered:

- Real-time quality is good, not perfect

- Pre-rendered diffusion methods still look better

- You're trading some quality for speed, not getting quality parity

For live streaming, these limitations are acceptable trade-offs. For pre-recorded content where speed doesn't matter, other methods may still be preferable.

Combining with Other Tools

PersonaLive is part of a larger toolkit. Here's how it fits:

PersonaLive + Voice Cloning: Your avatar speaks with your cloned voice. Motion from PersonaLive, voice from ElevenLabs or Fish Speech. Complete virtual persona.

PersonaLive + SCAIL: Use SCAIL for complex body animations, PersonaLive for real-time face. Different tools for different parts of the character.

PersonaLive + AI Influencer Pipeline: Generate still images with consistent character (Apatero, IPAdapter), animate the face with PersonaLive for video content.

The combination of these tools enables virtual personas that were previously impossible without professional motion capture.

How Apatero Relates

Full disclosure: I work with Apatero.com. PersonaLive is a separate open source project from GVC Lab at Great Bay University.

That said, there's natural synergy. Apatero handles image and video generation for character creation. PersonaLive handles real-time animation. Using Apatero to create your character, then PersonaLive to animate it for streaming, is a workflow that makes sense.

We're watching PersonaLive development closely. Integration possibilities are interesting.

Troubleshooting

Issue: Low frame rate during streaming

Reduce output resolution. Enable any available optimizations. Close background applications. Consider upgrading GPU if consistently maxed.

Issue: Avatar doesn't match my expressions

Check input lighting. Ensure webcam is properly positioned. Some expressions may not transfer perfectly. Calibrate if options available.

Issue: Disconnected/choppy output

The autoregressive chunks aren't connecting smoothly. Try adjusting chunk size settings if available. Ensure stable input signal.

Issue: Installation errors

PersonaLive has specific dependencies. Check requirements.txt is fully installed. Python version compatibility matters. Try a clean virtual environment.

Issue: VRAM overflow

Reduce resolution. Close other GPU-using applications. Consider VRAM-optimized settings if available.
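Before blaming PersonaLive, it helps to see how much VRAM is already taken by other applications. Here is a minimal sketch that shells out to `nvidia-smi` (NVIDIA GPUs only, and it assumes the tool is on your PATH). The helper names are my own; only the `nvidia-smi` query flags are real.

```python
import subprocess
from typing import List, Tuple


def parse_gpu_memory(csv_text: str) -> List[Tuple[int, int]]:
    """Parse 'used, total' MiB pairs, one line per GPU."""
    rows = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue
        used, total = (int(part.strip()) for part in line.split(","))
        rows.append((used, total))
    return rows


def query_gpu_memory() -> List[Tuple[int, int]]:
    """Ask nvidia-smi for used and total memory in MiB for each GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_memory(result.stdout)


def free_mib(used: int, total: int) -> int:
    """Memory not currently allocated, in MiB."""
    return total - used


if __name__ == "__main__":
    for index, (used, total) in enumerate(query_gpu_memory()):
        print(f"GPU {index}: {free_mib(used, total)} MiB free of {total} MiB")
```

Run it once with ComfyUI closed. If several gigabytes are already in use, a browser, game, or other AI tool is eating the headroom you need.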

Frequently Asked Questions

Can I use PersonaLive for commercial streaming?

Check the license in the GitHub repository. As of writing, it's a research project with academic affiliations. Commercial use terms may have restrictions.

How does latency compare to non-AI solutions?

PersonaLive adds latency vs. direct camera feed, but it's designed to be minimal. For streaming contexts, the delay is typically acceptable.

Can I animate any portrait image?

Within reason. Works best with front-facing portraits similar to training data. Extreme stylization or unusual angles may struggle.

Does it work with anime avatars?

Portrait animation is focused on realistic faces. Anime-style avatars would need different models or approaches.

What's the difference between PersonaLive and other avatar solutions?

PersonaLive uses diffusion-based generation for quality. Other solutions use rigging or 3D models. Diffusion gives more natural results but requires more compute.

Can I add my own avatar character?

Yes, you provide the portrait image. Your face drives the motion, your chosen portrait provides the appearance. Swap portraits to change avatars.

What's Next for PersonaLive

Based on the research paper and project activity:

Near-term:

- Improved ComfyUI workflow examples

- Better documentation

- Community contributions

Potential future:

- Higher resolution support

- Better extreme pose handling

- Audio-synced lip movement

- Body pose support

The project is active. GVC Lab, University of Macau, and Dzine.ai are backing it. Expect continued development through 2026.

Final Thoughts

PersonaLive delivers on the promise of real-time AI avatar streaming. The 7-22x speedup is real. The 12GB VRAM target is real. Generation keeps pace with live input.

For content creators who want to stream as animated characters without expensive motion capture, this is the accessible solution we've been waiting for. The technology works. The barrier is now willingness to set it up, not fundamental capability.

Start with the example workflows. Get basic operation working. Then customize for your specific streaming setup. The learning curve is manageable if you're already comfortable with ComfyUI.

Real-time AI avatars are here. PersonaLive proves it.

Related guides: WAN SCAIL Character Animation, Text to Speech Voice Cloning, AI Influencer Content Creation

Ready to Create Your AI Influencer?

Join 115 students mastering ComfyUI and AI influencer marketing in our complete 51-lesson course.

Related Articles

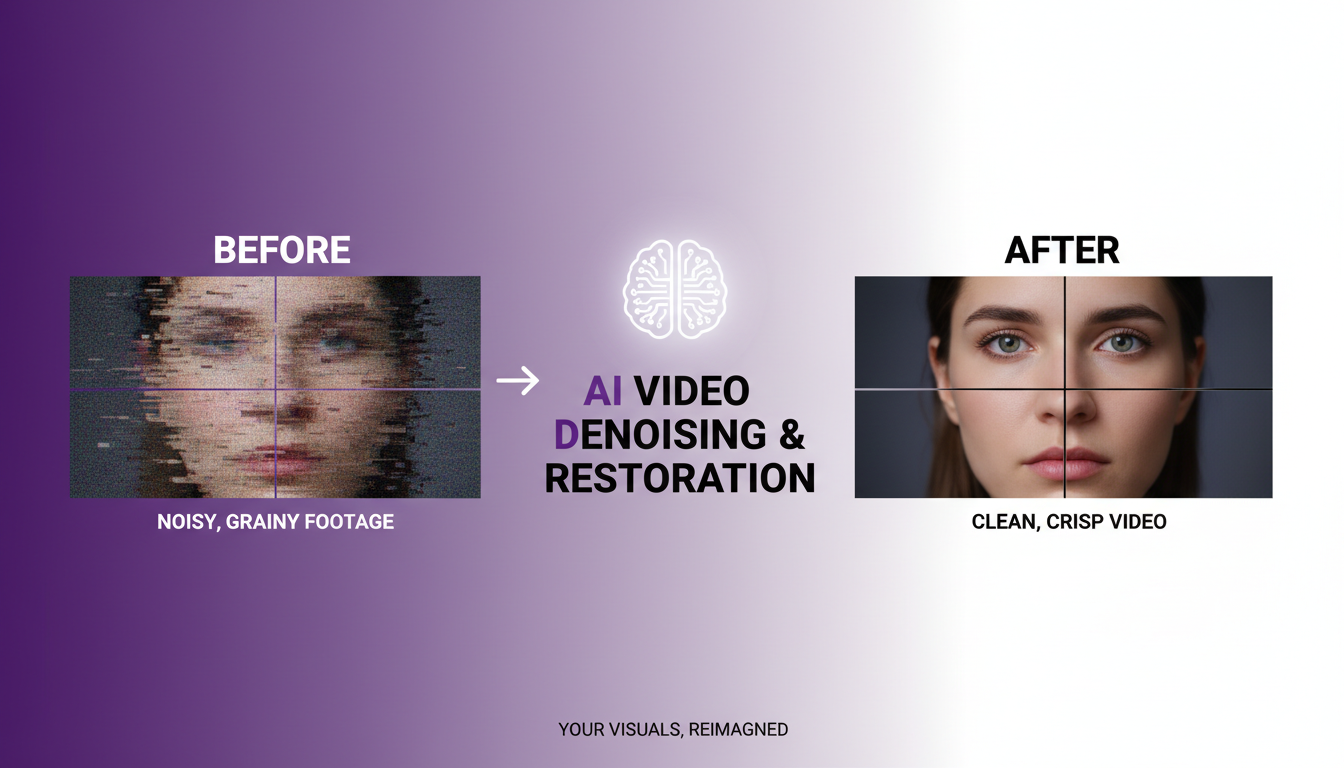

AI Video Denoising and Restoration: Complete Guide to Fixing Noisy Footage (2025)

Master AI video denoising and restoration techniques. Fix grainy footage, remove artifacts, restore old videos, and enhance AI-generated content with professional tools.

AI Video Generation for Adult Content: What Actually Works in 2025

Practical guide to generating NSFW video content with AI. Tools, workflows, and techniques that produce usable results for adult content creators.

AI Video Generator Comparison 2025: WAN vs Kling vs Runway vs Luma vs Apatero

In-depth comparison of the best AI video generators in 2025. Features, pricing, quality, and which one is right for your needs including NSFW capabilities.