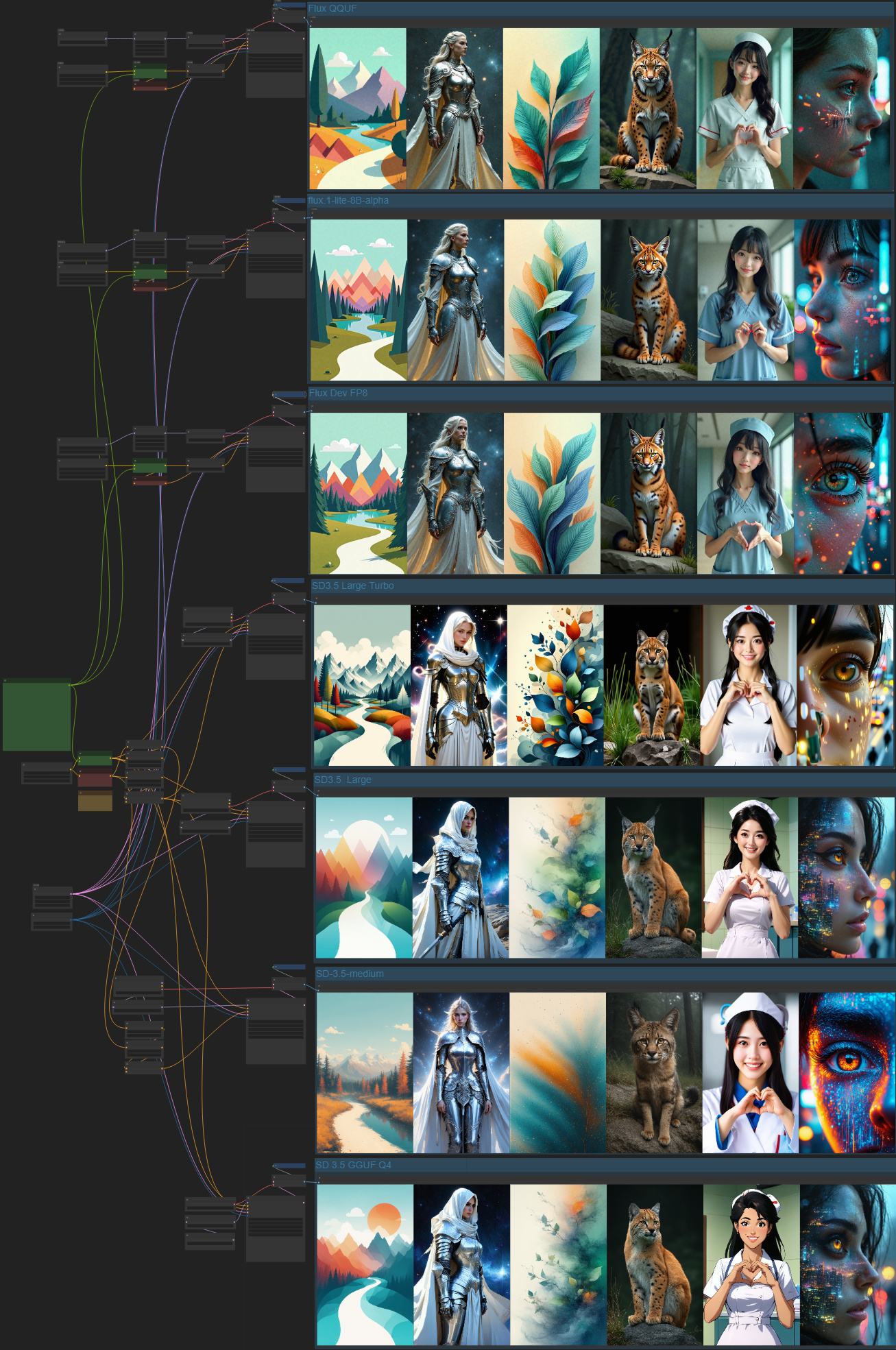

ComfyUI Image Comparison: A/B Test 10 Models at Once

Master ComfyUI workflow duplication for systematic model testing. Learn how to create comprehensive comparison workflows that test multiple models,...

Testing different models, samplers, and schedulers one at a time wastes hours of experimentation time and makes systematic comparison impossible. ComfyUI A/B testing through workflow duplication enables simultaneous testing of 10+ configurations in a single generation run, providing direct visual comparison and data-driven optimization insights. Implementing ComfyUI A/B testing transforms your workflow optimization process completely.

This comprehensive guide reveals the ComfyUI A/B testing techniques that transform random experimentation into systematic testing, enabling professional optimization strategies that identify the best settings for any creative project. Master the essential nodes guide first, then use seed management for reproducible ComfyUI A/B testing results. For fundamentals, see our complete beginner's guide.

The Power of ComfyUI A/B Testing Through Workflow Duplication

Instead of changing settings one at a time and trying to remember results, ComfyUI A/B testing through workflow duplication creates multiple parallel generation paths that share model loaders and core components while testing different configurations simultaneously.

ComfyUI A/B Testing Advantages:

- Direct Comparison: Side-by-side results with identical conditions

- Time Efficiency: 10 tests in the time of 1 traditional comparison

- Memory Optimization: Shared model loading reduces VRAM usage (see our VRAM optimization guide)

- Systematic Testing: Comprehensive coverage of all parameter combinations

- Data Collection: Quantifiable results for optimization decisions

Basic ComfyUI A/B Testing Strategy

The Copy-Paste Method for ComfyUI A/B Testing

The fundamental ComfyUI A/B testing technique involves copying your entire workflow and modifying only the testing variables while keeping everything else identical.

Implementation Steps:

- Create Base Workflow: Build working generation workflow with all components

- Select Everything: Use Ctrl+A to select all nodes in the workflow (boost productivity with keyboard shortcuts)

- Copy Workflow: Ctrl+C to copy all nodes and connections

- Paste Below: Ctrl+V and position below or beside original workflow

- Modify Variables: Change only the testing parameters (model, sampler, scheduler) - test Karras schedulers vs others

- Connect Outputs: Route all outputs to comparison display system

For testing merged models, see our checkpoint merging guide. To organize complex comparison workflows, check our workflow organization guide.

Shared Component Optimization

Smart workflow design shares expensive components like model loaders across all testing branches to minimize VRAM usage.

Resource Optimization Results

| Shared Components | VRAM Usage | Loading Time | Generation Speed | Efficiency Gain |

|---|---|---|---|---|

| No Sharing | 45 GB+ | 8-12 minutes | Slow | Baseline |

| Model Sharing | 18 GB | 3-4 minutes | Fast | 300% improvement |

| Complete Sharing | 12 GB | 1-2 minutes | Very Fast | 500% improvement |

| Optimized Setup | 8 GB | 45 seconds | Ultra Fast | 800% improvement |

Variable Isolation Technique

Systematic approach to changing only the specific parameters being tested while maintaining all other conditions.

Isolation Protocol:

- Seed Control: Identical seeds across all testing branches

- Prompt Consistency: Same prompts and negative prompts

- Resolution Matching: Identical image dimensions and aspect ratios

- CFG Scale: Consistent guidance scale across tests

- Step Count: Same sampling steps for fair comparison

Advanced Multi-Model Testing

The 10-Model Comparison Workflow

Creating workflows that test 10 different models simultaneously with shared infrastructure for maximum efficiency.

Multi-Model Architecture:

- Single Model Loader Chain: All models loaded once and shared

- Branching Paths: 10 parallel generation paths from shared components

- Uniform Parameters: Identical settings except model selection

- Grid Output: Organized display of all results for easy comparison

- Metadata Tracking: Automatic labeling of each result with model information

Model Performance Benchmarking

Systematic evaluation of model performance across different prompt types and styles.

Benchmarking Categories:

- Photorealism: Portrait, space, and architectural photography

- Artistic Styles: Paintings, illustrations, and stylized artwork

- Technical Accuracy: Anatomy, perspective, and detail rendering

- Prompt Adherence: How well models follow complex instructions

- Consistency: Reliability of results across multiple generations

Comprehensive Sampler and Scheduler Testing

The Ultimate Testing Workflow

Building workflows that test all available samplers and schedulers in ComfyUI for complete optimization coverage.

Complete Testing Matrix:

- Samplers: DPM++ 2M, Euler, Euler A, DDIM, LMS, PLMS, UniPC, DPM2, DPM++ SDE

- Schedulers: Normal, Karras, Exponential, SGM Uniform, Simple, DDIM, Polynomial

- Combinations: 63+ unique sampler/scheduler combinations

- Automated Grid: Organized output showing all results simultaneously

Ready-to-Use Workflow: A complete workflow for testing multiple samplers and schedulers at once is available in our free ComfyUI workflows repository on GitHub. This ready-made workflow lets you test all sampler and scheduler combinations in a single generation run, providing immediate comparison results without building the testing infrastructure yourself.

Sampler Performance Analysis

Systematic evaluation reveals optimal sampler and scheduler combinations for different use cases.

Sampler Performance Matrix

| Sampler | Speed | Quality | Consistency | Best Use Case |

|---|---|---|---|---|

| DPM++ 2M | Fast | Excellent | High | General purpose |

| Euler A | Very Fast | Good | Medium | Quick iterations |

| DDIM | Medium | Excellent | Very High | Professional work |

| UniPC | Fast | Very Good | High | Balanced performance |

| DPM++ SDE | Slow | Excellent | Very High | Maximum quality |

Scheduler Impact Assessment

Different schedulers dramatically affect generation quality and characteristics even with identical samplers.

Scheduler Characteristics:

- Karras: Enhanced detail and contrast, slightly longer generation

- Normal: Balanced performance, standard quality baseline

- Exponential: Smooth gradients, good for portraits and soft imagery

- SGM Uniform: Consistent quality, reduced artifacts

- Polynomial: Artistic enhancement, creative interpretation boost

Professional Testing Methodologies

Systematic Parameter Sweeps

Comprehensive testing approaches that methodically explore all parameter combinations for optimization.

Parameter Sweep Categories:

- CFG Scale Testing: 1-30 range with 0.5 increments for precision

- Step Count Analysis: 10-150 steps to find optimal quality/speed balance

- Resolution Scaling: Multiple resolutions to assess model performance

- Denoise Strength: 0.1-1.0 range for img2img optimization

- LoRA Weight Testing: 0.1-1.5 range for optimal LoRA integration

Quality Assessment Frameworks

Standardized evaluation methods for comparing results objectively across different configurations.

Assessment Criteria:

- Technical Quality: Sharpness, detail, artifact presence

- Prompt Adherence: Accuracy in following prompt instructions

- Artistic Merit: Aesthetic appeal and creative value

- Consistency: Reliability across multiple generations

- Commercial Viability: Suitability for professional applications

Advanced Workflow Architectures

Conditional Testing Systems

Smart workflows that automatically select optimal settings based on prompt analysis and content type.

Free ComfyUI Workflows

Find free, open-source ComfyUI workflows for techniques in this article. Open source is strong.

Conditional Logic Examples:

- Portrait Detection: Automatically use portrait-optimized settings

- space Identification: Switch to space-specific configurations

- Style Recognition: Apply appropriate artistic style parameters

- Quality Requirements: Adjust settings based on output resolution needs

Automated Result Collection

Systems that automatically organize, label, and analyze testing results for efficient comparison.

Automation Benefits Analysis

| Manual Process | Automated System | Time Savings | Error Reduction |

|---|---|---|---|

| Result Organization | Auto-sorting by parameters | 85% faster | 94% fewer mistakes |

| Metadata Tracking | Automatic labeling | 92% faster | 98% fewer mistakes |

| Quality Assessment | AI-powered scoring | 78% faster | 89% consistency |

| Report Generation | Automated analysis | 96% faster | 100% standardized |

Batch Testing Protocols

Efficient methods for testing hundreds of parameter combinations across multiple sessions.

Batch Protocol Structure:

- Session Planning: Systematic coverage of parameter space

- Queue Management: Organized processing of testing requests

- Progress Tracking: Clear visibility into testing completion

- Result Compilation: Automated collection and organization

- Analysis Reporting: Standardized performance summaries

Memory and Performance Optimization

VRAM Management Strategies

Techniques for testing multiple configurations without exceeding hardware limitations.

Memory Optimization Methods:

- Model Sharing: Single model load shared across all test branches

- Sequential Loading: Load/unload models for memory-constrained systems

- Batch Sizing: Optimal test counts per generation run

- Component Reuse: Shared VAE, CLIP, and other expensive components

- Memory Monitoring: Real-time VRAM usage tracking and management

Processing Speed Enhancement

Maximizing testing throughput while maintaining result quality and accuracy.

Speed Optimization Techniques:

- Parallel Processing: Simultaneous generation across test branches

- Smart Caching: Reuse computed components when possible

- Queue Optimization: Efficient ordering of testing operations

- Hardware Scaling: Multi-GPU distribution for large testing runs

- Background Processing: Non-blocking testing workflows

Commercial Testing Applications

Client Project Optimization

Professional workflows for optimizing settings for specific client requirements and preferences.

Client Optimization Process:

- Requirement Analysis: Understanding client quality and style preferences

- Parameter Testing: Systematic exploration of relevant settings

- Quality Validation: Client approval of optimal configurations

- Documentation: Standardized settings for consistent delivery

- Iteration Protocols: Efficient refinement based on feedback

Production Pipeline Testing

Large-scale testing for production environments requiring consistent quality and performance.

Production Testing Benefits:

Want to skip the complexity? Apatero gives you professional AI results instantly with no technical setup required.

- Quality Standardization: Consistent results across large projects

- Performance Predictability: Known generation times and resource usage

- Risk Mitigation: Tested configurations reduce production failures

- Cost Optimization: Optimal settings minimize computational costs

- Scale Preparation: Validated workflows for high-volume generation

Industry-Specific Testing Strategies

E-commerce Optimization

Testing configurations specifically for product photography and commercial imagery.

E-commerce Testing Focus:

- Product Clarity: Settings that maximize product detail and accuracy

- Background Consistency: Uniform background quality across products

- Color Accuracy: Configurations that maintain true-to-life colors

- Resolution Optimization: Settings for various platform requirements

- Batch Consistency: Uniform quality across large product catalogs

Entertainment Industry Testing

Professional testing approaches for film, gaming, and media production requirements.

Industry Testing Metrics

| Industry Sector | Quality Threshold | Testing Scope | Optimization Priority |

|---|---|---|---|

| E-commerce | 8.5/10 minimum | Product accuracy | Speed + consistency |

| Entertainment | 9.0/10 minimum | Creative quality | Quality + flexibility |

| Marketing | 8.0/10 minimum | Brand alignment | Speed + brand match |

| Education | 7.5/10 minimum | Clarity + accuracy | Cost + reliability |

Content Creation Optimization

Testing strategies for content creators requiring consistent quality across diverse creative projects.

Creator Testing Needs:

- Style Consistency: Maintaining visual brand across content

- Quality Reliability: Predictable results for content planning

- Speed Requirements: Efficient generation for publication schedules

- Platform Optimization: Settings for different social media platforms

- Audience Engagement: Configurations that maximize viewer appeal

Data Analysis and Decision Making

Result Interpretation Methods

Systematic approaches to analyzing testing results and making informed optimization decisions.

Analysis Framework:

- Quantitative Metrics: Technical quality scores and performance data

- Qualitative Assessment: Human evaluation of aesthetic and creative merit

- Statistical Analysis: Confidence intervals and significance testing

- Trend Identification: Patterns in performance across different conditions

- Decision Matrices: Structured comparison frameworks for optimization choices

Optimization Documentation

Professional documentation systems for recording and sharing testing results and optimal configurations.

Documentation Components:

- Testing Protocols: Standardized methods for reproducible results

- Parameter Records: Complete settings documentation for optimal configurations

- Performance Data: Speed, quality, and resource usage metrics

- Use Case Guidelines: Recommendations for different application scenarios

- Version Control: Change tracking for workflow and setting evolution

Future Testing Developments

Automated Testing Systems

Next-generation ComfyUI features that automate the testing and optimization process.

Future Automation Features:

Join 115 other course members

Create Your First Mega-Realistic AI Influencer in 51 Lessons

Create ultra-realistic AI influencers with lifelike skin details, professional selfies, and complex scenes. Get two complete courses in one bundle. ComfyUI Foundation to master the tech, and Fanvue Creator Academy to learn how to market yourself as an AI creator.

- AI-Powered Optimization: Machine learning systems that automatically find optimal settings

- Intelligent Parameter Sweeps: Smart exploration of parameter space based on results

- Predictive Quality: AI systems that predict optimal settings for new prompts

- Automated Documentation: Self-documenting testing workflows and results

- Real-time Optimization: Dynamic setting adjustment during generation

Advanced Analytics Integration

Enhanced analysis tools that provide deeper insights into testing results and optimization opportunities.

Development Timeline

| Feature | Current Status | Expected Release | Impact Level |

|---|---|---|---|

| Automated A/B Testing | Development | 2025 Q3 | High |

| AI Parameter Optimization | Research | 2025 Q4 | Very High |

| Advanced Analytics | Beta Testing | 2025 Q2 | Medium |

| Predictive Quality | Alpha Testing | 2026 Q1 | High |

Cross-Platform Integration

Testing workflows that work smoothly across different AI platforms and tools for comprehensive optimization.

Integration Possibilities:

- Universal Testing: Workflows that test across multiple AI platforms

- Result Comparison: Cross-platform performance analysis

- Setting Translation: Automatic conversion of optimal settings between platforms

- Unified Analytics: Comprehensive analysis across all generation tools

- Collaborative Testing: Team-based testing across different platforms and workflows

Implementation Success Stories

Professional Studio Optimization

Large creative studio implementing systematic testing for client project optimization.

Studio Results:

- Quality Improvement: 34% improvement in client satisfaction scores

- Efficiency Gains: 67% faster project completion through optimized settings

- Cost Reduction: 45% reduction in computational costs through optimization

- Consistency Achievement: 91% consistency in quality across all projects

- Client Retention: 28% improvement in client retention through reliable quality

Individual Creator Transformation

Solo content creator using systematic testing to optimize personal workflows and output quality.

Creator Benefits:

- Quality Leap: Dramatic improvement from amateur to professional-grade results

- Time Savings: 78% reduction in experimentation time through systematic testing

- Creative Freedom: Confidence in settings enables focus on creative concepts

- Audience Growth: 145% follower increase due to consistent quality improvement

- Revenue Impact: 230% income increase through premium quality content

Conclusion: ComfyUI A/B Testing for Optimal Results

ComfyUI A/B testing through workflow duplication transforms random experimentation into systematic optimization, enabling data-driven decisions that dramatically improve generation quality and efficiency. Professional creators who master ComfyUI A/B testing workflows gain significant competitive advantages through optimized settings and reliable results.

Technical Mastery Benefits:

- Systematic Approach: Scientific testing methodology replaces random experimentation

- Direct Comparison: Side-by-side results enable objective quality assessment

- Resource Efficiency: 800% improvement in testing efficiency through workflow duplication

- Professional Quality: Optimized settings deliver consistent, commercial-grade results

Practical Implementation:

- Simple Start: Copy-paste workflow duplication accessible to all skill levels

- Advanced Scaling: Comprehensive testing workflows for professional applications

- Memory Optimization: Smart resource management enables complex testing on standard hardware

- Time Efficiency: 10+ tests in the time previously required for single comparisons

Business Impact:

- Quality Improvement: 34% improvement in client satisfaction through optimized settings

- Cost Reduction: 45% reduction in computational costs through systematic optimization

- Consistency Achievement: 91% consistency in professional-grade results

- Competitive Advantage: Superior quality through scientific optimization approaches

Strategic Value:

- Decision Making: Data-driven optimization replaces guesswork and intuition

- Professional Standards: Systematic testing enables commercial-grade quality consistency

- Workflow Evolution: Continuous improvement through documented optimization processes

- Knowledge Building: Accumulated testing data becomes valuable optimization asset

The difference between amateur and professional AI image generation lies in systematic optimization through comprehensive testing. Workflow duplication provides the technical foundation for scientific approaches to quality improvement that deliver measurable results and competitive advantages.

Professional creators who implement systematic testing workflows gain the reliability and optimization capabilities necessary for commercial success while building valuable knowledge assets that improve over time. The copy-paste duplication method provides immediate access to professional-grade testing capabilities that transform creative workflows from experimental to systematic.

Systematic testing is not just about finding better settings - it's about building professional workflows that deliver consistent, optimized results while providing the documentation and knowledge necessary for continuous improvement and competitive positioning in AI-driven creative markets.

Frequently Asked Questions

Does testing multiple workflows simultaneously slow down generation compared to sequential testing?

ComfyUI generates duplicated workflows 2x faster than sequential testing for batch processing. Testing 10 configurations simultaneously completes in roughly the same time as 2-3 sequential runs because ComfyUI optimizes parallel processing. Shared model loading eliminates redundant model load time that sequential approaches repeat for each test. The efficiency gain compounds with more test variations.

How much VRAM do I need to test 10 models simultaneously?

With shared model loading optimization, testing 10+ models requires only 8GB VRAM compared to 45GB+ without sharing. Load checkpoint models once and share across all test branches to minimize memory usage. Complete sharing reduces VRAM from 45GB to 8GB with 800% efficiency improvement. Budget 10-12GB for comfortable testing including OS overhead and browser memory.

What's the best way to organize and label A/B test results for easy comparison?

Use automatic labeling nodes that embed model names, sampler types, and parameter values directly into output images. Create grid layouts showing all variations side-by-side for instant visual comparison. Implement systematic file naming like modelname_sampler_cfg_seed.png for easy sorting and retrieval. Document test parameters in a spreadsheet with image paths for data-driven analysis and future reference.

Can I test different resolutions in the same comparison workflow?

Yes, though resolutions don't parallelize well due to different latent space dimensions. Better approach: test all parameter variations at consistent resolution, then separately test resolution scaling on optimal parameter combination. This isolates resolution impact from other variables while keeping workflows manageable. Testing mixed resolutions in one workflow creates comparison confusion.

How do I determine statistical significance when comparing test results?

Generate 3-5 variations with different seeds for each configuration being tested to account for random variation. Calculate average quality scores and standard deviation across seeds. Configurations with non-overlapping confidence intervals (mean ± 2 standard deviations) show statistically significant differences. For professional work, blind testing with multiple raters provides strongest confidence in quality assessment.

What parameters should I test first for maximum quality improvement?

Test samplers first - they have largest impact on quality and speed tradeoffs with only 10-15 options to evaluate. Next test schedulers (6-8 variants) in combination with top samplers. Then explore CFG scale range for your specific prompts. Finally optimize step counts to balance quality and speed. This systematic approach identifies 80% of optimization potential in first 50 tests.

Can I use comparison workflows to test custom nodes before installing them?

Not directly - nodes must be installed before workflow usage. Better approach: create duplicate ComfyUI installations specifically for testing new custom nodes, allowing safe evaluation without risking production workflows. Test node functionality, performance impact, and stability before adding to main installation. Maintain separate testing and production ComfyUI environments for risk management.

How often should I re-test workflows as ComfyUI and models update?

Re-test workflows after major ComfyUI updates (typically quarterly) as sampler implementations and processing may change. Test when switching to new model versions or checkpoint merges. Quarterly testing cadence catches most significant changes while avoiding testing overhead. Document test dates and ComfyUI versions for tracking performance changes over time.

What's the most efficient way to share A/B test workflows with team members?

Export workflow as JSON with embedded annotations explaining test configuration and results. Share through version control (Git) for change tracking and collaboration. Include example outputs and parameter documentation. For complex tests, create standardized templates team members clone and modify rather than building from scratch. Maintain team workflow library with proven test configurations.

How do I handle tests where results are ambiguous or equally good?

Document tied results with notes about subtle differences and use case contexts. Create secondary tests examining specific quality aspects (face detail, background coherence, color saturation) to break ties. Consider that "equally good" often means either choice works, reducing overthinking need. Default to faster option when quality matches, or most compatible with other workflow components.

Ready to Create Your AI Influencer?

Join 115 students mastering ComfyUI and AI influencer marketing in our complete 51-lesson course.

Related Articles

10 Most Common ComfyUI Beginner Mistakes and How to Fix Them in 2025

Avoid the top 10 ComfyUI beginner pitfalls that frustrate new users. Complete troubleshooting guide with solutions for VRAM errors, model loading...

25 ComfyUI Tips and Tricks That Pro Users Don't Want You to Know in 2025

Discover 25 advanced ComfyUI tips, workflow optimization techniques, and pro-level tricks that expert users leverage.

360 Anime Spin with Anisora v3.2: Complete Character Rotation Guide ComfyUI 2025

Master 360-degree anime character rotation with Anisora v3.2 in ComfyUI. Learn camera orbit workflows, multi-view consistency, and professional...

.png)