LongCat-Video: Meituan's AI Video Generation Guide

Complete guide to LongCat-Video from Meituan for long-form AI video generation. Setup, configuration, and optimization for extended sequences.

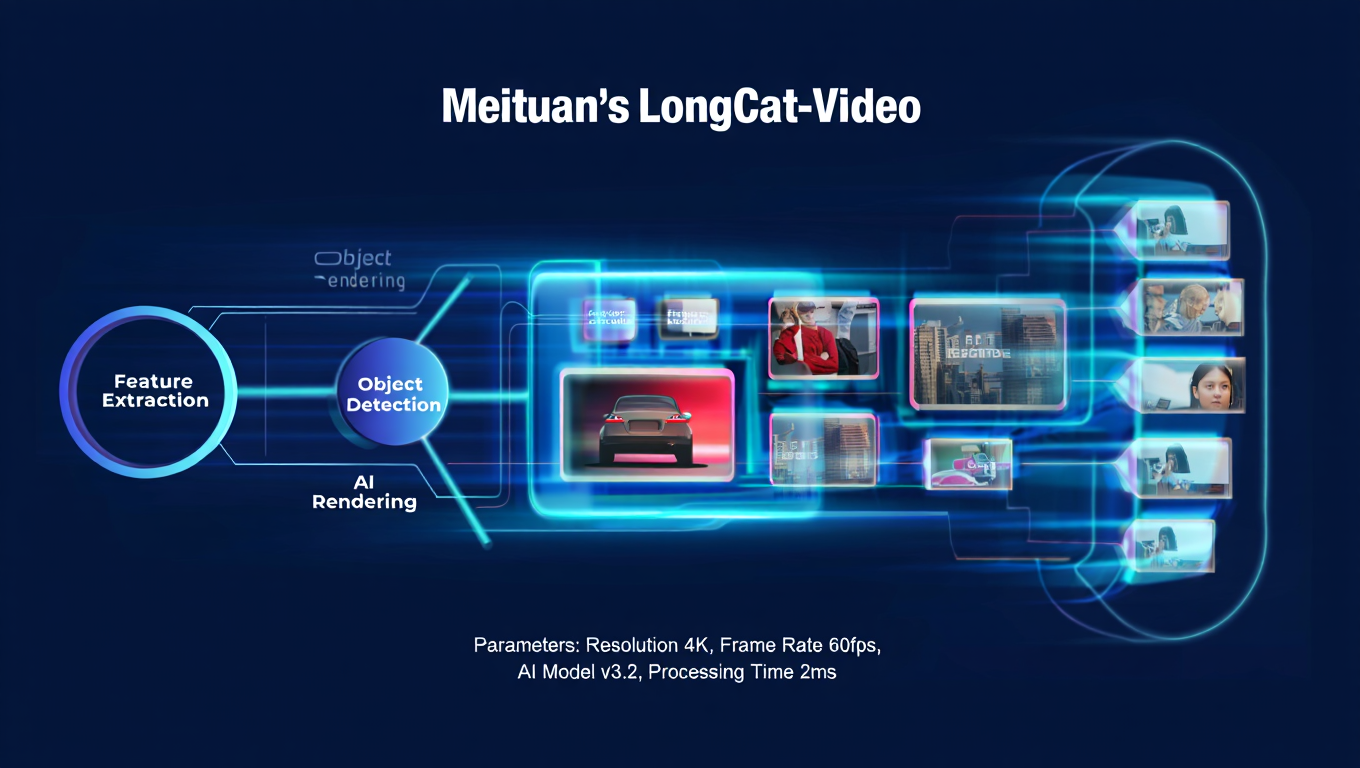

Quick Answer: LongCat-Video is Meituan's 13.6 billion parameter AI video generation model, released in 2025. It handles text-to-video, image-to-video, and video continuation in a single unified architecture. It uses Block Sparse Attention and coarse-to-fine generation to produce 720p videos at 30fps, with quality Meituan reports as competitive with Veo3 and PixVerse-V5. It is released under the MIT License and runs on single- or multi-GPU setups. It is one of the first open-source models built specifically for long-form video generation with minimal quality degradation.

- What it is: 13.6B parameter unified video generation model supporting three modes

- Key innovation: Block Sparse Attention enables long-form video without quality loss

- Requirements: Python 3.10, PyTorch 2.6, FlashAttention-2, 24GB VRAM minimum

- Output quality: 720p at 30fps, with performance competitive with commercial models

- Best for: Developers and creators who need flexible, open-source video generation under a license that permits commercial use

You watch the explosion of AI video generation tools in 2025 and notice something missing. Most models excel at one task, either text-to-video or image-to-video, but rarely both. And when you try to extend videos beyond a few seconds, quality degrades rapidly. What if a single model could handle all three tasks with consistent quality?

That's the problem Meituan's research team set out to solve with LongCat-Video. One of the newest major open-source video generation models, it combines text-to-video, image-to-video, and video continuation in a unified 13.6 billion parameter architecture. Unlike a collection of separate specialized models, LongCat-Video aims for consistent quality across all generation modes while supporting extended video creation.

:::tip[Key Takeaways]

- Follow the step-by-step setup process for best results with LongCat-Video

- Start with the basics before attempting advanced techniques

- Common mistakes are easy to avoid with proper setup

- Practice improves results significantly over time

:::

What You'll Learn

- What makes LongCat-Video unique compared to Wan 2.2, Mochi, and HunyuanVideo

- How Block Sparse Attention and coarse-to-fine generation work

- Step-by-step installation and environment setup process

- Using all three generation modes with practical examples

- Performance benchmarks and quality comparisons with commercial models

- Commercial applications and MIT License implications

What Is LongCat-Video and Why Does It Matter?

LongCat-Video represents a significant advance in open-source AI video generation. Developed by the research team at Meituan, one of China's largest technology companies, the model was designed to address fundamental limitations in existing video generation approaches.

Many existing video generation models use separate architectures for different tasks. You need one model for text-to-video and another for image-to-video, and you often get no proper video continuation capability at all. This fragmentation complicates workflows and produces inconsistent quality across generation modes.

The Unified Architecture Approach

LongCat-Video takes a different approach by implementing a unified architecture that handles all three core video generation tasks through a single model framework.

The model supports three distinct generation modes within the same 13.6 billion parameter architecture. Text-to-video generation creates complete video sequences from text prompts alone. Image-to-video animation transforms static images into dynamic video content. Video continuation extends existing videos while maintaining temporal consistency and quality.

This unified approach delivers practical benefits. You work with a single model deployment instead of managing multiple specialized models. Quality remains consistent across all generation modes because they share the same underlying architecture. Workflow complexity decreases substantially when one model handles all video generation tasks.

Technical Foundation and Architecture

LongCat-Video builds on advanced diffusion model techniques with several key innovations that enable its capabilities.

The model uses a transformer-based architecture specifically optimized for video generation. At 13.6 billion parameters, it sits in the middle range between lightweight models and massive commercial systems. This parameter count provides sufficient capacity for high-quality generation while remaining accessible on consumer and prosumer hardware.

According to research from Meituan published on Hugging Face, the training methodology emphasizes temporal consistency and motion coherence. The model was trained on diverse video datasets encompassing multiple content types, motion patterns, and visual styles.

The architecture incorporates two major technical innovations that distinguish it from alternatives. Block Sparse Attention enables efficient processing of long video sequences. The coarse-to-fine generation strategy builds videos hierarchically, from rough to detailed.

Block Sparse Attention Explained

Block Sparse Attention represents one of LongCat-Video's most significant technical contributions. Traditional attention mechanisms in video diffusion models face quadratic complexity growth as video length increases: doubling the number of frames roughly quadruples attention compute and memory. Longer videos therefore become expensive very quickly.

Block Sparse Attention solves this by restricting attention computation to relevant spatial-temporal blocks rather than computing full global attention. The model divides video frames into blocks and computes attention only within and between adjacent blocks.

This architectural choice delivers multiple benefits. Memory usage grows linearly rather than quadratically with video length. Compute per frame stays roughly constant as videos get longer, so total generation time grows in proportion to length. Quality degradation typically seen in long video generation is substantially reduced.
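A toy count makes the scaling difference concrete. The sketch below assumes the simple pattern described above, where each block attends to itself and its immediate neighbors. It illustrates the idea only and is not Meituan's Block Sparse Attention kernel, whose block-selection rule may differ.

```python
def full_attention_pairs(num_blocks: int) -> int:
    # Dense attention: every query block attends to every key block.
    return num_blocks * num_blocks


def local_block_mask(num_blocks: int, radius: int = 1) -> list[list[bool]]:
    # mask[q][k] is True when query block q attends to key block k.
    # Each block sees itself plus `radius` neighbors on either side.
    return [[abs(q - k) <= radius for k in range(num_blocks)] for q in range(num_blocks)]


def sparse_attention_pairs(num_blocks: int, radius: int = 1) -> int:
    # Count the block pairs the sparse mask actually computes.
    return sum(sum(row) for row in local_block_mask(num_blocks, radius))


if __name__ == "__main__":
    for n in (8, 16, 32, 64):
        print(f"{n:>3} blocks: dense={full_attention_pairs(n):>5}  sparse={sparse_attention_pairs(n):>4}")
```

With radius 1 the sparse count is 3n - 2. Doubling the video therefore roughly doubles the work, while the dense count quadruples: 22 vs 64 pairs at 8 blocks, and 190 vs 4096 at 64 blocks.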

The practical implication for users is straightforward. You can generate and extend videos to meaningful lengths without the quality collapse that plagues other models beyond a few seconds.

Coarse-to-Fine Generation Strategy

LongCat-Video employs a hierarchical generation approach that constructs videos in stages from rough to refined.

The coarse stage establishes overall composition, major motion patterns, and general visual structure. This happens at reduced resolution and detail levels, focusing on getting the fundamentals correct. The fine stage progressively adds detail, refines motion, enhances textures, and produces the final high-quality output.

This strategy mirrors how skilled animators work, establishing rough keyframes before adding detail. The approach improves both efficiency and quality by ensuring structural coherence before investing computation in fine details.

For creators, this means the model produces videos with better overall coherence and fewer temporal artifacts compared to single-stage generation approaches.
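The control flow of a two-stage pipeline can be sketched as follows. Both stages are hypothetical stand-ins. In LongCat-Video each stage would be a diffusion pass, and the upsampling may happen in latent space rather than on pixels. The sketch only shows the ordering: structure first at low resolution, detail second.

```python
from typing import Callable

Frame = list[list[float]]


def upsample_nearest(frame: Frame, factor: int) -> Frame:
    # Repeat each pixel `factor` times across and each row `factor` times down.
    return [[px for px in row for _ in range(factor)] for row in frame for _ in range(factor)]


def coarse_to_fine(
    num_frames: int,
    coarse_hw: tuple[int, int],
    factor: int,
    coarse_stage: Callable[[int, int, int], Frame],
    fine_stage: Callable[[int, Frame], Frame],
) -> list[Frame]:
    h, w = coarse_hw
    # Stage 1: settle composition and motion at low resolution,
    # where each frame has factor**2 times fewer pixels to process.
    coarse = [coarse_stage(t, h, w) for t in range(num_frames)]
    # Stage 2: upsample every coarse frame and hand it to the detail model.
    return [fine_stage(t, upsample_nearest(frame, factor)) for t, frame in enumerate(coarse)]


# Hypothetical stand-ins for the two model passes, just to exercise the control flow.
def toy_coarse(t: int, h: int, w: int) -> Frame:
    return [[float(t)] * w for _ in range(h)]


def toy_fine(t: int, frame: Frame) -> Frame:
    return [[px + 0.5 for px in row] for row in frame]


video = coarse_to_fine(4, (2, 3), 2, toy_coarse, toy_fine)
```

Here `video` holds 4 frames of 4x6 values. Each value comes from the coarse pass and is then adjusted by the fine pass.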

How Does LongCat-Video Compare to Other Video Generation Models?

Understanding where LongCat-Video fits in the competitive space helps determine when to use it versus alternatives like Wan 2.2, Mochi, or HunyuanVideo.

LongCat-Video vs Commercial Models

Meituan benchmarked LongCat-Video against leading commercial video generation services to validate its competitive performance.

LongCat-Video vs Veo3: Google's Veo3 represents state-of-the-art commercial video generation. Meituan's reported evaluations show LongCat-Video achieving competitive quality in motion coherence and temporal consistency. Veo3 maintains advantages in prompt understanding for complex descriptions and in overall visual polish in certain scenarios.

However, LongCat-Video delivers advantages that matter for many use cases. Open-source availability under the MIT License means no usage fees and only minimal license conditions. Local deployment provides full control over processing and data privacy. Customization and fine-tuning capabilities enable specialization for specific use cases.

For professionals considering platforms like Apatero.com, LongCat-Video offers similar capabilities with the option for complete local control.

LongCat-Video vs PixVerse-V5: PixVerse-V5 is a prominent commercial video generation service popular in the creative community. Performance comparisons show LongCat-Video matching or exceeding PixVerse-V5 in several key areas.

LongCat-Video demonstrates superior temporal consistency across longer sequences and better handling of complex motion patterns. PixVerse-V5 shows strengths in stylized content and artistic video generation.

The cost difference proves significant for high-volume users. Commercial services charge per generation or subscription fees. LongCat-Video requires only the hardware investment and electricity costs for local generation.

LongCat-Video vs Open-Source Alternatives: Comparing LongCat-Video to established open-source models reveals distinct positioning in the ecosystem.

LongCat-Video vs Wan 2.2: Wan 2.2 currently dominates open-source video generation with excellent quality and broad community adoption. Our comprehensive Wan 2.2 comparison guide covers detailed performance analysis.

LongCat-Video brings unique advantages with its unified architecture supporting all three generation modes in a single model. Video continuation capabilities exceed Wan 2.2's native options. Block Sparse Attention enables more efficient long-form generation.

Wan 2.2 maintains advantages in established ComfyUI integration, extensive community resources and workflows, and more aggressive quantization options for low-VRAM systems. For comprehensive Wan 2.2 workflows, see our complete installation guide.

LongCat-Video vs Mochi: Mochi focuses specifically on photorealistic motion at 30fps with excellent results for realistic content. LongCat-Video offers broader versatility across content types and generation modes. Mochi generates at 480p native resolution, while LongCat-Video produces 720p output directly.

The unified architecture in LongCat-Video provides workflow simplification that Mochi's specialized approach cannot match. However, Mochi remains the better choice for specific photorealistic scenarios where its training optimization shines.

LongCat-Video vs HunyuanVideo: HunyuanVideo brings massive scale with 13 billion parameters and exceptional quality for cinematic multi-person scenes. LongCat-Video matches the parameter count at 13.6 billion but focuses on unified architecture and long-form capability.

HunyuanVideo requires significantly more VRAM and computational resources for comparable generation. LongCat-Video's Block Sparse Attention enables more efficient processing. Both models target professional-grade output, but LongCat-Video emphasizes practical deployment efficiency.

Performance Benchmarks and Specifications

LongCat-Video's technical specifications reveal its positioning in the video generation space.

Output Specifications:

| Specification | Value | Competitive Position |
|---|---|---|
| Resolution | 720p | Standard for open-source models |
| Frame rate | 30fps | Matches commercial alternatives |
| Typical duration | Variable, 5-10s+ | Superior for extended generation |
| Parameter count | 13.6B | Mid-range scale |
| Architecture | Unified diffusion transformer | Unique approach |

Quality Metrics: Motion coherence scores competitively with commercial models in standardized benchmarks. Temporal consistency remains high even in extended generations where other models degrade. Detail retention maintains quality throughout the generation process without significant blur or artifact accumulation.

Generation Speed: On a single RTX 4090, generation takes approximately 20-30 seconds of compute per second of output video. Multi-GPU deployment reduces generation time roughly in proportion to GPU count, minus communication overhead. Initial model loading takes 30-60 seconds depending on hardware configuration.

These benchmarks position LongCat-Video as a practical production tool rather than just a research demonstration.

What Are the Three Generation Modes and How Do They Work?

LongCat-Video's unified architecture supports three distinct generation modes, each serving different creative and production needs.

Text-to-Video Generation

Text-to-video mode creates complete video sequences from text descriptions without requiring any visual input.

How It Works: You provide a text prompt describing the desired video content, including scene composition, actions, motion, and visual style. LongCat-Video processes the text through language encoders to understand semantic content and visual requirements. The diffusion model generates video frames progressively from noise, guided by the text embedding.

The coarse-to-fine generation strategy first establishes overall structure and major motion patterns, then adds progressive detail and refinement in subsequent passes.

Effective Prompting Strategies: Successful text-to-video generation depends heavily on prompt quality. Effective prompts include specific descriptions of main subjects and their actions, clear indication of camera movement or perspective, lighting and environmental details, and motion characteristics indicating speed and style.

Example Strong Prompts:

- "A golden retriever running through a meadow at sunset, camera tracking from the side, warm golden hour lighting, grass swaying in gentle breeze"

- "Time-lapse of clouds moving across a mountain landscape, wide angle view, dramatic lighting changes from dawn to dusk"

- "Close-up of hands carefully arranging flowers in a vase, soft natural window light, shallow depth of field, gentle deliberate movements"
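If you write many prompts, a tiny helper keeps the ingredients listed above in a consistent order. The function name and the ordering are our own convention, not something LongCat-Video requires.

```python
def build_prompt(subject_action: str, camera: str = "", lighting: str = "", motion: str = "") -> str:
    # Subject and action first, then camera, lighting/environment, and motion character.
    # Empty or whitespace-only parts are skipped so optional fields don't leave stray commas.
    parts = [subject_action, camera, lighting, motion]
    return ", ".join(p.strip() for p in parts if p.strip())


prompt = build_prompt(
    "A golden retriever running through a meadow at sunset",
    camera="camera tracking from the side",
    lighting="warm golden hour lighting",
    motion="grass swaying in gentle breeze",
)
```

This reproduces the first example prompt above exactly.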

Limitations: Text-to-video inherently involves more interpretation and variability than image-to-video. Complex scenes with multiple subjects and interactions prove more challenging. Highly specific visual details may not generate exactly as described without iterative refinement.

For users wanting simplified text-to-video without local setup complexity, platforms like Apatero.com provide simplified interfaces optimized for prompt-based generation.

Image-to-Video Animation

Image-to-video mode transforms static images into animated video sequences, bringing still content to life with motion and dynamics.

How It Works: You provide a source image as the starting frame or reference for video generation. LongCat-Video analyzes the image content, composition, and visual elements. The model generates subsequent frames that animate the scene while maintaining consistency with the source image.

You can optionally provide text prompts to guide the type of motion and animation applied. The model balances maintaining visual consistency with the source image while introducing natural, coherent motion.

Practical Applications: Image-to-video proves particularly valuable for specific use cases. You can animate AI-generated artwork that you created with Stable Diffusion, FLUX, or other image models. Bring photographs to life with subtle animation like camera movement and environmental motion. Create product demonstrations from static product photography with rotation and dynamic presentation.

Character animation benefits significantly from image-to-video, especially when combined with specialized techniques from our Wan 2.2 Animate guide.

Quality Considerations: Image-to-video typically produces more consistent and controllable results than pure text-to-video. The source image provides strong visual grounding that constrains the generation process. Quality depends heavily on source image characteristics, including resolution, composition, and clarity.

Images with clear subjects and unambiguous spatial relationships generate better results than busy, complex compositions.
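Because source resolution matters, it can help to resize images yourself before uploading. The sketch below fits an image inside 1280x720 without upscaling and rounds both sides down to a multiple of 16. The 16-pixel multiple is an assumption, typical of latent video models that combine 8x VAE downsampling with 2x2 patches. Check the repository for the dimensions LongCat-Video actually accepts.

```python
def fit_source_image(width: int, height: int, max_w: int = 1280, max_h: int = 720,
                     multiple: int = 16) -> tuple[int, int]:
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    # Integer arithmetic avoids float rounding at exact fits such as 1920x1080 -> 1280x720.
    if width <= max_w and height <= max_h:
        new_w, new_h = width, height              # already fits: never upscale
    elif width * max_h >= height * max_w:         # relatively wider than the box: width limits
        new_w, new_h = max_w, height * max_w // width
    else:                                          # relatively taller than the box: height limits
        new_w, new_h = width * max_h // height, max_h
    # Round down so both sides divide evenly into the assumed 16-pixel patches.
    new_w = max(multiple, new_w // multiple * multiple)
    new_h = max(multiple, new_h // multiple * multiple)
    return new_w, new_h
```

For example, a 4000x3000 photo maps to 960x720 and a 1080x1920 portrait maps to 400x720. Resize to those dimensions with your image library of choice before uploading.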

Video Continuation

Video continuation extends existing videos by generating additional frames that maintain temporal and visual consistency with the source material.

How It Works: You provide an existing video sequence as input. LongCat-Video analyzes the motion patterns, visual consistency, and temporal dynamics in the source video. The model generates additional frames that continue the established patterns and maintain coherence.

Block Sparse Attention plays a crucial role here by efficiently processing the source video context without excessive memory requirements. The continuation maintains quality without the degradation typically seen when extending videos beyond training length.
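Conceptually, continuation is a loop: condition on recent frames, generate a chunk, append, repeat. The sketch below uses a hypothetical `generate_chunk` callable in place of the model and a fixed sliding window of context. Whether LongCat-Video conditions on a fixed window or on the whole clip is not specified here. Unlike stringing together independent generations, every chunk sees the frames that precede it.

```python
from typing import Callable, Sequence


def extend_video(
    frames: Sequence,
    target_len: int,
    generate_chunk: Callable[[list], list],
    context_len: int = 16,
) -> list:
    """Grow `frames` to `target_len` by repeatedly conditioning on the most recent frames."""
    if context_len < 1:
        raise ValueError("context_len must be at least 1")
    video = list(frames)
    while len(video) < target_len:
        # Only the latest `context_len` frames condition the next chunk, so the
        # per-chunk cost stays bounded no matter how long the video grows.
        context = video[-context_len:]
        new_frames = generate_chunk(context)
        if not new_frames:
            raise RuntimeError("generate_chunk returned no frames")
        video.extend(new_frames)
    # The last chunk may overshoot; trim to the requested length.
    return video[:target_len]
```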

Use Cases: Video continuation solves practical production challenges. Extend short generations from other models to create longer sequences. Create smooth loops by generating transition frames between end and beginning. Maintain narrative continuity when you need specific duration requirements.

Commercial applications benefit substantially from this capability. You can create advertisement content of specific lengths required by platforms, extend documentary or educational content to match script timing, and develop social media content optimized for platform preferences.

Temporal Consistency: LongCat-Video's architecture specifically optimizes for maintaining consistency during continuation. Motion patterns remain coherent without sudden changes or discontinuities. Visual style stays consistent rather than drifting over extended duration. Subject identity preserves features and characteristics throughout the extension.

This represents a significant advantage over naive approaches that simply string together multiple independent generations.

How Do I Install and Set Up LongCat-Video?

Getting LongCat-Video running requires specific environment configuration and dependencies. The installation process involves several critical steps.

System Requirements and Prerequisites

Minimum Hardware Specifications:

- NVIDIA GPU with 24GB VRAM (RTX 3090, RTX 4090, or better)

- 32GB system RAM recommended for smooth operation

- 100GB free storage space for models and outputs

- Multi-GPU setup optional but beneficial for production use

Required Software Environment:

- Python 3.10 (specific version requirement for compatibility)

- PyTorch 2.6 with CUDA support for GPU acceleration

- FlashAttention-2 for optimized attention computation

- Git for repository management and updates

Operating System: Linux-based systems receive primary support and testing. Windows users can run LongCat-Video through WSL2. macOS is not a practical target. FlashAttention-2 targets NVIDIA CUDA GPUs (with separate AMD ROCm builds) and does not run on Apple Silicon's Metal backend.

Step-by-Step Installation Process

Step 1: Environment Preparation

Create a dedicated Python environment to avoid dependency conflicts with other projects. Using conda or venv, set up Python 3.10 specifically. Activate the environment before installing dependencies.

Install PyTorch 2.6 with CUDA support matching your installed CUDA version. Verify GPU detection by running torch.cuda.is_available() in Python, and use torch.cuda.device_count() to confirm that PyTorch sees all the GPUs in your system.

Step 2: Repository and Dependencies

Clone the official LongCat-Video GitHub repository to get the model code and utilities. Navigate into the cloned directory and check the requirements file for dependencies.

Install FlashAttention-2 as a separate step since it requires compilation. This process takes several minutes depending on your system. Verify successful installation by importing flash_attn in Python without errors.

Install remaining dependencies from the requirements file using pip. Some packages may require compilation, so expect this step to take time. Address any compilation errors by ensuring development tools are installed.
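A short script can confirm Steps 1 and 2 in one go. It only reports what it finds. The 3.10 and 2.6 targets come from the requirements listed earlier, and the check is our own rather than a script shipped with the LongCat-Video repository.

```python
import importlib.util
import sys


def check_environment() -> dict:
    report = {
        "python": "%d.%d" % sys.version_info[:2],
        "python_ok": sys.version_info[:2] == (3, 10),
        "torch": None,
        "torch_ok": False,
        "cuda_available": False,
        "gpu_count": 0,
        "flash_attn_ok": False,
    }
    if importlib.util.find_spec("torch") is not None:
        import torch

        report["torch"] = str(torch.__version__)
        report["torch_ok"] = report["torch"].startswith("2.6")
        report["cuda_available"] = torch.cuda.is_available()
        report["gpu_count"] = torch.cuda.device_count()
    try:
        # Importing (not just locating) the package also catches compiled-extension
        # mismatches, which surface as ImportError or OSError.
        import flash_attn  # noqa: F401

        report["flash_attn_ok"] = True
    except (ImportError, OSError):
        pass
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```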

Step 3: Model Download

Download the LongCat-Video model weights from Hugging Face where Meituan hosts the official release. The model checkpoint is substantial, so ensure adequate bandwidth and storage.

Place the downloaded checkpoint files in the directory the repository's scripts expect. Verify file integrity by checking that file sizes match the expected values listed in the repository documentation or on the Hugging Face model page.
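For Step 3, the `huggingface_hub` library's `snapshot_download` fetches a whole model repository, and a small helper can compare file sizes afterwards. The repo id `meituan-longcat/LongCat-Video` is our assumption and should be checked against the official model card. You supply the expected sizes yourself, from the file listing on Hugging Face.

```python
import hashlib
from pathlib import Path


def download_weights(local_dir: str, repo_id: str = "meituan-longcat/LongCat-Video") -> str:
    # Assumed repo id; confirm it on the official model card before running.
    from huggingface_hub import snapshot_download  # lazy import: only needed for downloading

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    # Stream the file in 1 MiB chunks so multi-GB checkpoints never load fully into RAM.
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_size_mismatches(model_dir: str, expected_sizes: dict[str, int]) -> list[str]:
    """Return relative paths that are missing or whose byte size differs from expected."""
    root = Path(model_dir)
    bad = []
    for rel_path, size in expected_sizes.items():
        path = root / rel_path
        if not path.is_file() or path.stat().st_size != size:
            bad.append(rel_path)
    return bad
```

Size checks are fast and catch truncated downloads. `sha256_of` is slower but can be compared against the SHA-256 values Hugging Face lists for large (LFS) files.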

Step 4: Configuration

Review and adjust configuration files for your specific hardware setup. Set GPU device IDs if using specific GPUs in a multi-GPU system. Configure memory settings based on your VRAM availability and intended batch sizes.


Adjust generation parameters including default resolution, frame count, and quality settings. These configuration choices affect both generation quality and resource requirements.
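Two of these settings are easy to script. `CUDA_VISIBLE_DEVICES` is a standard CUDA environment variable for restricting which GPUs a process sees. The frame-count helper is our own illustration. The model may accept only certain frame counts, so check the repository's defaults before relying on it.

```python
import os


def select_gpus(device_ids: list[int]) -> None:
    # Set this before torch initializes CUDA; PyTorch then sees only these
    # GPUs, renumbered as cuda:0, cuda:1, ...
    os.environ["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in device_ids)


def frames_for(duration_s: float, fps: int = 30) -> int:
    # Frames to request for a clip of the given length at the given frame rate.
    return round(duration_s * fps)
```

For example, `select_gpus([0, 2])` followed by `frames_for(5)` targets GPUs 0 and 2 and requests 150 frames for a 5-second clip at 30fps.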

Step 5: Verification

Run the test script provided in the repository to verify installation success. The test generates a short sample video using default parameters. Successful completion confirms all dependencies and model weights are correctly configured.

Check the output video for proper format, expected duration, and reasonable visual quality. Any errors or warnings during this test indicate configuration issues to address before production use.
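For Step 5, a quick numeric check catches wrong frame rates or truncated outputs. `probe_video` uses OpenCV's `VideoCapture` and requires the `opencv-python` package. The frame count it reports comes from container metadata and can be slightly off for some codecs, hence the tolerance.

```python
def duration_matches(frame_count: int, fps: float, expected_s: float,
                     tolerance_s: float = 0.1) -> bool:
    # Duration in seconds is frames divided by frames per second.
    if fps <= 0:
        return False
    return abs(frame_count / fps - expected_s) <= tolerance_s


def probe_video(path: str) -> tuple[int, float]:
    # Imported lazily so duration_matches works without OpenCV installed.
    import cv2

    cap = cv2.VideoCapture(path)
    if not cap.isOpened():
        raise IOError(f"could not open {path}")
    try:
        frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        fps = cap.get(cv2.CAP_PROP_FPS)
    finally:
        cap.release()
    return frames, fps
```

Typical use: `frames, fps = probe_video("test_output.mp4")`, then `duration_matches(frames, fps, expected_s=5.0)`. The file name here is only a placeholder.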

Single-GPU vs Multi-GPU Setup

LongCat-Video supports both single-GPU and multi-GPU deployment with different optimization strategies.

Single-GPU Operation: Configure for sequential processing with careful memory management. Gradient checkpointing only saves memory when gradients are computed, for example during fine-tuning. For generation, run the model with gradient tracking disabled so no activations are kept for a backward pass. Set batch size to the maximum that fits in available VRAM, typically 1 for 24GB cards.

Multi-GPU Configuration: When one card cannot hold or process the model efficiently, split its computation across GPUs. Tensor parallelism splits individual layers; pipeline parallelism assigns whole layers to different GPUs. For throughput, split batches across GPUs (data parallelism) to raise output roughly in proportion to GPU count. Ensure proper NCCL configuration for optimal inter-GPU communication.

Multi-GPU setups substantially reduce generation time for production workflows. Two RTX 4090s generate approximately twice as fast as a single card. Four-GPU configurations cut time further. However, at roughly 20-30 seconds of compute per second of video on one card, even ideal four-way scaling still needs about 5-7.5 seconds per second of output, well short of real time.
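For the data-parallel case, where each GPU runs its own generations, work can be distributed round-robin. This is a sketch under the assumption that each worker is launched with `torchrun`, which sets the `RANK` and `WORLD_SIZE` environment variables. Every GPU holds a full model copy, so this raises throughput but does not speed up a single video.

```python
import os


def shard_round_robin(jobs: list, num_gpus: int) -> list[list]:
    # GPU i gets jobs i, i + num_gpus, i + 2 * num_gpus, ...
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return [jobs[i::num_gpus] for i in range(num_gpus)]


def jobs_for_this_process(jobs: list) -> list:
    # torchrun sets RANK and WORLD_SIZE for each worker; default to a single process.
    rank = int(os.environ.get("RANK", "0"))
    world_size = int(os.environ.get("WORLD_SIZE", "1"))
    return shard_round_robin(jobs, world_size)[rank]
```

Each worker calls `jobs_for_this_process(prompts)` on the same full prompt list and handles only its own share.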

How Do I Use the Streamlit Interface and Generate Videos?

LongCat-Video includes a Streamlit-based interface that simplifies the generation process through a web-based GUI rather than requiring direct code interaction.

Launching the Interface

Start the Streamlit server by running the provided interface script from the repository. The server launches on localhost with a default port, typically 8501. Open your web browser and navigate to the provided local address to access the interface.

The interface loads the model on first launch, which takes 30-60 seconds depending on hardware. Subsequent generations within the same session skip this initialization step.
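If you prefer to launch the interface from Python, for example to choose a different port, the sketch below builds the command. `python -m streamlit run` and the `--server.port` and `--server.headless` options are standard Streamlit. The script path you pass is whatever interface script the repository ships; we don't assume its name.

```python
import subprocess
import sys


def streamlit_command(script: str, port: int = 8501, headless: bool = False) -> list[str]:
    # Run Streamlit from the current interpreter so it uses this environment's packages.
    return [
        sys.executable, "-m", "streamlit", "run", script,
        "--server.port", str(port),
        # headless=True skips opening a browser tab (useful on remote machines).
        "--server.headless", "true" if headless else "false",
    ]


def launch(script: str, port: int = 8501) -> subprocess.Popen:
    # Starts the server in the background; call .terminate() on the result to stop it.
    return subprocess.Popen(streamlit_command(script, port))
```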

Text-to-Video Workflow

Navigate to the text-to-video tab in the interface. Enter your prompt in the text field, following effective prompting strategies discussed earlier. Adjust generation parameters including number of frames to generate, guidance scale for prompt adherence strength, and random seed for reproducibility or variation.

Click generate to start the video creation process. Progress updates display during generation, showing the current stage and estimated time remaining. Once complete, the video displays in the interface with download options.

Review the generated video and adjust parameters if needed for refinement. Higher guidance scales increase prompt adherence but may reduce natural motion. More frames extend duration but increase generation time proportionally.
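To see what the guidance scale does, assume LongCat-Video follows the standard classifier-free guidance convention used by most diffusion models; this is not confirmed here. Under that convention, each denoising step mixes an unconditional prediction with a prompt-conditioned one. The sketch uses plain lists in place of tensors.

```python
def apply_cfg(uncond: list[float], cond: list[float], guidance_scale: float) -> list[float]:
    # Classifier-free guidance: start from the unconditional prediction and move
    # along (conditional - unconditional), scaled by the guidance value.
    # Both predictions are computed every step whatever the scale, which is why
    # the guidance value changes the result but not the cost.
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same shape")
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]
```

A scale of 1 reproduces the conditional prediction. Larger scales push further in the prompt's direction, which strengthens adherence but can stiffen motion when overdone.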

Image-to-Video Workflow

Switch to the image-to-video tab within the interface. Upload your source image using the file picker, supporting common formats like PNG, JPG, and WebP. Optionally add a text prompt to guide the animation style and motion characteristics.

Configure motion parameters including animation strength and temporal consistency settings. Higher animation strength produces more dramatic motion but risks consistency issues. Temporal consistency controls how closely the animation follows the source image.

Generate the animation and preview results in real-time as frames are produced. The interface displays the source image alongside the generated animation for comparison. Download options provide various video formats for different use cases.

Video Continuation Workflow

Access the video continuation tab for extending existing videos. Upload the short source clip you want to extend, then specify how many additional frames to generate for the continuation.

Set consistency parameters that control how closely the continuation matches the source video style. Higher consistency maintains closer adherence to original content but may limit variation. Lower consistency allows more creative interpretation in the extension.

Launch the continuation process and monitor progress through the interface. The model analyzes the source video before beginning generation of new frames. Final output combines the original video with the generated continuation smoothly.

Advanced Generation Parameters

The interface exposes several advanced parameters for experienced users seeking fine control.

Sampling Parameters: Number of diffusion steps controls generation quality versus speed trade-off. More steps generally improve quality but increase generation time linearly. Typical range is 20-50 steps, with 30 providing good balance.

Sampler type selection chooses the diffusion sampling algorithm. DDPM provides standard quality. DPM-Solver variants offer faster generation with similar quality. Experiment to find preferred balance for your use cases.

Quality Settings: Resolution scaling adjusts output resolution from the default 720p. Higher resolutions require significantly more VRAM and generation time. Lower resolutions speed iteration during prompt testing and parameter tuning.
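A quick token count shows why resolution is so expensive. The layout constants are assumptions, common for video diffusion transformers but not confirmed for LongCat-Video: 8x VAE downsampling plus 2x2 patches (16 pixels per token side) and 4x temporal compression.

```python
def latent_tokens(width: int, height: int, num_frames: int,
                  px_per_token: int = 16, frames_per_latent: int = 4) -> int:
    # Assumed layout: 16 px per token side and 4 video frames per latent frame.
    latent_frames = -(-num_frames // frames_per_latent)  # ceiling division
    return latent_frames * (width // px_per_token) * (height // px_per_token)


t720 = latent_tokens(1280, 720, 120)
t1080 = latent_tokens(1920, 1080, 120)
token_ratio = t1080 / t720                 # about 2.2x more tokens at 1080p
dense_attention_ratio = token_ratio ** 2   # about 5x more work if attention were dense
```

Under these assumptions, moving from 720p to 1080p multiplies tokens by about 2.2x and dense attention work by about 5x. Block-sparse attention softens the second figure but not the first.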

Temporal consistency weights balance motion smoothness against detail retention. Higher values produce smoother motion but may sacrifice fine details. Lower values maintain detail but risk jittery movement.

Output Options: Frame rate selection chooses between 24fps and 30fps output. Codec selection determines video compression and quality trade-offs. Format options include MP4 for broad compatibility and WebM for web optimization.

For users preferring managed solutions without local configuration complexity, platforms like Apatero.com provide similar generation capabilities through improved web interfaces.

What Are the Performance Characteristics and Optimization Strategies?

Understanding LongCat-Video's performance profile helps optimize workflows for your specific hardware and use case requirements.

Generation Speed Analysis

Generation speed depends heavily on hardware configuration and parameter choices.

Typical Generation Times on Consumer Hardware:

| Hardware | Resolution | Duration | Generation Time |
|---|---|---|---|
| RTX 4090 | 720p | 5 seconds | 2-3 minutes |
| RTX 3090 | 720p | 5 seconds | 3-4 minutes |
| RTX 4090 x2 | 720p | 5 seconds | 1-2 minutes |
| RTX 4090 | 720p | 10 seconds | 4-6 minutes |

Parameter Impact on Speed: Doubling frame count approximately doubles generation time due to linear scaling. Increasing diffusion steps from 30 to 50 adds roughly two-thirds to generation time (50/30 ≈ 1.67), since each step costs about the same. Higher guidance scales have minimal impact on speed but affect quality.
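These rules of thumb combine into a rough planning estimate. The sketch assumes time is linear in frames and in steps. It also assumes a parallel efficiency for extra GPUs; the 0.9 default is a placeholder, not a measured figure. The baseline is whatever run you have timed yourself, such as one row of the table above (its step count isn't stated, so record yours).

```python
def estimate_minutes(base_minutes: float, frames: int, base_frames: int,
                     steps: int, base_steps: int, num_gpus: int = 1,
                     parallel_efficiency: float = 0.9) -> float:
    # Scale the measured baseline linearly in frame count and in step count.
    scale = (frames / base_frames) * (steps / base_steps)
    # Each extra GPU contributes `parallel_efficiency` of a full GPU's speed.
    gpu_speedup = 1 + (num_gpus - 1) * parallel_efficiency
    return base_minutes * scale / gpu_speedup
```

For example, if a 150-frame, 30-step clip took 2.5 minutes, the same clip at 50 steps is estimated at about 4.2 minutes, and twice the frames at 30 steps at 5 minutes.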

Memory Usage and Optimization

VRAM management proves critical for stable generation, especially on 24GB cards running at capacity.

Memory Consumption Breakdown: Model weights occupy approximately 16-18GB of VRAM when fully loaded. For 13.6 billion parameters, that implies roughly 8-bit weights or partial CPU offloading, since 16-bit weights alone would need about 27GB. Activation memory during generation adds 4-6GB depending on batch size and resolution. Temporary buffers and computation overhead require a 2-3GB reserve.

This totals 22-27GB under full load, which explains why 24GB cards run at their limit and benefit from optimization.
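To check weight figures for other precisions, multiply the parameter count by bytes per parameter. This covers weights only. Activations, buffers, and any separately loaded components come on top.

```python
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "8-bit": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    # Weights only, in decimal gigabytes (1 GB = 1e9 bytes).
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gb(13.6e9, dtype):.1f} GB")
```

For 13.6 billion parameters this gives 54.4GB in float32, 27.2GB in bfloat16 or float16, and 13.6GB at one byte per parameter.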


Optimization Techniques:

Gradient checkpointing trades computation time for reduced activation memory by recomputing activations during the backward pass rather than storing them. Because generation has no backward pass, it only pays off when fine-tuning the model. For inference, run generation under torch.inference_mode() (or torch.no_grad()) so PyTorch never stores activations for gradients at all.

Reduce batch size to the minimum viable value, typically 1 for single video generation. Batch processing only benefits multi-video production workflows and requires proportionally more VRAM.

Load weights in 16-bit precision (bfloat16 or float16) instead of float32 where supported. This halves weight memory with negligible quality impact. For mixed-precision computation, torch.autocast chooses a precision for each operation automatically in most cases.

Quality Tuning for Different Use Cases

Different applications benefit from different parameter optimizations.

Maximum Quality Settings: Use 50 diffusion steps for finest detail and motion coherence. Set guidance scale to 7-9 for strong prompt adherence without artifacts. Enable temporal consistency at high values to ensure smooth motion.

These settings produce best possible output but require maximum generation time. Reserve for final production renders after prompt and composition refinement.

Rapid Iteration Settings: Reduce to 20 diffusion steps for quick preview generations during testing. A lower guidance scale of 5-6 gives looser prompt adherence with a slight quality reduction; the guidance value itself has little effect on speed. Generate shorter durations of 3-4 seconds instead of full length for faster feedback.

Rapid settings enable 2-3x faster generation, allowing quicker prompt experimentation and parameter tuning. Use these for development before switching to quality settings for finals.

Balanced Production Settings: Use 30 diffusion steps as the optimal quality-speed balance for most use cases. Set guidance scale to 7 for reliable prompt adherence with natural results. Generate full intended duration since you need final output.

These settings work well for production workflows where quality matters but generation time affects practical throughput.
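The three profiles can be kept as named presets. The field names are hypothetical; map them onto whatever the interface or scripts expose. Where the text gives a range, the values below take a point inside it (guidance 8 within 7-9, 5.5 within 5-6, 3.5 seconds within 3-4).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GenerationPreset:
    steps: int
    guidance_scale: float
    duration_s: Optional[float]  # None means "render the full intended duration"


# Hypothetical field names; values follow the three profiles described above.
PRESETS = {
    "max_quality": GenerationPreset(steps=50, guidance_scale=8.0, duration_s=None),
    "rapid": GenerationPreset(steps=20, guidance_scale=5.5, duration_s=3.5),
    "balanced": GenerationPreset(steps=30, guidance_scale=7.0, duration_s=None),
}

# Sampling time scales with step count, so rapid is 50 / 20 = 2.5x faster than
# max quality from steps alone, before the shorter preview duration is counted.
step_speedup = PRESETS["max_quality"].steps / PRESETS["rapid"].steps
```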

What Are the Commercial Use Cases and MIT License Implications?

LongCat-Video's MIT License release represents significant opportunity for commercial deployment and customization.

Understanding the MIT License

The MIT License is one of the most permissive open-source licenses available. You can use LongCat-Video commercially without licensing fees or usage restrictions. Modification of the model and code is fully permitted for any purpose. Redistribution in original or modified form is allowed with attribution.

The only requirement is including the copyright notice and the MIT License text in all copies or substantial portions of the software. This minimal restriction makes LongCat-Video highly attractive for commercial applications where licensing often creates barriers.

Comparison to Other Video Models: Some open-source video models use more restrictive licenses that prohibit commercial use or require share-alike provisions. Commercial models typically charge per-generation fees or require expensive subscriptions. LongCat-Video's MIT License eliminates these barriers entirely.

Commercial Application Opportunities

The combination of competitive quality, unified architecture, and permissive licensing creates numerous commercial opportunities.

Content Creation and Marketing: Generate promotional videos for products and services without recurring generation costs. Create social media content at scale with consistent quality and branding. Develop advertisement variations quickly for A/B testing different approaches.

Marketing teams can deploy LongCat-Video internally to reduce dependency on external video production or expensive cloud services. The one-time hardware investment pays for itself quickly compared to per-generation pricing.
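To test the "pays for itself" claim against your own prices, divide the hardware cost by the per-video saving. The sketch ignores staff time, depreciation, and failed generations, so treat its answer as optimistic. All inputs are yours to supply.

```python
def break_even_videos(hardware_cost: float, cloud_cost_per_video: float,
                      gpu_kw: float, minutes_per_video: float,
                      price_per_kwh: float) -> float:
    # Local marginal cost: electricity for the GPU time one video takes.
    local_cost = gpu_kw * (minutes_per_video / 60) * price_per_kwh
    saving = cloud_cost_per_video - local_cost
    if saving <= 0:
        return float("inf")  # local generation never pays back at these prices
    return hardware_cost / saving
```

The result is the number of videos after which the hardware has paid for itself relative to the cloud service.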

Media and Entertainment: Produce B-roll footage for documentary and educational content efficiently. Generate storyboard animations and pre-visualization for film and video projects. Create animated sequences for indie games and interactive media.

Independent creators gain access to production tools that previously required a significant budget. The unified architecture streamlines workflows that traditionally required multiple specialized tools.

E-commerce and Retail: Transform product photography into dynamic product demonstrations automatically. Generate lifestyle context videos showcasing products in use scenarios. Create personalized video content for different customer segments and markets.

Online retailers can enhance product listings with video content without expensive photoshoots. The image-to-video capability proves particularly valuable for bringing catalog images to life.

Education and Training: Develop educational video content illustrating concepts and processes. Create training materials with consistent visual style and quality. Generate instructional sequences demonstrating techniques and procedures.

Educational institutions and corporate training departments can produce custom content without relying on external production resources.

Software Integration: Embed video generation capabilities directly into applications and services. Build automated content pipelines that generate video programmatically. Offer video generation as a feature within larger creative software ecosystems.

The MIT License permits integration without licensing complications, unlike models with restrictive terms.

For businesses preferring managed deployment without infrastructure management, services like Apatero.com provide similar capabilities with simplified administration and guaranteed uptime.

How Does LongCat-Video Handle Long-Form Video Generation?

LongCat-Video's name references its specific strength in generating and extending videos beyond the typical short clips produced by most AI video models.

The Long-Form Challenge

Most video generation models train on relatively short clips, typically 3-10 seconds maximum. Attempting to generate beyond training length often produces quality degradation including motion incoherence, visual drift, and temporal inconsistencies. Memory requirements scale poorly, making long generations impractical.

These limitations restrict AI video generation to short clips that require extensive manual editing to assemble into longer sequences. This undermines productivity gains and limits applications that need a specific duration.

Block Sparse Attention Advantage

LongCat-Video's Block Sparse Attention architecture specifically addresses the long-form challenge.

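Traditional full attention computes relationships between every pair of positions in the sequence. Counting at the frame level for simplicity, a 10-second video at 30fps has 300 frames and therefore 300 × 300 = 90,000 frame pairs. (Real models attend over compressed latent tokens, many per frame, so actual counts are far larger, but the scaling is the same.) Doubling the duration quadruples the attention computation and, without memory-efficient kernels, the attention memory.

Block Sparse Attention divides frames into temporal blocks and computes attention primarily within blocks and between adjacent blocks. With a fixed block size and a fixed number of attended blocks per query block, the cost grows linearly with video length instead of quadratically.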

The practical implications are substantial. Under linear scaling, a 10-second video costs roughly twice as much to generate as a 5-second one, rather than four times as much. Video continuation extends sequences without the quality collapse seen in other models, and memory usage remains manageable even for extended generations.
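To make the scaling argument concrete, here is a toy count of attended pairs under the simplified pattern described above: dense attention over every frame pair versus attention restricted to each frame's own block plus the immediately neighboring blocks. It treats frames as the attention units and uses a hypothetical block size of 30 frames (one second at 30fps). Real models attend over latent tokens, and LongCat-Video's actual block-selection rule may differ, so this illustrates the arithmetic only, not the model's implementation.

```python
def dense_pairs(num_frames: int) -> int:
    """Full attention: every frame attends to every frame (including itself)."""
    return num_frames * num_frames


def block_local_pairs(num_frames: int, block_size: int) -> int:
    """Simplified sparse pattern: each frame attends to all frames in its own
    block and in the immediately preceding and following blocks."""
    num_blocks = -(-num_frames // block_size)  # ceiling division
    # Size of each block; the last block may be partial.
    sizes = [min(block_size, num_frames - b * block_size) for b in range(num_blocks)]
    total = 0
    for b, size in enumerate(sizes):
        # Keys visible to queries in block b: blocks b-1, b, b+1, clipped at the edges.
        visible = sum(sizes[max(0, b - 1): b + 2])
        total += size * visible
    return total


if __name__ == "__main__":
    for seconds in (5, 10, 20):
        frames = seconds * 30  # 30 fps
        print(f"{seconds:>2}s ({frames} frames): dense={dense_pairs(frames):>7,} "
              f"block-local={block_local_pairs(frames, block_size=30):>6,}")
```

For 300 frames the sketch gives 90,000 dense pairs versus 25,200 block-local pairs. Doubling to 600 frames takes the dense count to 360,000 (four times as many) while the block-local count only grows to 52,200 (roughly twice as many).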


Temporal Consistency Mechanisms

Beyond efficient attention, LongCat-Video implements several mechanisms ensuring consistency across extended durations.

The coarse-to-fine generation strategy establishes overall temporal structure before adding details. This prevents local optimization that creates global inconsistencies. Motion prediction components maintain coherent velocity and trajectory across frames.

Reference frame conditioning anchors later frames to earlier content, preventing visual drift. The model maintains awareness of established visual elements throughout generation.

Practical Long-Form Capabilities

In practical testing, LongCat-Video demonstrates superior performance for extended video generation.

Single Generation: Direct generation of 10-15 second clips maintains quality comparable to shorter durations. Motion remains coherent without the stuttering or drift typical of other models. Visual consistency preserves subject characteristics throughout the extended sequence.

Iterative Continuation: Using the video continuation mode, you can extend videos well beyond single-generation limits. Generate an initial 5-second base clip with desired composition and content. Extend with continuation mode in 5-second increments, maintaining consistency. Repeat the continuation process to build sequences of 20-30 seconds or longer.

Each continuation maintains coherence with previous content through the model's context processing. Quality remains remarkably consistent across multiple continuation stages.
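The iterative continuation workflow amounts to a simple loop: generate a base clip, then repeatedly condition on the tail of what exists so far and append new frames. The sketch below shows only that control flow. `generate_base` and `continue_from` are hypothetical stand-ins (stubs that emit placeholder frame labels), not LongCat-Video's actual API, and the 5-second increment and 16-frame context length are illustrative assumptions.

```python
import math
from typing import Callable, List

Frame = str  # placeholder; a real pipeline would hold image tensors


def continuation_rounds(target_s: float, base_s: float, step_s: float) -> int:
    """Number of continuation passes needed to reach at least target_s seconds."""
    if target_s <= base_s:
        return 0
    return math.ceil((target_s - base_s) / step_s)


def build_long_video(
    generate_base: Callable[[int], List[Frame]],
    continue_from: Callable[[List[Frame], int], List[Frame]],
    target_s: float,
    base_s: float = 5.0,
    step_s: float = 5.0,
    fps: int = 30,
    context_frames: int = 16,
) -> List[Frame]:
    """Generate a base clip, then extend it in fixed increments.

    Each pass conditions only on the last `context_frames` frames, so the
    conditioning input stays the same size however long the video gets.
    """
    video = generate_base(int(base_s * fps))
    for _ in range(continuation_rounds(target_s, base_s, step_s)):
        context = video[-context_frames:]
        video.extend(continue_from(context, int(step_s * fps)))
    return video


# Hypothetical stubs so the sketch runs without a model.
def fake_base(n: int) -> List[Frame]:
    return [f"base_{i}" for i in range(n)]


def fake_continue(context: List[Frame], n: int) -> List[Frame]:
    anchor = context[-1]  # a real model would use the whole context window
    return [f"{anchor}+{i}" for i in range(n)]


if __name__ == "__main__":
    clip = build_long_video(fake_base, fake_continue, target_s=30)
    print(len(clip) / 30, "seconds")
```

A 30-second target with a 5-second base needs five continuation passes. Conditioning on a fixed-length tail is one way to keep memory flat as the video grows; how much preceding context LongCat-Video itself conditions on is not covered in this guide, so check the project's documentation before choosing a value.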

Quality Preservation: Unlike models that progressively degrade with each continuation or extended generation, LongCat-Video maintains quality through its architectural design. Motion patterns stay coherent rather than becoming increasingly erratic. Visual details remain consistent instead of slowly drifting or blurring.

This capability makes LongCat-Video particularly well suited for applications that need videos longer than typical AI video generation limits allow.

What Are the Limitations and Considerations?

While LongCat-Video brings significant capabilities, understanding its limitations ensures appropriate application and realistic expectations.

Technical Limitations

Hardware Requirements: The 24GB minimum VRAM requirement restricts accessibility compared to models with aggressive quantization options. Consumer GPUs with less than 24GB of VRAM (below the RTX 3090 tier) cannot run the model at full quality. This is a higher barrier to entry than models like Wan 2.2, which support GGUF quantization down to 4GB VRAM.

For detailed low-VRAM strategies with other models, see our complete low-VRAM survival guide.

Generation Speed: Even on high-end hardware, generation takes several minutes for short clips. This limits real-time or interactive applications. Iteration speed during creative development remains slower than working with pre-rendered assets.

Commercial cloud services sometimes offer faster generation through optimized infrastructure, though at ongoing cost. Platforms like Apatero.com provide managed alternatives when generation speed proves critical.

Resolution Constraints: Native 720p output falls short of the 1080p or 4K requirements of some professional applications. Upscaling after generation is possible but adds workflow complexity and processing time. At 720p, very fine textures and small elements lose detail that a higher native resolution would preserve.

Quality and Capability Limitations

Complex Scene Handling: While competent at multi-subject scenes, LongCat-Video sometimes struggles with very complex compositions involving 5 or more distinct subjects with independent actions. Spatial relationship understanding occasionally produces layout errors in crowded scenes.

In direct comparisons, HunyuanVideo handles complex multi-person cinematic scenes better.

Prompt Understanding: Like all current video generation models, LongCat-Video's prompt interpretation has limits. Highly specific visual details may not generate exactly as described. Abstract concepts and complex instructions sometimes get misinterpreted. Achieving precise results often requires iterative refinement and prompt engineering.

Text Rendering: The model struggles with generating readable text within videos, a common limitation across current video generation models. Avoid prompts requiring legible text, signs, or written content. Add text elements in post-production rather than relying on generation.

Workflow Considerations

Learning Curve: Installation and configuration require technical competence beyond consumer tools. Understanding parameter effects and optimization techniques takes experimentation. Achieving consistent quality results requires developing prompt engineering skills.

This contrasts with managed platforms, which offer simpler interfaces at the cost of customization flexibility.

Resource Planning: Electricity costs for extended generation sessions on multi-GPU systems are non-trivial. Storage requirements grow quickly when generating and preserving multiple variations. Cooling and noise from sustained GPU usage may need planning in office environments.

Ecosystem Maturity: As a newer release, LongCat-Video lacks the extensive community resources available for established models like Wan 2.2. Fewer third-party tools, custom nodes, and integration options currently exist. Community support and documentation remain in earlier stages compared to mature alternatives.

This gap will likely close over time as adoption grows and the community develops resources.

How Does Multi-Reward GRPO Training Improve Quality?

LongCat-Video employs an advanced training methodology called Multi-Reward GRPO (Group Relative Policy Optimization) that contributes to its quality characteristics.

Traditional Video Model Training

Conventional video generation models typically train using standard diffusion loss objectives. The model learns to turn random noise into coherent video frames, and training focuses on matching the distribution of the training videos. The loss measures per-element reconstruction error, usually in a compressed latent space rather than in raw pixels.

This approach produces functional models but sometimes misses higher-level quality factors that human viewers prioritize like motion naturalness, aesthetic appeal, and narrative coherence.

Multi-Reward GRPO Approach

GRPO adds reinforcement learning to the training process, typically as a fine-tuning stage after standard pretraining, and optimizes for multiple quality objectives simultaneously rather than a single reconstruction loss.

Reward Signal Components: The training incorporates multiple reward signals reflecting different quality dimensions. Motion coherence rewards ensure smooth, physically plausible movement. Aesthetic quality rewards optimize for visual appeal and composition. Temporal consistency rewards penalize frame-to-frame instability and artifacts.

Each reward component guides optimization toward specific quality aspects that users actually care about.

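Group Relative Optimization: The "group relative" part describes how rewards become training signals. For each prompt, the model samples a group of candidate videos, and each candidate is scored against the rest of its group: its advantage is its reward minus the group mean, divided by the group's standard deviation. Because the baseline comes from the group itself, GRPO needs no separately trained value (critic) model, which keeps reinforcement learning tractable for very large generators.

Comparing candidates generated from the same prompt also keeps the training signal focused on which outputs are better for that prompt, rather than on how easy or hard the prompt happens to be.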
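The group-relative advantage at the heart of GRPO is simple to compute. The sketch below scores a hypothetical group of four sampled videos for one prompt with three hypothetical rewards (named after the reward signals described above), normalizes each reward within the group, and combines them with a weighted sum. The reward names, scores, weights, and the normalize-then-weight combination are all assumptions for illustration; this guide does not specify how LongCat-Video combines its rewards, and the policy-gradient update that consumes these advantages is omitted.

```python
import statistics
from typing import Dict, List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: (r_i - mean(group)) / (std(group) + eps)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def multi_reward_advantages(
    scores: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Normalize each reward within the group, then combine with weights.

    `scores` maps a reward name to one score per sampled video in the group.
    """
    group_size = len(next(iter(scores.values())))
    combined = [0.0] * group_size
    for name, values in scores.items():
        for i, adv in enumerate(group_relative_advantages(values)):
            combined[i] += weights[name] * adv
    return combined


if __name__ == "__main__":
    # Four hypothetical samples for one prompt, scored by three hypothetical rewards.
    scores = {
        "motion": [0.62, 0.71, 0.55, 0.80],
        "aesthetic": [0.40, 0.52, 0.47, 0.45],
        "temporal": [0.90, 0.85, 0.70, 0.88],
    }
    weights = {"motion": 1.0, "aesthetic": 0.5, "temporal": 1.0}  # hypothetical
    for i, adv in enumerate(multi_reward_advantages(scores, weights)):
        print(f"sample {i}: advantage {adv:+.2f}")
```

Normalizing each reward before weighting keeps a reward with a wide numeric range from drowning out the others. Because each normalized reward sums to zero across the group, the combined advantages do too: above-average samples are reinforced and below-average ones are discouraged.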

Practical Quality Implications

The multi-reward training methodology translates to observable quality improvements in generated content.

Videos demonstrate more natural motion patterns that align with physical expectations rather than artificial or uncanny movement. Aesthetic quality tends toward more visually pleasing compositions and color palettes without requiring explicit prompting. Temporal stability shows fewer flickering artifacts and frame-to-frame inconsistencies.

These improvements prove particularly noticeable in extended generations where training quality directly impacts whether long-form generation maintains coherence or degrades.

- Motion quality: More natural and physically plausible movement patterns

- Aesthetic appeal: Improved visual composition and color without explicit guidance

- Consistency: Better temporal stability across extended generations

- Robustness: Reliable performance across diverse content types and prompts

For creators interested in training custom models, our Wan 2.2 training guide covers fine-tuning techniques applicable to video generation models.

What's Next for LongCat-Video and Open-Source Video Generation?

LongCat-Video represents the current state of the art in open-source video generation, but the field continues to advance rapidly.

Expected Near-Term Developments

Model Improvements: Higher resolution support reaching 1080p native output without upscaling. Extended duration capabilities enabling single generations of 15-20 seconds or more. Improved efficiency through architecture optimizations reducing VRAM requirements.

These advancements will likely arrive through iterative model releases and community modifications.

Ecosystem Integration: ComfyUI custom nodes will emerge as community members build integration tools. Automated workflow pipelines will enable batch processing and production automation. Third-party tools will extend functionality with specialized preprocessing and post-processing.

The open-source nature encourages community development that typically accelerates capability expansion.

Fine-Tuning and Specialization: Domain-specific fine-tuned variants will appear for particular content types like character animation, architectural visualization, or product demonstrations. Style transfer applications will enable consistent branded content generation. Custom training on proprietary datasets will allow organizations to specialize models for their unique needs.

For comprehensive fine-tuning strategies, see our LoRA training complete guide.

Competitive Landscape Evolution

The release of LongCat-Video intensifies competition in open-source video generation.

Established models like Wan 2.2 will likely respond with architectural updates incorporating successful approaches. New entrants will continue emerging from research labs with novel architectural innovations. Commercial services face increasing pressure from open-source alternatives matching their quality.

This competitive dynamic drives rapid innovation benefiting all users through improved capabilities and reduced costs.

Long-Term Trajectory

Looking further ahead, several trends appear likely to shape video generation development.

Real-Time Generation: Continued optimization and specialized hardware will push toward real-time video generation. Interactive applications and live content creation become possible. Gaming and virtual production workflows integrate AI video generation as standard tools.

Multimodal Integration: Tighter integration with audio generation for complete audiovisual content creation. Understanding and generating synchronized sound and motion. Text, image, video, and audio models converge toward unified multimodal systems.

Extended Duration: Technical advances enabling minute-long or longer single generations without quality loss. Feature-length content creation becomes theoretically possible through advanced continuation. Memory and computation scaling problems get solved through architectural innovation.

For exploration of multimodal approaches, see our OVI ComfyUI guide covering simultaneous video and audio generation.

Frequently Asked Questions

What hardware do I need to run LongCat-Video?

Minimum 24GB VRAM GPU like RTX 3090, RTX 4090, or professional cards with equivalent memory. 32GB system RAM recommended, 100GB storage space for models. Multi-GPU setup optional but beneficial for faster generation and production workflows.

How does LongCat-Video compare to Wan 2.2 for video generation?

LongCat-Video offers unified architecture for text-to-video, image-to-video, and continuation in one model, superior long-form generation through Block Sparse Attention, and competitive quality with commercial models. Wan 2.2 provides better low-VRAM support through GGUF quantization, more mature ComfyUI integration, and larger community ecosystem. See our complete Wan 2.2 vs Mochi vs HunyuanVideo comparison.

Can I use LongCat-Video commercially?

Yes, the MIT License permits commercial use without licensing fees or usage restrictions. You can integrate it into commercial products, offer it as a service, or use it for client work. The only requirement is including the copyright notice and MIT License text in distributions.

How long does it take to generate a video?

An RTX 4090 generates a 5-second 720p video in 2-3 minutes with standard settings. 10-second videos take 4-6 minutes. Multi-GPU setups reduce generation time, though usually less than proportionally because of communication overhead. Generation speed depends on the resolution, frame count, and number of diffusion steps selected.

What resolution videos does LongCat-Video produce?

Native output is 720p at 30fps. You can upscale to 1080p or 4K using traditional video upscalers or AI upscaling tools. Higher native resolutions require more VRAM and generation time. See our video upscaling guide for enhancement techniques.

Does LongCat-Video work with ComfyUI?

Not currently through official nodes, though community integrations are likely to emerge. The model provides a standalone interface and API. Future ComfyUI custom nodes would enable workflow integration similar to other video models.

How does Block Sparse Attention improve video generation?

Block Sparse Attention reduces the growth of attention cost from quadratic toward linear as video length increases. This enables longer videos without memory explosion, consistent quality in extended generations, and practical video continuation. Cost grows roughly in proportion to video length rather than with its square, as it would under full attention.

Can I fine-tune LongCat-Video for specific content?

The MIT License permits fine-tuning and modification for any purpose. You can train on custom datasets for specialized content, adjust for specific visual styles, or optimize for particular use cases. Technical knowledge of model training is required.

What are the main limitations compared to commercial services?

LongCat-Video has higher hardware requirements than cloud services, slower generation than optimized commercial infrastructure, and 720p native resolution versus 1080p+ from some commercial tools. Benefits include no per-generation fees, complete control, and unlimited generations.

How do I get started with LongCat-Video?

Install Python 3.10 and PyTorch 2.6 with CUDA support, clone the repository from GitHub, download the model weights from Hugging Face, install FlashAttention-2 and the other dependencies, and run a test script to verify the installation. Then launch the Streamlit interface for user-friendly generation.
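Before cloning anything, a quick preflight check against the requirements listed in this guide (Python 3.10, PyTorch 2.6, FlashAttention-2, at least 24GB of VRAM) can save a failed install. The script below is a sketch, not part of the LongCat-Video repository. It assumes FlashAttention-2 is importable as `flash_attn` (its usual package name), only inspects the first CUDA device, and treats the listed versions as minimums even though the project may pin exact versions, so confirm against its README.

```python
import importlib.util
import sys
from typing import List, Optional, Tuple

MIN_PYTHON = (3, 10)
MIN_TORCH = (2, 6)
MIN_VRAM_GB = 24.0  # decimal GB (1e9 bytes), matching how cards are marketed


def parse_version(text: str) -> Tuple[int, int]:
    """'2.6.0+cu124' -> (2, 6)."""
    major, minor = text.split("+")[0].split(".")[:2]
    return int(major), int(minor)


def find_problems(python: Tuple[int, int], torch_version: Optional[str],
                  vram_gb: Optional[float], has_flash_attn: bool) -> List[str]:
    """Return a human-readable list of unmet requirements (empty if all good)."""
    problems = []
    if python < MIN_PYTHON:
        problems.append(f"Python {python[0]}.{python[1]} < 3.10")
    if torch_version is None:
        problems.append("PyTorch not installed")
    elif parse_version(torch_version) < MIN_TORCH:
        problems.append(f"PyTorch {torch_version} < 2.6")
    if vram_gb is None:
        problems.append("no CUDA GPU detected")
    elif vram_gb < MIN_VRAM_GB:
        problems.append(f"GPU has {vram_gb:.1f} GB VRAM < {MIN_VRAM_GB:.0f} GB")
    if not has_flash_attn:
        problems.append("flash_attn (FlashAttention-2) not importable")
    return problems


def probe() -> List[str]:
    """Collect facts from the current machine without failing if torch is absent."""
    torch_version, vram_gb = None, None
    if importlib.util.find_spec("torch") is not None:
        import torch
        torch_version = torch.__version__
        if torch.cuda.is_available():
            vram_gb = torch.cuda.get_device_properties(0).total_memory / 1e9
    has_flash = importlib.util.find_spec("flash_attn") is not None
    return find_problems(sys.version_info[:2], torch_version, vram_gb, has_flash)


if __name__ == "__main__":
    issues = probe()
    print("Requirements look satisfied." if not issues else "\n".join(issues))
```

Keeping the checks in a pure `find_problems` function makes them easy to test without a GPU; `probe` is the only part that touches the local machine.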

Conclusion - LongCat-Video's Position in the Video Generation Landscape

LongCat-Video arrives at a key moment in AI video generation evolution. As one of the newest major open-source releases in 2025, it brings unique capabilities that address real limitations in existing approaches.

The unified architecture supporting text-to-video, image-to-video, and video continuation in a single model simplifies workflows significantly. Block Sparse Attention enables practical long-form generation without the quality degradation plaguing other models. Multi-reward GRPO training produces output with natural motion and aesthetic appeal that rivals commercial alternatives.

Choose LongCat-Video if you need: Unified model handling all three generation modes without managing multiple specialized models. Long-form video capability beyond typical 5-second limitations. MIT-licensed commercial use without restrictions or recurring fees. Latest research innovations in an actively developed open-source release.

Consider alternatives if you have: Limited hardware below 24GB VRAM, where Wan 2.2 GGUF variants prove more accessible. Need for mature ComfyUI ecosystem integration with extensive custom nodes and community resources. Preference for managed cloud solutions without local infrastructure requirements.

For professionals comparing options, LongCat-Video represents a compelling choice alongside established alternatives like Wan 2.2, Mochi, and HunyuanVideo. Each brings distinct strengths to different use cases.

The video generation revolution continues accelerating. Models like LongCat-Video demonstrate that open-source alternatives now compete directly with expensive commercial services. For creators willing to invest in hardware and technical learning, the rewards include unlimited generation capability with no per-generation fees.

For those preferring managed solutions without infrastructure complexity, platforms like Apatero.com deliver similar capabilities through easier interfaces optimized for production use.

LongCat-Video represents where open-source video generation stands in 2025. With continued development and community adoption, it will play a significant role in democratizing professional video creation capabilities that were recently exclusive to well-funded studios.

The future of video generation is open, accessible, and increasingly powerful. LongCat-Video brings that future one step closer.
