How to Achieve Anime Character Consistency in AI Generation (2025)

Stop getting different characters in every generation. Master LoRA training, reference techniques, and workflow strategies for consistent anime characters.

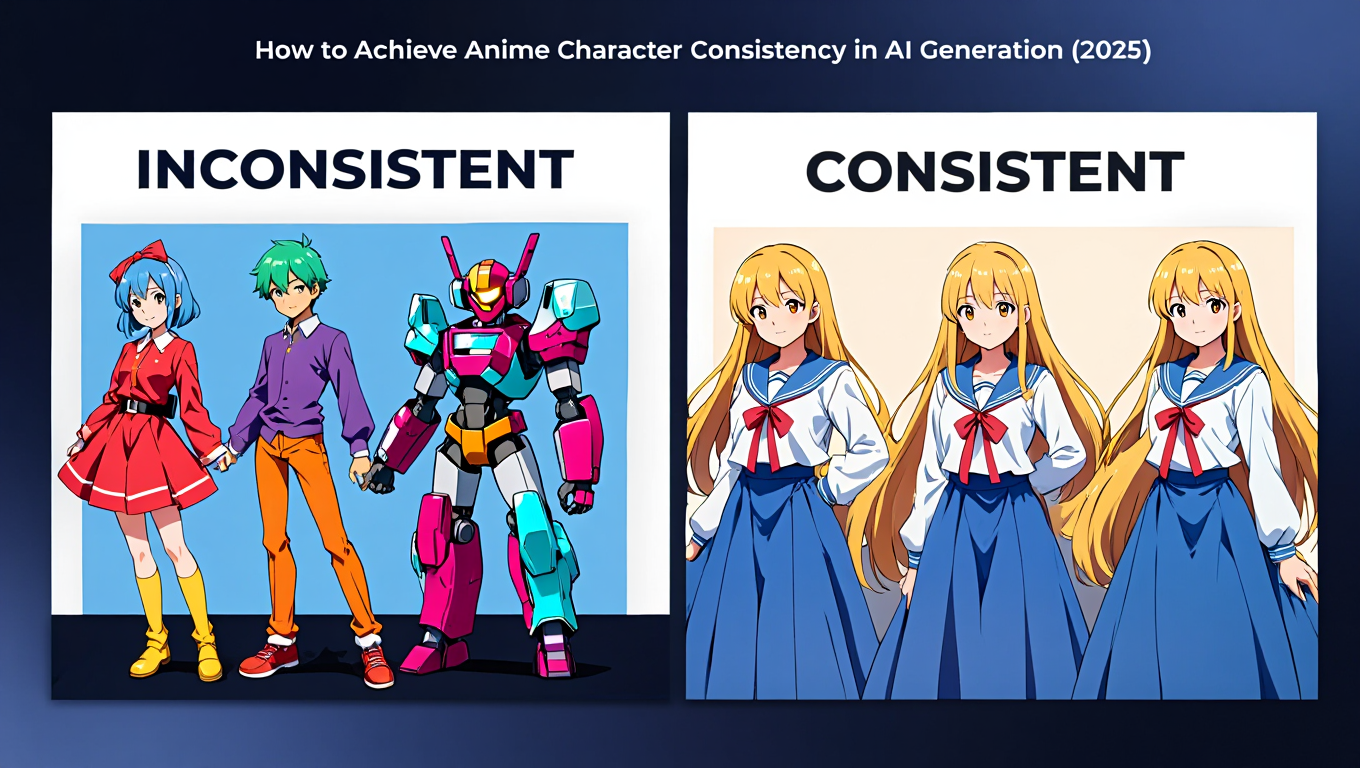

You generate the perfect shot of your original character. Blue hair in a specific style, distinctive eyes, that exact outfit design you've been refining. You're building a comic series, or a visual novel, or just exploring a character concept across different scenes. Then the next generation loads and she has completely different facial features, the wrong hair length, and an outfit that barely resembles the reference.

Forty generations later you've got forty variations of "blue-haired anime girl" but not a single consistent character. This is the problem that makes or breaks whether AI image generation actually works for storytelling and character-driven projects.

Quick Answer: Achieving anime character consistency in AI generation requires training a custom LoRA on 15-30 high-quality reference images of your character, using IPAdapter for pose and composition guidance, maintaining consistent prompting with character tags at high weight, and building repeatable workflows in ComfyUI that lock in facial features while allowing pose variation. The combination of trained LoRA (for identity), weighted prompts (for features), and reference conditioning (for composition) produces 80-90% consistency across generations.

- Custom LoRA training is essential for consistent original characters, not optional

- IPAdapter provides composition and pose consistency without affecting character identity

- Prompt structure matters more for anime than realistic models - tag ordering and weights are critical

- 15-30 varied reference images work better than 100 similar ones for LoRA training

- Consistency and pose flexibility exist in tension - workflows must balance both

The Three-Layer Approach That Actually Works

Character consistency isn't one technique, it's a system. People who succeed at this use three complementary approaches layered together, not a single magic solution.

Layer one is identity through LoRA training. This teaches the model what your specific character looks like at a fundamental level. Facial structure, distinctive features, overall design. The LoRA activates that learned identity in every generation.

Layer two is feature reinforcement through precise prompting. Even with a LoRA, prompts need to emphasize distinctive characteristics. Blue hair doesn't automatically mean your specific shade and style of blue hair. Weighted tags like "(long blue hair with side ponytail:1.4)" lock in specifics.

Layer three is compositional guidance through reference systems like IPAdapter or ControlNet. These control pose, angle, and composition separately from identity. You can vary how your character is positioned or what they're doing while maintaining who they are.

Most failed attempts at consistency use only one layer. Just prompting gives you generic characters. Just LoRA without good prompts produces inconsistent features. Just reference systems without identity training gives you similar poses of different characters. The stack is what makes it work.

Services like Apatero.com implement this layered approach automatically, handling LoRA management and reference conditioning behind the scenes so you can focus on creative direction rather than technical configuration.

Why LoRA Training Became Non-Negotiable

Before good LoRA training tools existed for anime models, character consistency was basically impossible for original characters. You could describe your character perfectly in prompts and still get endless variations. LoRAs changed everything by letting you teach the model your specific character directly.

The breakthrough wasn't just LoRA technology itself, it was LoRA training becoming accessible enough that non-technical artists could do it. Tools like Kohya SS simplified the process from "requires machine learning expertise" to "follow these steps and wait."

Training a character LoRA on modern anime models like Animagine XL or Pony Diffusion takes 15-30 good reference images. Not hundreds, not thousands. Quality and variety matter more than quantity. You want your character from different angles, different expressions, maybe different outfits, showing the consistency you're trying to capture.

The reference images themselves can be AI-generated. This sounds circular but it works. Generate 50 images of your character concept, manually select the 20 best that match your vision, train a LoRA on those curated selections. The LoRA reinforces the specific features you selected across that set, producing more consistent future generations.

Training time depends on hardware and settings but typically runs 1-3 hours on a decent GPU. The trained LoRA file is small, usually 50-200MB. Once trained, it loads in seconds and applies to every generation. The upfront time investment pays off immediately if you're generating multiple images of the same character.

The parameters matter though. Undertrained LoRAs have weak influence and characters still vary. Overtrained LoRAs make characters too rigid and hard to pose differently. The sweet spot is training until the character's distinctive features are reliably present but before the LoRA starts memorizing exact poses or compositions from your training set.

IPAdapter Changed the Consistency Game

IPAdapter solved a different problem than LoRA, but it's equally critical for a full workflow. LoRA handles "who is this character," IPAdapter handles "what is this character doing and how are they positioned."

The technical explanation is that IPAdapter injects image features into the generation process at a different point than text prompts or LoRAs. It influences composition, pose, and spatial relationships while largely leaving identity alone if that identity is locked in through LoRA.

In practice, this means you can use a reference image showing the exact pose you want while your LoRA maintains character identity. Generate your character sitting cross-legged? Feed a reference of anyone sitting cross-legged to IPAdapter, use your character LoRA, and you get your character in that pose. The pose comes from the reference, the identity comes from the LoRA.

This is massive for sequential art or comics. You're not fighting to describe complex poses in prompts while simultaneously maintaining character consistency. The reference handles pose, the LoRA handles identity, prompts handle details like expression and what they're wearing.

IPAdapter strength needs calibration. Too weak and it barely influences composition. Too strong and it starts affecting character features, undermining your LoRA. The sweet spot for anime work is usually 0.4-0.7 strength depending on how strict the pose matching needs to be versus how much creative interpretation you want.

Multiple IPAdapter models exist with different characteristics. IPAdapter Plus suits general use, IPAdapter Face maintains facial features from a reference (useful when you don't have a LoRA yet), and IPAdapter Style transfers artistic style separately from content. Understanding which adapter serves which purpose lets you combine them for layered control.

The workflow becomes: LoRA for character identity, IPAdapter for pose and composition, prompts for specifics like expression and setting, ControlNet optionally for additional precision on things like hand positions or specific angles. Each system handles what it does best, combined they produce control that wasn't possible with any single approach.

- Start with pose library: Build a collection of reference images showing various poses you commonly need

- Test strength ranges: Same character, same pose reference, vary IPAdapter strength from 0.3 to 0.8 to find your model's sweet spot

- Separate face and body references: Use IPAdapter Face for maintaining expression while IPAdapter Plus handles body pose

- Combine with ControlNet: IPAdapter for overall composition, ControlNet for precise details that must be exact
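The strength test in the list above can be planned as a small sweep. This is a minimal sketch, not tied to any particular tool: the function names, the reference image path, and the seed are hypothetical, and each record would still need to be fed into your own workflow by hand or by script. The seed and reference stay fixed so that strength is the only variable.

```python
def strength_sweep(start=0.3, stop=0.8, step=0.1):
    """Return strengths from start to stop inclusive, rounded to 2 decimals."""
    count = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(count)]


def sweep_plan(reference_image, seed, strengths):
    """Build one settings record per strength; everything else stays fixed."""
    return [
        {"reference_image": reference_image, "seed": seed, "ipadapter_strength": s}
        for s in strengths
    ]


# Hypothetical reference path and seed, for illustration only.
plan = sweep_plan("poses/sitting_cross_legged.png", seed=1234,
                  strengths=strength_sweep())
for run in plan:
    print(run)
```

Comparing the resulting images side by side shows where pose matching becomes reliable and where the reference starts bleeding into facial features.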

What Makes Prompting Anime Models Different

If you're coming from general-purpose models like base SDXL or Flux, anime model prompting feels backwards at first. The rules are different and ignoring that produces inconsistent results.

Anime models trained on booru-style tags expect specific tag structure. Character-defining features should appear early and with weight modifiers. Generic quality tags like "masterpiece" and "best quality" actually matter for anime models where they're mostly placebo on realistic ones. The model was trained on images tagged that way, so it responds to those patterns.

Tag ordering influences hierarchy. Earlier tags generally have more influence than later ones. If you bury your character's distinctive features at the end of a long prompt, they'll be weak or ignored. Lead with the identity information, follow with pose and setting details.

Weight modifiers like (tag:1.4) or (tag:0.8) let you emphasize or de-emphasize specific features. For consistency, heavily weight your character's unique features. "(purple eyes:1.4), (twin drills hairstyle:1.3), (frilly gothic dress:1.2)" locks in those specifics more strongly than surrounding details. The model pays more attention to weighted tags.

Negative prompts are more critical for anime models than realistic ones. Common problems like "multiple girls, extra limbs, deformed hands" need explicit negation. Anime models don't have the same inherent understanding of anatomy that realistic models do, so you need to guide them more explicitly away from common failures.

Artist tags dramatically shift style but can undermine character consistency if overused. An artist tag effectively says "draw in X person's style" which may conflict with your character's specific design if that artist's style is very distinctive. Use artist tags for general aesthetic direction but not as crutches for solving consistency problems.

The prompt engineering for consistency looks like this: character identity tags heavily weighted, pose and composition moderately weighted, setting and details normal weight, quality tags up front, comprehensive negative prompt. This structure reinforces character while allowing variation in other elements.
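That structure can be captured in a small helper so every generation uses the same ordering. This is only a sketch: the function names are made up for illustration, the identity tags come from the example above, and the pose and setting tags are placeholders. It follows the ordering described here (quality tags first, then weighted identity, then pose, then setting).

```python
def weighted(tag, weight=1.0):
    """Format a tag in (tag:weight) emphasis syntax; weight 1.0 is left bare."""
    return tag if weight == 1.0 else f"({tag}:{weight:g})"


def build_prompt(quality, identity, pose, setting):
    """Join tag groups in priority order: quality, identity, pose, setting."""
    parts = list(quality)
    parts += [weighted(tag, w) for tag, w in identity]
    parts += [weighted(tag, w) for tag, w in pose]
    parts += list(setting)
    return ", ".join(parts)


prompt = build_prompt(
    quality=["masterpiece", "best quality"],
    identity=[("purple eyes", 1.4), ("twin drills hairstyle", 1.3),
              ("frilly gothic dress", 1.2)],
    pose=[("sitting cross-legged", 1.1)],   # placeholder pose tag
    setting=["library", "soft lighting"],   # placeholder setting tags
)
negative = ", ".join(["multiple girls", "extra limbs", "deformed hands"])

print(prompt)
print(negative)
```

Keeping the identity list in one place means a new scene only changes the pose and setting arguments, which is the point of the structure.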

Which Base Models Handle Consistency Best

Not all anime models are equally good at maintaining character consistency even with proper techniques. The base model matters.

Pony Diffusion V6 became popular specifically because of strong consistency characteristics. It maintains features across generations better than most alternatives even without LoRA training. The tradeoff is that it has a distinctive aesthetic that not everyone likes. If the Pony look works for your project, the consistency comes easier.

Animagine XL produces more varied aesthetic styles and arguably prettier baseline output, but requires more careful prompting for consistency. It's more flexible, which means it also has more room to drift from your intended character. Excellent with proper LoRA training, more challenging with prompting alone.

Anything V5 and the Anything series maintain consistent popularity because they're reliable workhorses. Not the fanciest output, not the most features, but steady and predictable. Good choice when you want to focus on the workflow rather than fighting model quirks.

NovelAI's models excel at consistency by design since the platform focuses on character-driven storytelling. If you're using NovelAI Diffusion locally, it rewards the layered consistency approach more than most alternatives. The model was explicitly trained with character consistency as a priority.

Merge models are wildly unpredictable for consistency. Someone's custom merge of three different anime models might produce gorgeous one-off images but terrible consistency because the merged weights average out the features that make consistency possible. Stick with well-tested base models or carefully validated merges for character work.

The model choice interacts with your LoRA training. A LoRA trained on Animagine won't necessarily work on Pony Diffusion and vice versa. You're training on top of that specific model's understanding. Switching base models means retraining your character LoRA, which is annoying but necessary if you want to experiment with different model aesthetics.

For beginners, start with Pony Diffusion V6 because it's forgiving. Once you've mastered the consistency workflow there, branch out to other models if the aesthetic doesn't match your needs. Or use platforms like Apatero.com that abstract away model selection by maintaining character consistency across their optimized model choices.

Building a Repeatable Workflow in ComfyUI

Theory is great, practice means actually building workflows you can reuse. Here's how consistent character generation looks as an actual ComfyUI workflow structure.

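Start with your checkpoint loader for your chosen anime model. Connect its model and CLIP outputs to your LoRA loader with your character LoRA. The LoRA loader's model output then feeds your KSampler, and its CLIP output feeds your text encoders. This is the identity foundation.

Add IPAdapter nodes between the LoRA loader and the sampler. Your reference pose image, together with an IPAdapter model and a CLIP Vision model, feeds into the IPAdapter apply node. In the commonly used ComfyUI IPAdapter node packs this node patches the model rather than the text conditioning, so it sits on the model path just before the sampler. Exact node names vary between versions of those packs. This adds compositional control.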

Your positive prompt goes through CLIP Text Encode with your carefully structured tags. Character features weighted high, pose and setting details at normal weights, quality tags included. This reinforces the identity and specifies the variation you want.

The negative prompt is encoded the same way, with comprehensive negatives for common anime model failures. Multiple characters, anatomical problems, and quality degradation terms are all negated.

The sampler combines all these inputs - base model, LoRA modification, IPAdapter conditioning, text prompts positive and negative - into generations that maintain your character while varying based on your prompts and references.

Save this workflow as a template. Next time you need the same character in a different scenario, load the template, swap the IPAdapter reference image, modify the text prompts for the new scenario, generate. The infrastructure stays the same, only the variables change. This is how you go from struggling with consistency to producing multiple consistent shots in a session.
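A reusable template of this kind can be sketched as a ComfyUI API-format workflow, the node graph that ComfyUI can export as JSON. This example uses only built-in node types. The checkpoint and LoRA file names are hypothetical, the resolution, sampler, and step settings are placeholder assumptions, and the IPAdapter stage is left out because it comes from custom node packs whose node names differ between versions.

```python
import copy
import json

# Links are [source_node_id, output_index]. File names are hypothetical.
TEMPLATE = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "anime_base_model.safetensors"}},
    "2": {"class_type": "LoraLoader",
          "inputs": {"model": ["1", 0], "clip": ["1", 1],
                     "lora_name": "my_character.safetensors",
                     "strength_model": 1.0, "strength_clip": 1.0}},
    "3": {"class_type": "CLIPTextEncode",          # positive prompt
          "inputs": {"clip": ["2", 1], "text": ""}},
    "4": {"class_type": "CLIPTextEncode",          # negative prompt
          "inputs": {"clip": ["2", 1], "text": ""}},
    "5": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 1024, "height": 1024, "batch_size": 1}},
    "6": {"class_type": "KSampler",
          "inputs": {"model": ["2", 0], "positive": ["3", 0],
                     "negative": ["4", 0], "latent_image": ["5", 0],
                     "seed": 0, "steps": 28, "cfg": 6.0,
                     "sampler_name": "euler_ancestral",
                     "scheduler": "normal", "denoise": 1.0}},
    "7": {"class_type": "VAEDecode",
          "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
    "8": {"class_type": "SaveImage",
          "inputs": {"images": ["7", 0], "filename_prefix": "character"}},
}


def make_workflow(positive, negative, seed):
    """Copy the template and change only the per-scene variables."""
    workflow = copy.deepcopy(TEMPLATE)
    workflow["3"]["inputs"]["text"] = positive
    workflow["4"]["inputs"]["text"] = negative
    workflow["6"]["inputs"]["seed"] = seed
    return workflow


scene = make_workflow("masterpiece, (long blue hair with side ponytail:1.4), cafe",
                      "multiple girls, extra limbs, deformed hands", seed=42)
print(json.dumps(scene, indent=2)[:200])
```

The template itself never changes between scenes, which mirrors the idea of keeping the infrastructure fixed and swapping only the prompts, seed, and reference image.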

ControlNet can layer on top if you need additional precision. OpenPose for specific skeletal structure, Depth for exact spatial relationships, Canny for strong edge control. These add to the consistency stack rather than replacing any part of it.

How to Build Your Reference Dataset for LoRA Training

The reference images you train your LoRA on determine what consistency you get out. Building this dataset thoughtfully makes everything easier downstream.

Generate or collect 50-100 candidate images showing your character concept. These can come from AI generation, commissioned art, your own sketches if you draw, or carefully selected existing art that matches your vision. The source matters less than the consistency within the set.

Curate ruthlessly down to 15-30 best images. You're looking for consistency in the features that define your character while having variation in everything else. Same face, eyes, hair, body type across all selections. Different poses, expressions, outfits, angles. The LoRA learns what stays constant across the variations.

Variety in the training set produces flexible LoRAs. Training on all frontal views produces a LoRA that struggles with profile or three-quarter angles. Training on similar expressions makes different emotions difficult. Using the same outfit throughout might bake that outfit into the character's identity when you want the outfit to be variable. Think about what needs to be consistent versus what needs to be flexible.

Image quality matters more for LoRA training than for normal generation. Blurry references, artifacts, anatomical errors, these get learned and reinforced. Clean, high-quality references produce clean LoRAs that don't introduce problems. If you're using AI-generated references, only include the ones that came out correctly.

Tag your reference images, either by hand or with the automatic tagging in your training setup. Consistent, accurate tags help the LoRA learn which features correspond to which concepts. Most modern training tools can auto-tag using interrogation models, but manually reviewing and correcting those tags improves results.
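Reviewing tags is easier with a quick audit of the dataset folder. The sketch below assumes a common convention in which each image has a sidecar `.txt` caption with the same base name holding comma-separated tags. Check what your own trainer expects. The function name and the demo file names are hypothetical.

```python
import tempfile
from collections import Counter
from pathlib import Path

IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".webp"}


def audit_captions(dataset_dir):
    """Return (images missing a caption file, tag counts across all captions)."""
    missing, counts = [], Counter()
    for image in sorted(Path(dataset_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTS:
            continue
        caption = image.with_suffix(".txt")
        if not caption.exists():
            missing.append(image.name)
            continue
        tags = [t.strip() for t in caption.read_text(encoding="utf-8").split(",")]
        counts.update(t for t in tags if t)
    return missing, counts


# Demo on a throwaway folder with empty placeholder image files.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "img_01.png").write_bytes(b"")
    (root / "img_01.txt").write_text("mychar, blue hair, side ponytail, smile",
                                     encoding="utf-8")
    (root / "img_02.png").write_bytes(b"")
    (root / "img_02.txt").write_text("mychar, blue hair, side ponytail, profile",
                                     encoding="utf-8")
    (root / "img_03.png").write_bytes(b"")  # deliberately left without a caption
    missing, counts = audit_captions(root)

print("missing captions:", missing)
print("tag counts:", dict(counts))
```

Tags that appear on every image are candidates for the character's fixed identity, while tags that appear only once or twice describe the variation you want to keep flexible.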

Resolution should be consistent or at least similar across your reference set. Training on images of wildly different sizes sometimes confuses the learning process. 512x512 or 768x768 are common base resolutions for LoRAs on SD 1.5-based anime models, while SDXL-based models such as Animagine XL and Pony Diffusion are typically trained at around 1024x1024. Higher resolution requires more VRAM and longer training times.

Training Parameters That Actually Affect Consistency

LoRA training involves dozens of parameters but most barely matter for results. These are the ones that actually impact character consistency.

Learning rate controls how aggressively the LoRA learns from your data. Too high and it overfits, memorizing specific images. Too low and it underfits, barely learning anything useful. For character consistency on anime models, learning rates between 0.0001 and 0.0005 work reliably. Start at 0.0002 and adjust if results are too weak or too rigid.

Training epochs is how many times the training process loops through your entire dataset. Underdone and you get weak, inconsistent LoRAs. Overdone and you get rigid LoRAs that memorize your training images. For 15-30 image datasets, 10-20 epochs usually hits the sweet spot. Watch your preview generations during training to catch when it's learned enough.

Network dimension and alpha control LoRA capacity and how strongly it applies. Common values are 32 or 64 for dimension, with alpha equal to dimension. Higher values give more expressive LoRAs but require more training time and can overfit more easily. For character consistency, 32/32 or 64/64 both work well. Going higher doesn't usually improve results for this use case.

Batch size affects training speed and memory usage more than final quality. Larger batches train faster but need more VRAM. For character work, batch size of 1-4 is typical. The quality impact is minor, set this based on what your hardware can handle.

Optimizer choice between AdamW, AdamW8bit, and others mostly affects memory usage and speed. AdamW8bit uses less VRAM with minimal quality difference. Unless you're optimizing for specific edge cases, the default optimizers work fine for character LoRAs.

Most other parameters can stay at sensible defaults. The training systems have matured enough that default values work for standard use cases. You're not doing novel research, you're training a character LoRA using a process thousands of people have done before. Follow proven recipes rather than over-optimizing parameters.
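The ranges above can be collected into one record that also does the step arithmetic. This is a sketch under stated assumptions: the class and field names are invented for illustration and do not match any specific trainer's option names, and `repeats` (how many times each image is shown per epoch) is an added assumption that you can set to 1 if your trainer has no such setting.

```python
import math
from dataclasses import dataclass


@dataclass
class LoraTrainingPlan:
    num_images: int = 20
    repeats: int = 10            # assumption: per-image repeats within an epoch
    epochs: int = 15
    batch_size: int = 2
    learning_rate: float = 2e-4
    network_dim: int = 32
    network_alpha: int = 32

    def steps_per_epoch(self):
        return math.ceil(self.num_images * self.repeats / self.batch_size)

    def total_steps(self):
        return self.steps_per_epoch() * self.epochs

    def warnings(self):
        """Flag values outside the ranges suggested in this article."""
        notes = []
        if not 15 <= self.num_images <= 30:
            notes.append("dataset size outside 15-30 images")
        if not 1e-4 <= self.learning_rate <= 5e-4:
            notes.append("learning rate outside 0.0001-0.0005")
        if not 10 <= self.epochs <= 20:
            notes.append("epochs outside 10-20")
        if not 1 <= self.batch_size <= 4:
            notes.append("batch size outside 1-4")
        return notes


plan = LoraTrainingPlan()
print("steps per epoch:", plan.steps_per_epoch())
print("total steps:", plan.total_steps())
print("warnings:", plan.warnings())
```

With the defaults shown, 20 images at 10 repeats and batch size 2 gives 100 steps per epoch and 1,500 steps over 15 epochs. Writing the plan down before training makes it easier to tell later which change actually affected the result.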

Preview your training progress. Good training tools generate sample images every few epochs so you can see the LoRA developing. If previews show strong character features appearing consistently by epoch 10-12, you're on track. If epoch 20 still looks vague, something in your dataset or parameters needs adjustment.

Common Consistency Failures and Real Fixes

Even with proper technique, things go wrong. Here's what actually breaks and how to fix it without guessing.

Character features drift between generations despite LoRA. Your LoRA weight is probably too low. LoRAs default to strength 1.0, and character LoRAs can often be pushed to around 1.2 or 1.3 for stronger influence. Raise it gradually, because higher strengths tend to introduce artifacts. Alternatively, your base prompts aren't reinforcing the character features enough. Add heavily weighted tags for distinctive characteristics.

Pose variation breaks character consistency. IPAdapter strength is too high, it's affecting identity along with pose. Lower it to 0.4-0.5 range. Or your reference pose images show different characters with varying features, confusing the system. Use neutral references that don't have strong facial features, or use pose-only ControlNet like OpenPose instead of IPAdapter.

LoRA produces the same pose repeatedly. You overtrained on too-similar reference images. The LoRA memorized compositions along with character identity. Retrain with more varied reference poses, or reduce training epochs to stop before memorization sets in. A short-term fix is to lower LoRA strength and prompt more strongly for varied poses.

Character looks fine in some angles but wrong in others. Training dataset lacked variety in angles. If you only trained on frontal views, three-quarter and profile generations will struggle. Retrain including the missing angles, or accept that you need to prompt more carefully and cherry-pick more for those angles. Alternatively, use IPAdapter with reference images of the missing angles to guide generations.

Details like exact outfit or accessories vary when they shouldn't. These details aren't being picked up by the LoRA because they're not consistent enough across training images, or your prompts aren't weighting them heavily enough. For outfit consistency, either include outfit details in every training image, or prompt outfit specifics with high weights like (character-specific-outfit:1.4). Accessories especially need prompt reinforcement because they're small details the model might ignore.

Character changes completely when changing settings or adding other characters. Your LoRA is weak relative to the other concepts in the generation. Increase LoRA strength. Simplify your prompts to reduce competing concepts that dilute the character focus. Generate character in simple settings first, then composite or inpaint complex backgrounds after establishing the consistent character.

The debugging approach is always to isolate variables. Generate with just the LoRA, no IPAdapter, simple prompts. Works? Add complexity one layer at a time until it breaks. That identifies what's causing the problem. Doesn't work? The issue is in your LoRA or base prompts, not the additional systems.
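The isolate-and-add approach can be written down as a ladder of configurations. This is a sketch with hypothetical names: the layer labels are just strings, and `is_consistent` stands in for your own judgment after generating a few images with each configuration.

```python
LAYERS = ("lora", "weighted_prompt", "ipadapter", "controlnet")


def isolation_ladder(layers=LAYERS):
    """Return cumulative configurations, enabling one more layer at each step."""
    return [list(layers[: i + 1]) for i in range(len(layers))]


def first_failure(ladder, is_consistent):
    """Return the layer whose addition first breaks consistency, or None."""
    for config in ladder:
        if not is_consistent(config):
            return config[-1]
    return None


ladder = isolation_ladder()
for config in ladder:
    print(" + ".join(config))

# Stand-in check for the demo: pretend results break once IPAdapter is enabled.
culprit = first_failure(ladder, lambda config: "ipadapter" not in config)
print("first layer that breaks consistency:", culprit)
```

If the very first rung (LoRA only) already fails, the function returns `"lora"`, which matches the rule that the problem is then in the LoRA or base prompts rather than the added systems.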

How Multi-Character Scenes Complicate Everything

Getting one character consistent is hard enough. Multiple consistent characters in the same scene multiplies difficulty.

Each character needs their own LoRA trained separately. You'll load multiple LoRAs simultaneously, which works but requires careful prompt structure to direct which character gets which description. Regional prompters or attention coupling techniques help by assigning different prompts to different areas of the image.

Latent couple and similar regional generation methods split the image spatially during generation. Left side gets character A's LoRA and prompts, right side gets character B's LoRA and prompts. This prevents the LoRAs from interfering with each other but requires careful planning of character positions.
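The spatial split itself is simple to plan before touching any regional tool. The sketch below only computes equal vertical strips, one per character. How those rectangles are handed to a regional prompting or latent couple extension depends on the tool, so the function name and output format are assumptions for illustration.

```python
def split_regions(width, height, characters):
    """Divide the canvas into equal vertical strips, left to right."""
    n = len(characters)
    edges = [round(i * width / n) for i in range(n + 1)]
    return [
        {"character": name, "x": edges[i], "y": 0,
         "width": edges[i + 1] - edges[i], "height": height}
        for i, name in enumerate(characters)
    ]


regions = split_regions(1024, 768, ["character_a", "character_b"])
for region in regions:
    print(region)
```

Planning regions this way makes the constraint explicit: each character owns a fixed part of the frame, which is why overlapping or touching characters need a different approach.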

Interaction between characters is where it gets truly difficult. If they're touching or overlapping, regional methods break down. You end up doing multiple passes, generating each character separately in consistent poses, then compositing or using inpainting to combine them while maintaining consistency for both.

The practical workflow for multi-character consistency often involves generating each character in the desired pose separately, using background removal or segmentation to extract them cleanly, then compositing in traditional image editing software with final inpainting passes to blend edges and add interaction details.

Professional comic or visual novel workflows basically never generate final multi-character scenes in one pass. They're doing character layers, background layers, compositing, and selective inpainting. The AI handles consistency of individual elements, human composition handles combining them coherently. Trying to force everything into single generations produces inconsistent results and endless frustration.

This is where managed services provide significant value. Platforms like Apatero.com can handle complex multi-character consistency through backend workflow orchestration that would take hours to set up manually. For commercial projects where time is money, that complexity management is worth paying for.

- Generate separately: Each character in their pose with simple background

- Segment cleanly: Use proper segmentation to extract characters without artifacts

- Composite deliberately: Combine in editing software with proper layer management

- Inpaint connections: Use AI inpainting to add shadows, contact points, interaction details after composition

- Accept the complexity: Multi-character consistency is genuinely hard, structure workflow to handle it methodically

Frequently Asked Questions

How many reference images do you actually need for a character LoRA?

For functional consistency, 15-20 varied, high-quality images work well. More than 30 rarely improves results unless you're specifically trying to teach extremely complex character designs with many distinctive elements. Quality and variety matter far more than quantity. One person reported excellent results from just 10 perfectly curated images, while another struggled with 50 similar images. The consistency within your set determines what the LoRA can learn.

Can you achieve consistency without training custom LoRAs?

For existing popular characters that already have LoRAs available, yes. For original characters, technically yes but practically it's frustrating enough that you should just train the LoRA. IPAdapter plus extremely detailed prompting can maintain rough consistency, but you'll spend more time fighting it than the 1-3 hours it takes to train a proper LoRA. The consistency ceiling without LoRA is much lower than with it.

Does LoRA training require expensive hardware?

A 12GB GPU can train anime character LoRAs, though it takes longer than higher-end cards. Budget 1-3 hours on mid-range hardware. If you don't have a suitable GPU, rental services like RunPod or Vast.ai let you rent powerful cards for a few dollars per training session. Some online services will train LoRAs for you if you provide the dataset, removing the hardware requirement entirely but adding cost per LoRA.

Why does character consistency break when changing art styles?

Style and identity are tangled in the model's learned representations. Pushing hard toward a different style (through prompts, LoRAs, or artist tags) can override character identity. The model is balancing multiple competing concepts and style tags often have strong influence. Use style LoRAs at lower strength, or train your character LoRA on examples already in your target style. IPAdapter Style can help transfer style without affecting character identity as much.

How do you maintain consistency across different models or checkpoints?

You generally don't. LoRAs are checkpoint-specific. A LoRA trained on Animagine won't work properly on Pony Diffusion. If you need to switch base models, you need to retrain your character LoRA on the new base. Some crossover sometimes works between closely related models, but results degrade. For serious work, commit to a base model for the duration of your project or maintain separate LoRAs for each model you want to use.

Can you use celebrity or existing character LoRAs as starting points?

Technically yes by training on top of an existing LoRA, but it rarely works as well as training from the base model. The existing LoRA's learned features interfere with learning your new character's features. Better to train fresh unless your character is intentionally a variation of an existing one. Then starting from that character's LoRA and training your modifications on top can work well.

How often do you need to regenerate because consistency failed anyway?

Even with perfect setup, expect 10-30% of generations to have something off that requires regeneration. Maybe the expression isn't quite right, or a detail drifted, or the pose came out awkward. This is normal. You're stacking probabilities, not guarantees. The system dramatically improves consistency from "90% fail" to "70-80% usable," not from "90% fail" to "100% perfect." Building in iteration time is part of the workflow.
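The "stacking probabilities" point can be turned into a rough budget. The sketch below assumes each generation is independently usable with a fixed probability, which is a simplification, and the function names are made up for illustration. It gives the average number of generations needed and the batch size that reaches a target number of usable images with a chosen confidence.

```python
import math


def expected_attempts(usable_needed, usable_rate):
    """Average generations needed to collect the required usable images."""
    return usable_needed / usable_rate


def attempts_for_confidence(usable_needed, usable_rate, confidence=0.95):
    """Smallest batch size n with P(at least usable_needed usable) >= confidence."""
    n = usable_needed
    while True:
        p_enough = sum(
            math.comb(n, k) * usable_rate ** k * (1 - usable_rate) ** (n - k)
            for k in range(usable_needed, n + 1)
        )
        if p_enough >= confidence:
            return n
        n += 1


needed, rate = 10, 0.75   # ten usable panels at a 75% usable rate
print(f"average generations: {expected_attempts(needed, rate):.1f}")
print("batch size for 95% confidence:", attempts_for_confidence(needed, rate))
```

The gap between the average and the high-confidence batch size is the iteration time worth building into a schedule.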

What's the best way to share characters with others who want to use them consistently?

Provide the trained LoRA file, a detailed prompt template showing how you structure character descriptions, reference images showing the character from multiple angles, and your typical negative prompt. The LoRA does most of the heavy lifting but the prompting approach matters for consistent results. Some creators package this as a "character card" with all info in one place. Specify which base model the LoRA was trained on since it won't work on others.

The Reality of Workflow Maintenance

Character consistency isn't a problem you solve once and forget. It's an ongoing practice that requires maintenance as you develop projects.

Your LoRA might need occasional retraining as you refine your character design. Generate 20 images with your current LoRA, curate the best ones that match your evolved vision, retrain incorporating these. The character can develop naturally while maintaining consistency through iterative LoRA updates.

Save everything systematically. LoRA files, training datasets, workflow templates, prompt templates, reference images. Six months into a project you'll need to generate something new, and if you've lost the specific setup that was working, you're starting over from scratch. Version control matters for creative projects just like code.

Document what works for each character. Different characters may need different LoRA strengths, IPAdapter settings, or prompting approaches even using the same workflow structure. Note which settings produce best results for each. Trying to remember months later wastes time.
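One lightweight way to document per-character settings is a small JSON record stored next to the LoRA file. This is a sketch: the field names, file names, and values are hypothetical, and the SHA-256 hash is included only so you can later confirm which LoRA file the notes refer to.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path):
    """Hash a file in chunks so large LoRA files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def character_record(name, base_model, lora_path, lora_strength,
                     ipadapter_strength, prompt_template, negative_prompt):
    """Collect the settings that worked for one character."""
    return {
        "name": name,
        "base_model": base_model,
        "lora_file": Path(lora_path).name,
        "lora_sha256": sha256_of(lora_path),
        "lora_strength": lora_strength,
        "ipadapter_strength": ipadapter_strength,
        "prompt_template": prompt_template,
        "negative_prompt": negative_prompt,
    }


# Demo with a placeholder file standing in for a trained LoRA.
with tempfile.TemporaryDirectory() as tmp:
    lora = Path(tmp) / "my_character_v2.safetensors"
    lora.write_bytes(b"placeholder weights")
    record = character_record(
        "my_character", "Pony Diffusion V6", lora, 1.1, 0.5,
        "(long blue hair with side ponytail:1.4), {pose}, {setting}",
        "multiple girls, extra limbs, deformed hands",
    )
    (Path(tmp) / "my_character.json").write_text(json.dumps(record, indent=2),
                                                 encoding="utf-8")

print(json.dumps(record, indent=2))
```

Committing these records alongside workflow templates gives you a plain-text history of what changed between LoRA versions.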

The consistency workflow becomes natural after enough practice. Initially it feels like juggling multiple complex systems. After training a few LoRAs and generating hundreds of images, it becomes second nature. Your intuition develops for when to adjust LoRA strength versus prompt weights versus IPAdapter influence. You start recognizing failure patterns and knowing immediately what to adjust.

Most successful character-driven AI projects use these techniques not because they're easy, but because nothing else works reliably enough. The alternative is accepting inconsistency or doing everything manually. The time invested in mastering consistency workflows pays back across every subsequent character-driven project.

Start simple. One character, basic workflow, master the fundamentals. Add complexity only when simpler approaches hit limits. Build your system incrementally based on actual needs rather than trying to implement everything at once. The learning curve is real but the capability it unlocks makes it worthwhile.
