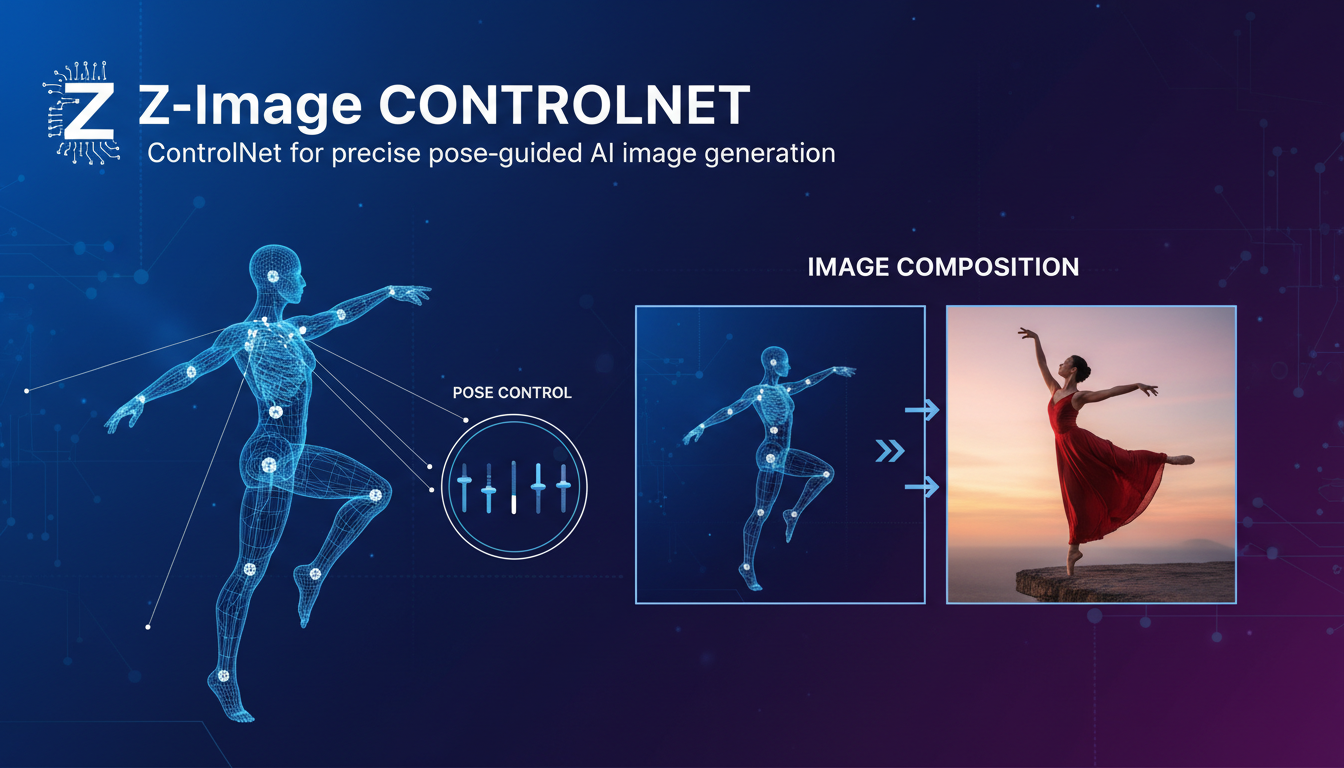

Z-Image ControlNet: Complete Guide to Pose-Guided AI Generation

Master Z-Image Turbo with ControlNet Union for precise pose control. Setup guide, optimal settings, and real workflow examples that actually work.

I spent about a week testing Z-Image ControlNet after it dropped in late 2025, and honestly, this is the control we've been waiting for. Alibaba's team trained this ControlNet specifically for Z-Image Turbo, and it shows. Pose adherence is noticeably better than anything I got from the workarounds I was using before.

Quick Answer: Z-Image-Turbo-Fun-ControlNet-Union is a purpose-built ControlNet that adds precise pose, depth, canny, HED, and MLSD control to Z-Image Turbo. It's trained on 1 million high-quality images with 10,000 training steps, and it actually follows your guidance without fighting the base model.

Key Takeaways:

- Z-Image ControlNet supports Canny, HED, Depth, Pose, and MLSD control modes

- Optimal strength is 0.8-1.0 for most use cases

- Higher control strength needs more inference steps to maintain quality

- Runs on 16GB consumer GPUs, or on 6-8GB GPUs with GGUF quantization

- Purpose-built for Z-Image, so the control feels native rather than bolted on

Why Z-Image Needed Its Own ControlNet

Here's the thing about ControlNets. The original SD 1.5 ControlNets don't transfer perfectly to newer models. SDXL ControlNets exist but have their quirks. And Z-Image uses a completely different architecture, so existing ControlNets don't work at all.

Alibaba PAI solved this by training Z-Image-Turbo-Fun-ControlNet-Union from scratch on their model. The result is control that actually feels native rather than bolted on.

In my testing, pose following improved from "kinda works sometimes" with workarounds to "consistently follows the reference" with the native solution. That consistency matters when you're doing production work.

What You Need for the Setup

Required Models

You'll need to download these models and place them in your ComfyUI folders:

Diffusion Model:

z_image_turbo_bf16.safetensors goes in ComfyUI/models/diffusion_models/

Text Encoder:

qwen_3_4b.safetensors goes in ComfyUI/models/text_encoders/

VAE:

ae.safetensors goes in ComfyUI/models/vae/

ControlNet:

Z-Image-Turbo-Fun-Controlnet-Union.safetensors goes in ComfyUI/models/model_patches/

Total download size is around 15GB. Make sure you have the space before starting.
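If a workflow can't find a model, the first thing to rule out is a file in the wrong folder. Here's a minimal Python sketch that checks the four files listed above against their expected folders. It assumes the default ComfyUI folder layout and the exact filenames given in this guide (capitalization matters on Linux); missing_models is just an illustrative helper name.

```python
from pathlib import Path

# Expected locations from the list above, relative to the ComfyUI root folder.
REQUIRED_MODELS = {
    "models/diffusion_models": "z_image_turbo_bf16.safetensors",
    "models/text_encoders": "qwen_3_4b.safetensors",
    "models/vae": "ae.safetensors",
    "models/model_patches": "Z-Image-Turbo-Fun-Controlnet-Union.safetensors",
}


def missing_models(comfyui_root: str) -> list[str]:
    """Return the relative paths of required model files that are not present."""
    root = Path(comfyui_root)
    missing = []
    for folder, filename in REQUIRED_MODELS.items():
        if not (root / folder / filename).is_file():
            missing.append(f"{folder}/{filename}")
    return missing
```

Call missing_models("/path/to/ComfyUI"); an empty list means every file is where this guide expects it.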

Hardware Requirements

On my RTX 4090, each generation takes about 5 seconds. Your mileage will vary based on resolution and settings.

The good news: Z-Image runs comfortably within 16GB of VRAM. With quantized GGUF versions, even 6-8GB GPUs can handle it. Not fast, but functional.

The Five Control Modes

Z-Image ControlNet Union supports five different control types. Here's how I use each one:

Canny Edge Detection

Best for: Preserving hard edges and outlines, architectural shots, anything with clear boundaries.

Canny extracts edges from your reference image. The ControlNet then uses these edges as structural guidance. Great when you want to maintain the exact shape of objects.

I use Canny for product shots and anything with geometric precision. Faces? Not so much. Edges don't capture facial nuance well.

HED (Holistically-Nested Edge Detection)

Best for: Softer edge detection, organic subjects, maintaining overall shape without harsh boundaries.

HED gives you smoother edges than Canny. The lines are less harsh, which works better for organic subjects like people, animals, and natural scenes.

When I need structural guidance but Canny feels too rigid, HED is my go-to. It's more forgiving while still maintaining composition.

Depth Maps

Best for: Spatial relationships, layered compositions, proper foreground/background separation.

Depth control is underrated. It tells the model what's close and what's far, which dramatically improves multi-layer compositions.

I use depth when I have complex scenes with multiple subjects at different distances. Without it, the model tends to flatten everything into one plane.

Pose Control

Best for: Human figures, specific body positions, action shots.

This is probably what most people want from ControlNet. Upload a pose reference, get output with matching body position.

The DWPose integration extracts skeletal structure from reference images or videos. You can also use OpenPose keypoints if you have them from other sources.
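If your keypoints come from another tool, it's worth inspecting them before turning them into a pose image. OpenPose's JSON output stores a people array, and each person's pose_keypoints_2d field is a flat list of x, y, confidence triples. The sketch below parses that layout with only the standard library; the function name and the 0.1 confidence cutoff are illustrative choices, not values from Z-Image or DWPose.

```python
import json


def load_openpose_people(json_text: str, min_confidence: float = 0.1):
    """Parse OpenPose-style JSON into per-person lists of (x, y) body keypoints.

    Keypoints below min_confidence become None, so list positions stay aligned
    with the skeleton layout the file uses.
    """
    data = json.loads(json_text)
    people = []
    for person in data.get("people", []):
        flat = person.get("pose_keypoints_2d", [])
        points = []
        # Walk the flat list three values at a time: x, y, confidence.
        for i in range(0, len(flat) - 2, 3):
            x, y, c = flat[i], flat[i + 1], flat[i + 2]
            points.append((x, y) if c >= min_confidence else None)
        people.append(points)
    return people
```

A confidence of 0 usually means the joint wasn't detected, which is why low-confidence points are kept as placeholders instead of being dropped.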


Pose control finally works reliably with Z-Image. Previous workarounds were janky. This feels native.

MLSD (Line Segment Detection)

Best for: Architecture, interiors, anything with straight lines.

MLSD detects straight line segments. Excellent for buildings, rooms, and manufactured objects where you need parallel lines to stay parallel.

Honestly, I don't use this one much. But when you need it, you really need it. Architecture visualization without MLSD tends to have wonky perspectives.

Optimal Settings I've Found

After extensive testing, here are my recommended settings:

ControlNet Strength: 0.8-1.0

- Start at 0.8 for most uses

- Push to 1.0 when you need strict adherence

- Below 0.7, control becomes suggestions rather than guidance

Inference Steps:

- 8 steps at 0.8 control strength

- 12-15 steps at 0.9 control strength

- 15-20 steps at 1.0 control strength
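The step guidance above is easy to encode so you don't have to remember it. This is a small sketch of that table as a lookup. The function name is hypothetical, and strengths that fall between the listed values are rounded up to the next band, on the assumption that a few extra steps cost less than a degraded render.

```python
def recommended_steps(strength: float) -> tuple[int, int]:
    """Map ControlNet strength to a (min, max) inference-step range.

    The bands mirror the rule-of-thumb list above; they are not limits
    enforced by the model or by ComfyUI.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength is expected in [0.0, 1.0]")
    if strength <= 0.8:
        return (8, 8)
    if strength <= 0.9:
        return (12, 15)
    return (15, 20)
```

For example, recommended_steps(0.85) returns (12, 15): anything above 0.8 gets the next band up.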

CFG Scale: 7-8 for realistic output

Control Start/End:

- Start: 0.0 (apply from beginning)

- End: 0.8-1.0 (let final steps refine)
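To see what Start/End means in practice, it helps to translate the fractions into sampling steps. The sketch below uses a simple linear mapping (step i begins at the fraction i/total of the run). That is an assumption for illustration: samplers such as ComfyUI's typically map these percentages onto the noise schedule, so the exact cutoff step can differ. controlled_steps is a hypothetical helper name.

```python
def controlled_steps(total_steps: int, start: float = 0.0, end: float = 1.0) -> list[int]:
    """Return the 0-based step indices where ControlNet guidance is active.

    Linear approximation: a step is controlled when the fraction of the run
    at which it begins (i / total_steps) lies in [start, end).
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0.0 <= start <= end <= 1.0:
        raise ValueError("expected 0.0 <= start <= end <= 1.0")
    return [i for i in range(total_steps) if start <= i / total_steps < end]
```

Under this approximation, 8 steps with End at 0.8 guides steps 0 through 6 and leaves the last step free; with 20 steps, the final four steps are left to refine detail.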

The key insight I discovered through trial and error: Higher control strength requires more steps. The ControlNet isn't distilled, so it needs time to integrate guidance with quality. Rushing it gives you accurate but ugly output.

Real Workflow Example

Let me walk through an actual workflow I use for character consistency with pose variation.

Goal: Same character in different poses


Setup:

- Load reference image of your character

- Load pose reference (different pose)

- Extract pose from pose reference using DWPose

- Apply Z-Image ControlNet with pose control

- Use IPAdapter for face/identity consistency

- Generate

The Node Flow:

- Load Image (character reference) → IPAdapter

- Load Image (pose reference) → DWPose Preprocessor → ControlNet Apply

- Text Prompt → CLIP Text Encode

- All feeds into KSampler
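The node flow is a small directed acyclic graph: each node runs after the nodes it takes input from. This sketch models exactly the connections listed above using descriptive labels (they are not ComfyUI's internal node class names) and uses Python's graphlib to produce one valid execution order. It's a quick way to check that every input KSampler needs is produced upstream of it.

```python
from graphlib import TopologicalSorter

# Descriptive labels for the node flow above (NOT ComfyUI class names).
# Each key maps to the set of nodes whose outputs it consumes.
NODE_FLOW = {
    "Load Image (character reference)": set(),
    "Load Image (pose reference)": set(),
    "Text Prompt": set(),
    "IPAdapter": {"Load Image (character reference)"},
    "DWPose Preprocessor": {"Load Image (pose reference)"},
    "ControlNet Apply": {"DWPose Preprocessor"},
    "CLIP Text Encode": {"Text Prompt"},
    "KSampler": {"IPAdapter", "ControlNet Apply", "CLIP Text Encode"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid run order (dependencies first); raises on cycles."""
    return list(TopologicalSorter(graph).static_order())


for position, node in enumerate(execution_order(NODE_FLOW), start=1):
    print(f"{position}. {node}")
```

Because every other node is upstream of KSampler, it always comes out last; the order among independent branches can vary.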

This gives you the character identity from one image with the pose from another. Before Z-Image ControlNet, this required ugly workarounds. Now it just works.

Combining Multiple Control Types

You can stack multiple control types in the same generation. The Union model handles this internally.

Example: Pose + Depth. Use pose for body position and depth for spatial relationships when you have multiple characters or foreground/background elements.

Example: Canny + Pose. Use Canny for environment edges and pose for character position. Good for placing characters in specific settings.

The combined control strength should still total around 0.8-1.0. I typically weight each component lower when combining. So 0.5 pose + 0.4 depth instead of 1.0 each.
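The weighting rule above can be written as a helper that scales relative priorities to a target total. This is a sketch of this section's rule of thumb, not a constraint the Union model enforces; split_strength and its 0.9 default total are illustrative.

```python
def split_strength(priorities: dict[str, float], total: float = 0.9) -> dict[str, float]:
    """Scale relative priorities so per-control strengths sum to `total`."""
    if any(w < 0 for w in priorities.values()):
        raise ValueError("priorities must be non-negative")
    weight_sum = sum(priorities.values())
    if weight_sum <= 0:
        raise ValueError("priorities must sum to a positive number")
    return {name: round(total * w / weight_sum, 3) for name, w in priorities.items()}
```

split_strength({"pose": 5, "depth": 4}) reproduces the 0.5 pose + 0.4 depth split, and equal priorities at total=0.8 give 0.4 each.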

How This Compares to Other ControlNets

I've used ControlNets across SD 1.5, SDXL, and now Z-Image. Here's my honest assessment:

SD 1.5 ControlNets: Most developed ecosystem, great model variety, but limited to the capabilities of SD 1.5 itself.

SDXL ControlNets: Better base model, but ControlNets often feel like afterthoughts. Quality varies significantly between different releases.

Z-Image ControlNet: Purpose-built integration. The control feels native because it was trained specifically for this model. Fewer options than SD 1.5 ecosystem, but what exists works really well.


If you're committed to Z-Image as your base model, its native ControlNet is the right choice. Don't try to adapt other ControlNets.

Using Z-Image ControlNet on Apatero

For those using Apatero.com, Z-Image ControlNet workflows are available pre-built. The models are already loaded, the nodes are connected, you just provide your images and prompts.

I helped set these up specifically because the local installation process is a pain. Not everyone wants to download 15GB of models and configure node connections. If you want to skip the setup, it's there.

Troubleshooting Common Issues

Issue: Output ignores ControlNet completely

Usually means the ControlNet model isn't loading properly. Check that the safetensors file is in the correct model_patches folder and that your ComfyUI is updated.
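One cause of a model that fails to load is an interrupted download. A .safetensors file begins with an 8-byte little-endian integer giving the length of a JSON header, and that header lists each tensor's byte offsets, so you can check whether the file is complete without loading any weights. The sketch below performs only that integrity check. It can't tell you whether ComfyUI accepts the model, and check_safetensors is a hypothetical helper name.

```python
import json
import struct
from pathlib import Path


def check_safetensors(path: str) -> int:
    """Sanity-check a .safetensors file; return the number of tensors declared.

    Raises ValueError if the header is unreadable or the file is shorter than
    the header says it should be (typical of a truncated download).
    """
    p = Path(path)
    size = p.stat().st_size
    with p.open("rb") as f:
        raw_len = f.read(8)
        if len(raw_len) != 8:
            raise ValueError("file too small to be a safetensors file")
        (header_len,) = struct.unpack("<Q", raw_len)  # little-endian u64
        if 8 + header_len > size:
            raise ValueError("header length exceeds file size")
        try:
            header = json.loads(f.read(header_len))
        except (UnicodeDecodeError, json.JSONDecodeError) as exc:
            raise ValueError(f"unreadable header: {exc}") from exc
    tensors = {k: v for k, v in header.items() if k != "__metadata__"}
    # data_offsets are relative to the start of the data section.
    data_end = max((v["data_offsets"][1] for v in tensors.values()), default=0)
    if 8 + header_len + data_end > size:
        raise ValueError("file is truncated: tensor data extends past end of file")
    return len(tensors)
```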

Issue: Output follows pose but looks terrible

Increase inference steps. At higher control strengths, the model needs more steps to balance control with quality. Try 15-20 steps instead of 8.

Issue: Pose detection failing

Make sure DWPose dependencies are installed. The preprocessor needs additional libraries for pose extraction. Check ComfyUI Manager for missing requirements.

Issue: VRAM overflow

Try GGUF quantized versions of the models; they reduce VRAM usage significantly at a minor quality cost. Or reduce resolution while iterating and upscale the finals.

Issue: Inconsistent results between runs

Lock your seed. Random seeds give different outputs. For production work, find a good seed and stick with it while adjusting other parameters.
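A simple way to follow that advice is to generate your parameter variations up front with the seed held constant. This sketch only builds the run list. The seed value is arbitrary, and how you feed each config into your workflow depends on your tooling.

```python
from itertools import product


def seed_locked_sweep(seed: int, strengths, step_counts) -> list[dict]:
    """Build run configs that vary strength and steps while the seed stays fixed."""
    return [
        {"seed": seed, "strength": s, "steps": n}
        for s, n in product(strengths, step_counts)
    ]


runs = seed_locked_sweep(123456, strengths=[0.8, 0.9, 1.0], step_counts=[8, 15])
for run in runs:
    print(run)
```

Since the seed never changes, any difference between outputs comes from the parameter you varied.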

Performance Optimization Tips

For faster iteration:

- Use 8 steps during exploration

- Work at 512px or 768px resolution

- Increase quality settings only for final renders

For quality finals:

- Use 15-20 steps minimum

- Full resolution (1024px+)

- Consider upscaling after generation

For lower VRAM:

- GGUF quantized models

- Lower resolution

- Single control type instead of combinations
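If you switch between these modes often, it can help to keep them as named presets. The sketch below encodes the tips above as plain data. The field names are hypothetical, 15 steps is the low end of the 15-20 range, and the 512px cap in the low-VRAM variant is an assumption based on the 512px low end of the range Z-Image Turbo handles well.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RenderPreset:
    steps: int               # sampling steps
    resolution: int          # working resolution in pixels
    upscale_after: bool      # upscale the output in a separate pass
    combine_controls: bool   # allow stacking several control types
    use_gguf: bool           # load GGUF-quantized model files


# Starting points taken from the tips above, not hard limits.
PRESETS = {
    "explore": RenderPreset(steps=8, resolution=768, upscale_after=False,
                            combine_controls=True, use_gguf=False),
    "final": RenderPreset(steps=15, resolution=1024, upscale_after=True,
                          combine_controls=True, use_gguf=False),
}


def low_vram(preset: RenderPreset) -> RenderPreset:
    """Apply the low-VRAM tips on top of any preset (returns a new object)."""
    return replace(preset, resolution=min(preset.resolution, 512),
                   combine_controls=False, use_gguf=True)
```

Treating low VRAM as a modifier rather than a third preset keeps the step count tied to your goal, since steps mostly cost time rather than memory.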

Frequently Asked Questions

Can I use Z-Image ControlNet with other base models?

No, it's specifically trained for Z-Image Turbo. It won't work with SD 1.5, SDXL, Flux, or other models. Use ControlNets made for those specific models.

How does this compare to IPAdapter for pose control?

Different tools for different purposes. IPAdapter transfers style and identity. ControlNet controls structure and pose. For best results, use both together.

Can I extract poses from video?

Yes, you can process video frame-by-frame to extract pose sequences, then apply them to generate consistent animated output. The DWPose preprocessor works on video frames.
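The frame-by-frame loop itself is simple. In this sketch, extract_pose is a placeholder for whatever DWPose node or library call you actually use, and frames can come from any video decoder. The stride parameter samples every Nth frame, and keeping the original frame index makes dropped detections visible.

```python
from typing import Callable, Iterable, List, Optional, Tuple, TypeVar

F = TypeVar("F")  # frame type, e.g. a decoded image array
P = TypeVar("P")  # pose type, e.g. a list of keypoints


def extract_pose_sequence(
    frames: Iterable[F],
    extract_pose: Callable[[F], Optional[P]],
    stride: int = 1,
) -> List[Tuple[int, P]]:
    """Run a pose extractor on every `stride`-th frame, keeping frame indices.

    Frames where the extractor finds no pose (returns None) are skipped.
    """
    if stride < 1:
        raise ValueError("stride must be >= 1")
    sequence = []
    for index, frame in enumerate(frames):
        if index % stride:
            continue
        pose = extract_pose(frame)
        if pose is not None:
            sequence.append((index, pose))
    return sequence
```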

What resolution works best?

Z-Image Turbo handles 512px to 1024px well. Higher resolutions need more VRAM and time. I typically work at 768px and upscale finals.

Is the ControlNet model large?

The ControlNet patch itself is modest. The text encoder (qwen_3_4b) is the big one at around 8GB. Total setup is about 15GB for everything.
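To sanity-check file sizes, multiply parameter count by bytes per parameter. Assuming the "4b" in qwen_3_4b means roughly 4 billion parameters, bf16's 2 bytes per parameter gives the ~8GB quoted above. The GGUF entries use the block layouts of Q8_0 (8.5 bits per weight) and Q4_0 (4.5 bits per weight). These figures estimate weight storage only; VRAM use during generation will be higher.

```python
# Bytes of storage per parameter. The GGUF values include the per-block
# fp16 scale: Q8_0 packs 32 weights in 34 bytes, Q4_0 packs 32 in 18 bytes.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "gguf_q8_0": 34 / 32,
    "gguf_q4_0": 18 / 32,
}


def weights_size_gb(num_params: float, fmt: str = "bf16") -> float:
    """Estimate raw weight size in decimal gigabytes (params x bytes/param)."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


for fmt in BYTES_PER_PARAM:
    print(f"4B params as {fmt}: {weights_size_gb(4e9, fmt):.2f} GB")
```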

Why does high control strength need more steps?

The ControlNet isn't distilled like the base model. More guidance means more computational work to integrate control with quality. It's a trade-off you manage with step count.

Final Thoughts

Z-Image ControlNet changed how I work with this model. Before, I was either avoiding structured compositions or using awkward multi-step workarounds. Now I can just describe what I want, provide a pose or edge reference, and get output that actually matches.

The setup is a bit involved if you're doing local installation. But once it's running, the control is excellent. Purpose-built solutions beat adapted ones every time.

If you're serious about Z-Image for production work, the ControlNet is non-negotiable. The structural control it provides is the difference between hoping your composition works and knowing it will.

Related guides: Z-Image Turbo Complete Guide, IPAdapter Face Consistency, WAN SCAIL Character Animation
