Video ControlNet Stacking: Advanced Depth + Pose + Edge Control Guide

Master multi-ControlNet workflows for video generation. Learn to stack depth, pose, and edge controls for character consistency and scene stability in ComfyUI.

You've generated a video with a single ControlNet and watched your character morph into different people halfway through. Or the scene wobbles and shifts even though your depth map stayed perfectly consistent. Single ControlNet workflows work great for still images, but video generation demands more precision and stability.

Quick Answer: ControlNet stacking combines multiple control types (depth, pose, edge) in a single workflow to maintain character consistency and scene stability across video frames. By balancing control strengths between 0.4-0.9 per ControlNet, you achieve temporal consistency that single controls cannot provide, though this requires 12-24GB VRAM depending on resolution and frame count.

- Stacking 2-3 ControlNets provides character consistency and scene stability that single controls miss

- Depth + Pose combination works best for character-focused videos like dance and action scenes

- Adding Edge/Canny control reduces scene drift and maintains structural integrity across frames

- Each ControlNet needs individual strength balancing between 0.4-0.9 to avoid conflicts

- Multi-ControlNet workflows require 12-24GB VRAM but deliver professional temporal consistency

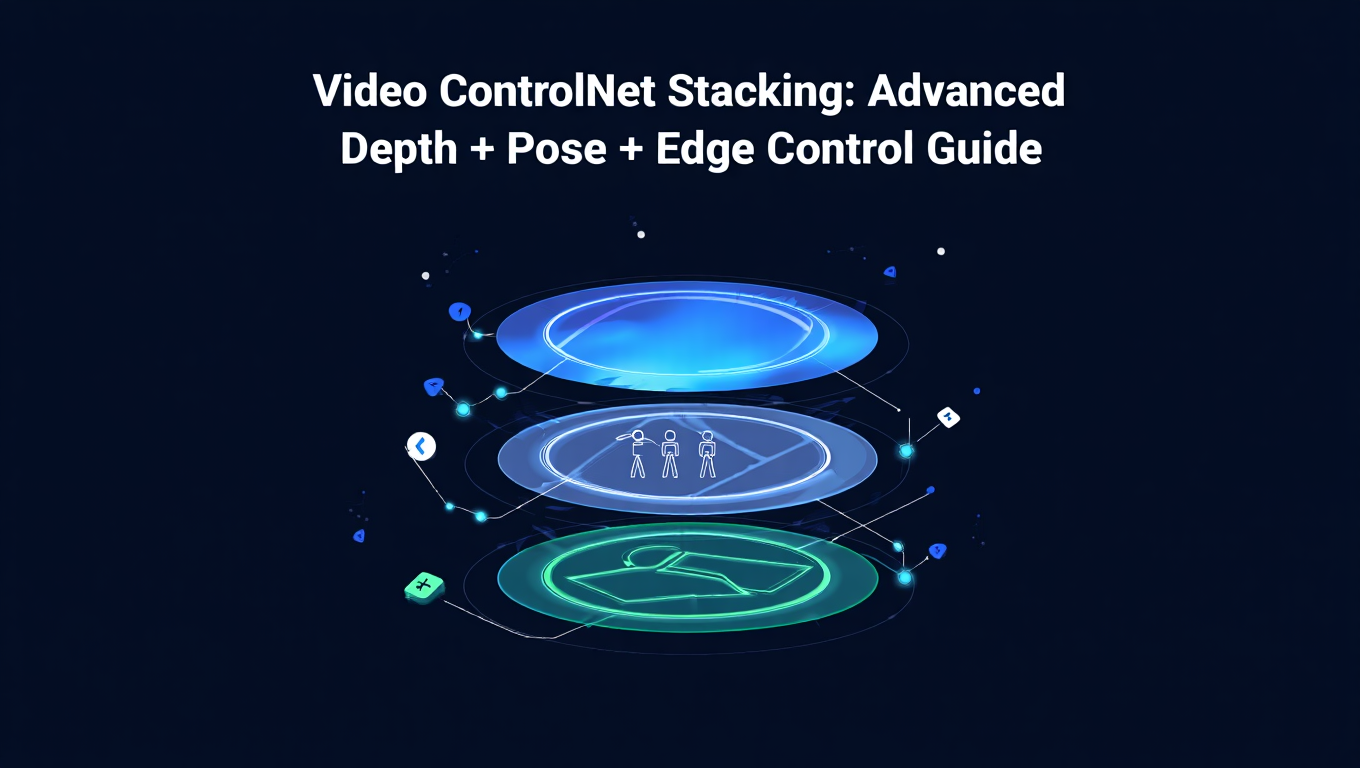

What Is ControlNet Stacking for Video Generation?

ControlNet stacking means running multiple ControlNet models simultaneously on the same generation process. Each ControlNet analyzes a different aspect of your reference video. Depth controls spatial relationships, pose maintains character skeleton structure, and edge detection preserves sharp boundaries and scene composition.

Think of it like layering transparent overlays in a video editor. Each layer constrains the AI differently. Depth prevents the background from suddenly moving closer or further away. Pose keeps your character's body proportions consistent. Edge ensures walls stay walls and doorframes don't randomly curve.

The magic happens when these controls work together. A character performing a backflip needs pose to track the body movement, depth to maintain distance from the ground, and edge to keep background elements from warping. One ControlNet alone can't handle all three constraints simultaneously.

While platforms like Apatero.com offer instant video generation without complex multi-ControlNet setup, understanding stacking gives you maximum creative control when you need frame-perfect consistency.

Why Single ControlNet Often Isn't Enough

Single ControlNet workflows fail video generation in predictable ways. A depth-only approach maintains scene geometry but lets characters morph. The AI knows how far away things are, but it doesn't understand that the person at 5 feet should stay the same person.

Pose-only control creates the opposite problem. Your character's skeleton stays consistent, but the scene around them warps and drifts. Backgrounds shift between frames even when the camera stays locked. Objects change size and position because nothing constrains spatial relationships.

Edge detection alone preserves sharp boundaries but misses depth information entirely. Flat surfaces look stable, but any 3D movement creates artifacts. A character walking toward the camera might maintain their outline but grow and shrink unpredictably because edge maps don't encode distance.

The real issue is temporal consistency across 60+ frames. Still image generation handles single ControlNet well because there's no "previous frame" to compare against. Video generation amplifies every inconsistency. A 2% variation per frame compounds into complete scene breakdown by frame 30.
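To put a number on that compounding, here is a minimal sketch. It assumes, purely for illustration, that the 2% variation is the same on every frame and compounds multiplicatively; real drift is less regular, and `compounded_drift` is a hypothetical helper name.

```python
def compounded_drift(per_frame_variation: float, frames: int) -> float:
    """Cumulative deviation from frame 0 (as a fraction) after `frames` steps.

    Illustrative model only: every frame deviates from the previous one by
    the same relative amount, and the deviations compound multiplicatively.
    """
    return (1.0 + per_frame_variation) ** frames - 1.0


for frame in (10, 20, 30, 60):
    drift = compounded_drift(0.02, frame)
    print(f"frame {frame:>2}: {drift:.0%} cumulative deviation")
```

Under this simplified model, a 2% step per frame adds up to roughly an 80% deviation by frame 30, which is why small inconsistencies that are invisible in a single image become obvious in video.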

How Do You Combine Depth + Pose for Character Consistency?

The Depth + Pose combination forms the foundation of character-focused video workflows. This pairing solves the two biggest consistency problems simultaneously - maintaining character identity and preserving spatial relationships.

Start with depth as your primary control at strength 0.7 to 0.8. This establishes the scene's spatial structure and keeps backgrounds stable. Process your reference video through a depth estimation model like MiDaS or ZoeDepth to generate grayscale depth maps for every frame.

Add pose control at strength 0.6 to 0.7 as your secondary constraint. Use OpenPose or DWPose to extract skeleton data from your reference footage. The pose model tracks 18-25 body keypoints per frame, ensuring the character's proportions and posture remain consistent.

The strength balance matters enormously. If depth overpowers pose (0.9 depth, 0.4 pose), the scene stays stable but characters can still morph. If pose dominates (0.4 depth, 0.9 pose), character consistency improves but backgrounds wobble. The sweet spot typically lands around 0.75 depth and 0.65 pose for dance videos and action sequences.

Test your strength values on a 3-5 second clip before rendering the full sequence. Generate the same short segment three times with different strength combinations. Compare frame 1 to frame 90 in each version. The combination that maintains both character features and background stability wins.

Adding Edge/Canny for Scene Stability

Edge detection provides the third layer of constraint that transforms good videos into professional results. Canny edge detection and similar methods create high-contrast maps of every sharp boundary in your scene, from doorframes to facial features.

Add Canny control at strength 0.4 to 0.6 after establishing your Depth + Pose foundation. This lower strength prevents edge maps from overpowering the other controls while still providing structural reinforcement. Edge detection excels at preventing scene drift, those subtle shifts where walls gradually move or furniture slowly changes position.

The processing pipeline for edge maps differs from depth and pose. Run your reference video through a Canny edge detector with threshold values between 100-200 for the lower bound and 200-300 for the upper bound. Lower thresholds capture more subtle edges, higher thresholds focus on major structural elements.

Edge control particularly shines in architectural scenes and environments with strong geometric elements. A character walking through a building benefits from edges that lock windows, walls, and floors in place while depth and pose handle the character's movement. Without edge control, you'll notice backgrounds gradually warping even when depth maps look perfect.

The triple combination creates redundant constraints that catch errors. If depth temporarily loses track of a surface, edge detection maintains the boundary. If pose misses a hand position, depth and edge together prevent the hand from generating in impossible locations. This redundancy is why stacked workflows outperform single ControlNet approaches.

What Are the VRAM Requirements for Multi-ControlNet Workflows?

Multi-ControlNet video generation demands significantly more memory than single control workflows. Each additional ControlNet model loads into VRAM along with the base video generation model, preprocessors, and frame buffers.

Minimum VRAM requirements by configuration:

- 2 ControlNets (Depth + Pose): 12-16GB VRAM for 512x512 at 60 frames

- 3 ControlNets (Depth + Pose + Edge): 16-20GB VRAM for 512x512 at 60 frames

- 3 ControlNets at 768x768: 20-24GB VRAM for 60 frames

- 3 ControlNets at 1024x1024: 24GB+ VRAM, may require offloading

VRAM use grows with pixel count, so it scales roughly quadratically with frame side length, not linearly. Doubling resolution from 512 to 1024 quadruples the pixel count and roughly quadruples the resolution-dependent memory. Frame count scales more gently when frames process sequentially, but batch processing multiple frames simultaneously multiplies memory needs.
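The resolution arithmetic can be checked with a short sketch. It only counts pixels for square frames. Model weights take the same space at any resolution, so total VRAM grows more slowly than this factor suggests. The function name is a hypothetical helper.

```python
def pixel_scale_factor(old_side: int, new_side: int) -> float:
    """How many times more pixels a square frame has after resizing.

    Only the resolution-dependent part of memory (latents, activations,
    frame buffers) is expected to follow this factor; model weights do not.
    """
    return (new_side / old_side) ** 2


for side in (768, 1024):
    factor = pixel_scale_factor(512, side)
    print(f"512 -> {side}: {factor:.2f}x the pixels")
```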

If you're working with 12GB or less, stick to two ControlNets maximum at 512x512 resolution. Enable model offloading in ComfyUI to move models between VRAM and system RAM between processing steps. This slows generation by 40-60% but makes multi-ControlNet feasible on consumer hardware.

For 16-20GB setups, you can comfortably run three ControlNets at 512-768 resolution. This sweet spot handles most professional video work without constant memory management.

The 24GB threshold enables 1024x1024 resolution with full three-ControlNet stacks. Professional workflows targeting high-resolution output need this tier. Consider that Apatero.com handles these memory optimizations automatically, delivering high-quality results without manual VRAM management.
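The tiers above can be written as a small lookup. This is a sketch of the article's guidance, not a measurement. `StackPlan` and `plan_for_vram` are hypothetical names, and the handling of cards between 12GB and 16GB (two ControlNets, no forced offloading) is an assumption, since the text does not cover that range explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StackPlan:
    controlnets: int      # how many ControlNets to stack
    max_resolution: int   # square side length in pixels
    offload: bool         # whether to enable model offloading


def plan_for_vram(vram_gb: float) -> StackPlan:
    """Map available VRAM to the configuration suggested in the text."""
    if vram_gb >= 24:
        return StackPlan(controlnets=3, max_resolution=1024, offload=False)
    if vram_gb >= 16:
        return StackPlan(controlnets=3, max_resolution=768, offload=False)
    # 12GB or less: two ControlNets at 512x512 with offloading enabled.
    # Between 12GB and 16GB (assumption): same stack, offloading optional.
    return StackPlan(controlnets=2, max_resolution=512, offload=vram_gb <= 12)


for gb in (10, 12, 16, 24):
    print(gb, plan_for_vram(gb))
```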

Step-by-Step ComfyUI Workflow Setup

Building a multi-ControlNet video workflow in ComfyUI requires careful node arrangement and connection management. This workflow assumes you have a reference video already processed into individual frames.

Phase 1 - Load Reference Data

Create three separate Load Image Batch nodes, one for each control type. Point the first to your depth map folder, the second to pose skeleton images, and the third to edge detection maps. Set the batch size to match your total frame count, typically 60-120 frames for test runs.

Add a Load Checkpoint node with your preferred video generation model. AnimateDiff, Stable Video Diffusion, or video-specific fine-tunes work well. Connect this to your main KSampler node that will handle the actual generation.

Phase 2 - ControlNet Configuration

Add three Apply ControlNet nodes. Label them clearly as Depth Control, Pose Control, and Edge Control to avoid confusion in complex workflows. Each node needs two critical connections - the control images from your batch loaders and the conditioning from your prompts.

Set initial strength values as starting points. Depth gets 0.75, Pose gets 0.65, Edge gets 0.50. Set start_percent to 0.0 and end_percent to 1.0 for all three, meaning they influence the entire generation process from first step to last.

Connect the ControlNet nodes in series. The first ControlNet's output conditioning connects to the second ControlNet's input conditioning, then the second connects to the third. This chains the controls together so all three influence the final generation simultaneously.
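The series connection can be sketched as data, using the style of ComfyUI's API-format workflows (a dictionary of nodes whose inputs reference other nodes as [node_id, output_index]). This sketch assumes the advanced apply node, `ControlNetApplyAdvanced`, since that is the variant with start_percent and end_percent. All node ids such as "positive_prompt" and "depth_frames" are hypothetical placeholders, and input names should be checked against your installed ComfyUI version.

```python
def chain_controlnets(positive, negative, controls):
    """Build apply-ControlNet nodes wired in series.

    positive / negative: [node_id, output_index] links to prompt conditioning.
    controls: dicts with keys name, control_net, image, strength.
    Returns (nodes, positive_link, negative_link); the links point at the
    last node in the chain and would feed the sampler.
    """
    nodes = {}
    for control in controls:
        node_id = f"apply_{control['name']}"  # hypothetical id scheme
        nodes[node_id] = {
            "class_type": "ControlNetApplyAdvanced",
            "inputs": {
                "positive": positive,
                "negative": negative,
                "control_net": control["control_net"],
                "image": control["image"],
                "strength": control["strength"],
                "start_percent": 0.0,
                "end_percent": 1.0,
            },
        }
        # The next ControlNet consumes this node's conditioning outputs.
        positive = [node_id, 0]
        negative = [node_id, 1]
    return nodes, positive, negative


controls = [
    {"name": "depth", "control_net": ["depth_model", 0],
     "image": ["depth_frames", 0], "strength": 0.75},
    {"name": "pose", "control_net": ["pose_model", 0],
     "image": ["pose_frames", 0], "strength": 0.65},
    {"name": "edge", "control_net": ["edge_model", 0],
     "image": ["edge_frames", 0], "strength": 0.50},
]
nodes, final_positive, final_negative = chain_controlnets(
    ["positive_prompt", 0], ["negative_prompt", 0], controls
)
print(final_positive)
```

Each node takes the previous node's conditioning as input, so the final link carries the influence of all three controls.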

Phase 3 - Sampling and Output

Wire the final ControlNet output to your KSampler's positive conditioning input. Set steps to 20-30 for video work, CFG scale between 7-9 for good prompt adherence without over-constraining. Use DPM++ 2M Karras or Euler A samplers, which handle multi-conditioning well.

Add a VHS Video Combine node at the end to compile your generated frames back into a video file. Set your target framerate, typically 24 or 30 FPS. Connect the KSampler output through any VAE Decode nodes to the video combiner.

Run a test generation on frames 0-30 only (about 1 second of video). Check temporal consistency by scrubbing back and forth. If you see character morphing, increase pose strength by 0.1. If backgrounds drift, increase depth strength. If edges look too rigid and artificial, decrease edge strength.

Control Strength Balancing Between Multiple ControlNets

Finding the right strength balance separates amateur results from professional output. Each ControlNet competes for influence over the generation process, and too much strength on any single control creates artifacts.

Strength interaction patterns you'll encounter:

When depth strength exceeds 0.85 combined with pose above 0.7, you'll see "frozen" characters that move rigidly like puppets. The AI over-constrains motion, sacrificing natural movement for perfect consistency. Reduce one control by 0.1-0.15 to restore fluidity.

Setting all three controls above 0.7 simultaneously typically breaks generation. You'll get either nearly identical copies of your reference video with minimal AI interpretation, or the competing constraints create visual glitches as the model struggles to satisfy all requirements. Keep your total combined strength at or below roughly 2.0 for best results.

Edge control at 0.7+ often creates harsh, over-sharpened output that looks posterized. Edges should reinforce structure subtly, not dominate the aesthetic. If your generated video looks like it has a heavy edge detection filter applied, you've pushed edge strength too high.

Recommended strength ranges by use case:

For dance videos and choreography, run depth at 0.70, pose at 0.75, edge at 0.45. Pose takes priority because maintaining character consistency during complex movement matters most.

Action scenes and sports footage work best with depth at 0.80, pose at 0.60, edge at 0.50. Depth prevents spatial inconsistencies during rapid camera movement.

Talking head and interview footage needs depth at 0.75, pose at 0.70, edge at 0.55. The balanced approach handles subtle facial movements while keeping backgrounds perfectly stable.

Architectural walkthroughs benefit from depth at 0.85, pose at 0.50, edge at 0.60. Environment stability takes priority over character consistency.
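The presets and guidelines in this section can be collected into one small check. This is a sketch: `PRESETS` and `check_strengths` are hypothetical names, and treating the 2.0 budget as inclusive is an assumption made because the talking head preset sums to exactly 2.0.

```python
PRESETS = {
    "dance": {"depth": 0.70, "pose": 0.75, "edge": 0.45},
    "action": {"depth": 0.80, "pose": 0.60, "edge": 0.50},
    "talking_head": {"depth": 0.75, "pose": 0.70, "edge": 0.55},
    "architecture": {"depth": 0.85, "pose": 0.50, "edge": 0.60},
}


def check_strengths(strengths, budget=2.0, low=0.4, high=0.9):
    """Return a list of warnings; an empty list means within the guidelines."""
    warnings = []
    for name, value in strengths.items():
        if not low <= value <= high:
            warnings.append(f"{name}={value} is outside {low}-{high}")
    total = sum(strengths.values())
    # Small tolerance so float rounding does not flag a total of exactly 2.0.
    if total > budget + 1e-9:
        warnings.append(f"combined strength {total:.2f} exceeds {budget}")
    if len(strengths) >= 3 and all(v > 0.7 for v in strengths.values()):
        warnings.append("all controls above 0.7 tend to over-constrain")
    return warnings


for use_case, strengths in PRESETS.items():
    print(use_case, check_strengths(strengths) or "ok")
```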

Which Combinations Work Best for Different Use Cases?

Different video types demand different ControlNet combinations. Not every project needs all three controls, and sometimes specialized combinations outperform the standard stack.

Dance videos and choreography perform best with Depth + Pose + Hands. Replace edge detection with a dedicated hand pose ControlNet. Dance involves complex hand gestures that standard pose models sometimes miss. Hand-specific ControlNets track fingers and hand orientation separately, preventing the "blob hands" problem that plagues generated dance videos.

Action scenes with multiple characters benefit from Depth + Pose + Segmentation rather than edge detection. Add a segmentation ControlNet that tracks which pixels belong to which character. This prevents character merging during close contact or overlapping movements. Set segmentation strength around 0.55.

Vehicle and motion videos work well with Depth + Edge only, skipping pose entirely. Cars, bikes, and mechanical objects don't have skeletons, so pose ControlNet provides no value. Depth maintains spatial relationships while edge preserves vehicle outlines and mechanical details. Increase edge strength to 0.65-0.70 to compensate for missing pose data.

Talking head content can often use Pose + Edge without depth if the background stays static. This lighter two-ControlNet setup reduces VRAM requirements while maintaining facial consistency. Face-specific pose models like FacePose or MediaPipe Face Mesh outperform full-body pose for this use case.

Nature and landscape videos typically need only Depth + Edge. There are no characters to track, so pose adds no value. Depth maintains the 3D structure of terrain and foliage while edge preserves treelines, horizons, and geological features. This combination prevents the "melting landscape" effect common in nature video generation.

Platforms like Apatero.com automatically select optimal ControlNet combinations based on your content type, removing the guesswork from this decision process.

Processing Reference Videos for ControlNet Inputs

High-quality ControlNet inputs start with properly processed reference videos. Each control type requires specific preprocessing to extract useful data from your source footage.

Depth map generation begins with consistent lighting and minimal motion blur in your reference footage. Run your video through MiDaS v3.1 or ZoeDepth for depth estimation. For ControlNet use, the output is a grayscale image where brightness represents distance - white for close objects, black for distant ones.

Process depth maps at the same resolution as your target output. If generating 512x512 video, create 512x512 depth maps. Mismatched resolutions force ComfyUI to resize on the fly, introducing artifacts and inconsistencies. Export depth frames as PNG files to preserve detail without compression artifacts.

Pose skeleton extraction requires clear visibility of the subject's body. Use DWPose or OpenPose preprocessors with confidence threshold set to 0.3-0.4. Lower thresholds capture more keypoints but introduce false detections. Higher thresholds miss subtle poses but ensure accuracy.

Save pose data as skeleton visualizations (the colored skeleton rendering the preprocessor draws on a black background) rather than raw JSON keypoints. Visual skeleton images work directly with ControlNet models without additional processing. Number your pose frames sequentially with leading zeros - frame_0001.png, frame_0002.png - so ComfyUI loads them in correct order.
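The reason for leading zeros is that many tools sort filenames as text. A minimal sketch of the naming scheme follows; `frame_name` is a hypothetical helper, and the four-digit width follows the frame_0001.png example.

```python
def frame_name(index: int, prefix: str = "frame_", digits: int = 4,
               ext: str = ".png") -> str:
    """Zero-padded frame filename, e.g. frame_0001.png."""
    return f"{prefix}{index:0{digits}d}{ext}"


padded = [frame_name(i) for i in (1, 2, 10, 100)]
unpadded = ["frame_1.png", "frame_2.png", "frame_10.png", "frame_100.png"]

# Text sorting keeps padded names in frame order...
print(sorted(padded))
# ...but puts frame_10 and frame_100 before frame_2 when names are unpadded.
print(sorted(unpadded))
```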

Edge map creation works best with Canny detection at conservative threshold values. Set lower threshold to 100 and upper to 200 for general footage. Increase both by 50-100 for high-contrast scenes or detailed architectural content. Lower both by 30-50 for soft, organic subjects like faces or natural landscapes.

Apply slight Gaussian blur (radius 0.5-1.0) to edge maps before using them as ControlNet inputs. Pure Canny output can be too harsh and create overly rigid generations. The blur softens constraints slightly while preserving structural information.
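The threshold guidance for edge maps can be captured in a small helper. This is a sketch: the scene categories and the function name are hypothetical. The offsets of +75 and -40 are assumed midpoints of the 50-100 and 30-50 ranges given above, not tested values.

```python
from typing import Tuple

BASE_LOW, BASE_HIGH = 100, 200  # general-footage starting point from the text

# Assumed midpoints of the suggested adjustment ranges.
SCENE_OFFSETS = {
    "general": 0,
    "high_contrast": 75,  # text suggests raising both by 50-100
    "soft": -40,          # text suggests lowering both by 30-50
}


def canny_thresholds(scene: str = "general") -> Tuple[int, int]:
    """Return (lower, upper) Canny thresholds for a scene category."""
    offset = SCENE_OFFSETS[scene]
    return BASE_LOW + offset, BASE_HIGH + offset


for scene in SCENE_OFFSETS:
    print(scene, canny_thresholds(scene))
```

The returned pair would be passed to whichever Canny preprocessor you use, followed by the light blur described above.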

Temporal Consistency Across Frames

Temporal consistency means each frame relates naturally to the previous frame without jarring changes. This is where multi-ControlNet workflows shine and single controls struggle.

The consistency challenge compounds with video length. A 2-second clip at 30 FPS contains 60 frames. Each frame must maintain character identity, spatial relationships, and scene structure while allowing natural motion. Single ControlNet workflows lose tracking around frame 20-30, manifesting as gradual character morphing or background drift.

Consistency metrics to monitor:

Character identity should remain stable across all frames. Export every 10th frame from your generated video and view them side by side. If the character's facial structure, body proportions, or clothing changes between frames, your pose or depth strength needs adjustment.

Background stability prevents scene elements from drifting. Lock a reference point like a doorframe or tree in your reference video. Track that same point through your generated output. Drift exceeding 5-10 pixels between frames indicates insufficient depth or edge control.

Motion fluidity ensures smooth transitions between poses. Jerky, stuttering movement suggests over-constrained ControlNets fighting each other. Reduce all strength values by 0.1 and regenerate. Smooth motion with consistent characters means you've found the balance point.
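The background stability check can be made concrete once you have the tracked point's position in each generated frame. This sketch assumes a locked camera and assumes the (x, y) positions come from some tracker or manual annotation, which is outside the scope of this snippet. `max_frame_drift` is a hypothetical helper.

```python
import math


def max_frame_drift(points):
    """Largest frame-to-frame displacement, in pixels, of one tracked point.

    points: list of (x, y) positions, one per generated frame.
    """
    steps = (math.dist(a, b) for a, b in zip(points, points[1:]))
    return max(steps, default=0.0)


# Hypothetical positions of a doorframe corner across five frames.
doorframe = [(200, 120), (201, 120), (203, 121), (210, 125), (211, 125)]
drift = max_frame_drift(doorframe)
print(f"max drift: {drift:.1f} px")
if drift > 5:
    print("consider raising depth or edge strength")
```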

Improving temporal consistency:

Enable temporal smoothing in your video generation model if available. AnimateDiff and similar models include temporal layers that blend information between frames. These layers work synergistically with ControlNet constraints to improve frame-to-frame coherence.

Increase your reference video FPS before processing. If you shot at 30 FPS, interpolate to 60 FPS using RIFE or FILM before extracting ControlNet data. More reference frames provide more guidance points, improving consistency at the cost of doubled processing time.

Use overlap batching when possible. Instead of processing frames 1-60 then 61-120, process 1-60, then 51-110, then 101-160. The 10-frame overlap helps maintain consistency across batch boundaries. Blend the overlapping segments during final video assembly.
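The overlapping windows can be generated with a few lines. This is a sketch with hypothetical function names, using 1-based inclusive frame ranges to match the example above. The linear crossfade is one assumed way to blend the overlap, not the only option.

```python
def overlap_windows(total_frames, size=60, overlap=10):
    """Return 1-based inclusive (start, end) ranges with overlapping edges."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    windows = []
    start = 1
    while True:
        end = min(start + size - 1, total_frames)
        windows.append((start, end))
        if end >= total_frames:
            return windows
        start += size - overlap


def crossfade_weights(overlap):
    """Blend weight of the later batch for each overlapping frame (assumed linear)."""
    return [(k + 1) / (overlap + 1) for k in range(overlap)]


print(overlap_windows(160))
print([round(w, 2) for w in crossfade_weights(10)])
```

For each overlapping frame, the blended result would be weight * later_batch_frame + (1 - weight) * earlier_batch_frame.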

Common Artifacts from Conflicting Controls

Multi-ControlNet workflows introduce unique artifacts when controls conflict. Recognizing these patterns helps you diagnose and fix strength balance issues quickly.

The "puppet effect" happens when pose control dominates too strongly. Characters move with unnaturally stiff, jerky motion as if controlled by invisible strings. Their skeleton positions match the reference perfectly, but natural secondary motion like clothing movement or hair physics disappears. Reduce pose strength by 0.15-0.2 to restore natural movement.

Background breathing manifests as subtle pulsing or wavering in supposedly static scene elements. Walls seem to slightly expand and contract, or furniture gently shifts position frame to frame. This indicates insufficient edge or depth control. Increase depth strength by 0.1 first, then edge if the problem persists.

Depth conflict ghosting creates semi-transparent artifacts around objects at depth boundaries. You'll see faint outlines or doubled edges where foreground meets background. This happens when depth maps contain noise or inconsistencies. Reprocess your depth maps with smoother settings or apply slight blur to reduce sharp transitions.

Pose skeleton bleeding shows visible skeleton lines or keypoint markers in the final generation. The ControlNet influence is so strong that the AI literally draws the pose visualization into the output. Reduce pose strength immediately to 0.5 or lower and ensure your pose images have black backgrounds without noise.

Edge over-sharpening makes generated videos look posterized or cel-shaded when you wanted photorealism. Every boundary becomes too crisp and defined. Lower edge control strength to 0.3-0.4 and check that your Canny thresholds aren't set so low that the edge maps fill with minor detail.

Character morphing reveals itself when the same person gradually changes facial features, hair, or body type across frames. This classic temporal inconsistency means pose control is too weak. Increase pose strength by 0.1-0.15 and verify your pose extraction correctly tracked the character through the entire sequence.

The fix for almost every artifact follows the same pattern. Identify which control is causing the problem, adjust its strength by 0.1-0.15 in the appropriate direction, regenerate a test clip, and compare results. Small incremental adjustments prevent overcorrection.
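That adjust-and-retest loop can be sketched as a lookup from artifact to strength change. The table covers only the artifacts above that have a relative fix, and uses the smaller end of each suggested range. All names are hypothetical.

```python
# (control, delta) pairs taken from the artifact descriptions above.
ADJUSTMENTS = {
    "puppet_effect": [("pose", -0.15)],
    "background_breathing": [("depth", +0.10)],
    "character_morphing": [("pose", +0.10)],
}


def apply_fix(strengths, artifact):
    """Return a new strengths dict with the suggested adjustment applied."""
    adjusted = dict(strengths)
    for control, delta in ADJUSTMENTS[artifact]:
        value = adjusted[control] + delta
        # Clamp to a valid strength and round away float noise.
        adjusted[control] = round(min(1.0, max(0.0, value)), 2)
    return adjusted


current = {"depth": 0.75, "pose": 0.65, "edge": 0.50}
print(apply_fix(current, "character_morphing"))
print(apply_fix(current, "puppet_effect"))
```

Because the function returns a copy, you can keep the previous settings and compare test clips side by side before committing to a change.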

Optimization Techniques for Multi-ControlNet

Multi-ControlNet workflows demand optimization to remain practical on consumer hardware. These techniques reduce memory usage and processing time without sacrificing output quality.

Model offloading moves ControlNet models between VRAM and system RAM as needed. ComfyUI manages this automatically when memory runs short, and launching it with the --lowvram flag pushes it toward more aggressive offloading. Each ControlNet loads only while its influence is being processed, then unloads before the next. This cuts VRAM requirements by 30-40% but increases generation time by 40-60%.

Sequential frame processing generates one frame completely before moving to the next, rather than batching multiple frames simultaneously. This trades speed for memory efficiency. A 60-frame video processes as 60 individual generations instead of 6 batches of 10. Memory usage stays constant regardless of video length.

Resolution staging generates at lower resolution first, then upscales. Create your video at 512x512 with all three ControlNets, then run a Video2Video or upscaling pass to reach 1024x1024. The initial low-resolution pass needs far less VRAM, since a 512x512 frame has a quarter of the pixels of a 1024x1024 frame, while establishing temporal consistency. The upscale pass maintains that consistency while adding detail.

Preprocessor caching saves processed ControlNet inputs for reuse. Once you've created depth maps, pose skeletons, and edge maps for a reference video, save those folders. Testing different strength values doesn't require reprocessing the reference video each time. This saves 5-10 minutes per iteration during experimentation.

Selective control application applies different ControlNets to different frame ranges. Use all three controls for frames with complex motion, but drop to two controls for static scenes. A conversation scene might need full control during gestures but only pose and depth during still moments. This requires manual workflow setup but can reduce processing time by 25%.

Batch size optimization finds the sweet spot between speed and memory usage. Test batch sizes of 1, 4, 8, and 16 frames on your hardware. Monitor VRAM usage with nvidia-smi or Task Manager. The largest batch size that doesn't trigger out-of-memory errors gives you optimal speed.
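The batch size search can be sketched as a simple probe loop. The `fits` callback is hypothetical: in practice it would run a short test generation at the given batch size and return False on an out-of-memory error. The stand-in below only simulates that.

```python
def largest_fitting_batch(candidates, fits):
    """Return the largest candidate batch size for which fits(size) is True.

    Stops at the first failure, assuming larger batches also fail.
    Returns None if even the smallest candidate does not fit.
    """
    best = None
    for size in sorted(candidates):
        if not fits(size):
            break
        best = size
    return best


def simulated_fits(batch_size, vram_gb=16.0, base_gb=9.0, per_frame_gb=0.8):
    """Stand-in for a real test run; the memory numbers are made up."""
    return base_gb + per_frame_gb * batch_size <= vram_gb


print(largest_fitting_batch([1, 4, 8, 16], simulated_fits))
```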

Consider that Apatero.com handles all these optimizations automatically through cloud infrastructure, letting you focus on creative decisions rather than technical configuration.

When to Use ControlNet Union Instead

ControlNet Union represents a different approach to multi-control workflows. Instead of stacking separate ControlNet models, Union combines multiple control types into a single unified model that processes all constraints simultaneously.

ControlNet Union advantages:

Memory efficiency tops the list. A single Union model uses roughly the same VRAM as one standard ControlNet, but provides depth, pose, edge, and normal map control simultaneously. On 12GB cards where stacking three ControlNets is impossible, Union makes multi-control feasible.

Processing speed increases by 30-50% compared to stacked models. Union processes all control types in one forward pass rather than chaining multiple models sequentially. For 60-frame videos, this can mean 15-20 minutes saved on generation time.

Simplified workflow setup reduces complexity. Instead of three Apply ControlNet nodes with careful connection management, you need only one. The Union model internally balances control influences based on training.

ControlNet Union limitations:

Less granular control over individual constraint strengths is the biggest drawback. With most Union models you can't independently set depth to 0.75 and pose to 0.65, because they accept a single strength value that affects all control types equally. Some Union implementations allow per-control weighting, but it's still less flexible than separate models.

Training data determines available control combinations. If a Union model trained on depth, pose, canny, and normal maps, you're limited to those four types. Want to add segmentation or hands-specific control? You need a Union model specifically trained with those inputs, or you must fall back to stacking.

Temporal consistency can suffer compared to carefully tuned stacked workflows. Union models balance controls based on training data patterns, which may not match your specific use case. A dance video might need pose prioritization that the Union model doesn't provide.

When to choose Union over stacking:

Use Union for VRAM-limited hardware where stacking isn't feasible. A 10GB card can run Union but struggles with stacked ControlNets.

Choose Union for quick iteration and experimentation. Testing multiple concepts benefits from faster generation, even if fine control suffers.

Stick with stacking for production work requiring precise control balancing. When you need pose at 0.75, depth at 0.65, and edge at 0.50, separate models deliver superior results.

Use Union for longer videos where processing time matters more than perfect optimization. A 5-minute video might take hours with stacked ControlNets but finish in reasonable time with Union.

Real-World Examples - Dance Videos

Dance video generation showcases multi-ControlNet workflows at their best. Complex movement, rapid pose changes, and the need for perfect character consistency create the ideal test case.

A typical dance workflow uses depth at 0.70, pose at 0.75, and hands at 0.65 (using a dedicated hand pose model instead of edge detection). The slightly higher pose strength ensures the character's body proportions remain stable through spins, jumps, and floor work.

Processing the reference footage:

Record your reference dance at 60 FPS minimum. The faster movement in dance benefits from higher temporal resolution. Shoot against a simple background to help depth estimation and pose extraction. Complex backgrounds can confuse preprocessors, especially during rapid movement.

Extract pose data using DWPose rather than OpenPose. DWPose handles partial occlusion better, which matters when dancers turn away from camera or when limbs cross in front of the body. Set confidence threshold to 0.35 to catch subtle hand positions during finger movements.

Add the hand pose ControlNet as the third control for dance, taking the slot that edge detection would otherwise fill. Hands tell much of the story in dance performance, and standard full-body pose models often miss finger positions or hand orientation. The dedicated hand model tracks 21 keypoints per hand, ensuring finger choreography translates to the generated video.

Common dance-specific challenges:

Spin moves often cause pose tracking to fail for 2-3 frames as the dancer rotates. Build redundancy by increasing depth control during known spin sections. The depth map maintains spatial consistency while pose temporarily loses tracking.

Floor work and low poses create depth map artifacts as the dancer's distance from camera changes rapidly. Process depth maps with MiDaS v3.1 rather than older versions, as it handles these scenarios more accurately. Expect some trial and error with depth strength during floor sections.

Fast arm movements can create motion blur in reference footage that confuses ControlNet. Shoot at 1/250 second shutter speed or faster to freeze movement. If blur is unavoidable, slightly reduce pose strength during those sequences to prevent artifact generation.

Real-World Examples - Action Scenes

Action scene generation demands different ControlNet balancing than dance videos. Camera movement, rapid subject motion, and often multiple characters create unique challenges.

Set depth at 0.80, pose at 0.60, and edge at 0.55 for action footage. The higher depth strength stabilizes the environment during camera movement. Lower pose strength allows more dynamic, less rigid character motion suitable for fighting or sports.

Handling camera movement:

Action scenes often include panning, tracking, or handheld camera work. Process your depth maps with this movement in mind. Depth models sometimes struggle with moving cameras, creating inconsistent spatial relationships between frames. Consider stabilizing your reference footage before processing if camera shake is excessive.

Edge detection becomes critical during camera movement. Static edge maps would create sliding artifacts as the camera pans. Ensure your edge maps reflect the actual frame content, not a stabilized version. The ControlNet needs to see edges moving across frame in sync with camera motion.

Multiple character coordination:

When action scenes include multiple people, add a segmentation ControlNet, either as a fourth layer or in place of edge detection when VRAM is tight. This tracks which pixels belong to character A versus character B, preventing character merging during contact. Set segmentation strength around 0.55, matching your edge value and sitting just below pose.

Process segmentation maps with consistent color coding. Assign character one to red (255,0,0), character two to blue (0,0,255), and background to black (0,0,0). The ControlNet learns these associations and maintains character separation even during rapid exchanges.
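A fixed label-to-color table keeps the coding consistent across every frame. The sketch below uses plain nested lists so it stays self-contained. The label names and the `colorize` helper are hypothetical, and a real pipeline would apply the same mapping to image arrays produced by your segmentation tool.

```python
# Color coding from the text: one fixed RGB value per label.
SEGMENT_COLORS = {
    "background": (0, 0, 0),
    "character_1": (255, 0, 0),
    "character_2": (0, 0, 255),
}


def colorize(label_rows):
    """Convert a 2D grid of labels into a 2D grid of RGB tuples."""
    return [[SEGMENT_COLORS[label] for label in row] for row in label_rows]


# A tiny 2x3 "frame" with both characters in view.
frame_labels = [
    ["background", "character_1", "character_2"],
    ["background", "character_1", "background"],
]
print(colorize(frame_labels))
```

Looking up colors from one shared table, rather than assigning them per frame, is what prevents a character from switching colors partway through a sequence.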

Action-specific artifacts:

Motion blur in generated action scenes often looks wrong even when reference footage shows proper blur. ControlNet constraints can create frozen motion blur that doesn't match the action direction. If this happens, reduce all ControlNet strengths by 0.1 to give the model more freedom in motion interpretation.

Impact frames and extreme poses sometimes break ControlNet constraints entirely. A character mid-punch or mid-kick might generate with distorted proportions. Increase pose strength by 0.15 specifically for these frames, or manually adjust the pose skeleton to be slightly less extreme.

Real-World Examples - Talking Heads

Talking head and interview content needs subtlety that dance and action don't require. Facial micro-expressions and lip sync demand different ControlNet approaches.

Use depth at 0.75, facial pose at 0.70, and edge at 0.55. The balanced approach prevents the common talking head problems of morphing faces and drifting backgrounds. Switch from full-body pose to a face-specific pose model like MediaPipe Face Mesh for better facial tracking.

Face-specific considerations:

A face-specific model such as MediaPipe Face Mesh tracks 468 facial landmarks, compared to the 5-8 facial points in full-body pose models. This granularity captures subtle expressions, eyebrow movement, and mouth shapes necessary for convincing talking head videos.

Eye contact consistency matters enormously for talking heads. Viewers notice even slight eye position changes between frames. Ensure your facial pose extraction captures iris position accurately. Some preprocessors miss this detail, creating characters whose gaze wanders unnaturally.

Lighting and background:

Static backgrounds in interview settings benefit from higher edge control at 0.60-0.65. Since nothing moves except the speaker's face and upper body, edge maps can strongly constrain the environment without creating rigidity.

Lighting changes during speech can confuse depth estimation. A person's face darkening as they turn toward shadow doesn't mean they moved further from camera. Process depth maps with lighting normalization if your reference footage has variable lighting. Some depth models include automatic normalization.

Lip sync accuracy:

The biggest challenge in talking head generation is maintaining lip sync with audio. While ControlNet doesn't directly handle audio, the temporal consistency it provides makes secondary audio lip sync tools work better. Generate your video with strong temporal consistency first, then apply audio-driven lip animation as a second pass.

If lip movements look wrong in the generated output despite good pose tracking, the AI might be fighting between the pose constraint (mouth position) and the text prompt (speaking). Test with a prompt that says as little as possible about facial features, letting pose control handle expression details.

Platforms like Apatero.com specialize in these nuanced use cases, automatically adjusting control strengths for optimal talking head results without manual configuration.

Frequently Asked Questions

Can you stack more than three ControlNets for video generation?

Yes, but practical limits exist around four to five ControlNets depending on VRAM. Each additional control requires 2-4GB of memory and adds processing time. Beyond three controls, you often see diminishing returns. The fourth and fifth ControlNets add marginal improvements while requiring another 4-8GB of memory on top of a three-control stack. Most professional workflows find three well-chosen controls outperform five mediocre ones.
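As a rough worked example of that memory cost, the sketch below multiplies the 2-4GB per-control range by the number of stacked ControlNets. It covers only the ControlNets themselves. The base model, VAE, and frame buffers are not included because this guide gives no figure for them here, and `controlnet_memory_range` is a hypothetical helper.

```python
PER_CONTROL_GB = (2, 4)  # per-ControlNet range quoted in the answer above


def controlnet_memory_range(count):
    """Return (low, high) GB used by `count` stacked ControlNets alone."""
    low, high = PER_CONTROL_GB
    return count * low, count * high


three = controlnet_memory_range(3)
five = controlnet_memory_range(5)
extra_low, extra_high = five[0] - three[0], five[1] - three[1]
print(f"3 controls: {three[0]}-{three[1]} GB")
print(f"5 controls: {five[0]}-{five[1]} GB (+{extra_low}-{extra_high} GB)")
```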

Why do my characters morph even with pose ControlNet enabled?

Pose strength is likely too low, or your pose extraction missed frames. Check that every frame has valid pose data by viewing your pose skeleton images sequentially. Missing or malformed skeletons for even a few frames cause morphing. Increase pose strength from 0.6 to 0.75 and verify pose data quality before adjusting other parameters.
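Checking every skeleton image by eye gets tedious on long clips, so a quick scan for empty frames can narrow down where to look. This is a minimal sketch that assumes each pose frame is available as a 2D grid of grayscale values with a black background. The `find_empty_pose_frames` name and the `min_lit_pixels` threshold are illustrative assumptions.

```python
def find_empty_pose_frames(frames, min_lit_pixels=1):
    """Return indices of frames whose skeleton image is blank or nearly blank.

    A frame counts as empty when fewer than min_lit_pixels pixels are
    brighter than pure black.
    """
    empty = []
    for index, frame in enumerate(frames):
        lit = sum(1 for row in frame for value in row if value > 0)
        if lit < min_lit_pixels:
            empty.append(index)
    return empty


# Three tiny 2x3 frames: the middle one lost its skeleton.
pose_frames = [
    [[0, 255, 0], [0, 255, 0]],
    [[0, 0, 0], [0, 0, 0]],
    [[0, 200, 0], [0, 0, 180]],
]
missing = find_empty_pose_frames(pose_frames)
```

A scan like this only catches fully missing skeletons. Malformed skeletons with limbs in the wrong place still need a visual check.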

How do you handle scenes where characters move in and out of frame?

Use pose ControlNet with automatic keypoint detection that can handle partial visibility. When the character exits frame, the pose model should output blank/black frames rather than hallucinating skeleton data. Your workflow should handle these blank frames gracefully, falling back to depth and edge control only. Some implementations require explicit blank frame handling in the node setup.
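The fallback logic can be sketched as a per-frame decision: if the pose frame is blank, drop pose from the active controls and keep depth and edge. This is an illustration of the idea in plain Python rather than a ComfyUI node setup, and `controls_for_frame` is a hypothetical helper.

```python
def controls_for_frame(pose_frame, strengths):
    """Return the controls to apply for one frame.

    pose_frame is a 2D grid of grayscale values. When it is entirely black
    (character out of frame), pose is removed so only the remaining
    controls constrain the generation.
    """
    has_skeleton = any(value > 0 for row in pose_frame for value in row)
    if has_skeleton:
        return dict(strengths)
    return {name: value for name, value in strengths.items() if name != "pose"}


strengths = {"depth": 0.75, "pose": 0.65, "edge": 0.50}
visible = controls_for_frame([[0, 255], [0, 0]], strengths)
off_screen = controls_for_frame([[0, 0], [0, 0]], strengths)
```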

What's the difference between ControlNet Union and stacking individual models?

Union combines multiple control types in a single model, using less VRAM and processing faster but offering less individual control strength adjustment. Stacking runs separate models sequentially, using more resources but allowing precise strength balancing per control type. Union works better for resource-limited setups, while stacking delivers superior results when hardware permits.

Can you use different ControlNet strengths for different frame ranges?

Yes, through workflow programming in ComfyUI. Set up conditional logic that applies different strength values based on frame number. This requires intermediate node scripting but enables adaptive control. For example, use higher pose strength during complex motion (frames 30-60) and lower strength during static poses (frames 1-29). This optimization can improve results while reducing processing requirements.
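The conditional logic amounts to a lookup from frame number to strength. The sketch below uses the frame ranges from the example above. The specific strength values (0.60 and 0.80), the default, and the `pose_strength_for` helper are assumptions chosen for illustration, and how you wire the result into a workflow depends on the nodes you have installed.

```python
# (first_frame, last_frame, pose_strength), ranges are inclusive.
SCHEDULE = [
    (1, 29, 0.60),   # static poses: lower strength (illustrative value)
    (30, 60, 0.80),  # complex motion: higher strength (illustrative value)
]


def pose_strength_for(frame, schedule=SCHEDULE, default=0.65):
    """Return the pose strength for a frame, or default outside all ranges."""
    for first, last, strength in schedule:
        if first <= frame <= last:
            return strength
    return default


per_frame = [pose_strength_for(frame) for frame in range(1, 91)]
```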

How do you fix background drift when depth ControlNet is already at 0.8?

Add edge control or increase edge strength if already present. Background drift with strong depth control suggests the depth maps themselves have inconsistencies between frames. Reprocess your reference video with a different depth model, or apply temporal smoothing to your depth maps before using them. Sometimes the source footage has actual camera movement that looks like drift.
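One simple form of temporal smoothing is an exponential moving average over the depth frames, sketched below. This is one possible technique, not necessarily what any given depth preprocessor does. It assumes depth frames are 2D grids of floats of equal size, and `smooth_depth_sequence` and its `alpha` parameter are hypothetical.

```python
def smooth_depth_sequence(frames, alpha=0.6):
    """Blend each depth frame with the smoothed previous frame.

    alpha is the weight of the current frame. Lower values smooth more
    but make depth lag behind real motion.
    """
    smoothed = []
    previous = None
    for frame in frames:
        if previous is None:
            current = [row[:] for row in frame]  # first frame is kept as-is
        else:
            current = [
                [alpha * new + (1 - alpha) * old
                 for new, old in zip(new_row, old_row)]
                for new_row, old_row in zip(frame, previous)
            ]
        smoothed.append(current)
        previous = current
    return smoothed


# Single-pixel frames with a sudden jump from 0.0 to 1.0.
raw = [[[0.0]], [[1.0]], [[1.0]]]
result = smooth_depth_sequence(raw, alpha=0.5)
```

Because the average lags behind real changes, heavy smoothing can blur genuine camera movement, so it suits flickering depth on mostly static shots better than fast pans.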

What resolution should you process ControlNet inputs at?

Match your target generation resolution exactly. If generating 512x512 video, create 512x512 depth maps, pose skeletons, and edge maps. Mismatched resolutions force runtime rescaling that introduces artifacts. For 1024x1024 output, process all inputs at 1024x1024 even though it takes longer. The consistency improvement justifies the extra processing time.
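A small pre-flight check can catch mismatches before a long render starts. The sketch below assumes you already know each control map's width and height. The `mismatched_inputs` helper and the example sizes are hypothetical.

```python
def mismatched_inputs(target_size, control_sizes):
    """Return the control inputs whose (width, height) differs from the target."""
    return {
        name: size
        for name, size in control_sizes.items()
        if size != target_size
    }


target = (1024, 1024)
inputs = {
    "depth": (1024, 1024),
    "pose": (512, 512),     # would be rescaled at runtime
    "edge": (1024, 1024),
}
problems = mismatched_inputs(target, inputs)
```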

Why does my video look like a posterized cartoon with ControlNet?

Edge control strength is too high, creating over-sharpened output. Reduce edge strength from 0.6 to 0.3-0.4 and verify your edge detection thresholds aren't excessive. Canny thresholds above 300 keep only the strongest outlines, producing stark edge maps that make everything look cel-shaded. Lower the thresholds to the 100-200 range for more natural results.

Can you mix video ControlNet with image ControlNet models?

Technically yes, but results vary. Some image-trained ControlNet models work acceptably on video when applied frame-by-frame. Video-specific models include temporal awareness that dramatically improves consistency. Use video-trained models when available, and fall back to image models only for control types that lack video versions.

How long does multi-ControlNet video generation typically take?

Expect 2-5 minutes per second of video on an RTX 4090 with three ControlNets at 512x512 resolution. A 10-second clip takes 20-50 minutes. Processing scales roughly linearly with frame count but much more steeply with resolution, because doubling each dimension quadruples the pixel count. The same 10 seconds at 1024x1024 might take 2-3 hours. Optimization techniques like model offloading add 40-60% to these times but enable generation on lower-end hardware.
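The arithmetic behind these estimates is easy to reproduce for your own clip lengths. This sketch uses the 2-5 minutes per second figure and the 40-60% offloading overhead quoted above. The helper names are hypothetical, and actual times depend heavily on the model and workflow.

```python
def estimate_minutes(seconds_of_video, minutes_per_second=(2, 5)):
    """Return (low, high) render minutes at 512x512 with three ControlNets."""
    low, high = minutes_per_second
    return seconds_of_video * low, seconds_of_video * high


def with_offloading(minutes_range, overhead=(0.4, 0.6)):
    """Apply the 40-60% slowdown quoted for model offloading."""
    low, high = minutes_range
    return low * (1 + overhead[0]), high * (1 + overhead[1])


def pixel_ratio(new_size, old_size):
    """How many times more pixels the new resolution has per frame."""
    return (new_size[0] * new_size[1]) / (old_size[0] * old_size[1])


clip = estimate_minutes(10)
offloaded = with_offloading(clip)
ratio = pixel_ratio((1024, 1024), (512, 512))
print(f"10 s clip: {clip[0]}-{clip[1]} min, offloaded: {offloaded[0]:.0f}-{offloaded[1]:.0f} min")
print(f"1024x1024 has {ratio:.0f}x the pixels of 512x512")
```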

Conclusion

Multi-ControlNet workflows transform video generation from experimental to professional. Stacking depth, pose, and edge controls creates the redundant constraints necessary for temporal consistency across hundreds of frames. Character identity stays stable, backgrounds don't drift, and scene structure maintains integrity through complex motion.

The key to success lies in strength balancing. Start with depth at 0.75, pose at 0.65, and edge at 0.50, then adjust based on your specific content. Dance videos need higher pose strength, architectural content needs stronger depth, and talking heads benefit from balanced settings. Test on short clips before committing to full renders.

VRAM requirements of 12-24GB depending on resolution and ControlNet count remain the main barrier to entry. Model offloading and resolution staging make multi-ControlNet accessible on consumer hardware, though processing times increase significantly. For production work demanding both quality and speed, dedicated GPU resources or platforms like Apatero.com provide instant professional results without the technical overhead.

The future of video generation belongs to these multi-constraint approaches. As models improve and Union implementations mature, we'll see even better consistency with lower resource requirements. But the fundamental principle remains unchanged: single controls can't match the quality of thoughtfully balanced multi-ControlNet workflows for professional video generation.
