Qwen Edit 2511: Why This Image Editor Beats Everything Before It

Qwen-Image-Edit-2511 brings built-in LoRAs, multi-person consistency, and industrial design features. Complete guide to the major upgrade from 2509.

Look, I've tested a lot of image editing models over the past two years. Most of them do one thing well and completely fall apart at everything else. Qwen-Image-Edit-2511 is the first one that made me actually stop and pay attention.

Quick Answer: Qwen-Image-Edit-2511 is Alibaba's 20B-parameter image editing model, released December 2025. It brings major upgrades over 2509, including built-in LoRA support, dramatically better multi-person consistency, and improved industrial design capabilities. It's Apache 2.0 licensed and free to use.

- Built-in popular LoRAs mean no extra downloads for lighting control and common styles

- Multi-person group photo editing actually works now without face drift

- LightX2V optimization delivers up to 42x speedup with minimal quality loss

- Native ComfyUI support with auto-downloading models on first run

- FP8 quantization cuts VRAM usage by 50% compared to full precision

What Actually Changed from Qwen Edit 2509?

I spent about a week running side-by-side tests between 2509 and 2511. The difference is not subtle.

The 2509 version was already good at single-subject editing. You could take a portrait, make creative edits, and the person would still look like themselves. But throw two people in the same image? Chaos. One face would drift into the other, or you'd get these weird hybrid features that looked like a bad Photoshop accident.

With 2511, I tested group photo editing with three people and the model kept each face distinct across multiple edits. I tried merging two separate portraits into one group shot and it actually worked. This is huge for anyone doing AI influencer content or composite work.

Multi-person editing in 2511 maintains distinct facial features where 2509 would blend them together


The Built-In LoRA Thing Is Actually Brilliant

Here's what nobody tells you about using LoRAs for image editing: the setup is a pain. You have to find the right LoRA, download it, make sure it's compatible with your base model, adjust the strength so it doesn't blow out your results. Most people give up halfway through.

Qwen 2511 just bakes popular LoRAs directly into the base model. Lighting control and certain style effects are there from the start, with no extra downloads, no compatibility headaches, and no strength tuning.

I tested the lighting control specifically. It's not just a filter. You can actually redirect light sources in existing images and the model understands shadow behavior. This used to require separate ControlNet setups that took forever to configure.

How Does It Stack Up Against Other Editors?

I'll be honest, I'm biased toward open-source tools. Qwen 2511 is Apache 2.0 licensed, which means you can use it commercially without worrying about subscription fees eating into your margins.

Compared to proprietary options like Adobe's Generative Fill:

- Speed: 2511 is significantly faster once you're past the initial model load

- Quality: Comparable for most edits, better for faces specifically

- Cost: Free vs. Creative Cloud subscription

- Control: You can fine-tune every parameter vs. black box

If you're doing professional work and need predictable results every time, the commercial options might still make sense. But for most use cases? The cost difference doesn't justify the limitations.

For those who want an even easier path, platforms like Apatero.com have started integrating Qwen editing capabilities. Full disclosure, I help build Apatero, but I genuinely think it's the easiest way to get started if ComfyUI setup feels overwhelming.

Getting It Running in ComfyUI

The official ComfyUI implementation makes this pretty painless. Here's the actual process I used:

Update ComfyUI first. I cannot stress this enough. Old versions have compatibility issues with the Qwen text encoder.

Install the native nodes. The workflow available at docs.comfy.org auto-downloads everything on first run.

Check your VRAM. Full precision needs around 40GB. FP8 quantization drops this to about 20GB. GGUF versions can run on 12GB cards.
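Those VRAM figures follow mostly from bytes per weight. Here is a rough back-of-the-envelope sketch, assuming the 20B parameter count from earlier, assuming "full precision" here means 16-bit (BF16) weights, and counting weights only (the text encoder, VAE, and activations need memory on top). At 16 bits the weights come to 40 GB and at 8 bits to 20 GB, matching the numbers above. The 4.5 bits per weight used for GGUF is an assumed average for a 4-bit quant; real GGUF files vary by quant type.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal GB (1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# 20B parameters at different precisions (weights only; the text encoder,
# VAE, and activations add to these figures).
for label, bits in [("BF16 (16-bit)", 16), ("FP8", 8), ("4-bit GGUF (assumed 4.5 bpw)", 4.5)]:
    print(f"{label:>30}: {weight_memory_gb(20, bits):.1f} GB")
```

The 4-bit estimate lands a little over 11 GB, which is in the same range as the 12GB figure for GGUF.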

The model files go in specific folders inside ComfyUI/models/:


- diffusion_models/ for the main model

- text_encoders/ for the Qwen encoder

- vae/ for the autoencoder

One mistake I made: I initially put the VAE in the wrong folder and got garbled output for an hour before figuring it out. Double-check your paths.
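Because a misplaced file fails quietly (garbled output instead of an error), a quick check can save that hour. This is a minimal sketch that assumes the standard ComfyUI/models/ layout and that weights are stored as .safetensors or .gguf files. It only verifies that each of the three folders contains some weight file, not that it is the right one. The function name is hypothetical.

```python
from pathlib import Path

# Subfolders under ComfyUI/models/ and what each one should hold.
EXPECTED_DIRS = {
    "diffusion_models": "main Qwen-Image-Edit model",
    "text_encoders": "Qwen text encoder",
    "vae": "autoencoder (VAE)",
}
# Assumption: weights ship as .safetensors or .gguf files.
WEIGHT_SUFFIXES = {".safetensors", ".gguf"}


def missing_model_files(comfy_root: str) -> list[str]:
    """Return one message per expected folder that contains no weight file."""
    models = Path(comfy_root) / "models"
    problems = []
    for folder, role in EXPECTED_DIRS.items():
        path = models / folder
        # Search recursively so weights kept in subfolders still count.
        has_weights = path.is_dir() and any(
            f.is_file() and f.suffix in WEIGHT_SUFFIXES for f in path.rglob("*")
        )
        if not has_weights:
            problems.append(f"{path}: no weight file found for the {role}")
    return problems


if __name__ == "__main__":
    import sys

    root = sys.argv[1] if len(sys.argv) > 1 else "ComfyUI"
    for msg in missing_model_files(root) or ["All three model folders contain weights."]:
        print(msg)
```

Saved as a script and pointed at your ComfyUI directory, it flags a VAE dropped into the wrong folder as an empty vae/ folder.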

What Can You Actually Edit?

The model handles a wide range of edits. In my testing:

Works really well:

- Adding or removing objects from scenes

- Changing clothing and accessories

- Background replacements while keeping subjects intact

- Text editing in images (surprisingly good with both English and Chinese)

- Style transfers that maintain subject identity

Works but needs careful prompting:

- Major pose changes

- Significant age modifications

- Adding people who weren't in the original

Still struggles with:

- Hand regeneration (though it's better than most)

- Complex physics interactions like water splashes

- Very small text details

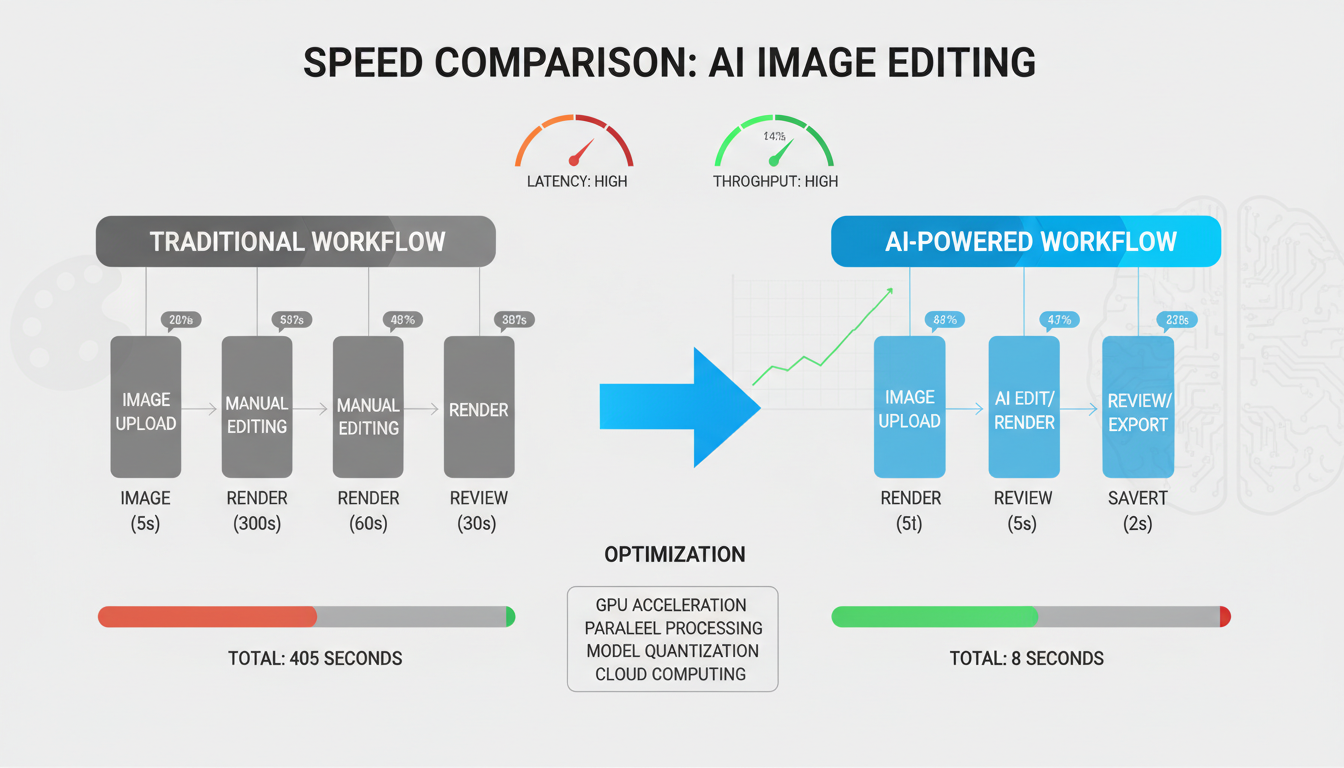

The Speed Improvements Are Wild

LightX2V, an inference acceleration framework that supports Qwen models, claims 100x+ speedups. I was skeptical until I ran the numbers.

Standard inference on an H100: about 45 seconds per edit. With LightX2V optimizations: around 1 second.

That's roughly 45x rather than 100x, but it's fast enough that batch processing becomes genuinely practical. I processed 200 images in under four minutes. At 45 seconds per edit, the same batch would have taken about two and a half hours.
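For a batch run like that, one option is ComfyUI's local HTTP API. The sketch below assumes ComfyUI is running at its default address (http://127.0.0.1:8188) and that you exported your edit workflow in ComfyUI's API format (the exact menu entry varies by ComfyUI version). The LoadImage node id and file names are hypothetical placeholders you would take from your own export. It queues one run per input image by POSTing to the /prompt endpoint.

```python
import copy
import json
import urllib.request

COMFY_URL = "http://127.0.0.1:8188"  # default local ComfyUI address; adjust if yours differs


def with_input_image(workflow: dict, load_image_node: str, filename: str) -> dict:
    """Return a copy of an API-format workflow with a LoadImage node pointed at `filename`.

    `load_image_node` is the node id from your own exported workflow (hypothetical here);
    the file must already be in ComfyUI's input/ folder.
    """
    wf = copy.deepcopy(workflow)
    wf[load_image_node]["inputs"]["image"] = filename
    return wf


def build_prompt_request(workflow: dict, base_url: str = COMFY_URL) -> urllib.request.Request:
    """Build the POST /prompt request that queues one workflow run."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
    )


def queue_batch(workflow: dict, load_image_node: str, filenames: list[str]) -> list[dict]:
    """Queue one edit per input image and return ComfyUI's JSON responses."""
    responses = []
    for name in filenames:
        req = build_prompt_request(with_input_image(workflow, load_image_node, name))
        with urllib.request.urlopen(req) as resp:
            responses.append(json.loads(resp.read()))
    return responses
```

To use it, load your exported JSON with json.load, copy the source images into ComfyUI's input/ folder, and call queue_batch with your LoadImage node id and the file names. Each response should include a prompt_id for tracking the run. The script only handles queueing; the per-image speed still comes from the optimized model inside the workflow.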

The trick is combining multiple optimization techniques:


- 8-bit quantization across attention layers

- Sparse attention patterns that skip redundant computations

- Distillation that reduces sampling steps from 50 to 3-4
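To see why 8 bits per weight halves memory and what it costs in precision, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. This simpler integer scheme is swapped in for illustration. It is not LightX2V's actual kernels and not FP8, which stores each weight as an 8-bit float rather than an integer but also uses one byte per weight. The helper names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ q * scale, with q in [-127, 127]."""
    amax = max((abs(w) for w in weights), default=0.0)
    scale = amax / 127 if amax > 0 else 1.0  # largest magnitude maps to ±127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.003, 0.5, -0.41]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_err)
```

Each weight now takes one byte instead of the two it needs in BF16, and rounding error per weight is at most half the scale. Production quantizers usually choose scales per channel or per block rather than per tensor, so a few outlier weights don't inflate the error for everything else.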

If you're running production workloads, the FP8 quantized version offers the best balance of speed and quality. You'll lose maybe 2-3% perceptual quality for 50% less VRAM usage.

LightX2V optimization dramatically reduces generation time while maintaining quality


Industrial Design Capabilities

This surprised me. The 2511 version added specific improvements for industrial and product design workflows.

Geometric reasoning is noticeably better. When editing product images, surfaces maintain proper perspective and proportions. Previously, editing a watch face might subtly distort the bezel. Now it stays accurate.

I've started using it for quick product mockups. The turnaround from concept to presentable render dropped from hours to minutes. It's not replacing proper CAD workflows, but for initial ideation it's incredibly useful.

Comparison: 2511 vs 2509 vs 2505

| Feature | Qwen Edit 2505 | Qwen Edit 2509 | Qwen Edit 2511 |
|---|---|---|---|
| Multi-person consistency | Poor | Good for singles | Excellent |
| Built-in LoRAs | No | No | Yes |
| Industrial design | Basic | Improved | Production-ready |
| Max effective resolution | 1024px | 2048px | 2048px+ |
| GGUF support | No | Limited | Full |
| Average edit time (H100) | 60s | 45s | ~1s with LightX2V |

Tips From My Testing

After putting in about 40 hours with this model, here's what I wish someone had told me:

Prompt structure matters more than length. Short, specific prompts outperform long detailed ones. "Add a red scarf" works better than "add a beautiful red silk scarf wrapped elegantly around the neck."

Use the mask input. Even rough masks dramatically improve targeting. The model can guess what you want to edit, but it guesses wrong maybe 30% of the time without masks.
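A rough mask really can be this crude. The sketch below builds a padded rectangular mask around the region you want edited, as a plain list of rows (255 = edit, 0 = keep). The function name and the 16-pixel default padding are hypothetical choices. You would still need to save the result as a grayscale image (for example with an imaging library) and connect it to whatever mask input your workflow uses.

```python
def rough_box_mask(
    width: int, height: int, box: tuple[int, int, int, int], pad: int = 16
) -> list[list[int]]:
    """Binary mask covering `box` = (left, top, right, bottom), right/bottom exclusive,
    grown by `pad` pixels on every side and clamped to the image bounds."""
    left, top, right, bottom = box
    left, top = max(0, left - pad), max(0, top - pad)
    right, bottom = min(width, right + pad), min(height, bottom + pad)
    return [
        [255 if left <= x < right and top <= y < bottom else 0 for x in range(width)]
        for y in range(height)
    ]


# Example: mark a scarf region on a 512x512 image, with some slack around it.
mask = rough_box_mask(512, 512, box=(180, 300, 340, 380))
```

The padding gives the model room to blend the edit into its surroundings instead of stopping at a hard edge.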


Lower CFG than you think. I started at 7-8 like most diffusion models. Qwen 2511 works better at 4-5. Higher values introduce artifacts.
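Part of the reason high CFG values backfire shows up in the guidance formula itself. Standard classifier-free guidance starts from the unconditional prediction and moves along the difference toward the prompt-conditioned one, scaled by the CFG value, so the push grows linearly with the scale. The sketch below applies that generic formula to small lists of numbers standing in for model predictions. Qwen's own pipeline may add refinements on top, so treat this as an illustration rather than its implementation.

```python
def apply_cfg(uncond: list[float], cond: list[float], scale: float) -> list[float]:
    """Classifier-free guidance, element-wise: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy "predictions" for the same step, with and without the prompt.
uncond = [0.10, -0.20, 0.05]
cond = [0.30, -0.10, 0.00]
for scale in (4.5, 7.5):
    print(scale, [round(v, 3) for v in apply_cfg(uncond, cond, scale)])
```

With the same two predictions, moving from 4.5 to 7.5 pushes the output about 1.7 times as far from the unconditional prediction. That kind of overshoot is one plausible source of the artifacts at higher values.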

The text editing feature is underrated. I've been using it to fix typos in generated marketing materials. Way faster than regenerating the entire image.

When Should You Upgrade from 2509?

If you're doing any multi-subject work, upgrade immediately. The consistency improvements alone justify the disk space.

If you're only doing single-subject edits, the choice is less clear. The quality improvements are incremental. The speed improvements from LightX2V are significant, but that's a separate optimization layer.

For new users, start with 2511. There's no reason to use older versions unless you have specific compatibility requirements.

Integration with Other Workflows

Qwen Edit works well alongside other ComfyUI tools I use regularly:

- IPAdapter for face consistency across generated images

- FaceDetailer for post-editing face enhancement

- WAN 2.2 for turning edited stills into video

The workflow I've settled on: generate base images with Flux or Z-Image, edit with Qwen 2511, animate selected frames with WAN. Takes about 15 minutes end-to-end for a 5-second clip.

FAQ

Is Qwen Edit 2511 free to use commercially? Yes. The Apache 2.0 license allows commercial use with no fees. If you redistribute the model weights themselves, the license requires you to include a copy of it and keep its notices intact.

How much VRAM do I actually need? Minimum practical is 12GB with GGUF quantization. 24GB is comfortable for FP8. Full precision needs 40GB+.

Can it edit AI-generated images or only photos? Both. It actually performs slightly better on AI-generated images since they're cleaner inputs.

Does it work with negative prompts? Yes, though the effect is more subtle than with generation models. Use negatives for avoiding specific artifacts rather than major concept changes.

How does it compare to Stable Diffusion inpainting? More capable for complex edits but slower without the LightX2V optimizations. SD inpainting is still faster for simple fills.

Can I train my own LoRAs for it? Not yet officially supported, but community implementations are emerging. The base architecture is compatible with standard LoRA training.

Why would I use this instead of just regenerating images? Editing preserves elements you want to keep. Regeneration means re-rolling everything including parts that were already perfect.

Is there a cloud API available? Yes, Replicate has an API if you don't want to run locally.

What's Next

The Qwen team has hinted at video editing capabilities coming in 2026. Given how solid the image editing is now, I'm genuinely excited to see that.

For now, 2511 is my go-to for any image editing work that requires maintaining identity or consistency. The setup takes maybe 20 minutes if you're starting from scratch with ComfyUI, and the results justify the effort.

If you're still manually editing in Photoshop or struggling with older inpainting models, try this. The jump in capability is significant enough that it's changed my workflow entirely.

