LTX-2: Everything You Need to Know In Depth

Complete deep dive into LTX-2, Lightricks' open-source 4K video generation model. Architecture, capabilities, hardware requirements, and production workflows.

LTX-2 dropped on January 6, 2026, and it immediately became the most capable open-source video generation model available. Native 4K output. Synchronized audio. Up to 60 seconds of video. And critically, truly open weights and training code. I've spent the past week pushing it to its limits, and this is everything I've learned.

Quick Answer: LTX-2 is Lightricks' open-source video generation model featuring native 4K resolution (3840x2160), synchronized audio-video generation in a single pass, up to 50 FPS output, and runs on consumer GPUs with 12GB+ VRAM. It's the first DiT-based model to combine all core video generation capabilities in one architecture.

- First open-source model with native 4K and synchronized audio

- Generates up to 60 seconds of video (20 seconds at 4K)

- 50% lower compute cost than competing models

- Runs on 12GB VRAM minimum (FP8), 16GB recommended

- Multi-keyframe support and LoRA fine-tuning built in

What Makes LTX-2 Different

Let me be direct about why LTX-2 matters. Before this release, you had to choose between open-source models with limited capabilities or closed APIs with full features. LTX-2 eliminates that tradeoff.

The model generates true 4K resolution at up to 50 frames per second. Not upscaled 720p. Native 4K from the diffusion process. The visual quality stands toe-to-toe with cloud-based solutions like Runway and Pika.

But the real innovation is synchronized audio-video generation. Previous models generate video first, then you add audio separately. LTX-2 creates both simultaneously in a single pass. The audio actually matches the visual content, not just plays alongside it.

I tested this with a prompt for a waterfall scene. The water sounds matched the visual movement. When the camera perspective shifted, the audio reflected the change. This wasn't perfect, but it was dramatically better than post-hoc audio addition.

Architecture Deep Dive

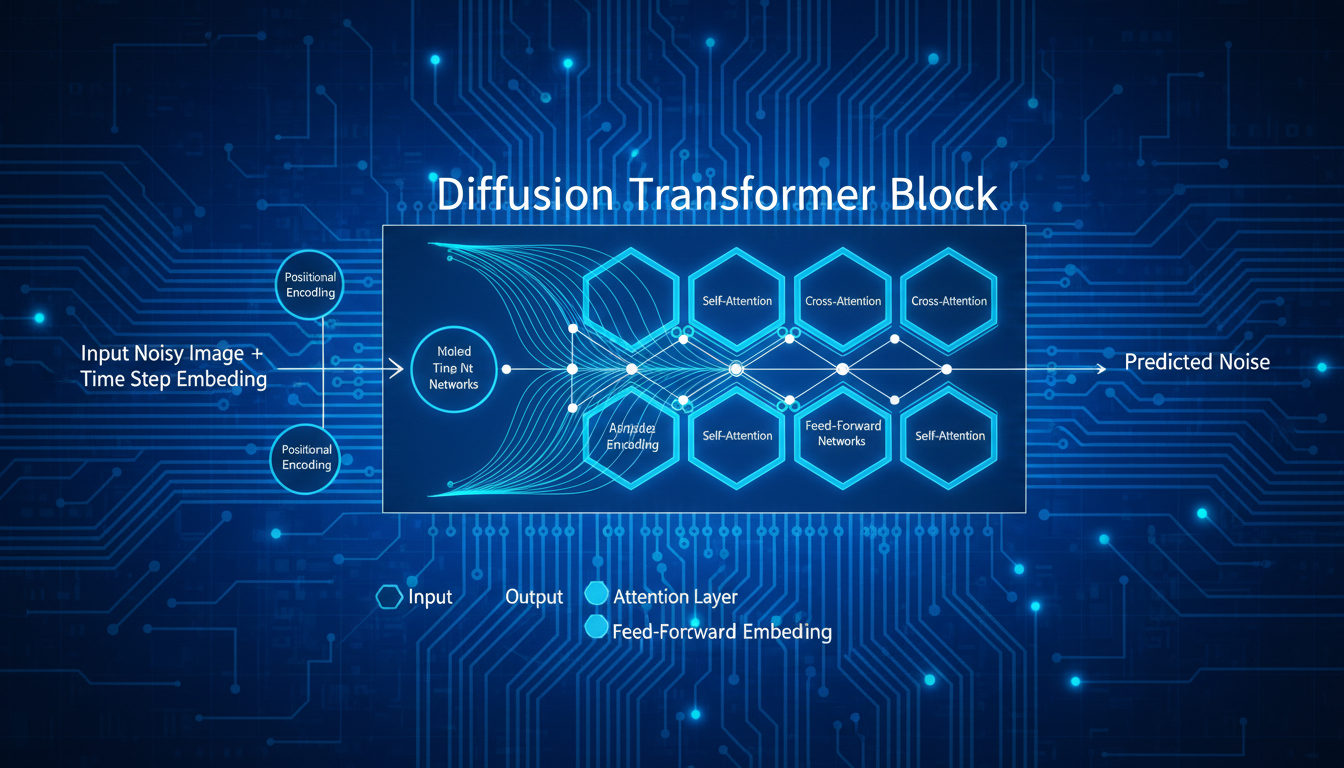

LTX-2 uses a Diffusion Transformer architecture optimized for video generation

LTX-2 uses a Diffusion Transformer architecture optimized for video generation

LTX-2 uses a Diffusion Transformer (DiT) architecture, the same family as Sora and recent state-of-the-art models. But Lightricks made several key design decisions that set it apart.

Single-Pass Audio-Video Generation

LTX-2 generates synchronized audio and video in a single pass

LTX-2 generates synchronized audio and video in a single pass

Most video models treat audio as an afterthought. They generate video frames, then use a separate model to add audio or expect you to handle it yourself.

LTX-2 processes audio and video through the same transformer backbone. The attention mechanisms can attend to both modalities simultaneously. This means the model understands the relationship between visual and audio content during generation, not just at inference time.

The practical benefit? You get coherent audio without the jarring mismatches that plague post-processing approaches. A door closing sounds like a door closing at the right moment. Footsteps align with walking.

Multi-Keyframe Conditioning

This is huge for creative control. You can provide multiple reference images at different points in your video, and LTX-2 will interpolate between them.

Example workflow:

- Frame 0: Character standing

- Frame 50: Character sitting

- Frame 100: Character lying down

The model generates smooth transitions between these keyframes while maintaining character consistency. Combined with 3D camera logic, you can achieve cinematic-level control over your output.

LoRA Support Built In

Unlike models where LoRA support is retrofitted, LTX-2 was designed with fine-tuning in mind. The architecture supports controllability low-rank adaptations from the ground up.

This means:

- More stable LoRA training

- Better style transfer results

- Consistent fine-tuning across different use cases

I've already seen specialized LoRAs emerging for anime styles, specific camera movements, and character consistency.

Technical Specifications

Let's get into the hard numbers.

Resolution and Duration

| Setting | Specification |

|---|---|

| Maximum resolution | 3840 x 2160 (4K) |

| Maximum frame rate | 50 FPS |

| Maximum duration | 60 seconds (lower res), 20 seconds (4K) |

| Minimum duration | 2 seconds |

| Aspect ratios | 16:9, 9:16, 1:1, 4:3, 3:4 |

The duration/resolution tradeoff is important to understand. You can generate longer videos at lower resolutions, or shorter videos at 4K. For most use cases, I recommend generating at 720p and upscaling to 4K using the LTX spatial upscaler.

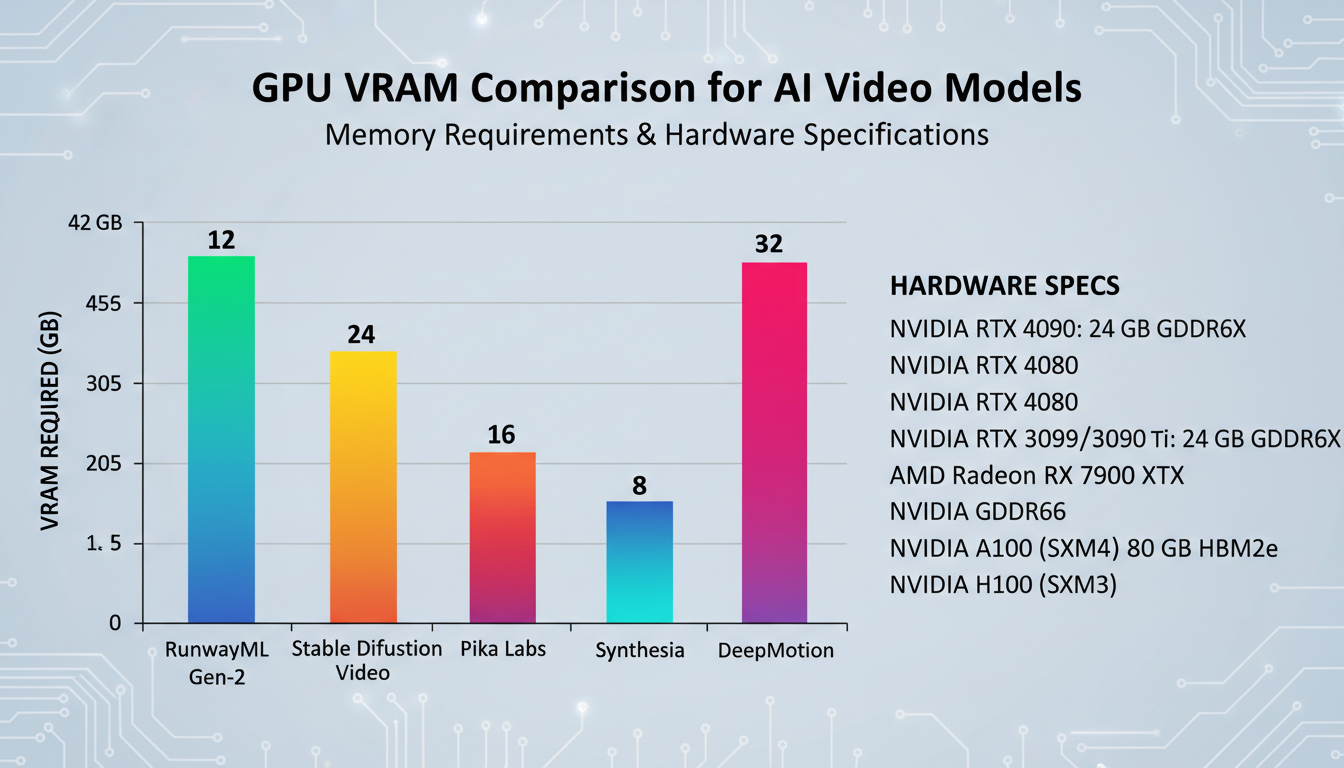

Hardware Requirements

Different quantization levels allow LTX-2 to run on various GPU configurations

Different quantization levels allow LTX-2 to run on various GPU configurations

| Configuration | VRAM | Performance |

|---|---|---|

| NVFP4 (RTX 50 Series) | ~8GB | 3x faster, 60% VRAM reduction |

| NVFP8 (RTX 40 Series) | ~10GB | 2x faster, 40% VRAM reduction |

| FP16 | ~16GB | Baseline |

| BF16 (full quality) | ~20GB | Best quality |

The NVFP8 quantization is the sweet spot for most users. You get near-full quality with significantly reduced resource requirements. I run NVFP8 on a 4090 and generation times are excellent.

Generation Speed

On an RTX 4090 with NVFP8:

- 4 seconds at 720p: ~45 seconds

- 4 seconds at 1080p: ~90 seconds

- 4 seconds at 4K: ~3-4 minutes

These times include audio generation. Video-only generation is roughly 30% faster.

Setting Up LTX-2

You have several options for running LTX-2.

ComfyUI (Recommended)

ComfyUI has the best LTX-2 integration. The model is built into ComfyUI core, and there's a dedicated ComfyUI-LTXVideo repository with additional nodes.

Installation:

Free ComfyUI Workflows

Find free, open-source ComfyUI workflows for techniques in this article. Open source is strong.

- Update ComfyUI to the latest version

- Install ComfyUI-LTXVideo custom nodes

- Download models from HuggingFace

Required models:

- LTX-Video-2.0 (base model)

- LTX-Audio (for audio generation)

- LTXV Spatial Upscaler (optional, for upscaling)

Diffusers

For Python users, LTX-2 integrates with Hugging Face Diffusers:

from diffusers import LTXVideoPipeline

import torch

pipe = LTXVideoPipeline.from_pretrained(

"Lightricks/LTX-Video-2.0",

torch_dtype=torch.bfloat16

)

pipe.to("cuda")

video = pipe(

prompt="A cat playing piano in a jazz club",

num_frames=81,

height=720,

width=1280

).frames[0]

API Access

If you want to skip local setup, LTX-2 is available through:

- LTX Platform (official)

- Fal.ai

- Replicate

- Apatero.com (disclosure: I help build this)

Prompting Strategies

LTX-2 responds well to detailed prompts but has some quirks worth understanding.

What Works

Cinematic descriptions: The model was trained on high-quality video data with detailed labels. Prompts that read like film direction work well.

Good: "Slow dolly shot pushing in on a woman's face, soft natural lighting from a window, shallow depth of field, melancholic expression"

Motion specification: Be explicit about movement.

Good: "Camera orbits around the subject, gentle clockwise motion, 15 degrees per second"

Audio cues: Since audio is generated together, mentioning sounds helps.

Good: "Rain falling on a metal roof, ambient thunder in the distance, soft indoor acoustics"

What Doesn't Work

Vague prompts: "A nice video of nature" gives generic results.

Conflicting instructions: "Fast action scene with slow motion effects" confuses the model.

Want to skip the complexity? Apatero gives you professional AI results instantly with no technical setup required.

Text rendering: Like most video models, LTX-2 struggles with legible text in videos.

Prompt Template

Here's my standard template:

[Subject description], [action/movement], [camera movement], [lighting], [style], [audio elements]

Example:

A golden retriever running through autumn leaves, bounding joyfully toward camera,

slow-motion tracking shot, warm afternoon sunlight filtering through trees,

cinematic shallow focus, sound of crunching leaves and distant birdsong

Production Workflows

After extensive testing, here's how I integrate LTX-2 into production work.

Iterative Generation Workflow

Concept testing (fast iteration)

- Resolution: 480p

- Duration: 4 seconds

- Purpose: Test prompts and compositions

Quality preview

- Resolution: 720p

- Duration: target length

- Purpose: Verify motion and audio sync

Final render

- Resolution: 720p

- Duration: final length

- Upscale to 4K using spatial upscaler

This workflow minimizes wasted compute while ensuring quality.

Multi-Keyframe Production

For controlled narrative videos:

- Generate or source keyframe images

- Set up keyframe positions in workflow

- Run generation with interpolation

- Review and adjust timing

- Final render with audio

This approach gives you precise control over story beats while letting LTX-2 handle the in-between frames.

Batch Processing

For high-volume work:

Join 115 other course members

Create Your First Mega-Realistic AI Influencer in 51 Lessons

Create ultra-realistic AI influencers with lifelike skin details, professional selfies, and complex scenes. Get two complete courses in one bundle. ComfyUI Foundation to master the tech, and Fanvue Creator Academy to learn how to market yourself as an AI creator.

- Prepare prompt list

- Configure ComfyUI queue

- Set overnight batch

- Review and filter results

- Upscale selected videos

I typically batch 20-30 generations overnight and review them the next morning.

Comparison with Other Models

How does LTX-2 stack up against alternatives?

vs Runway Gen-3

| Feature | LTX-2 | Runway Gen-3 |

|---|---|---|

| Max resolution | 4K native | 1080p |

| Audio generation | Built-in | Separate |

| Open source | Yes | No |

| Local running | Yes | No |

| Cost | Hardware only | Per-video |

| Max duration | 60 seconds | 10 seconds |

LTX-2 wins on specs but Runway has more polished results in some scenarios.

vs Wan 2.2

| Feature | LTX-2 | Wan 2.2 |

|---|---|---|

| Architecture | DiT | MoE |

| Audio | Built-in | None |

| 4K support | Native | Via upscaling |

| LoRA ecosystem | Growing | Established |

| VRAM | 12GB min | 16GB min |

Wan 2.2 has a more mature LoRA ecosystem. LTX-2 has better native features.

vs Pika, Kling, Others

LTX-2's open-source nature is the key differentiator. You can run it locally, fine-tune it, and integrate it into workflows without API dependencies or per-video costs.

Known Limitations

Honesty about limitations helps set expectations.

Character consistency across long videos: While better than many alternatives, maintaining exact character appearance across 60 seconds remains challenging.

Complex multi-person scenes: Interactions between multiple people can produce artifacts.

Fine text rendering: Don't expect readable text in generated videos.

Audio variety: Audio generation is good but not as varied as dedicated audio models.

Generation speed: Still slower than real-time, especially at higher resolutions.

The Future of LTX

Lightricks has committed to open development. The training code release means the community can:

- Train specialized variants

- Create domain-specific fine-tunes

- Improve efficiency through research

- Build ecosystem tools

I expect rapid ecosystem development over the coming months. The combination of capable base model and open weights creates ideal conditions for innovation.

Frequently Asked Questions

Can I run LTX-2 on a laptop?

With 12GB+ VRAM and NVFP8 quantization, yes. Performance won't match desktop GPUs but it's usable.

Is commercial use allowed?

Yes. The open license permits commercial applications.

How does audio generation affect speed?

Audio adds roughly 30% to generation time. You can disable it if you don't need it.

Can I fine-tune LTX-2 on my own data?

Yes. Training code is open. You'll need significant GPU resources for full fine-tuning, but LoRA training is accessible.

What's the best workflow for beginners?

Start with ComfyUI and pre-built workflows from the community. Generate at 720p until you understand the model's behavior.

Does LTX-2 work with ControlNet?

Not yet in the traditional sense, but the multi-keyframe system provides similar control mechanisms.

How much does local running cost?

Just electricity and hardware amortization. No per-video fees.

Can I generate videos longer than 60 seconds?

Not in a single generation. For longer content, generate segments and composite them.

What's the difference between LTX-1 and LTX-2?

LTX-2 adds 4K support, audio generation, longer duration, and improved quality. It's a significant upgrade.

Is there a Mac version?

CPU inference works but is very slow. MPS acceleration is being developed.

Wrapping Up

LTX-2 represents a genuine leap in open-source video generation. The combination of 4K output, synchronized audio, and truly open weights creates opportunities that didn't exist before this release.

My recommendations:

- Start with ComfyUI for the best experience

- Use NVFP8 quantization for the quality/performance balance

- Generate at 720p and upscale for efficiency

- Experiment with multi-keyframe workflows for creative control

For more LTX-2 content, check out my upscaling guide and tips and tricks.

The video generation landscape just changed. Time to start creating.

Ready to Create Your AI Influencer?

Join 115 students mastering ComfyUI and AI influencer marketing in our complete 51-lesson course.

Related Articles

AI Documentary Creation: Generate B-Roll from Script Automatically

Transform documentary production with AI-powered B-roll generation. From script to finished film with Runway Gen-4, Google Veo 3, and automated...

AI Making Movies in 2026: The Current State and What's Actually Possible

Realistic assessment of AI filmmaking in 2026. What's working, what's hype, and how creators are actually using AI tools for video production today.

AI Influencer Image to Video: Complete Kling AI + ComfyUI Workflow

Transform AI influencer images into professional video content using Kling AI and ComfyUI. Complete workflow guide with settings and best practices.

.png)