Generate Clean Spritesheets in ComfyUI for Game Development (2025)

Stop manually cutting frames. Learn how to generate production-ready sprite sheets in ComfyUI with consistent character poses and clean transparency.

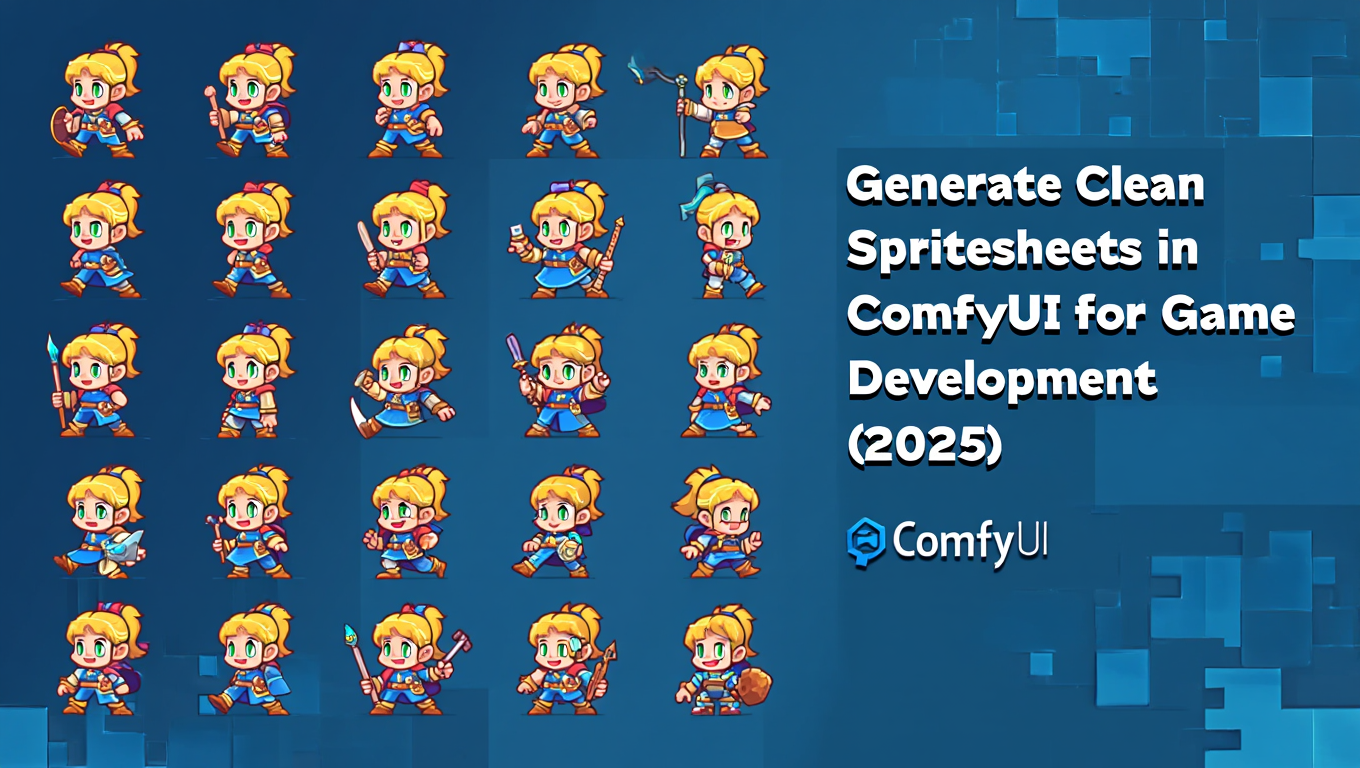

Your indie game needs a character with eight-direction movement, four-frame walk cycles, and consistent visual style across all 32 sprites. The traditional approach involves commissioning an artist for several hundred dollars and waiting weeks, or spending hours in Aseprite drawing frames yourself if you have the skills. Or you try AI and get wildly inconsistent results, because it generates each sprite as a completely separate image with zero frame-to-frame coherence.

Game developers figured out how to make AI sprite generation actually usable about six months ago. The technique isn't obvious and search results still point to outdated manual methods. If you know the workflow, you can generate a complete, consistent spritesheet in about an hour including iteration time.

Quick Answer: Generating clean spritesheets in ComfyUI requires training a character LoRA for consistency, using batch generation with carefully structured prompts for each pose, a background removal node (Rembg or SAM-based segmentation) for clean transparency, and an image grid node or a custom Python script to arrange frames into a proper spritesheet layout. The key is separating character consistency (LoRA), pose variation (prompting), and post-processing (background removal and arrangement) into distinct workflow steps rather than trying to generate the final spritesheet in one pass.

- Character LoRA training is essential for consistent sprites across all frames

- Batch generation with pose-specific prompts works better than trying to generate full sheets at once

- Background removal and transparency handling require dedicated nodes

- Grid arrangement should be automated through ComfyUI nodes rather than manual editing

- The workflow is reusable once built - new characters need only LoRA retraining

Why Normal AI Generation Fails for Spritesheets

The problems are predictable. Generate eight images of your character facing different directions and you get eight variations of a character concept rather than one character in eight poses. Height changes, face structure shifts, outfit details drift. You can't use inconsistent sprites in a game because players notice immediately when their character morphs while walking.

The second problem is background. AI image generation produces complete scenes by default. Your character standing on grass, indoors on wood floors, in front of textured walls. Spritesheets need transparent backgrounds so the game engine can composite sprites over the game environment. Manual background removal for 32+ sprites is tedious and error-prone.

The third problem is layout. Spritesheets follow specific grid structures where game engines expect to find frames at predictable positions. Row one is walk cycle facing north, row two is facing northeast, and so on. Getting AI-generated frames into this structure traditionally meant manual composition in image editors, defeating the automation benefit.

The solution isn't a single node or setting. It's a complete workflow that handles consistency, background removal, and arrangement as separately addressed problems. Once built, this workflow becomes a template you customize for each game character rather than a problem you solve from scratch every time.

Platforms like Apatero.com have started offering spritesheet generation as a packaged service specifically because the workflow complexity deters individual developers, even though the underlying technology works well when properly configured.

The Character Consistency Foundation

Everything starts with a LoRA trained on your specific character. This isn't optional for production-quality spritesheets. You need the AI to generate the exact same character in every frame.

The reference images for sprite character LoRAs differ from typical character LoRA training sets. You want a consistent depiction of the character across simple, clean images from multiple angles, ideally in sprite or pixel art style if that's your target aesthetic. Around 15-20 reference images work well: front, back, three-quarter views, and side profiles, all showing the same character design.

These references can be AI-generated and curated. Generate 50 variations of your character concept in sprite art style using your base model. Select the 15-20 that best match your vision and maintain consistency. Train your LoRA on this curated set. The training reinforces the consistent features across the selection.

Training parameters for sprite character LoRAs should prioritize feature stability over flexibility. You want rigid adherence to the character design, not creative interpretation. Use a learning rate around 0.0002 and train for 15-20 epochs on your reference set. Preview generations during training: if the character features are solid and consistent by epoch 12-15, you're on track.

The trained LoRA becomes your character identity. Every sprite generation uses this LoRA at strength 1.0-1.2 to lock in the character's appearance. Prompts control pose and angle, the LoRA controls identity. This separation is what makes consistent spritesheets possible.

Name your LoRAs clearly by character and project. "RPG_Warrior_Character_01" tells you what it is six months later when you need to generate additional poses. Version your LoRAs if you refine the character design mid-project. "RPG_Warrior_v2" after design revisions keeps your workflow organized.

Batch Generation Strategy for Poses

Generating sprites one at a time is tedious, but generating them as a structured batch is where ComfyUI excels. The key is systematic prompt variation that covers all required poses while maintaining character consistency through your LoRA.

Prompt structure template for sprite generation looks like this: "[character description with LoRA], [pose description], [angle description], simple background, sprite art style, clean lines, [your quality tags]"

The character description stays constant and is reinforced by your LoRA. The pose and angle descriptions change for each sprite. "standing idle pose" versus "walking forward, mid-stride" versus "running, both feet off ground." Each describes a specific frame you need.

Angle descriptions match your spritesheet requirements. "facing camera directly" for south-facing sprites, "three-quarter view facing left" for southwest, "profile view facing left" for west, and so on. Be explicit because subtle angle differences matter for sprite coherence.
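
The prompt template can be generated rather than typed out 32 times. This is a minimal sketch assuming an 8-direction, 4-frame walk cycle; the trigger word `rpgwarrior`, the N/NE/E/SE/NW angle phrases and the walk-frame phrases are illustrative placeholders (only the S, SW and W phrases come from the text above), so tune them for your model and LoRA.

```python
from itertools import product

# Hypothetical LoRA trigger word plus a fixed character description.
CHARACTER = "rpgwarrior, young knight in blue tabard"
QUALITY = "simple background, sprite art style, clean lines, full body"

# Angle phrase per compass direction, in sheet row order.
# S, SW and W follow the article; the rest are assumptions to tune.
ANGLES = {
    "N": "back view facing away from camera",
    "NE": "three-quarter back view facing right",
    "E": "profile view facing right",
    "SE": "three-quarter view facing right",
    "S": "facing camera directly",
    "SW": "three-quarter view facing left",
    "W": "profile view facing left",
    "NW": "three-quarter back view facing left",
}

# One pose phrase per walk-cycle frame (illustrative).
WALK_FRAMES = [
    "walking, left foot forward, mid-stride",
    "walking, feet passing, weight on left leg",
    "walking, right foot forward, mid-stride",
    "walking, feet passing, weight on right leg",
]


def build_prompts(character=CHARACTER, angles=ANGLES, frames=WALK_FRAMES, quality=QUALITY):
    """Return (name, prompt) pairs: character text is constant, pose and angle vary."""
    jobs = []
    for direction, (i, pose) in product(angles, enumerate(frames, start=1)):
        name = f"Character_Walk_{direction}_Frame_{i:02d}"
        prompt = f"{character}, {pose}, {angles[direction]}, {quality}"
        jobs.append((name, prompt))
    return jobs


if __name__ == "__main__":
    for name, prompt in build_prompts()[:3]:
        print(name, "->", prompt)
```

Frames come out direction by direction (all N frames, then NE, and so on), which is the row order the grid step expects, and each name can double as the output filename prefix.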

ComfyUI's batch processing lets you queue multiple generations with different prompts. Some custom nodes add CSV or text file prompt loading, letting you prepare all 32 sprite prompts in a text file and batch process them automatically. The workflow runs through each prompt, generates with your character LoRA, outputs individual sprite frames.

Consistency tricks beyond the LoRA include seed control and parameter locking. Use the same seed for related poses (the frames of one walk cycle) so that only the pose description changes between them. Lock sampling parameters (steps, CFG, sampler, resolution) so generation quality stays consistent across all frames. You want consistency within pose sets, not creative variation.
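
Outside the UI, the same batch can be queued through ComfyUI's HTTP API, which accepts a workflow exported in API format as a POST to `/prompt`. This sketch locks the sampler settings by touching only the prompt text, seed and filename prefix, and gives every frame of one animation set the same seed. The node ids `"3"`, `"6"` and `"9"` and the base seed are assumptions; open your own API-format export to find the right ids.

```python
import copy
import json
import urllib.request

COMFY_URL = "http://127.0.0.1:8188/prompt"  # default address of a local ComfyUI server

# Node ids in an API-format workflow export. They match ComfyUI's default
# text-to-image graph but are assumptions: check the ids in your own export.
POSITIVE_NODE = "6"  # CLIPTextEncode holding the positive prompt
SAMPLER_NODE = "3"   # KSampler
SAVE_NODE = "9"      # SaveImage


def plan_jobs(jobs, base_seed=1234):
    """Attach a seed to each (name, prompt) pair.

    All frames of one animation set (e.g. every Character_Walk_N_Frame_xx)
    share a seed; each new set gets the next seed number.
    """
    seeds = {}
    plan = []
    for name, prompt in jobs:
        animation_set = name.rsplit("_Frame_", 1)[0]
        seed = seeds.setdefault(animation_set, base_seed + len(seeds))
        plan.append((name, prompt, seed))
    return plan


def patch_workflow(base, prompt, seed, prefix):
    """Return a copy of the workflow with one frame's prompt, seed and filename prefix.

    Steps, CFG, sampler and resolution are left untouched, so every frame
    is sampled with identical parameters.
    """
    wf = copy.deepcopy(base)
    wf[POSITIVE_NODE]["inputs"]["text"] = prompt
    wf[SAMPLER_NODE]["inputs"]["seed"] = seed
    wf[SAVE_NODE]["inputs"]["filename_prefix"] = prefix
    return wf


def queue_prompt(workflow, url=COMFY_URL):
    """POST one workflow to ComfyUI's /prompt endpoint and return the JSON reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    req = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```

With the server running, a loop such as `for name, prompt, seed in plan_jobs(jobs): queue_prompt(patch_workflow(base, prompt, seed, name))` queues every frame, where `base` is your exported workflow loaded with `json.load`.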

Frame-by-frame generation rather than trying to generate the complete spritesheet gives you quality control. If one frame generates poorly, you regenerate just that frame without redoing everything. This iterative refinement is impossible if you're generating the full sheet at once.

Once background removal (covered in the next step) is added, the batch workflow outputs 32 individual image files with transparent backgrounds. These become your sprite frames, ready for arrangement into the final spritesheet grid.

A typical batch generation workflow includes these nodes:

- Checkpoint loader with your chosen model: Anime or cartoon models work best for sprite art aesthetics

- LoRA loader with your character LoRA: Applied at strength 1.0-1.2

- Prompt loader node: Loads your list of pose/angle prompts

- Batch processor: Iterates through prompts with consistent sampling parameters

- Save image node: Outputs each frame as individual file with systematic naming

Background Removal and Transparency

Sprites need clean transparency, which means background removal is a critical workflow step. ComfyUI has several approaches with different quality tradeoffs.

Rembg node is the most straightforward background removal tool. It's fast, integrates easily into workflows, and produces acceptable results for most sprite work. Add the Rembg node between your generation output and save node. It automatically removes backgrounds and outputs PNG with transparency. Quality is good enough for indie game sprites where pixel-perfect edges aren't critical.

SAM-based segmentation offers better edge quality when character boundaries are important. Use SAM2 or SAM3 Lite to segment the character precisely, then use that mask to extract the character onto a transparent background. It's a more complex workflow but gives higher quality edges, especially around hair, clothing details, and curved surfaces.

Color-based removal works if you consistently generate characters against the same colored background. Generate on a white background (via prompting), use color threshold nodes to remove the white, and output transparency. It's fast and simple, but it fails on white clothing elements and doesn't handle edge quality as well as AI segmentation.
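
Color-keying is simple enough to sketch without any ComfyUI node. The function below works on a flat list of RGBA tuples, the shape Pillow's `Image.getdata()` returns for an RGBA image (the result can be written back with `Image.putdata()`); the white key color and the tolerance of 12 are assumptions to tune.

```python
def color_key(pixels, key=(255, 255, 255), tolerance=12):
    """Make every pixel within `tolerance` of the key colour fully transparent.

    pixels: iterable of (r, g, b, a) tuples. A pixel is keyed out only when
    all three colour channels are within `tolerance` of the key; its alpha
    becomes 0 and its colour values are kept.
    """
    keyed = []
    for r, g, b, a in pixels:
        near_key = all(abs(c - k) <= tolerance for c, k in zip((r, g, b), key))
        keyed.append((r, g, b, 0 if near_key else a))
    return keyed
```

The weakness described above shows up directly: an off-white clothing pixel such as (250, 248, 252) is keyed out exactly like the background. Prompting for a saturated background such as pure magenta and passing `key=(255, 0, 255)` avoids most collisions with clothing colors.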

The choice depends on your quality requirements and how close-up players see your sprites. Pixel art style games where sprites are small on screen can use Rembg without issues. HD 2D games where sprites are large and detailed benefit from SAM segmentation quality.

Edge cleanup matters for professional results. AI background removal sometimes leaves slight halos or rough edges. Some workflows add a slight edge erosion followed by a gentle feather to clean edges without losing important detail. The Image Filter nodes in ComfyUI can handle this post-processing.
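
A sketch of erode-then-feather on a plain 2D list of alpha values (0-255). This is a hand-rolled min filter and box blur for illustration, not the implementation of any particular ComfyUI node; with Pillow, `ImageFilter.MinFilter(3)` followed by `ImageFilter.BoxBlur(1)` on the alpha channel is a comparable pair.

```python
def _window(alpha, y, x, radius):
    """Alpha values in the (2*radius+1)^2 neighbourhood, clipped at the image border."""
    h, w = len(alpha), len(alpha[0])
    return [
        alpha[yy][xx]
        for yy in range(max(0, y - radius), min(h, y + radius + 1))
        for xx in range(max(0, x - radius), min(w, x + radius + 1))
    ]


def erode(alpha, radius=1):
    """Min filter: shrink the opaque region by `radius` px to cut off halo fringes."""
    return [[min(_window(alpha, y, x, radius)) for x in range(len(row))]
            for y, row in enumerate(alpha)]


def feather(alpha, radius=1):
    """Box blur: replace each alpha value with its neighbourhood average to soften edges."""
    out = []
    for y, row in enumerate(alpha):
        out_row = []
        for x in range(len(row)):
            values = _window(alpha, y, x, radius)
            out_row.append(round(sum(values) / len(values)))
        out.append(out_row)
    return out
```

For pixel art, skip the feather step: it introduces partially transparent edge pixels, which crisp pixel art usually avoids.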

Batch process background removal for all your generated frames. Don't remove backgrounds manually in external editors. Keep everything in ComfyUI so the entire workflow is repeatable. When you need to regenerate frames or create new character variations, one workflow run handles everything from generation through transparency.

Output naming convention should indicate frame purpose. "Character_Walk_N_Frame_01.png" tells you this is first frame of north-facing walk cycle. Systematic naming makes the next step (grid arrangement) much easier.

Arranging Frames into Spritesheet Grids

Individual transparent sprites need arrangement into the grid layout your game engine expects. This is where many workflows break down into manual composition, but ComfyUI can automate it.

Image Grid node takes multiple images and arranges them into a specified grid pattern. Feed it your 32 individual sprite frames, specify 4 columns by 8 rows for a one-row-per-direction layout (or whatever your layout requires), and it outputs a single spritesheet image with proper spacing. This node is often called "Image Batch to Grid" or similar in different custom node packs.

Grid configuration includes spacing between frames and padding around edges. Sheets are usually packed either directly adjacent (0px spacing) or with minimal spacing (2-4px); the gap helps keep texture filtering from bleeding pixels of one frame into its neighbor. Set these values in the grid node to match your engine's slicing settings. Include consistent padding on all edges if your engine needs it.
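
The spacing and padding arithmetic decides both the final sheet size and the slicing offsets you enter in the engine, so it is worth writing down. A sketch, assuming frames are filled left to right, top to bottom, with 4 columns (walk frames) by 8 rows (directions) and 64x64 frames chosen purely as an example:

```python
def sheet_size(cols, rows, frame_w, frame_h, spacing=0, padding=0):
    """Sheet size = frames + gaps between frames + padding on both edges."""
    width = 2 * padding + cols * frame_w + (cols - 1) * spacing
    height = 2 * padding + rows * frame_h + (rows - 1) * spacing
    return width, height


def frame_origin(index, cols, frame_w, frame_h, spacing=0, padding=0):
    """Top-left pixel of frame `index` in row-major order."""
    row, col = divmod(index, cols)
    return padding + col * (frame_w + spacing), padding + row * (frame_h + spacing)


if __name__ == "__main__":
    print(sheet_size(4, 8, 64, 64))                          # (256, 512)
    print(sheet_size(4, 8, 64, 64, spacing=2, padding=1))    # (264, 528)
    print(frame_origin(5, 4, 64, 64, spacing=2, padding=1))  # (67, 67)
```

The same four numbers (frame size, spacing, padding, columns) are what engine slicing dialogs ask for, so keeping them in one place avoids off-by-a-few-pixels slicing errors.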

Frame ordering matters critically. The grid node arranges images based on input order, so your batch generation output needs to be in the correct sequence for the grid arrangement to match game engine expectations. Systematic file naming lets sorted order map to grid positions, with two caveats. "Frame_01" through "Frame_32" sort correctly, but "Frame_1" through "Frame_32" sort as Frame_1, Frame_10, Frame_11, ... Frame_2 under plain alphabetical sorting. And direction codes sort alphabetically (E, N, NE, ...) rather than in compass order, so prefix a row number or sort by an explicit direction list.
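
The ordering pitfall is easy to demonstrate: Python's plain `sorted` puts `Frame_10` before `Frame_2`, and it orders compass codes alphabetically. The helpers below are a sketch assuming the `Character_Walk_N_Frame_01.png` naming pattern from the background removal section; `DIRECTION_ORDER` is an assumption that should match your engine's row order.

```python
import re

DIRECTION_ORDER = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def frame_name(character, animation, direction, frame, digits=2):
    """Build a zero-padded name such as Character_Walk_N_Frame_01.png."""
    return f"{character}_{animation}_{direction}_Frame_{frame:0{digits}d}.png"


def natural_key(name):
    """Compare digit runs as numbers, so Frame_2 sorts before Frame_10.

    re.split with a capturing group alternates text and digit runs, so text
    is only ever compared with text and numbers with numbers.
    """
    return [int(part) if part.isdigit() else part for part in re.split(r"(\d+)", name)]


def grid_key(name):
    """Sort by compass row, then frame number (alphabetical order gives E, N, NE, ...)."""
    match = re.search(r"_([NSEW]{1,2})_Frame_(\d+)", name)
    if match is None:
        raise ValueError(f"unexpected frame name: {name}")
    return DIRECTION_ORDER.index(match.group(1)), int(match.group(2))
```

Sorting the frame list with `key=grid_key` before feeding the grid node makes the input order match the row-per-direction layout regardless of how the file system lists the files.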

Some sprite workflows use multiple grid nodes to create separate sheets for different animation sets. Walking animations on one sheet, attack animations on another. This lets you manage game engine asset loading more efficiently. Generate all poses, segment by animation type, create multiple grids from the segmented batches.

Custom grid scripts in Python provide maximum control if the standard grid nodes don't match your requirements. ComfyUI can execute custom Python nodes, and sprite grid arrangement is straightforward Python imaging code. Load individual sprites, calculate positions based on index and grid dimensions, composite onto canvas with transparency. This approach handles edge cases like variable frame counts per animation or non-rectangular grid layouts.
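
A dependency-free sketch of that approach, including rows of different lengths (one row per animation, as long as that animation's frame count). Frames are 2D lists of `(r, g, b, a)` tuples to keep the example self-contained; with Pillow you would create the canvas with `Image.new("RGBA", size, (0, 0, 0, 0))` and place frames with `Image.paste`. The animation names and frame counts are illustrative.

```python
TRANSPARENT = (0, 0, 0, 0)


def ragged_layout(animations, frame_w, frame_h):
    """Lay out one row per animation; rows may hold different frame counts.

    animations: list of (name, frame_count). Returns (sheet_w, sheet_h, positions),
    where positions maps (name, frame_index) -> (x, y) of the frame's top-left pixel.
    """
    positions = {}
    for row, (name, count) in enumerate(animations):
        for i in range(count):
            positions[(name, i)] = (i * frame_w, row * frame_h)
    sheet_w = max(count for _, count in animations) * frame_w
    sheet_h = len(animations) * frame_h
    return sheet_w, sheet_h, positions


def composite(sheet_w, sheet_h, placements):
    """Copy RGBA frames onto a fully transparent canvas.

    placements: list of ((x, y), frame). Cells never overlap, so copying the
    pixels (alpha included) is enough; unused cells stay transparent.
    """
    canvas = [[TRANSPARENT] * sheet_w for _ in range(sheet_h)]
    for (x, y), frame in placements:
        for dy, row in enumerate(frame):
            canvas[y + dy][x:x + len(row)] = row
    return canvas
```

Cells past the end of a shorter row simply stay transparent, which is what variable frame counts per animation need.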

The automated grid arrangement means your complete workflow goes from character concept to finished spritesheet without leaving ComfyUI or manually touching Photoshop. This repeatability is what makes AI sprite generation practical for actual game development instead of just generating one-off assets.

Optimizing for Different Sprite Styles

The workflow adapts for different aesthetic requirements but core principles remain constant.

Pixel art sprites require generating at an appropriate resolution and then downscaling with proper pixel art algorithms. Generate at a resolution your model handles well that is an integer multiple of your target size (for example, 512x512 for a 32x32 or 64x64 sprite), using pixel art style prompts and specialized pixel art models, then downscale using nearest-neighbor or specialized pixel art scaling algorithms. Direct generation at tiny sizes like 32x32 produces poor quality.
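
A sketch of the nearest-neighbor step on a 2D list of pixels, assuming the generated image is an exact integer multiple of the target (512x512 down to 32x32 is a factor of 16). It samples the center pixel of each block, which tolerates slightly soft block edges better than always taking the top-left corner; with Pillow, `img.resize((w // f, h // f), Image.Resampling.NEAREST)` does the same job.

```python
def nearest_downscale(pixels, factor):
    """Downscale a 2D pixel grid by an integer factor with nearest-neighbour sampling.

    Keeps the centre pixel of each factor x factor block and never blends,
    so no new colours are introduced.
    """
    height, width = len(pixels), len(pixels[0])
    if height % factor or width % factor:
        raise ValueError(f"{width}x{height} is not a multiple of {factor}")
    offset = factor // 2
    return [row[offset::factor] for row in pixels[offset::factor]]
```

Because nothing is averaged, the palette of the downscaled sprite is a subset of the generated one, which makes any follow-up palette cleanup in a pixel art editor predictable.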

HD 2D sprites for modern engines with high-resolution sprite support can generate at 512x512 or higher. These benefit most from SAM-based background removal for clean edges. The character LoRA becomes even more critical at high resolution because inconsistent details are more visible.

Chibi or super-deformed styles need reference datasets specifically in that style for LoRA training. The proportion differences from normal anatomy are significant enough that LoRAs trained on realistic proportions struggle to generate good chibi sprites. Curate chibi-style references, train chibi-specific LoRA, use chibi-focused base models if available.

Top-down versus side-view sprites have different perspective requirements that need different prompt engineering. Top-down ("bird's eye view, overhead perspective") requires specific angle language. Side-view ("profile view, side perspective") is more natural for most models. Train your LoRA on references matching your required perspective.

Animation frame counts vary by movement type. Idle animations might need only 2-4 frames, walk cycles typically 4-8 frames, attack animations could need 6-12 frames. Build your workflow to handle variable frame counts per animation row rather than forcing everything to the same count. This saves generation time and spritesheet file size.

The style variations don't change the workflow structure, they change specific parameters and model choices within the established workflow. Consistency layer (LoRA) plus variation layer (prompts) plus post-processing (background removal and grid arrangement) works across all sprite styles.

Handling Common Sprite Generation Problems

Even with proper workflow, specific issues recur. Here's how to solve them systematically.

Pose inconsistency between similar frames, like walk cycle frames that don't flow smoothly, means your prompts aren't specific enough or seed variation is too high. For animation frames that should flow, use nearly identical prompts with only slight changes to the pose description. Keep the seed fixed across the frames of one animation instead of randomizing it per frame; consecutive seed numbers do not produce related images. Consider using ControlNet with pose skeletons to force exact pose sequences.

Character details drifting despite LoRA suggests LoRA strength is too low or competing style modifiers in prompts are overriding it. Increase LoRA strength to 1.3-1.5. Remove or reduce weight on artist tags or style modifiers that might conflict with character identity. Simplify negative prompts that might be suppressing character features.

Background removal leaving artifacts around edges usually means the segmentation or color removal didn't cleanly separate character from background. For Rembg, try generating characters on more contrasting backgrounds. For color-based removal, use more extreme background colors. For SAM, verify your segmentation is tight around character boundaries. Add edge cleanup post-processing.

Sprite proportions not matching across angles means your LoRA training set didn't have enough angle variety or your prompts aren't maintaining consistent size language. Add "full body visible, standing, same height in every frame" type prompts to enforce consistency. Retrain LoRA with more angle variety if problem persists.

Grid arrangement placing frames in wrong positions traces to file naming or node configuration. Verify your frame files sort correctly in the order the grid node receives them. Check grid node settings match your intended layout dimensions. Test with a small batch first (4 frames, 2x2 grid) to verify arrangement logic before processing full spritesheet.

Generated sprites don't match game's existing art style means your base model, LoRA, or prompts aren't aligned with target aesthetic. This is the hardest problem to fix because it requires potentially changing models or completely retraining LoRAs. Study existing game assets closely, identify what specific visual characteristics define the style, translate those into prompt language or seek models trained on similar aesthetics.

The debugging approach is always to isolate which workflow stage is failing. Generate frames manually to verify prompts work. Test background removal on a single frame. Verify grid arrangement with test images before processing actual sprites. Systematic isolation finds problems faster than regenerating complete sheets and hoping.

Workflow Templates for Specific Game Types

Different game genres need different sprite configurations. Here are starting templates.

RPG Character Spritesheet typically needs 8-direction movement (N, NE, E, SE, S, SW, W, NW), 4-frame walk cycle per direction, plus idle poses. That's 32 walk frames plus 8 idle frames. Organize as 8 rows (one per direction) with 4-5 columns (walk frames plus idle). Character identity consistency is critical because players look at these sprites for hours.
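
This layout maps cleanly to row and column indices. The sketch assumes compass order N through NW for rows and puts the idle frame in a fifth column after the four walk frames; both are choices to adapt, not requirements. The `godot_frame` helper assumes Godot's Sprite2D convention of numbering frames row by row across `hframes` columns.

```python
DIRECTIONS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]   # one row each
COLUMNS = ["walk_1", "walk_2", "walk_3", "walk_4", "idle"]  # one column each


def cell(direction, column):
    """(row, col) of a frame in the 8-row x 5-column sheet."""
    return DIRECTIONS.index(direction), COLUMNS.index(column)


def godot_frame(direction, column):
    """Row-major frame index, as for a Sprite2D with hframes=5, vframes=8."""
    row, col = cell(direction, column)
    return row * len(COLUMNS) + col


if __name__ == "__main__":
    print(len(DIRECTIONS) * len(COLUMNS))  # 40 frames: 32 walk + 8 idle
    print(cell("NE", "idle"))              # (1, 4)
    print(godot_frame("NE", "idle"))       # 9
```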

Fighting Game Character requires extensive frame counts. Standing, crouching, jumping, various attacks, hit reactions, victory/defeat poses. Easily 100+ frames per character. Break these into multiple sheets by action type. Standing/walking/jumping on sheet one, light attacks on sheet two, heavy attacks on sheet three, and so on. The grid arrangement becomes more complex but organization prevents unwieldy single sheets.

Platformer Character needs fewer directions (usually just left and right) but more detailed animations. Run cycle, jump, fall, land, attack while jumping, wall slide, etc. 40-60 frames covering all movement states. Two rows (left and right facing) with multiple columns per animation. The LoRA training should especially focus on maintaining silhouette consistency since platformer sprites are often viewed as silhouettes.

Top-Down Action Game sprites need 4 or 8 directions of facing, multiple action animations (walk, attack, item use, hurt), potentially equipment variations. 60-80 frames common. The perspective being overhead changes how you prompt for angles. "Overhead view, top-down perspective, character facing north" becomes the angle description pattern.

Simulation Game Citizens need variety within consistency. You're generating multiple different citizens, but each should feel cohesive with the game's art style. Train one LoRA on the general style, use prompt variation to create different citizens rather than training separate LoRAs per citizen. Simpler workflow for quantity over individual character detail.

The template approach means you build the workflow once per game type, then customize prompts and character LoRAs for specific games within that type. Don't rebuild from scratch for every new character or project. Maintain a library of workflow templates for the game types you develop.

Services like Apatero.com maintain these workflow templates internally and let you specify game type and requirements rather than building workflows yourself, translating game dev needs directly to sprite generation without the technical workflow construction.

Integration with Game Engines

The generated spritesheets need to work with your actual game engine. Different engines have different requirements.

Unity uses sprite slicing where you import the spritesheet, define grid parameters, and Unity splits it into individual sprites for animation. Your ComfyUI output should match Unity's expected format - consistent spacing, power-of-two texture dimensions if targeting mobile. 1024x1024 or 2048x2048 final spritesheet sizes work well. Unity handles transparency automatically from PNG alpha channel.
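
If the arranged sheet comes out at an odd size, one option is to pad it with transparent space up to the next power of two on the right and bottom edges, which leaves every frame's position unchanged. A minimal sketch; whether you also need square textures depends on your target platform and compression format.

```python
def next_pow2(n):
    """Smallest power of two >= n, for n >= 1."""
    return 1 << (n - 1).bit_length()


def pow2_canvas(width, height, square=False):
    """Padded canvas size for power-of-two targets (pad right/bottom only)."""
    w, h = next_pow2(width), next_pow2(height)
    if square:
        w = h = max(w, h)
    return w, h


if __name__ == "__main__":
    print(pow2_canvas(264, 528))               # (512, 1024)
    print(pow2_canvas(264, 528, square=True))  # (1024, 1024)
```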

Godot has similar sprite slicing but can also use pre-split sprite collections. The grid arrangement from ComfyUI works directly for Godot's automatic slicing. Define frames per animation in Godot's animation editor, and it reads the spritesheet row by row. Godot prefers transparent PNG, which your workflow already outputs.

GameMaker expects sprites either as separate files or properly formatted spritesheets. The grid arrangement must match GameMaker's import expectations exactly. Consistent frame dimensions, optional spacing based on your settings. GameMaker handles transparency but verify alpha blending is configured correctly for your sprite import.

Unreal Engine for 2D games (Paper2D plugin) needs sprite atlases that can be generated from your spritesheet output. The spacing and padding are critical because Unreal's texture atlas packer is particular about formats. Test a small spritesheet early to verify your workflow output is compatible with Unreal's import process.

Custom engines or frameworks have wildly varying requirements. Document exactly what your engine expects - dimensions, spacing, file format, naming conventions. Configure your ComfyUI workflow to output exactly that format. The flexibility of ComfyUI means you can match any reasonable specification, but you need to know the specification first.
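
Writing that specification down as data makes it checkable. The sketch below uses hypothetical field names and a hypothetical "mobile" target: it validates a finished sheet against the spec and emits a simple JSON list of frame rectangles for custom engines that load frame metadata instead of slicing by grid. The JSON layout is invented for illustration; adapt it to whatever your engine's loader reads.

```python
import json
from dataclasses import dataclass


@dataclass
class EngineSpec:
    """What one engine target expects. Field names are illustrative, not any
    engine's own import settings."""
    name: str
    frame_w: int
    frame_h: int
    spacing: int = 0
    padding: int = 0
    power_of_two: bool = False


def check_sheet(spec, sheet_w, sheet_h, cols, rows):
    """Return a list of problems; an empty list means the sheet fits the spec."""
    problems = []
    need_w = 2 * spec.padding + cols * spec.frame_w + (cols - 1) * spec.spacing
    need_h = 2 * spec.padding + rows * spec.frame_h + (rows - 1) * spec.spacing
    if sheet_w < need_w or sheet_h < need_h:
        problems.append(f"sheet {sheet_w}x{sheet_h} is smaller than the {need_w}x{need_h} grid")
    if spec.power_of_two:
        for label, size in (("width", sheet_w), ("height", sheet_h)):
            if size & (size - 1):  # non-zero means more than one bit is set
                problems.append(f"{label} {size} is not a power of two")
    return problems


def frame_manifest(spec, cols, names):
    """JSON list of frame rectangles in row-major order."""
    frames = []
    for i, name in enumerate(names):
        row, col = divmod(i, cols)
        frames.append({
            "name": name,
            "x": spec.padding + col * (spec.frame_w + spec.spacing),
            "y": spec.padding + row * (spec.frame_h + spec.spacing),
            "w": spec.frame_w,
            "h": spec.frame_h,
        })
    return json.dumps({"target": spec.name, "frames": frames}, indent=2)
```

Keeping one `EngineSpec` per target is a lightweight way to maintain the multiple export templates the next paragraph describes.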

The workflow modularity helps because you can swap the final grid arrangement stage for different engines while keeping generation and background removal identical. Build the core workflow once, maintain multiple export templates for different engine targets.

Cost and Time Comparison to Traditional Methods

Actual numbers matter for evaluating if this workflow makes sense for your project.

Traditional 2D artist commission for 32-frame character spritesheet costs $200-500 depending on quality and artist rates. Turnaround is 1-3 weeks. Revisions are limited and expensive. You get exactly what you asked for, hopefully, but iteration is slow.

Self-creation in Aseprite or similar if you have pixel art skills takes 8-20 hours depending on complexity and experience level. Free beyond your time, but requires artistic skill. Many game developers lack this skill or prefer spending time on code and design.

AI generation with this ComfyUI workflow requires upfront setup time (4-8 hours first time building and testing the workflow), then approximately 1-2 hours per character including LoRA training, generation, and refinement. Hardware cost is your GPU or rental fees. After initial setup, additional characters are fast. Quality is very good for indie games though not quite matching top-tier hand-drawn work.

The economic case for AI sprite generation is strongest when you need multiple characters or frequent iterations. One character might be cheaper to commission. Ten characters definitely favor AI workflow. Prototyping where you're iterating on character designs heavily favors AI because revision time drops from weeks to hours.

Quality-wise, AI sprites work well for indie games where "pretty good" is sufficient and production velocity matters. AAA mobile games or highly art-focused projects might still benefit from traditional artists for hero assets while using AI for background characters or prototyping.

The calculation changes as you build your workflow library. Initial workflow creation is a significant time investment. The second project using an existing workflow is much faster. By the tenth project, sprite generation is nearly trivial and your time goes to game logic and design.

- First character: Workflow setup + generation time, likely slower than commission but builds reusable asset

- Second character: Just generation time, already faster than commission

- Fifth character: Workflow optimization complete, fraction of commission time

- Future projects: Template reuse makes sprite generation nearly trivial time commitment
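
The break-even point against drawing sprites yourself can be worked out from the estimates above: 8-20 hours per character in Aseprite versus 4-8 hours of one-time setup plus 1-2 hours per character with this workflow. A small sketch that counts only your own hours and ignores GPU cost and any refinement time beyond those estimates:

```python
import math


def break_even(setup_h, ai_per_char_h, manual_per_char_h):
    """Smallest character count n with setup_h + n * ai_per_char_h <= n * manual_per_char_h.

    Returns None if the AI route never saves hours per character.
    """
    saved_per_char = manual_per_char_h - ai_per_char_h
    if saved_per_char <= 0:
        return None
    return max(1, math.ceil(setup_h / saved_per_char))


if __name__ == "__main__":
    # Pessimistic for AI: slowest setup and generation vs. the fastest manual estimate.
    print(break_even(8, 2, 8))   # 2
    # Optimistic for AI: fastest setup and generation vs. the slowest manual estimate.
    print(break_even(4, 1, 20))  # 1
```

Even with the pessimistic figures, the one-time setup is paid back in hours by the second character.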

Frequently Asked Questions

Can the same workflow generate sprites for different art styles within one game?

Yes, by training separate LoRAs for each art style and swapping which LoRA loads during generation. The workflow structure stays identical. This works well for games with different character types (humans versus monsters) that need different aesthetic treatments. The consistency mechanisms work per-LoRA, so each style subset stays internally consistent even if overall game mixes styles.

How do you handle equipment variations or outfit changes for the same character?

Two approaches work. Either include equipment variations in your LoRA training dataset so outfit is part of learned character identity, or train the base character LoRA on nude/basic outfit and add equipment through prompting or layering in your game engine. The first approach is simpler but less flexible. Second approach takes more workflow complexity but lets you mix-and-match equipment procedurally.

What's the minimum VRAM required for sprite generation workflows?

8GB can work for lower resolution sprites (up to 512x512) with optimization. 12GB is comfortable for most sprite work. The workflow isn't as VRAM-intensive as video generation because you're generating single frames rather than sequences. Queued jobs run one after another, so batch processing doesn't keep multiple generations in memory at once (unless you raise the batch size within a single job). Modest hardware suffices for production sprite work.

Can you generate animation frames directly as temporal sequences?

Video generation models can create animation but producing individual clean frames at sprite quality is harder than frame-by-frame generation with this workflow. Some experimental workflows use img2img with AnimateDiff to generate temporal sequences, then extract frames, but quality and control are currently worse than systematic frame-by-frame generation. Technology may improve here, but current best practice is discrete frame generation.

How do you maintain pixel-perfect precision for retro-style games?

Generate at integer multiples of your target resolution, use pixel art specific models or LoRAs, and downscale with nearest-neighbor interpolation. For true pixel art, some post-processing in dedicated pixel art tools may still be necessary to achieve specific pixel placement requirements. AI excels at generating pixel art aesthetic but achieving pixel-perfect precision for very low resolution retro sprites (16x16, 32x32) often needs human cleanup.

Does this workflow work for isometric perspective sprites?

Yes, with appropriate prompting and potentially specialized base models. Isometric requires "isometric perspective, three-quarter view from above" type prompting. Training your LoRA on isometric references is critical because the perspective is less natural for most models. Some models trained specifically on isometric game art exist and work better than general models for this use case. The workflow structure doesn't change, just prompting and model selection.

How do you handle sprites that need to interact with each other?

Generate each character separately then composite in your game engine rather than trying to generate characters interacting in single sprites. AI struggles with maintaining multiple character consistency simultaneously in static images. The game engine handles interaction rendering. Your sprites just need to be individually consistent. For fighting games where characters touch, generate attacking/defending poses separately and let engine collision systems handle the interaction.

Can you batch-generate sprites for dozens of characters efficiently?

Yes, the workflow scales well. The bottleneck is LoRA training per character. If you're generating many similar characters (like RPG enemies of the same type), train one LoRA then use prompt variation to create variants. For truly distinct characters, each needs a LoRA but the training is parallelizable. Generate reference sets for all characters, queue all LoRA trainings overnight, then batch sprite generation becomes systematic processing.

Making Sprite Generation Actually Practical

The workflow seems complex initially because it is complex. Professional sprite creation was always complex; AI just shifts where the complexity lives. Instead of mastering pixel art or animation, you master workflow construction and prompt engineering.

The payoff is production velocity for game development. Once your workflow functions reliably, you can iterate character designs rapidly, generate multiple character variations for playtesting different aesthetics, and create complete sprite sets in hours instead of weeks.

Don't expect perfect output immediately. AI sprite generation gets you roughly 70-80% of the way automatically, with the remaining 20-30% requiring human refinement. For indie development, where perfection takes a back seat to shipping, this is acceptable. You're optimizing for getting playable games built rather than portfolio pieces.

Build your workflow incrementally. Start with simple 4-frame single-direction sprites to prove the concept. Expand to 8-direction walk cycles once basic generation works. Add complex animations only after simpler cases succeed. This staged approach prevents overwhelming complexity while building toward full production capability.

Document your workflow thoroughly. Six months later when you need new sprites, you'll have forgotten specific settings and node configurations. Documented workflows with example outputs let you resume production quickly. Version control your ComfyUI workflow files like code.

The game development landscape shifted when AI made sprite generation accessible to programmers and designers rather than requiring dedicated artists. It's not replacing artists for high-end work, but it's enabling small teams to create games that previously would have been blocked by art production bottlenecks.

Build the workflow, generate your sprites, ship your game. The technology works when properly applied. The complexity is manageable. The results are good enough for real games. That's what matters.
